8 Rate limiting service

This chapter covers:

- Using rate limiting

- Discussing a rate limiting service

- Understanding various rate limiting algorithms

Rate limiting is a common service that we should almost always mention during a system design interview, and it appears in most of the example questions in this book. This chapter addresses two situations: 1) the interviewer asks for more details after we mention rate limiting, and 2) the question itself is to design a rate limiting service.

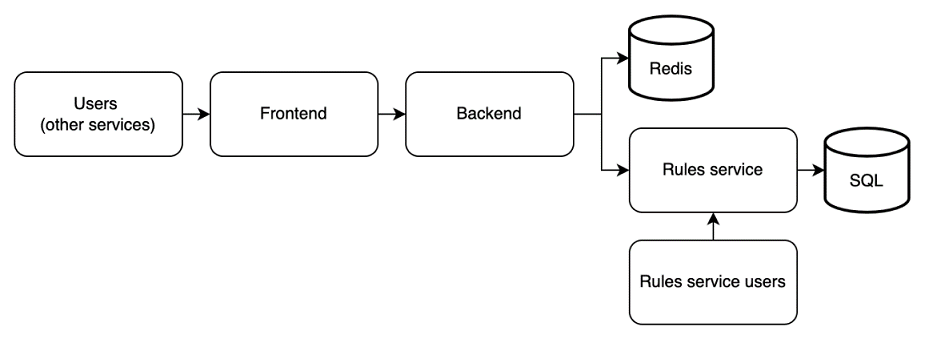

Rate limiting can be implemented as a library or as a separate service called by a frontend, API gateway, or service mesh. In this question, we implement it as a service to gain the advantages discussed in chapter 6. Figure 8.1 illustrates a rate limiter design that we will discuss in this chapter.

Figure 8.1 Initial high-level architecture of the rate limiter. The frontend, backend, and Rules service also log to a shared logging service, which is not shown here. The Redis database is usually a shared Redis service rather than a Redis database that our service provisions for itself. Users of the Rules service may make API requests to it via a browser app. We can store the rules in SQL.

Rate limiting defines the rate at which consumers can make requests to API endpoints. Rate limiting prevents inadvertent or malicious overuse by clients, especially bots. In this chapter, we refer to such clients as “excessive clients”.
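To make the definition concrete, the following is a minimal sketch of one simple way to cap a client's request rate: a fixed-window counter that allows at most `limit` requests per client in each window of `window_seconds`. The class name `FixedWindowLimiter`, its parameters, and the injectable `clock` are assumptions made for illustration, and the state is held in process memory rather than in the Redis database shown in figure 8.1. It is not necessarily the design this chapter arrives at.

```python
import time
from typing import Callable, Dict, Tuple


class FixedWindowLimiter:
    """Hypothetical in-memory limiter: at most `limit` requests per client per window."""

    def __init__(
        self,
        limit: int,
        window_seconds: float,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self.limit = limit
        self.window_seconds = window_seconds
        self.clock = clock  # injectable so the behavior can be tested without sleeping
        # Maps client ID -> (index of the window last seen, requests counted in it).
        self.state: Dict[str, Tuple[int, int]] = {}

    def allow(self, client_id: str) -> bool:
        """Return True if the request is within the limit, False if it should be rejected."""
        window = int(self.clock() // self.window_seconds)
        last_window, count = self.state.get(client_id, (window, 0))
        if last_window != window:
            count = 0  # a new window has started, so the counter resets
        if count >= self.limit:
            self.state[client_id] = (window, count)
            return False
        self.state[client_id] = (window, count + 1)
        return True


if __name__ == "__main__":
    limiter = FixedWindowLimiter(limit=2, window_seconds=60.0)
    for attempt in range(3):
        print(attempt, limiter.allow("client-42"))
```

With a limit of 2 per 60 seconds, a client's third request inside the same window is rejected, while requests from other clients are counted separately. A production service would also need to share this state across hosts.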