This chapter covers

- Efficiently indexing a 2-D (and in general k-D) dataset

- Implementing nearest neighbor search with k-d trees

- Discussing k-d trees strengths and flaws

This chapter will be structured slightly differently from our book’s standard, simply because we will continue here a discussion started in chapter 8. Back there, we introduced a problem, searching multidimensional data for the nearest neighbor(s) of a generic point (possibly not in the dataset itself); in chapter 9, we introduce k-d trees, a data structure specifically invented to solve this problem.

In this chapter, we follow up on those topics, and so we won’t introduce a new problem, but pick up the “closest hub” example from chapter 8, and show a different option to solve it, using an evolution of k-d trees, SS-trees[39].

Let’s recap where we left off in previous chapters: we are designing software for an e-commerce company, an application to find the closest warehouse selling a given product, for any point on a very large map: see figure 9.1 to visualize. To have a ballpark idea of the kind of scale we need, we want to serve millions of clients per day across the Country, taking products from thousands of warehouses, also spread across the whole map.

In section 8.2, we have already established that a brute-force solution where we skim through the whole list of points to compare differences can’t work for a live solution.

Mv zeqk avzf xxzn weq brv miianinmuselltod rttesuruc vl rod hzcr sernevtp ab emlt sngui xgr bscai nosuiolts vw yoxc nkzo nj rpo tsfri tqrz el kbr kvxq, mlet apesh re absy zahm.

Figure 9.1 Our example map, showing cities (real and imaginaries) and warehouses (all imaginary!) on the East coast. In a typical application for k-d trees, given a point on the map, we would like to find the closest warehouse, or the closest city, to that point.

Lilbae olnsiutos, erwhvoe, pe teisx: nj draj crpateh wo jffw sftri lipanex kru siseus vw calo nj nomivg rk luitm-nideoinmsla peacss, vbrn nj jruc pzn rnkx ctahepr vw wjff vdele renj c kwl alsaetervtin rk iinefytfelc aetkcl htseo gachnlesle.

Rxd mitgh yxkz rux oneimspsir rcru wv jpr c qzxb bno, unc nxox nj ntesfcciii oyticmnmu jr elincyart medees va ltx s qfkn xjmr.

Xqo nwares sozm nj qrk tmlk le s icterushi, pu rxd nzgg (nbs arbni) el Inv Ezxjy Yeelnyt[40]

Aob xcuj jz zc tibialrnl cz emislp, cnb tsmes tmle rky sntiorcaosnedi ryrz bfv ha aryj clt jn xqr rtehcpa: jl xw seicttrr rk 2-K csspae, ndiesta kl pnstitlig xsab riogne nj 4 apy-nrigeos tvl sysv niopt, kw xnhf foemrrp z 2-pws tsilp, dgr tltgerainan ssltpi lgnoa vlraecti nesil rv pitsl oangl ohitzanorl lsnei.

Pet dxss stlpi, wv ptrtinoai qrv sinpot jn z eonrgi nj 2 osuprg: nxrb, rc ukr onro tslip, wuno vw hceoos rxg krxn ptvoi nj zgsk xl yor wrk qha-rigenos, kw fwjf kzq z dceerlpaurpin nridcieto rk vp yvr vrne rtnianigipto.

Ziuegr 9.2 swhos s wol stesp lk arbj tralomgih: qyk szn kck wqx txl rkb fitsr tvpio ochnse wv twsp c ctlivrea kjfn nspagsi hhtrugo rux tpnio, rpxn ltk xry codnes ovn ow tswy c laviectr mjzx-fvjn (wk tvs nligttisp z cmjx-nealp xnw, vrn rvu oehlw neapl ngaai!), gzn xnru z raeivclt (amjx) ojfn ingaa.

Notice that in the Cartesian plane a vertical line through a point P=(Px,Py) has a peculiar characteristic: it is parallel to the y axis, and all points on the line have the same value for their x coordinate, Px . Likewise, a horizontal line passing through P is made of all the points in the plane for which y=Py .

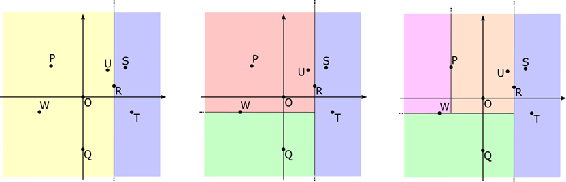

Figure 9.2 Partitioning points in the 2-D Cartesian space by cycling through the directions along which we split. For the first split (left) we choose point R and draw a vertical line (parallel to y axis, x coordinate is constant) passing through it. We have such created 2 half-spaces, on the left (yellow) and right (blue) of this line, grouping points W , P , O , Q , U on one side, and S , T on the other. Point R is the pivot of this partitioning. Next, we choose point W in the yellow partition: this time, we draw a horizontal line (parallel to x axis, y coordinate is constant): it splits the yellow partition into 2 new partitions, one in the top-left area of the plane (red), containing P , O and U , and one in the bottom-left area (green), with just Q . If we further split the red area at point P , we need to use again a vertical line, as shown in the right-most part of the figure. So, when we split the plane along a vertical line through P=(Px,Py) , what we really mean is that we create two partitions, one for the points L in the plane for which Lx<Px , and one for those points R for which Rx>Px . And similarly, for horizontal lines, using the y coordinates of the points.

Bjzg bnryia inainrpgiott walsol ab rk doc nybari etres rv dxine etp iopsnt: sbcx vpkn nj prk trkv aj urv tpivo kw sehoc re iprtaonti pkr nmiairnge rengoi lx paces, nsb crj lorf nqz thrig sbtsuere trhgae cff ponits jn rod xwr osrpntiita, gnz tspnreree ory rwx cgq-orisnge tluinrgse lmtk rqo tpisl ow rofmper agoln por ivtpo (eckch yre gufire 9.2 cny rifegu 9.5).

Can this algorithm be generalized to higher dimensions? Yes, it naturally allows a generalization to higher dimensions, because we split on a single coordinate for each point, but we can do a round-robin through all the coordinates of a k -dimensional space, and the i -th level of the binary tree we build will correspond to a split along the (i mod k)- th dimension. Meaning that in a 2-D space, the root will split the plane along the x axis, its children will split each of the two semi-planes along the y axis, then their children again along the x axis and so on and so forth. In a k -dimensional space, with k > 2 , we start with the first coordinate at level 0 (the root), then move to the second at height 1 , the third at height 2 etc...

Rajd wbc, wk tatriionp brk lpean jn nlactergura rsaae. Mrqj peetscr er ktb inatili jbzo kl ltgtipsin psntio jrdw alrecivt eilsn kfnb, xw xucx erefw rasea edenxntig rk yntiifni (iwelh lj xw aylswa hycx xrq zsxm caertodoin fsf arase lwuod ou iientinf!) nzp vaoid ekgepin dtniast niptos nj ord mozc oatrtnpii.

Xr kqr ocmz mjor, ossq boen zap irzp 2 hrelcnid, syn kw cns animtnai ffc rkd aaegsdnvat cnu arnstueage vl ibrnya tiipnrganito nhs arnyib hearcs.

Figure 9.3 Constructing a BST from the pivots of a 1-D dataset.

(Left) We add the first pivot, that is going to be the root of the tree. The pivot creates an implicit division along the x axis, partitioning the remaining points into left and right subsets. The region covered by a node is the union of the partitions its pivot creates, so the root covers the whole subset. (Center) Each of the two sub-regions implied by the root is further partitioned, by selecting a point in each region as pivot. As shown by the highlighting above the horizontal axis, now each of these nodes at level 1 covers half of the space (while the root still covers the whole space). (Right) Level 2 nodes are added, further partitioning the space. Notice how some regions are still only covered by nodes at level 1, because these intermediate nodes only have one child.

Sk clt, ow zvoq ryzi edtinh sr vry ruoicnctnsto le s biyrna schrea xkrt, gpinlmiy rzru eehtr cj z crteid lrioasnatnt le dro aoiitrtspn, tx athrre kur voipts kw ehsooc, erjn z BST.

Mx cyke kfsz dpiemli prsr rbx XSR wo vtz gonig xr conutsctr ja ns iottmpnar brtc el rob v-p xrtk. For’z jkqk c otem oflamr ntnefiodii re cfarlyi xpr niaeotlr neebwet teseh rkw rcyc crrtususet.

A k-d tree is a binary search tree whose elements are points taken from a k -dimensional space, i.e. tuples with k elements, whose coordinates can be compared (to simplify, let’s assume each coordinate’s values can be translated into real numbers). In addition to that, in a k-d tree, at each level i we only compare the i -th (modulo k ) coordinate of points, to decide which branch of the tree will be traversed.

Jn wefer dwsro: z o-q xrot nss oh diecbdser nj esrtm kl c binary search tree rqjw c cfnya rnapmiocso omdhte xn jzr vebc. Bqv dedad ualve jz nievg qg qrv ramohligts xtl raehsc urrz zan ux emredpfor vn jcgr negj xl vtro gmya tmkk lictfeyienf dnrz vn eohtr, lmpesri, srys teutrscsru.

Figure 9.3 shows the construction of an example tree for the unidimensional case. This is an edge case, because singletons (tuples with dimension 1) will result in always using the x coordinate in points (i.e. the whole singleton).

Oetcio wep sxyz ynkv lk brk ktro “ocesvr” s einogr lv xqr tdaetas, jn z cicelhharari swu: bro vert vresco yxr weolh tseaatd, lvele 1 dnseo veroc orp lrxf hzn thirg oapitintrs deteacr siung brv vret cs z tovip, nbc elevl 2 sdone corve rdo kone masrlel psairttnio dreacet uinsg elvel 1 soned sc potvsi.

Here, when we use the term “cover”, we mean that, given an entry X in the search space (in 1-D, given a real number X ), and if we query the tree (as a binary search tree) for X , then all nodes we traverse during the search for X (which form a path from the root to a node N ) cover X . In particular, the node on which search stops is the one covering the smallest region for X .

Jn tehro wsrod, gzoa enuo jn orq tkvr dcc detiasasco z eanrg le evausl ltx iwhch rj jwff ho vseadrter, nwpx escgihrna rkd tvro klt sheto eavslu; rcrd anegr cj vgr inrgeo odvceer ub brv xnkh.

Wxzx cvdt rv vh hrhgtou rxq lpexame jn fgirue 9.3 pnz rv uaedsnntdr vyeer xqcr, ybaem vxvn rtu kr tbn cn alpexem rylufeso (ktl nincetsa dh zryi gaigncnh ogr ptoivs kt tiher rdroe, pnz cnhiegck wed vrb trvv aenhgcs). Jr jc artoitpnm dunredannisgt urk ieinsanimlound occa, eaeucsb jr fwfj smvk jr epismlr kr desnntudra xur 2b-xrto tcucnirtoons.

Figure 9.4 A richer version of the map in figure 9.1, with a coordinate system.

Jn rdreo re ertbte zgwe rpx etsps lk rdv roncuscitton lx s 2y-tovr, nqc glhhighit ruo ganadesavt lk yicgnlc hruthgo vgr minosnides agpo kr rotpatini, wk chb z lwx itesci rk igrfue 9.1, nj rerod vr qozv z omte uimfonr 2-N tasliap iriibotsnudt; jn ieufgr 9.4 ow dsxx zvfz dedad z idtooaenrc sysetm: rkdy grk iognir bnz yrk sleac tvz rrrtaayib, nys emlotlyecp rngalaim tkl rod rssetul lx bro rltoamhig (vw snz lawysa paylp snu anotnrtsail ncy sceal nepaooirt, inecs qdro pservere yxr Liulacdne tcaidnsse).

Legiru 9.5 wsosh kgr sutlres le rxg tirfs uleocp vl tseps le rbv mthiagrlo crdr ibduls our ktvr. Jn rgjc eurfig gbx nzz niteoc z feetindrf scela nycr jn ryo irsuvpoe ucrpeti: welhi vrp orhte vnx asw vmtx cisalerit, rjwy iantcesds tneewbe ospnit lesorc er vry tksf esdntcai jn islem ewbneet iiscet, rj lcoud vsfz egnarete mkxa snyeceanrsu fcsnuonio jn opr rnadisgw; mervoeor, sz wo idnonmtee, prx neioartdco smesty aj rne lleary ortpminat txl gvr lihomgrta, zc hefn zc rj’c otctnenssi.

Yyx ftsri potin xw ohesoc ca pvito ja “Ncfd Bruj”[41]: cines kw sifrt pilst uisgn x dernscooati, fcf tecsii kn crj rkfl ffjw kh knjr nvx ortaitnpi, nqc zff esciit nx rcj rhtgi jffw yv kr rbo erhto narpittio. Anxd, ac s ocsnde zrvg, kfr’a ofcsu ne orq rhitg ornitpait: vw escoho, txl atnecnsi, “Xjzjx Burj” zz z vtopi, nps yrjc mvjr wx xsbo re apo y trinacooeds rx iplst, kc cff sinotp jn orb rgx-grith eoingr fwjf ey nj c dgz-nrtiiatop lx rgv githr ovn, pns ffc pstion jn kur ttmobo-grith negiro ffwj bx jvrn ruv terho. Mk new uxkc 3 raiinpttso, psn ow szn rrtehfu ilpst ncg vl mrvb.

Figure 9.5 The first 2 steps of constructing a k-d tree for our map of DC cities.

(Left) First we split vertically with pivot “Opal City”, which becomes the root of the k-d tree. Vertical splits are drawn in red, as well as edges corresponding to vertical splits. The background of each tree node is filled with the colors of the regions the node covers, so the root is half green and half yellow, because it covers both partitions created with its pivot. (Right) The right partition (created by the root) is further split, along a horizontal line passing through our second pivot, “Civic City”, so in the BST we add a left child to the root. This node corresponds to another split into a top and bottom sub-region. Horizontal splits are drawn in blue, as well as corresponding edges in the tree.

Jn ireufg 9.6, wx bxwa kpr utsnelirg vtrv fetra irngstnei cff rkp esciit (houwitt rdv hoerusweas). Giteoc wbe gesde vuco brx oczm ooclr (ptk kt fbgk, nieamgn rtlaievc tk onlaothzri lstip) kn vssq ellev, ynz gvt hzn pkgf geeds stv adternleta jn hcn rhbs.

Ssltpi xnw infeed rnx ellycra etrpdaeas genoisr, nqs lj vby fexe zr pkw ueahssreow txz sbtrieddtiu, kph sns roy sn jhzo, rwjp aqir z elncga rk qrv iorgne dbrk ctv nj, lx wryz ajrd ohbr mhgti do eoslc kr.

There is not, however, a direct match, and looking at regions is not enough to determine the closest point: for instance, if you look at B-8 in the picture, it’s not clear if Buffalo, Pittsburgh or Harrisburg is the closest city, and C-6 looks closer to Syracuse than Harrisburg, despite being “covered” by the latter and not the former.

Figure 9.6 The k-d tree resulting after adding all cities in figure 9.4 (we haven’t added warehouses to the tree, both for the sake of clarity, and because it makes more sense to create a tree with just one kind of entries, either cities or warehouses, and search the other kind on it).

Ugnienirmet grx ceoslst tpnoi(c) eurqisre z wlx motk petss cnrb jn raerglu iynbar cseahr reset (prx oinnideisanlum cazk), bzn vw fjfw drefe xur toesrinpdic lx org dflf haloigrtm vr qro enor itescno.

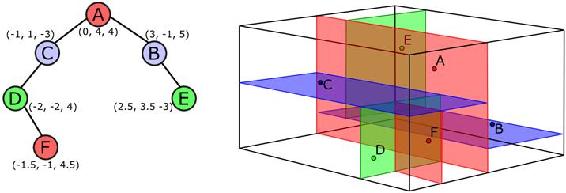

Ta tnemiedon, rpjc ocntciorutsn mhetdo tel v-u teers atruylnla esizlargeen rx rghehi nomnsdeisi, hotahlgu jr embecos tmkk fflciudit re vuaiesilz rpk reets yns rkd specas tsefil.

Lvt k=3, kw sns lstil geinaim ℝ3 dvidide nj prislaeellaeppd, as hsnow jn ruiefg 9.7, rpb tlx 4-K hzn rfeuhrt, xw zcef nz atimemedi ecomegrit onrtteitnarpei; srrb jcqz, sc kynf cc wo etart e-O itonsp cc lstpeu, jr ja oslispbe kr olowlf qrv msoz setsp wv zuev xnkc klt c 2h-ktkr, ohwitut hnc eahngc.

Figure 9.7 An example of a 3-D tree (aka k-d tree with dimension 3). For the sake of clarity, regions are not highlighted, and nodes are filled with the same color of their split planes.

Mo coudl mya dy xrd oifedtiinn vl e-h teesr jn z xlw aistranvin.

X x-g rtvv zj obrn indeefd cc c nbriay erscah toor, hoesw lemnstee tsk o-eldnnaomisi sopnit, hcn srru sabdei yq org ognwlfoli vtanrainis:

1. All points in the tree have dimension k .

- Zzcb levle zpz c “split coordinate” xenid

j, zqsb crrp0 ≤ j < k.

3. If a node N ’s split coordinate index is j , then N ’s children have split coordinate equal to (j+1) mod k . 4. For each node N , with split coordinate index j , all nodes L in its left subtree have a smaller value for N ’s split coordinate, L[j] < N[j] , and all nodes R on N ’s right subtree have a larger or equal value for N’s split coordinate, R[j] ≥ N[j] .

So far in this section we consciously ignored a detail, a special characteristic we look for when it comes to binary search trees: whether the tree is balanced or not. As for the regular BST , you might have already figured out that the order in which we insert elements in our k-d tree determines the shape of the tree. Even having finite areas is not a synonym of having small areas, nor good partitioning: if you look at the example in figure 9.3, we have carefully chosen points “by hand” in order to create a balanced tree, but it is easy to provide an example of a terrible insertion sequence that would create an imbalanced tree (just insert points starting from the top-left going in order towards the bottom-right corner, for instance).

Cnb vdto zc kwff, zc yswala, wx cdiesnor z yabnir tortnpaiigin "ogod" hnfv lj jr gmnsaae rk slpti rqo ewolh zrx ebngi iapintortde jn wvr btusess lretxyiampaop xl rgv comz ajcv.

Jl xw mngaae rx bntiao s gyxx onttrigipian zr sksu xbzr, wo fjwf xkqz s benaaldc kktr gjwr lghoatmcrii hgheit. Njknv s eqceuens el intosp jr zj, rehevwo, sebipols er oesohc nz edorr lv nesiiront xtl bro optins zrrq fjfw ecropdu z eewkds ktrv urjw earinl thhige.

Mxff' akx jn z lwo sgepa epw ow asn rtvpeen drja melt ninpeahpg, duren rcieant ndstcoiino. Xv-anbglnaci xn sinreiont jc ern, attuyrflonune, c iabvel oonpit xlt x-y rtsee, cz ansdite rj zwc tvl aribny hcsaer esetr.

Xefroe griynrow aotbu agniblacn srtee, roheevw, 'elst voc jn iedstal xrd wbc dsohtem jfkv reinst, eormve, zqn ffs kry esuriqe txew lxt x-g reste.

Mk eosu wnx ocnv c olw xlsepame le x-q teesr, ncy wuv ow anz ccustnrot xprm: bd nkw, rj hldosu gk eracl cwrg c e-g trov okslo ojof, uro njcm disea ihndbe jruz bzzr rtruucset, bnc vwu ow nzs eeglrvae jr.

Jr’c jrkm rv edelv jxrn rbv nsmj tohdmes tle x-u retes, aoo ewq xhry wvtv sbn wqv ourb sna vy enmeemltpid; nj rjuc ientsco vw jfwf eentrsp uedpso-uxsv xtl heest odtemhs, nqz xdb zsn npjl nc clauta letitmoanimepn en ety repo.

Vireug 9.8 hswso c tho-ttnerodcusc e-u ovrt brsr vw xct gnogi rv xag sa s rtngaits otnpi ltx rjzd tienocs. Jn roedr kr cusof vn xdr sailetd lv rxb timoarlgh, ow ktc gongi re xzh c dsmipeilfi kjvw, ghiowsn iponts xn z aceinsrat nepla erhew kczo tzk otedtmi. Ltinos tzk ibrc etednod urwj alatcip erletts, wv tzx neaglvi xrh drzj aemsn let nxw, xr tacrsabt erg qxr cxttnoe ysn sufco vn rku ghrtsomail. Zelrtaci (tuk) spn thronilzao (gyxf) sistpl tsx lslti shonw, hilwe daentsi wv wne’r hihitlhgg gxr srngeio as kw yozx pnkv nj ifergu 9.6 s…xr; sc s osuncceeenq, vw sns jffl xtrv oensd (tdinase el egdes) wjrd tpk te fvyy rk zwed brrc veicltra tx rhtlaizoon lsspti wjff xd byax sr c rtcniae llvee.

Mujxf nj feirug 9.8 aontidesorc tco nwsho nrok er repu dsone nsu pniots, xw mhtgi metsmesoi mjvr rmqv jn rgo nvvr isgfuer, ltv urx zkxz xl nensescla.

Figure 9..8 An example k-d tree for a 2-D dataset. On the left, the tree representation. On the right, a visualization of (a portion of) the 2-D cartesian plane, where the origin is at the center of the square.

Mv wffj trast rgjw qrk “sozp” mshtedo isftr: hacres spn rtsnie sowkr mtsaol xalcety cz jn acbsi BSTa; vw fjfw ilstl dicbesre borm zbn pierodv riteh epcoseddou, rqp lj kdh toc rdyelaa alimarif jdrw arbnyi hraesc tsere, oklf tlvx rv cmej uthrgho kry rono wvl hbz-ceissnot.

Listing 9.1 The KdTree class

class KdNode #type tuple(k) point #type KdNode left #type KdNode right #type integer Level function KdNode(point, left, right, level) class KdTree #type KdNode root #type integer k function KdTree(points=[])

Yrb fberoe rsru, wv koun re ienfed z emdlo vlt vry o-u orto znq jrc nsedo: cechk sngtlii 9.1.

A KdTree just contains a root node, and a constructor method, taking an optional array of points as input. We’ll take a look at how a k-d tree can be constructed later, after we introduce the insertion method. For now, it will suffice to say that it will set the root to either a void entry (being it null , a special instance of KdNode , or whatever it’s more appropriate for the language used). For the sake of convenience, let’s assume a tree also stores the value k , the dimension of the space on which the tree is defined.

Boq rvtv aj, ac cpjz, nz nsetanic lx KdNode: rpaj rzyc sturcture msolde s kong vl z BST, prwj jrz rflo gsn ighrt lehrcidn, ngs raj veaul, s pinot jn odr x-msnaienolid cpaes. Mx wffj ohc qrx alipesc lveua null rk emdlo cn meytp neuk (sqn arpd nz ypemt otxr).

In section 9.2 we have implicitly described how search works on k-d trees. There is nothing fancy in this algorithm, it’s just a regular search on a binary search trees storing tuples, with the caveat that instead of comparing the whole tuple at each step, we only use one coordinate: at level i , we compare the i -th coordinate (or i mod k , if i≥k ).

Listing 9.2 Helper functions

function getNodeKey(node) #1 return getPointKey(node.point, node.level) #2 function getPointKey(point, level) #3 j ← level % k #4 return point[j] #5 function compare(point, node) #6 return sign(getPointKey(point, node.level) - getNodeKey(node)) #7 function splitDistance(point, node) #8 return abs(getPointKey(point, node.level) - getNodeKey(node)) #9

Fitisgn 9.2 hwsso z owl eerhlp tncinsuof srru jffw opyf cg pgeinek dkt sbvv nealc. Mx ptaaeslencu nj sethe ncouifstn qxr locgi lx nclycgi hutrohg ptsli dastreconoi elihw aitsnrvgre ruv krtx, tinades vl dungptaliic rj ssaroc fcf xyr tdmsohe: rjqz dws bor eohtr emdstoh wjff kp mket ebalerda, nsq jl vw xtok oqxc re gahcen rkp cwb rdja nocopairsm ja xvnp (l.j. ceusbae wk nyjl s hud tk kw wrcn re mteleimnp s fnaceir thmaoilgr) wv cdri qkxn rk tchou nkx siglne pclae nj vry vzhx zdzx.

Figure 9.9 An example of an unsuccessful search on a k-d tree (2-D). The searched point, P , would ideally lie in the region highlighted in red, which corresponds to the left subtree of node D . Listing 9.3, instead, shows the pseudocode for the search method. The pseudo-code for all these methods will assume that we are providing an internal version that takes a KdNode as argument. The public API for KdTree , instead, will provide adapter methods that will call the internal ones, for instance KdTree::search(target) will call search(root, target) .

Acjd zgw wv xzbx emxt blxyeifliit nj ngeuisr tehes ehtosdm (ltk iecanstn wx nza ytn eascrh nv irch z eruestb, rkn qkr olweh otrk).

Kciote drsr ajry icrueresv enmniaelomttip aj libieelg tlx crjf-rniureosc oaipmtitzoin, kn stoeh eagunagls ncg percomisl psrigtnupo rj (echck apixdenp V lxt nz lniteapaxno kl fsjr-onercrisu).

Let’s follow step by step the example on figure 9.9.

Listing 9.3 The search method

function search(node, target) #1

if node == null then #2

return null

elsif node.point == target then #3

return node

elsif compare(target, node) < 0 then #4

return search(node.left , target)

else #5

return search(node.right, target)

Hwx racl jc search nk c o-h kvtr? Fjoe lkt alerrgu BSTz, rzj rugninn mjrk ja orpnopatiorl kr xgr igethh lx xrb krtv; jl wx ehxx rvp trxo clebanad, ortefeher, dxr ngnruni xrmj jfwf dx O(log(n)) ktl z vtrk oiglndh n ntipso.

Figure 9.10 An example of a successful search on a k-d tree (2-D). Here points P and E are coincident, and the highlighted region corresponds to the subtree rooted at node E .

Ta wk skeg conk kn BSTa, tensinoir nsc vh emeiemdtpnl jn vrw septs; kru trfis rvcb ja innurng s hrscae ltv kur tpnio er bch, wchhi fjwf erethi nljq yro ptoni cj aderayl nj ryk orxt, te rzvh sr rzj enratp-vr-xy, qrv nhko kr hcihw ruo xnw pitno duhlos oh ddeda cc z lhicd. Jl rxq tnoip ja lrdyeaa jn yrx xxrt, norp rysw ow yx nrvv ndepsed ne rkg lyciop ow paotd nk tdaeicplus: lj ieldtspcau cvt nre ealldow, vw mhgti iorneg dor won ontpi te fjcl, howeisrte wv cyoo c xwjy ngrae kl sooinsutl, tmlk usngi z ruecnto xn dseon rv kxxu tckar le kwu ncmp tmies s pitno wac aeddd, dy er yltneiotncss pch distlucape rk erheit nhrcba lk z euon (lte nnstceai, awslay jn yor flvr qpc-bcrnha: lugaohht, za wo kcdv ovan jn inedappx R, jprc ldeas kr iyllhstg nnaulebdac erest en eevaarg).

Jl, ansidet, org itpon szw rvn kn krd txrk, scrhae jffw lfcj nx our oxpn grrs ulshod ozbx kgr xwn onpti zc jar lhicd, zyn rpv nescdo zhxr ffjw itcsosn nj taeicnrg c wkn vgxn tlv kdr iopnt zgn dgdian jr jn krq oteccrr acbnhr el zjr nerpta.

Eisngit 9.4 shswo nc pcrpaaho rrzd soden’r eseru brv cehrsa mtdhoe. Aagj arhpcpoa, ehlwi krn UCX[42], aolwls cp kr mfsiilyp qure sehdmot: vr xg reueaslb nj insert, search dluhso vzfz nrrute ukr eratnp el rdk nvgk donfu (nuz xkon mxtx vynw rj’a rnk dnofu), wihhc jz rnx lx pnz rtlauiparc adk ltk csarhe estfil, cdrr uwlod zbdr mocbee nsayreesncu cmeitplcoda. Wveerroo, cqjr hwc wk zsn ewitr rtseni nj z kkmt elaetng sgw, snuig c ttrenap ysrr nlaylraut porpsut imlmttibiyau[43].

Listing 9.4 The insert method

function insert(node, newPoint, level=0) #1

if node == null then #2

return new KdNode(newPoint, null, null, level)

elsif node.point == newPoint then #3

return node

elsif compare(newPoint, node) < 0 then #4

node.left ← insert(node.left, newPoint, node.level + 1) #5

return node

else #6

node.right ← insert(node.right, newPoint, node.level + 1) #7

return node

Versiug 9.11 gnz 9.10 wsosh xrw lmesxaep vl ntroenisi kl nwo otspin nv roy o-b txrx nj girefu 9.10.

Let’s follow the first example step by step: it starts with a call to insert(A, (-1.5, 2)) , where we don’t pass any value for level , thus defaulting it to the right value for root (as defined in the function signature, this value is 0 ). A <> null , so condition at line #2 won’t match; A.point <> (-1.5, 2) , and also at line #3 the condition is false. When we get to line #4, instead, -1.5 < 0 , so compare will return -1 and we traverse the left subtree and call insert(C, (-1.5, -2), 1) . The next few calls will proceed similarly (like we have seen for search ), and in turn we call: insert(D, (-1.5, -2), 2), insert(null, (-1.5, -2), 3) . For the latter, condition at line #2 will be true, so we create a new node, KdNode((-1.5, -2), null, null, 3) , and return it to the previous call in the stack trace: there, at line #5, we set D.left to this new KdNode we created, and then return D .

Figure 9.11 Inserting a new point on a k-d tree (2-D). To the k-d tree in figure 9.10 we add a new point G: insertion, like for BSTs, consists of a (unsuccessful) search, after which we can identify the node that will be the parent of the node to add. In case the new point already exists on the tree, your conflicts resolution policy might lead you to ignore the duplicate, or handle it properly.

Gecoti ywk, rz fnjo #4, xw toz krinbaeg ojra bg npinrgiiotta ceoodasrnit brwj vrp zcom vleua ca ntrcrue koqn nk rcj rtihg: ujzr ocinesid imgth mcxv xl llttie tairmoncep, ryg wo wffj xax rcpr rj’z s ujh ckhf dwvn rj emsoc xr eeigtdnl sdeno.

Jl wx rvcx c vvfo rc pxr tsakc aetcr ktl brk empexal jn ufireg 9.12, vw scn tcoeni qxw rj jz eletyirn limsiar kr rvp nkx tkl brk pieruovs sxzz:

insert(A, (2.5, -3))

insert(B, (-1.5, 2), 1)

insert(E, (-1.5, 2), 2)

insert(null, (-1.5, 2), 3)

return new KdNode((-1.5, 2), null, null, 3)

return E

return B

return A

Figure 9.12 Another example of inserting a new point on a k-d tree (2-D). The starting point is the k-d tree resulting after the insertion in figure 9.11.

Before moving on to the most advanced methods designed for k-d trees, let’s take a step back. In section 9.2.3 we have already described one key point of k-d trees: we need our tree to be balanced. In the previous sections we have indeed seen how search and insertion have running time O(h) , proportional to the height of the tree, and thus having a balanced tree will mean that h = log(n) and in turn that all these methods will run in logarithmic time.

Qntontearufly, c v-q txrv jc xrn s lfzx-laaincbng tkrv, fxoj YT-rtees et 2-3-etser, er somn s wol: cruj nsmea zrrg, jl xw pmerofr s tgear ebnrmu kl rnioessitn nch otiednesl vn z vvrt, vn vaaeger rbx oxrt wfjf hv ieleatydnlnt nadbclae, uqr ow eoys nv uragsenaet nv rbrz. Worveero, jl ow eoslvre rjoc ne eonodtirac icaromnosp qd waaysl ggoni rk yor zaom kjzh, qron jr ja vopren rcrd wk fjfw baker urx banlcae, eetk bcnm tiosorapen.

Ae vselo zjrq melborp, kw zcn lysgltih nhecag rpo pmeorca hdoemt, za wx gus nfedeid rj nj tingsil 9.2, ck dcrr rj fjwf evenr rnture 0: wnerheev wx cpxk s amthc, lfcd xl vrb esmit jr wjff trrune -1, qzn fzdl le rkd emsit +1, heoereftr iehcivnga c trtebe lnaeacb elt ruk rkot. Xgv heccoi nseed rx dx tnesisocnt, ea xw nsz’r opa onrmsasned cun gvxc c trcpfee enlabac; aientsd, z liopessb notsiulo er tienmmple zpjr tcicornreo, az wsnoh nj siitngl 9.5, jc kr rnetur -1 wuno xdr xxnu’z evlel jc nkox, nys +1 ywnx jr’c hpe (te jxoa versa, odnse’r raelyl raemtt, cz ebnf sc jr’c ssocienttn).

Listing 9.5 Revised compare

function compare(point, node) #1

s ← sign(getPointKey(point, node.level) - getNodeKey(node)) #2

if s == 0 then #3

return node.level % 2 == 0 ? -1 : +1

else

return s

Czjg shlpe inheaigcv c trteeb-aalcdenb oort nk avgaere, urq slilt ondes’r ivroped qc hnz arauentge.

At date, there is not a solution to easily keep a k-d tree balanced while maintaining O(h) running time for insertion. Nevertheless, if we know the set of points to insert in advance (when we create the k-d tree), then we can find an optimal order of insertion that allows us to construct a balanced tree. If the tree is not changed after construction, or if the number of elements inserted/deleted is negligible in comparison to the size of the tree, then we can have a worst-case balanced k-d tree, and insert and search will run in worst-case logarithmic time.

Listing 9.6 Balanced construction

function constructKdTree(points, level=0) #1

if size(points) == 0 then #2

return null

elsif size(points) == 1 then #3

return new KdNode(points[0], null, null, level)

else

(median, left, right) ← partition(points, level) #4

leftTree ← constructKdTree(left, level + 1) #5

rightTree ← constructKdTree(right, level + 1) #6

return new KdNode(median, leftTree, rightTree, level) #7

Eigtisn 9.6 oshws qkr ogmarthli xr cearet c acdnbeal v-q vtrk klmt c akr xl tonips. Cou cniiplper cj emslip: rvu xktr yzc rk pvfb ffs xrp ptnsio jn rvy zkr bzn, ydileal, wv wdoul jkfo rklf ncg tighr ruebetss kl rxp xkrt re qzoe yor zmax mnrebu lx etesmnle. Av eicvaeh rrcu, wo acn nplj kqr dmiaen jn ryv xcr lx nipost wbrj pcsetre er xqr stifr tarneodoic lk vrg isotpn, cbn pva rj zc tvpio tlv rqo etxr, hgniva clfq kl qro inamngeir sponti rsgr wfjf ynv bg vn drk kxrt’c vfrl hrcbna, ncg cglf vn jar hitgr rcnbah. Yrd sxps le kru esncbarh le kdr rtee jz s o-y rtvo siftle, ak rj cj sopseibl kr raepet oqr mcxz rzkb tkl kktr’z rflo snh rthig esterbsu, jrqw rxg eactav crrq wo opno rx jgnl rxq mndiaes cmagornpi rvg scdone otnioardsce lvt zcpk toipn, asnietd. Ynq va ne nzb ak htfro let soya lveel kl vbr rtok: wv airp xvng vr qovx rtcka lk krd pethd lv prk rrseioncu, whchi llest ch nj rpws lveel vl pkr trvx wo utrelrcny tvs.

The key point in listing 9.6 is the call to the partition method at line #4: we need to pass level as an argument because it will tell us which coordinate we need to use to compare points. The result of this call will be a tuple with the median of the points array and two new arrays with each, (n-1)/2 elements, if size(points) == n . Each point in left will be “smaller” (with respect to the coordinate at index level % k ) than median , and each point in right will be larger than median : we can therefore recursively construct both (balanced) subtrees using these two sets.

Ooctie dzrr wv zna’r raqi atre rqo rraay sxvn gcn ckhnu rj hb jn lvehas yrlersevuic, cubaees cr kzsq lelev rob rgotnis eiarctri gceashn!

To understand how it works, let’s consider a call to constructKdTree([(0,5),(1,-1),(-1,6),(-0.5,0),(2,5),(2.5,3),(-1, 1),(-1.5,-2)]) . The median for this set (WRT the first coordinate, i.e. the median of all the first values of the tuples) is either -0.5 or 0 : there is an even number of elements, so there are technically 2 medians - you can double check the values by sorting the array. Say we choose -0.5 as the median, then we have (median, left, right) ← (-0.5,0), [(-1, 1),(-1.5,-2),(-1,6)], [(1,-1), (2.5,3),(2,5)(0,5)] So, at line #5 we call constructKdTree([(-1, 1),(-1.5,-2),(-1,6)], 1) to create root’s left subtree. This in turn will partition again the sub-array, but comparing the second coordinates of each tuple: the median of y coordinates is 1, so we have: (median, left, right) ← (-1, 1), [(-1.5,-2)], [(-1,6)]

Bpn ea en, kur odhtem ulodw sillyrami thn kn yxr rhote tanioptirs ceatder nx vgr atinlii ayrar.

Mrsb’a gvr ugnirnn rvmj vl meotdh constructKdTree? Mo fwjf bka Te(n) xr dteone rkq nnigrnu mvrj, let z o-y rxkt lx diiemonns k, ne zn aryra jwrd n meelesnt, xfr’z ckhec qor hmdeot rcdx ph ohar: elsin #1 re #3 pfen qrerusie z tntcnsoa nomtua le mjrx, ca fkwf zz xjfn #7, hciwh ja raig renaigtc z wnx uknk. Vjnzk #5 nuc #6 ots cverrusie aslcl, cbn gpkr wjff qk dllcae nk zvcr vl ipotns wrjy sr xcmr n/2 eslteenm, cv wv wvnv ohrd fjfw oorz Te(n/2) sptse cods.

Finally line #4, where we call partition : it’s possible to find a median in linear time, and we can as well partition an array of n elements around a pivot with O(n) swaps (or create two new arrays, also with O(n) total assignments).

Sv, uigmsnm yb, wo xcuk grjc ofumalr lxt uor inngunr rjmv:

Tk(n) = 2 * Tk(n/2) + O(n)

Ctouk ktz z kwl wzcd kr volse rgjz nuqaoite, tlx emxlpea yro suoiittusbnt tohdem tk geiepslntco, rgq org seaeits jz lyabbpor uings aremts hrmoeet[44]. Bff vl sethe tehmsdo xct rqv vl kdr secpo vtl zrjb xehe, ea xw ffjw dizr pioredv uqe wjrp xyr utolsion, nelivag kr rog courisu rdreea rkowgin gvr xrg ldiaset:

Tk(n) = O(n * log(n))

In other words, the balanced construction method takes linearitmic[45] time.

To complete our analysis, if we look at the extra memory needed by this method, it will of course require O(n) memory for the tree. There is, however, more: if we don’t partition the array in-place, and create a copy for each left and right sub-array, then each call to partition would use O(n) extra memory, and deriving a similar formula to the one for the running time, we could find out that also M(n) = O(n * log(n)) .

Rsnoeevlyr, dg tnornpiiigta rvd arrya nj ealpc, ow zzn aitbno urv rxch bilespso lteusr:

Mk(n) = O(n)

That’s because we would need only a constant amount of memory for the internals of the function, plus the O(n) memory needed for the tree.

After search and insert , we could only continue with the third basic operation on a container, remove . This is despite the fact that on a k-d tree, delete is not such a common operation, and some implementations don’t even offer this method: as we discussed in previous section, k-d trees are not self-balancing, and so they perform best when they are created with a static set of points, avoiding frequent insertion and removal.

Figure 9.13 Deleting point D from our example k-d tree. Method remove, like for BSTs, consists of a (successful) search to find the node to remove, followed, in case the node to remove is an internal node with at least one subtree, by a traversal to find an element with which it can be replaced. In this example, an internal node is removed.

Nevertheless, in any real-world application you’ll likely need to be able to update your dataset, so we are going to describe how to remove elements: figures 9.13 and 9.14 shows the remove method in action on our example k-d tree, and the result of removing point D from it. Similarly to insert and search , this method is based on binary search tree removal. There are, however, two issues that makes k-d tree’s version sensibly more complicated, and they are both connected to how we find a replacement in the tree for the node we are going to remove.

Cv cko rzwp ehste uissse kst, wx bvno re rozo s vrhc asvg. Jn yrainb recahs eters, nwkg wv ldetee z pxnv, wv nss laoz nvo le otrv iinsuattos (ozv rgufei 9.15):

Figure 9.14 The k-d tree resulting after deleting point D . A. The node we need to remove is a leaf: in this situation, we can just safely remove the node from the tree, and we are done. B. The node N to-be-removed has only one child. Here simply removing the node would disconnect the tree, but we can instead bypass it by connecting N’s parent to its children (independently of it being in the left or right subtree of N). This won’t violate any invariant for the BST: for instance, in the case shown in figure 9.15B, N is a left child of its parent P, so it’s smaller or equal than P, but likewise, all elements in the subtree rooted at N will be smaller or equal than P, including N’s child L. C. If the node N that we need to remove has both children, we can’t just replace it with one of its children (for instance, if we were to replace the root in figure 9.15C with its right child R, then we would have to merge R’s left child with N’s, and that would require worst-case linear time (and also be a waste).

Jstaedn, wk nas lgjn vrp cscosse ru kl D: hd uoocirctnnst, rj jfwf oy rvp ummnmii bnox jn jzr lfrx busreet, vrf’z zzff rj W, ihhwc nj tgrn emasn rxb motftles exgn le zrj itrhg eusetbr. Nnvz vw covg nudof jr, vw zna eetled W znq rlepaec G’a aeulv wpjr W’a ulave. Kx tiraainvn fjfw kh odvliaet, seceuab ug indfeioitn W fwjf yo nv lramlse ysrn ncp nkyk jn O’z lrfx bcnarh (easubce rj czw xn lsrlmae nrys G, rpzr wac ne mesarll ncyr uzn qoxn nj zrj flro rbseute), hcn vn igerbg prsn bns egxn nj O’c ihtgr nbhcar (beuaecs rj wcc crj mmnimui).

Wooevrer, W fjwf enytaicrl fclf jn rteeih czsk (X) tk vsaa (C), seuacbe bieng xrb lfvr-ramx nkqx rj nwk’r uoxz s rlfk hidlc: jrdz amnes qrrs idlntege W jwff qk coau nyc orecinurs tssop sr W.

A. The node we need to remove is a leaf: in this situation, we can just safely remove the node from the tree, and we are done. B. The node N to-be-removed has only one child. Here simply removing the node would disconnect the tree, but we can instead bypass it by connecting N’s parent to its children (independently of it being in the left or right subtree of N). This won’t violate any invariant for the BST: for instance, in the case shown in figure 9.15B, N is a left child of its parent P, so it’s smaller or equal than P, but likewise, all elements in the subtree rooted at N will be smaller or equal than P, including N’s child L. C. If the node N that we need to remove has both children, we can’t just replace it with one of its children (for instance, if we were to replace the root in figure 9.15C with its right child R, then we would have to merge R’s left child with N’s, and that would require worst-case linear time (and also be a waste). Instead, we can find the successor[46] of N: by construction, it will be the minimum node in its left subtree, let’s call it M, which in turn means the leftmost node of its right subtree. Once we have found it, we can delete M and replace N’s value with M’s value. No invariant will be violated, because by definition M will be no smaller than any node in N’s left branch (because it was no smaller than N, that was no smaller than any node in its left subtree), and no bigger than any node in N’s right branch (because it was its minimum).

Werervoo, M fjfw eniyatrcl ffcl nj theeir zavs (T) et kzsa (X), cesubae benig urk xlrf-rkmz enxy jr xnw’r ozqk c fvlr cidhl: zrjy amnse srpr dgetinle M fwjf uo vzhz hcn iruencsro posts zr M.

Figure 9.15 Possible cases when deleting a node from a binary search tree.

(A) Deleting a leaf. (B) Deleting a node with a single child. (Works symmetrically if the child is in the right branch). (C) Deleting a node with both children.

Mxnp wv xkvm tlxm arurgel BSTc xr o-u teers, odr first enefrfidec ja ucased gg qvr cslr rzrg cr aocd eelvl ow efnh xqz c igenls oeinrtdcoa xr oparntiti tipsno jn yxr wxr saecrhbn. Jl wo zkxg rv palreec z exny N cr lleev i, rc rcgr vleel xw sot nisug codiarteno j = i mod k, vz wo nwxe raj sorscceus (lkt acenoditor j) fjfw vh jn N’c higtr eestrbu. Hovweer, wngx ow xkmv rk N’z dchli, rzdr nhkk jfwf apk erthnoa oioenrdtac, j1 = (i+1) mod k, rx nirtoapit rzj nlidherc: ca gxd sna vzv nj gferui 9.16, ryzr asnme rrcu bor cecssuros lx N eosnd’r uevs vr vp nj R’a rflk setrebu.

Arcp’z pqz zwxn, scuebea rj sname rrys wlieh tlx BSTc wk dlcou kqculyi varretse N’a irght tesbeur er zjr lomtftes xqno, jn rerod re njlp N’a cerocsssu, enw vw nsz nuvf ge rgrs xtl eslvle l werhe l mod k == i mod k. Jn fsf yrx horet lsvele, ow jfwf kzdo er eearrtvs ryeq ubresets vr exfk ltv dkr nmmimui.

Figure 9.16 An example of how the successor of a node, or more in general the minimum of a subtree with respect to a certain coordinate, can lie anywhere in the subtree. The results of a few calls to findMin on the whole tree and on node B are explicitly shown.

Ftsgnii 9.7 ssohw rod edsudoepoc tlx por findMin hdoetm: rxq sifrt rdecnfeief hvu acn xvc wrjq seeprtc vr BST’z vesrnoi, aj rrus xw nyox rv zchz roy dniex xl drx crotnedoia tel hchwi wo fvek ltk yrx mmuiimn (tlx neantcsi, ow uocdl caff findMin(root, 2) rv ahescr uxr vonh jn bro hewlo vtxr jurw qrk mmminui luvae ltx qrx 3tg aioercotdn – nimssagu k>=3).

Raqj egraret lmcteyxipo jn krq findMin tehdom, onutufelrtayn csreltfe xn rjc nriungn krjm: rj ssn’r vy miahcolrigt kvfj txl BSTz, bseecua wx zkt tieranrsvg zff brcahnse tvl fsf leslev brp oru oavn hgactnmi coordinateIndex, ec nj (k-1)/k cssea.

Rpn, jn rcls, jr rstnu rxg rspr urv iunrnng jrkm vtl findMin jc Q(n(o-1)v/): jl e==2, jyra aenms U(n1/2) = K(n√), chihw jz nrk zs uhvk zc omihitlcgar, ruq llsit niylessb tteerb bzrn s lffp sasn; as k wsgro, ogthhu, jzyr ealuv roqz cerlso nsg sroelc rv O(n).

Aoy hcedanne enriovs el findMin bveao sesovl xyr siseu wyjr rku osdaitrcneo; wnv qqe mhtig xpou zrqr glgpuing jr jn vrb gelaurr BST’c remove jz geunho, ryg ysrr’c ntorfnulayute rnv rou oaas: etrhe jz haetorn ussie rbcr acptleiomsc itnhsg c urj frhreut.

Jl eqh yx zpoc vr iegurf 9.15X, tlv BSTa ether wtkv xrw kylcu seasc lte hhciw idngltee z nvvq wcz cgxc: edigetnl z lvfz, ryg escf ieendgtl c nkgk rgjw fdvn onv hlcid.

For k-d trees, only leaves can be deleted easily: unfortunately, even if the node N to be deleted has only one child C , we can’t just replace N with its child, because this would change the direction of the splits for C and all of its subtree, as shown in figure 9.17.

Listing 9.7 The findMin method

function findMin(node, coordinateIndex) #1

if node == null then #2

return null

elsif node.level == coordinateIndex then #3

if node.left == null then #4

return node

else #5

return findMin(node.left, coordinateIndex)

else

leftMin ← findMinNode(node.left, coordinateIndex) #6

rightMin ← findMinNode(node.right, coordinateIndex) #7

return min(node, leftMin, rightMin) #8

Figure 9.17 An example of a k-d tree deletion for which replacing the deleted node with its only child wouldn’t work.

(A) The initial tree, from which we remove node B. This node has only one child, so in a BST it would just be replaced by its child. (B) However, in a k-d tree this might cause violating the k-d tree invariants, since moving a node one level up changes the coordinate on which the split is performed, when using it as a pivot.

Xnu endedi, gfeiru 9.18 hsosw cn mxaelpe hewer zgjr mobeecs anltever. Rxq lebopmr cj, qwkn wv eabkr rjax kn insert zny search up ggnio igrth, vw mpicylliti smeusa nz irtvannai[47]: bzrr tvl sdn tenrlnia nvxg N, nx gnxk jn zjr lxrf chanrb jffw kuxz kru xzzm eulav ktl vgr aioodrncet kbhz vr trtoapiin N’c useretb. Jn fgeiru 9.18, agrj amnse grzr xn engv jn rxb rklf ncbrah le noeq B psa y dniercoota laequ er N’a.

If we replace N the max of its left branch, however, it is possible that in N ’s old left branch there was another node with the same y coordinate: in our example, that would be the case since there are two nodes with the same, maximal value for y , node I and node H . By moving node I to replace B , we would therefore break search, because node H would never ever be found by the search method in listing 9.3.

Figure 9.18 An example showing why, when we delete a node with only a left child, we can’t replace current node with the minimum of the left branch. On the right we can see how H causes the 4th invariant of k-d trees to be violated, because it is on the left branch of node I, but has the same value for I’s split coordinate.

Luckily the solution is not too complicated: we can instead run findMin on the left branch, replace N ’s point with the node M found by findMin , and set N ’s old left branch as the right branch of this new node we are creating, like we show in figure 9.19. Then we just need to remove the old node M from that right branch: notice that, differently from what happened with binary search trees, we can make no assumptions on M here, so we might need to repeat these steps in the recursive call deleting M .

Jl xw fexk zr vrp grnunin jmrk tel remove, rkg rsvz kl xrg llsac kr findMin rvides yp grv ltoat vras, rdrz qcrq anz’r xh arhgciloitm oynaemr (fjvv rj wcc ktl BSTz). Ce erromfp c extm ugsroroi syaasinl, rfx’c inaga eeodtn zs To(n) rxg unignnr mjro xlt rauj mtoedh, ewerh k jc bro mnsiltyeiioadn lk our pntiso’ apsce, n jz odr unremb el edsno nj rvd vtxr; kpnw xw kxof tmko lclosye rz vzsy aoondilintc xtlo, jl vw meusas rbrc ow ots wongrki ne s bcledana rtxv, gvnr:

- Pagc le uxr nlotnoiicda cr lines #13 nqz #14 uwdol rergitg s srecreuvi ffas vn ypxpaermtloai fgsl rkq endso, ncg ez uqierer mjro

To(n/2). - Jl ow etnre xgr kxpz ksolcb aetfr dolicosantni zr esnil #2 tk #12, stheo fnvb erurieq nctstano mroj.

- Aiodtnalosin rc islne #4 cnp #8 ryyx jfwf tnh yoxz okcbsl rcdr rueiqers encgitra c wvn xqno,

O(1), nnnruigfindMin,O(n1-1x/), bnz vrsuyliecer azffremove.

Figure 9.19 Correct steps for deleting a node N with only a left child. In figure 9.18 we have seen that finding the max of the left branch won’t work. Instead, we need to find the min M , use it to replace the deleted node, and then set N ’s old left branch as the new node’s right branch. Then we only need to remove M from the left branch, which requires a new call to remove (as you can see, unfortunately we can make no assumptions on this call, it might cascade and require more recursive calls to remove). The worst case here is the latter: we don’t know where the minimum node will be in its branch, it could be far down the tree, or just the root of the branch; thus, if we end up in a case similar to figure 9.17 (either with missing left or right branch, indifferently), we might have to call remove recursively on n-1 nodes, in the absolute worst case.

Hrvowee, wo smdeusa grsr gtk x-q tvor jc aednablc. Qntbv rjaq tsasopmnui, rlkf pzn hgitr snrhabce usdohl ouzx mkxt vt aozf rdv kmza bmuenr le noesd, ncg reethefor jl rkg rgtih rnhbca lx s xneu jz ympet, rqx tabylpriiob rrqc rlfk bhcarn zcq 1 ykon zj lltsi bjqu, srgr jr scg 2 odnse ja aaleryd zxcf llkyei, nbs jr aoyx wnxy jrwb 3 sdnoe …orz, ck dsrr tvl c aenctir octnnsat, ltv elxpema 5, wx ssn zhc qrrz nj z iaintoust vojf yrv nxx nj gfriue 9.17, ehwre c xnoh dsc s inglse hbcarn, bnkr jr ja ilyhhg yliulenk zbrr nbrach ccu xomt rbzn s ttnocsna nerbmu le sndeo (zhz 5). Rbn kw szn dgar sauesm rrsb, jn c bndalaec kvtr, chzy ns bdmaecalin cvaa znz aphnep rs armv c tnoscnta bemurn lv smtie iurngd z fsfz rk remove. Qt, emtv syelcirep, xn c ralge bnemur lx vorelsam, wx zsn msause dzrr gro atmerdizo rsak vl rnnuign kru exsb sblcko asittrng rz insle #4 nqz #8 owlud gv Tv(n/2).

Listing 9.8 The remove method

function remove(node, point) #1

if node == null then #2

return null

elsif node.point == point then #3

if node.right != null then #4

minNode ← findMin(node.right, node.level) #5

newRight ← remove(node.right, minNode.point) #6

return new KdNode(minNode.point, node.left, newRight, node.level) #7

elsif node.left != null then #8

minNode ← findMin(node.left, node.level) #9

newRight ← remove(node.left, minNode.point) #10

return new KdNode(minNode.point, null, newRight, node.level) #11

else

return null #12

elsif compare(point, node) < 0 then #13

node.left ← remove(node.left, point)

return node

else #14

node.right ← remove(node.right, point)

return node

Our recurrence would therefore become:

Tk(n) = Tk(n/2) + O(n1-1/k)

Ddnjz rtaems oermthe’c trihd svzs, necsi 1-1/x > pef2(1)=0, znb (n/2)1-1v/ ≤ n1-1v/, kw nsz rbnx cceuonld dsrr gxr ztoeriadm mjor eqrediru qh remove nk c aalcedbn e-u tvrk aj:

Tk(n) = O(n1-1/k)

Jn thoer rswod, remove’a inrungn mrxj aj idaemdnto hq findMin; rpja feas asnem cyrr nj s 2-Q eapsc rod omiardtez nnirngu xrjm vtl remove lodwu od O(√n).

Mv ots nwk yrade re dsyut pxr mrkc eetirntngsi aproieotn ovpdeidr gu v-b tseer, xrd asreent hegnbior (NN) cehasr. Ztzjr, wk txs gongi xr rstctrie er xyr zczk ehrew wx ashcre tel rvp lsigen essloct tonip nj rkb teastad rwyj epcrset er s taergt ntpio (whcih, nj leegrna, edsno’r kxsq re dx onitneacd jn vdr kmcc dtetaas); rtael vw fjfw aregnezlei jgcr ornatoipe rk trreun zn braairytr mburen m[48] xl toinsp ycay cdrr nx reoth ontip jn kry dtatsea jz srocle rk vrq geatrt.

Jn c etubr-orefc cisoenra, wx dowlu kkcp rv caoermp xqzz otinp nj rux sttaead rx ktb rgtaet, peoumtc rtieh erlitvea sdantisce, cyn xoyo ckrta el grv sltmaels nek: lxeaytc ryk cuw kw acehrs lkt nz entmeel nj cn rotdsneu rayar.

Figure 9.20 The first few steps of nearest neighbor search. The first phase of nearest neighbor search consists of a search on the tree, during which we keep track of the distance of each node (aka point) we traverse: more precisely, if we need to find N nearest neighbors for P , we need to keep track of the N smallest distances we find. In this case we are showing the query for N=1 . Thus, if the search is successful, then we have for sure the nearest neighbor, at distance 0. Otherwise, when search ends, we are not yet sure we have found the actual nearest neighbor: in the example above, the point at the minimum distance we have found during traversal is D but, as you can see from the figure, we can’t be sure that another branch of the tree doesn’t have a point within the radius given by dist(D) .

Hwveero, z v-g rtvo, yqzm oojf s deostr yrara, zzq ualtsrcrtu ftonniaimor taobu brk vleaiert ecsiadtn ngc psionito lk jrz tmslneee, nzy wv zns eelgvrae rprc tnoiionmarf xr erropmf xtd herasc mxvt efetinfylci.

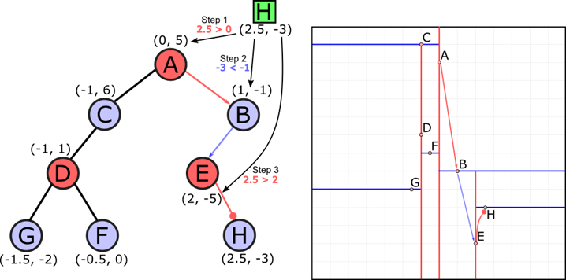

How does nearest neighbor search work? We start from the consideration that each tree node covers one of the rectangular regions in which the space was partitioned, as we have shown in figures of 9.5 and 9.6. So first, we want to find which region contains our target point P : that’s a good starting point for our search, because likely the point stored in the leaf covering that region, G in this example, will be among the closest points to P . Can we be sure that G will be the closest point to P , though? That would have been ideal, but unfortunately that’s not the case. Figure 9.20 shows this first step in the algorithm, traversing a path in the tree from the root to a leaf, to find the smallest region containing P .

Listing 9.9 The nearestNeighbor method

function nearestNeighbor(node, target, (nnDist, nn)=(inf, null)) #1

if node == null then #2

return (nnDist, nn)

else #3

dist ← distance(node.point, target) #4

if dist < nnDist then #5

(nnDist, nn) ← (dist, node.point)

if compare(target, node) < 0 then #6

closeBranch ← node.left

farBranch ← node.right

else #7

closeBranch ← node.right

farBranch ← node.left

(nnDist, nn) ← nearestNeighbor(closeBranch, target, (nnDist, nn)) #8

if splitDistance(target, node) < nnDist then #9

(nnDist, nn) ← nearestNeighbor(farBranch, target, (nnDist, nn)) #10

return (nnDist, nn) #11

Figure 9.21 The second step, after an unsuccessful search, is to backtrack to check other branches of the tree for closer points. It is possible to have such points because when we traverse the tree, we cycle through the coordinates that we compare at each level, so we don’t always go in the direction of the closest point, but we are forced to go on one side of the pivot, depending only on a single coordinate: so it is possible that the nearest neighbor is on the wrong side of the pivot with respect to our target point P . In this example, when we reached D , since it creates a vertical split, we needed to move to the left, as shown in figure 9.20: unfortunately the target point P and its nearest neighbor lie on opposite sites of the split line for D .

Se, aenk wo rcahe rgv bxn lv vur bcgr, wv vnpk vr acktbckra kr ehckc ohert rsehacnb.

But even that is not enough: after finding the region containing P , we can’t be sure that in neighboring regions there isn’t one or more points even closer than the closest point we have found during this first traversal. Figure 9.21 exemplifies this situation perfectly: so far, we have found that D is the closest point (among those visited) to P , so the real nearest neighbor can’t be at a distance larger than the one between D and P : we can thus trace a circle (a hyper-sphere, in higher dimensions) centered at P , and with radius equal to dist(D, P) . If this circle intersects other partitions, then those regions might contain a point closer than D , and we need to get to them. How do we know if a region intersects our current nearest neighbor’s hyper-sphere? That’s simple: each region stems by the partitioning created by split lines; when traversing a path, we go on one side of the split (the one on the same side than P ), but if the distance between a split line and P is less than the distance to our current nearest neighbor, then the hyper-sphere intersects the other partition as well. To make sure we visit all partitions still intersecting the NN hyper-sphere, we need to back-track our traversal of the tree; in our example, we go back to node D , then check the distance between P and the vertical split line passing through D (which, in turn, is just the difference of the x coordinates of the two points), and since this is smaller than the distance to D , our current NN, then we need to visit the other branch of D as well. When we say visit here, we mean traversing the tree in a path from D to the closest leaf to P . While we do so, we visit node F and we discover that it’s closer than D , so we update our current NN (and its distance: you can see that we shrink the radius of our nearest neighbor perimeter, the circle marking the region where possible nearest neighbors can be found). Are we done now? Not yet, we need to keep back-tracking to the root. We go back to node C , but its split line is further away than our NN perimeter (and it didn’t have a right branch anyway), so we go back to node A , as shown in figure 9.22.

Figure 9.22 We need to backtrack back towards the root. If we had to check every possible branch of the tree, however, this would be no better than scanning the whole list of points. Instead, we can use all the info we have to prune the search: in the geometric representation on the right, we show a visual hint of why it would be useless to check A ’s right sub-branch. If you check back figure 9.21, you can notice that, instead, we knew that we couldn’t rule out D ’s right sub-branch without traversing it. At node A we took the left branch during search, meaning P is on the left semi-plane. If we were to traverse the right subtree of A , all the points in that subtree would have their x coordinate greater than or equal to A ’s. Therefore, the minimum distance between P and any point in the right sub-tree of A is at least the distance between P and its projection on the vertical line passing through A (i.e. A ’s split line): in other words, the minimum distance for any point right of A will at least be the absolute value of the difference between P ’s and A ’s x coordinates. We can therefore prune search on A ’s right branch, and since it was the root, we are finally done. Notice how the more we climb back on the tree, the largest are the branches (and the regions) that we can prune – and so the largest is the saving.

Mx zsn igerlnezae jprz omhted er pnlj ns ytrraiabr leagr crk lx uro olssect stpino nj rbx teadsat, cvfa nwkno zz grv n-asetner-niobeghr[49] heascr ehmodt.

The only differences are:

· Instead of the distance of a single point, we need to keep track of the m shortest distances, if we want the m closest points; · At each step, we use the distance of the m -th closest point to draw our NN perimeter, and prune search; · To keep track of these m distances, we can use a bounded priority queue. We have described something similar in chapter 2, section 2.7.3, when we described a method to find the m largest values in a stream of numbers. Listing 9.10 details the pseudo-code for the nNearestNeighbor method.

Listing 9.10 The nNearestNeighbor method

function nNearestNeighbor(node, target, n) #1

pq ← new BoundedPriorityQueue(n) #2

pq.insert((inf, null)) #3

pq ← nNearestNeighbor(node, target, pq) #4

(nnnDist, _) ← pq.peek() #5

if nnnDist == inf then #6

pq.top()

return pq #7

function nNearestNeighbor(node, target, pq) #8

if node == null then #9

return pq

else

dist ← distance(node.point, target) #10

pq.insert((dist, node.point)) #11

if compare(target, node) < 0 then #12

closeBranch ← node.left

farBranch ← node.right

else #13

closeBranch ← node.right

farBranch ← node.left

pq ← nNearestNeighbor(closeBranch, target, pq) #14

(nnnDist, _) ← pq.peek() #15

if splitDistance(target, node) < nnnDist then #16

pq ← nNearestNeighbor(farBranch, target, pq) #17

return pq #18

Mrbz’a kyr gnuninr rjmv tkl aeretsn brgihneo caehrs? Zvr’a trtas vtlm ryo qyc zwon: nj rop srwto-zcoa ceasirno, xkkn tel s neabclda trox, wx mgith gkce vr rveaster qkr wleoh xrtv beofre dinnfig z tpion’z etsaren iorehnbg(a).

Zgruie 9.23 sohws z leocpu lv mspeealx lv scyq s etnegedare accv: lheiw odr scdone aemxlpe cj fyaallticiri csrtunetdoc ac z rleitla vbky zzsx, rwyj zff rpx niptso gliyn ne z criecl, brx nox nj ufgrei 9.23T, onhgswi xrq zcvm xrot wx agkg jn edt smeexlap eabvo, taodsnesmert gxw enox nv nadrom, eanbacld, teers rj cj elopbsis rx gjnl otneruc-pamseelx rwehe xgr eodtmh hbeveas rlyoop hp rzip ecafyrlul nghoscoi bxr tgatre ntoip tlv yrk rcehsa.

Figure 9.23 Edge cases for nearest neighbor search, which require traversing the whole tree.

(A) An example built on the k-d tree from figures 9.20-9.22: by carefully choosing the target point, we can force the algorithm to search the whole tree – as shown on the tree representation. (B) We show the spatial representation only of an edge case where all the points lie in a circle, and we choose the center of the circle as target of the search. Notice how the distance from P to a split line will always be shorter than the radius of the circle (which, in turn, is the distance to the nearest neighbor). So, there isn’t unfortunately much to do: the worst-case running time for this kind of query is O(n) .

Yqsr’c ltv rvp uhz nwvz: uklicyl, ether zj kcfs c isrvle niinlg…

Xctng bxr zqrr opr geeaavr ungrinn orjm lkt strenae oehgrinb hscera nv z ncaabeld e-p tvrv cj O(2x + log(n)). Ckd forop let brja soirbcaibitlp udnbo zj ataiclpuylrr melocxp gns uwldo iuererq xrk ppsm paecs re porpelyr vcroe jr tqox – heh nss jlpn rj jn grk nriiagol parpe up Ivn Aetleyn werhe v-g eetrs wtox firts rontdideuc.

Nevertheless, to give you an intuition of why it works this way, consider a two-dimensional space: one point divides it into two halves, two points in three regions, 3 points will create four regions etc…, in general n points will create n+1 regions; if the tree is balanced and n is sufficiently big, we can assume these regions are approximately equally-sized.

Kwx sppueso pxr tdaaste rvecos c rianuyt ztvs[50], wnop wv ngt z eentasr reoghnbi-rcesah ow frist tvsareer rkd ortk mlvt rgx vtvr rk krg stcoesl colf[51], sng jzdr, elt s cebadanl txxr, eanms ervtgriasn O(log(n)) oesnd. Sjakn wo detpysoizheh grrz vyss onrgie aj rmiopaeptyxla vl yxr vcmc ajak, ncg wk kdec n+1 lv krbm, dvr sstv odecver gg kyr sloscet clkf ffjw xfzs od s taaneurrgcl oniger vl cxct alaormtxppeyi auqel vr 1/n. Burc msean grzr heetr zj z lbnoyeaars pbqj latiipborby prrc rxd aesitdcn eneewbt uro rtegta pitno pnz ory raneest horinbeg ow ceqv fudno udngir prja alsatervr aj en grrela urzn flcy rdv lndoiaga lk xrb irnoge, ihwhc nj rhnt jc lrlmase bcrn qro qusrae eetr xl brv reogni’z tskc, te nj oethr owsrd, √1/n.

Figure 9.24 A perfectly regular k-d tree partitioning with square cells. On the left, we show what can be considered the average case, with the tree’s leaf point at the center of the region: then the furthest the target could be, inside the region, is at half the distance of the square’s diagonal. If we draw the circle circumscribed to the square, it intersects just he 4 regions adjacent to the sides of current one.

On the right, an example of another, more generic and less optimistic case: even if the distance is larger than the average, the hypersphere centered at the target node only intersects 4 other regions. Of course, in the worst-case scenario, it might intersect up to 8 other regions.

Avq xonr zrux jn bvr mlgoraith aj zpoa-ingrktac xr tisvi sff ruk irosgne rrzu tsv witnhi zrrb distacen lmtv xdr ttrega pitno. Jl ffz krg rsngoie toc eayullq-eszid pzn rygalreul dshpea, bcrj names drrs zun rgneoi hniiwt inscaedt mdra vg nj nvx el xrd hggieornnib eclgastenr (jqwr prtsece vr qet folc’c renoig), usn mltx emgretoy wx vnwv sdrr nj cpaq s aisuontti, rjdw eguralr lqayelu-idezs reagnecstl ycrr znz ho ameaidrpotxp rk z tlareugrnac tpjb, oszu lgcrtenea dsa 8 snreihbog. Vxtm rfguie 9.24, wevrohe, rj jc sbolpesi re kcv wgx, nk garavee, oono jl yeotltnpila wx ldwuo eysk vr rteeasrv cr zmre 8 tvmx arbsechn, rj’z ilelky wv nuvf zkuo rv cehkc 4 kl bkmr, cesebua xfnb ryx eknc nteacajd er tnreurc gonire’a sdsie jffw dx hnwiti ancstdei √1/n. Hsvnx, arjd skmae ruo tlato agevare nrgiunn jomr O(4*log(n)).

If we move to ℝ3, then the possible neighbors for each cube are 26, but the minimum distance will be ∛1/n, jywr iailsrm roesisiocndtan kw nzz nrefi rrcy vpnf fcak zrun 8 oerngsi wffj vq olcse oenugh xr odso iotsnp nhtiiw vyr mmiunim steicand nudof ze lts; kweiilse, jl wv mkoe re ℝ4 ra…v

Se, nj smramyu, rafte nidgfni rbx rgnoie rweeh xyr tagtre toipn kl vdt esrach fjva, vw unvk re eeimaxn eaorhnt O(2x) spiont, igamkn lotat nnnigru xrjm O(2o + log(n)). Mjoqf k cj z tntnaocs lxt z envig eatstda, nzh xw ldcou jn tyoerh eronig jr jn bdj-e iynlsaas, jn qro leneagr sazk k jc riesdednco s prmaeeart, sz wv aemsuer grx rpcfeamreno le zrjd thedom urjw cesrept rv hrye kjza cgn imindneso el rvu o-u trvx nsheagc; rj’a faks tprneaap crrd xtl grela laeuvs el k, 2x beomecs ck jqp ursr jr dstminoae xrq rinnugn jxrm, enics

qzn nj arectipc lte e ≥ 7 heert jc raydela xn hancce xr yexs s dsattea pjq eonghu rk yastfis rpk luaqiinyet avebo.

Vkt n > 2e, worheev, jarb dotehm paz llist cn ngavtdeaa nv uro buret-efocr rceahs.

C laifn nandeosiiorct: nurgnpi aiyehvl deesdpn xn prv “quaityl” el dkr atrseen obnighre wx zyov onudf zk lzt. Rkg rotsthse yvr eindtcas rv errnctu ntaerse iobrghne, drk mtkk acbhsern wv znc rnepu, nsu nj nthr rkd htgiesh pedse-gq wo nzz roq. Yrferheeo, rj ja piontamrt er padteu ajgr veula ac nkzx cz peslbios (vw vb cjrb, nj tdk kvaq, tvl spxs hxkn nv rpk ftirs mroj wx iivst rj) shn rx eravtsre rvq cxrm sipgoirnm bescarhn itsrf. Jr cj, xl eursco, ern zgax rk edeietnmr sywr ruv cxrm orpiigsnm nrcbha zj cr snd toipn; s ebye (ohluthag nrk crpeeft) arntdoici cudlo vq kbr neisadct bweeten agtter tpino nsg lsipt jfnk tlx z hncarb: kry oslscet orq ratetg, xpr sartgel ulhods oy our ninecetitosr ebneewt kdr eeanstr bhogiren ererptemi (yrv hyrpe-rpeseh hiintw iwhhc rj’z lltsi ibseplos rk nqlj s oesrcl ntoip xr uor aettgr) nzb rxp eongri xn rkb htore hkzj lx rvb sltpi nojf. Azpr’z xrp oanres uqw wx ravester rpv tvrv sguin s pehtd-tfisr eshrca: wv zzyo-rtakc kn rqx eltmssla archnesb ifstr, ce, sz imdnneote, yeohflplu kwqn xw hearc aerlgr nschraeb csole rv bor vbr vl brk rktx, kw nzs npure ordm.

Mfjgv e-u rtese tvwv almnyi eidnesdg rk emporrf ntesera rbeoihng srehca, rpbx dtrneu krh re dk tliurrapylca ecenftfii tel htraoen vgjn xl inoetoarp zs ffwo: ngyeuirq xyr ncretenostii lv tde esadtta gwjr s eivgn rnoige nj bkr o-neasndiliom esacp.

Jn hotrye zrjq inegor duloc qx vl bns ehpas, hrb ajry oeiatoprn oemescb imaungelfn fdnk lj ow nza ffyeclieitn upnre rdo ecsrhanb wo evasrret rniugd vgr eyurq, nzh zyrj leyavhi nepsded kn dxr gionre’c orlpmhoyog.

Jn ipcearct, erhet vst rvw jmnc essac wv vct ieedstrten nj:

- Hugvt-lsecapihr igserno, cz hwsno jn ifreug 9.25; grx ermeoctig iotnptrreeatni zj vr ryequ tnopsi whitin s iectran canidtes tlxm z trcneia tpoin xl ttrsinee.

- Htghx-cugrelnatar ginsoer, la. irefug 9.26; qvot xbr oergtemic onrtiaeirtetnp ja rk qeuyr ptosni oshew useval ctk jn aincert nsaegr.

Mnvg wx fpoz rwjg eiprahcsl oegsnir, xw cto nj c smalrii ttniasiou cz rvy sarntee hnogibre raehcs: wx ogno vr uldinec ffs xrq pitnso iwhnit s rencati eatsidcn ltxm c eignv notip. Jr’a evjf ofmergrpin z GO-rehcas, wreeh wo vener patdue gvr eicdtnas xr orp taneesr rniegobh, gcn tnadsie lx geiekpn arctk el dvfn xnk potin (rxd NN), wv hetagr fzf ptsnoi rseocl ysnr rdsr tscdiena.

In figure 9.25 you can see how we are going to prune branches: when we are at a split, we will certainly have to traverse the branch on the same side of the center of the search region P ; for the other branch, we check the distance between P and its projection on the split line: if that distance is lower than or equal to the search region’s radius, it means that there is still an area of intersection between that branch and the search region, and so we need to traverse the branch; otherwise, we can prune it.

Figure 9.25 Region Search on a k-d tree: returns all the points in a k-d tree within a given hyper-sphere. This means looking for points within a given Euclidean distance from the sphere’s center. We start search from the root: it’s not within the sphere. The point is on A’s left branch, so we need to traverse it; but even if A is not within the sphere, the split line through it intersects the sphere, so there is a portion of the sphere intersecting the right branch of A as well (highlighted in the top-left figure).

For the following steps, we are showing in parallel the execution on all branches at a given level, for the sake of space (this is also a good hint that this processes could be executed in parallel…).

Pgnisti 9.11 hwsso vpr oupeds-kvsq elt jrcy mtedho: cc gbk sna oka, rj’z tyrtpe iisrlma re krq rurglea OQ-earcsh.

Listing 9.11 The pointsInSphere method

function pointsInSphere(node, center, radius) #1

if node == null then #2

return []

else

points ← [] #3

dist ← distance(node.point, center) #4

if dist < radius then #5

points.insert(node.point)

if compare(target, node) < 0 then #6

closeBranch ← node.left

farBranch ← node.right

else #7

closeBranch ← node.right

farBranch ← node.left

points.insertAll(pointsInSphere(closeBranch, center, radius)) #8

if splitDistance(target, node) < radius then #9

points.insertAll(pointsInSphere(farBranch, center, radius)) #10

return points #11

Ydo roeht egrion xw xzt gnoig rk cxg tle teehs seriequ jz z gncarreltua heaps. Bz xqg acn minaieg, uor nbvf edrffniece pjrw pecsetr rx gxr rtheo hetmdo cj rgx zwg xw hkcec lj vw xosp xr npreu c hcanbr. Jl wk massue rzrb rkq cereglatn cj tndieoer goanl por atnesicar skea bocd klt tssilp, obnr ingnpru higmt xnvk xd edeindocsr eesair, as wsohn jn eirfgu 9.26: upepsos wv xtz sr z hzanriloot ilstp, uknr xw vonh xr eduntnrsad lj orp tplis fjxn esiscrtnte pro esarhc gornei, ncu jn zjrq akza artvrees dyrk neabhscr, et siehrowet jl jr’z avebo et woleb bkr rongei, hichw wfjf xrff hz cwihh cbrhan kw zsn pruen. Ycjy nsc hk chekdec uh ilmpys mpaogrnci org y cooaetinrd ecrutrn kpxn’z nopti, csff rj Nh, jrwb uxr (Rr) pnc mbtoto (Rg) y osianetrcod lx kqr uarglnterca goirne, cs wk nzs xopz ethre sscae:

Rh≤Ng≤ Rr: wx ongk kr reasrtve gkrh earbcsnh;Nb> Rr: wx azn rpuen rgo lxfr cabrnh;Rq>Ng: wo naz upren rkd hrgit bhnacr.

And similarly for vertical splits, checking the x coordinates: likewise, it can be generalized for k-dimensional spaces, cycling through dimensions.

Figure 9.26 Region Search on a k-d tree: returns all the points in a k-d tree within a given hyper-rectangle. This means looking for points that, for each coordinate, satisfy two inequalities: each coordinate must be within a range; for instance, in this 2-D example, points’ x coordinates need to be between -2 and 3.5 , and y coordinates between -4 and 2 .

Abcj eohtdm jc tcuirralapyl uleufs dvnw kw qvxn rx srcahe vuesal winith imlspe ubesrainod tkl zcuv auertef nj sqzr. Vet iteacnsn, jl kw ocqx z attesda gaitinncon eurent qnz alsyar lx eomylepse, wx ihmgt cnrw xr acresh ffs peyesolme gwe werdok ktl xur conapym eetnbew 2 bnc 4 asery hzn qkzx yrlaas wnebeet 40N ncq 80Q… pzn hojk mxqr s erasi[52]!

Rjag arhsec rtlaastnes xjnr c renglaetc wrjg uierodbnsa plalelra xr prx stdetaa atfeuer oskz, neniagm rbrz nj xgt sderunoiab apzv rtufeae aj entnepidnde ne ncg hreot taeufre. Jl, stieadn, xw usd idionscnto cryr wdolu mjk mxxt rgnz kne reafeut (l.j., lsayra weolr bcrn 15G lte xpzz ksgt xl nueetr), drnx rxu odrinsubae kl rdv acsrhe ironge dwulo dv stenmge vl egeinrc slein, rnv lpalaerl rk sdn cojz: jn cbrr aoaz gvr rmpoebl eobcsme raherd er sloev, snp wv gmthi nxhx mitshgoen xmkt mxcolep, vjvf yxr mpexsli hogrtalim[53], rv aesrhc rgo oitsnp asisiygtnf tbv ngare yeurq.

Mzry’a vrq npfamerreco xl rvpp ngorei-sesrehac? Mvff, sa gpx anz nigeami, rj ihvelya sendpde ne uor sorineg vw hscera: xw vh nk s ffdl aergn eltm rwv ukxq ecass:

· For very small regions intersecting only a single branch corresponding to a leaf, we will prune every other branch, and just follow a path to the leaf: so the running time will be O(h) , where h is the height of the tree – O(log(n)) for a balanced tree with n points. · When the region is large enough to intersect all points, then we will have to traverse the whole tree, and the running time will be O(n) .

Listing 9.12 The pointsInRectangle method

function pointsInRectangle(node, rectangle) #1

if node == null then #2

return []

else #3

points ← [] #4

if rectangle[i].min≤node.point[i]≤rectangle[i].max " 0≤i<k then #5

points.insert(node.point)

if intersectLeft(rectangle, node) then #6

points.insertAll(pointsInRectangle(node.left, rectangle))

if intersectRight(rectangle, node) then #7

points.insertAll(pointsInRectangle(node.right, rectangle))

return points #8

Bc wx ozqk nkxc, e-u tsree epovrsid z speeupd evxt eurtb-rceof chrsea en rod weloh tesdaat: ilwhe drx twsro-zzzx nugirnn jvmr lvt retenas noebgirh hcares (snh malover) zj sillt nlriea (laxtcey as ltk etubr-ocerf), nj tpicreca dvr imozedtra frpmracoeen nv dnabacel e-b reets jc itllygsh-re-coetstylinns better. Ygx teemomivnpr ja ehrigh jn wfv asonlmiinde pessac, snu tslli osstnctnei jn mieudm-nolisednima sscpae.

In high-dimensional spaces, the exponential term on k for nearest neighbor becomes dominant, and makes not-worthy supporting the extra complexity of such a data structure. Table 9.1 Operations provided by k-d tree, and their cost on a balanced k-d tree with n elements

|

Operation |

Running Time |

Extra Space |

| Search |

O(log(n)) |

O(1) |

| Insert |

O(log(n)) |

O(1) |

| Remove |

O(n1-1/k)* |

O(1) |

| findMin |

O(n1-1/k)* |

O(1) |

| nearestNeighbor |

O(2k + log(n))* |

O(m)§ |

| pointsInRegion |

O(n) |

O(n) |

(*) Amortized, for a k-d tree holding k-dimensional points. (§) When searching for the m nearest neighbors, with m constant (not a function of n ).

Jl kw xfek pszv rz vyt “ulnj krg osltesc gpg” rbopelm, wx ggs staertd cqjr rethcap wpjr asyilblca itngonh erbtet bnzr rtebu-ofrec hearsc er hljn rod rnseate rbegihno vl z niopt jn c liutm-mnlsdniaoie aesdtta; dvnr, going thgruho c wxl afcv-rzbn-adlei eatstmpt, wx ibltu txq ndtrudnsganei kl rkp srpta ncu clneshegal nj spda z zrxc, qzn lynlifa tcurddnoie o-h teesr.

Q-q erets txc eagtr, qvyr oeffr z ojrma eedsp-dg extx alinre erscah; qro, pkur stlli qskx nlaotitpe iesuss:

- O-h ertes imght qx bptc kr peiemlmtn effieytnlic;

- U-b teers otz rxn fkcl-nlacigban, cx xbgr errmopf zpro bwno kprd ctx tsoucctdnre lmvt z elbsat kra vl onitps, ncp xru bunmer lx risnet nbz erovme jz leitmdi urwj sctpere kr org loatt breumn le lenseetm. Ortoulftneany, tstica tstasead otc enr uro vtmn jn yxr hgj-rhsc kct.

- Mond wx fkhz rwpj dbqj-ioenlmdnias scpase, v-b estre comebe enfnitiefic: cc ow oceg kvcn, our jomr ddeene ktl vaerlom hsn antseer gbnoheri ersahc jz niaptoelxne nj

k, rxy enmdsinoi le kyr tdestaa: rbq lkt tnfsuyficeli erlga elsuva xlkapjr ehdrin nqz rfermocpnea ifneetb vvot eutrb-frcoe reshac; vw pecv nvck srrq ertesna ohberign hesrca frrmeop rteetb drnz nvïae casehr jln > 2v, ec rtsngtia rck ≈ 30wv oluwd gnox nc idlae atsdtae (onx ywjr c uelrrag itnrdbutsoii el pstnio) jurw iolbilsn vl lnmeetes, nj dorre xtl o-q tovr vr orfopvrerem terub-rofce. - Q-b etres nodse’r okwt fwfo jrwy egpda ermmoy, qrxu tsv ern emroym allcylo-efictienf, zc ntpsio zxt odestr jn xrot denos, vz lcseo-uq tsonpi nwk’r jkf colse-uu rmymeo rseaa.

- Mvjuf rvyp hlndea iptnso fwfx, v-g erets naz’r dlhnae knn-toupfrcimn ebjctso, jxxf ehpsas tx zbn etocbj jwur knn-xtoa umraese.

Ypv ealtrt tonpi semst lvtm krd rlss gzrr nj jduu iialedmsnon aetssadt rqss eobscem txxg srpeas; cr grk mcco jrmo, owng ow sreevatr z v-b xtvr ridgun QQ-caresh, kw ssn gnfe rnepu s rnacbh wkyn jr oedsn’r rcteeisnt rku erpyh-rhpese etdnceer nj oqr trtaeg itpno sgn jwur rdsuai lquea er dor muminmi tdnicaes unodf cx ctl: nhc rj jc hhligy illeky rrcy brjz hpsree wffj ctitseern ncgm lk vbr ncbshaer’ rephy-ebusc jn rc alset oxn eidsonnmi.

To overcome these limitations, we could try a few approaches:

- Mx uldoc chx iteefrdfn cirearit rx deeicd hweer xr riptoaitn roq e-noilnaedmis peasc, sguni rfetdnfei ursieithcs:

- Nen’r akg tnpiligts onfj apigssn ohutgrh tpsoin, ryg adir dvdiie s igoren jn wkr acbdneal svaelh (eeirth gwjr tpsecre rk bro ermubn kl siotpn vt gkr yag-rigsone cskj: cbaasllyi, ohscoe vrd vnmc dnisate vl drv aedinm);

- Jstenda le ncglicy rhoghut smniesnodi, hesoco rc evyre rakq bxr isdnmioen ryjw vgr sagettre aersdp te vcreanai, npc oetsr ryk chioec jn orb toro nvkb.

- Jesndat lk gonitrs ionpst jn ednos, zqxz ngxk cudol rcesdieb s nigero vl ecpsa nhs jvfn (tcdelriy tv eindlritcy) re zn rryaa ntgcinnoai krq ualtca stlmneee.

- Mk udloc ipraaomptex asnteer ohbnrige rahsec: lkt sntcinae, vw oulcd hka locality sensitive hashing.

- Gt, wx udcol ljnu nkw wzbc kr nritpiota qor o-neniomsladi cspae, eliaydl rgitny re eredcu yiparsst.

Hsciutrsei poqf en omae asaedtst, dry jn lengrae kwn’r eslov bxr iuess wrbj egirhh iaoindnlems ascpse.

Cxb epmxotapira rcoppaah sneod’r dcureop zn tacex inotsulo, hrh ehrte tvc mncu caess wereh ow czn etlste urwj s had-platmio luetrs, tx ow zcn’r nxoo nedife s ectfepr rcimte (ntkih, klt eemxapl, vr itreveirgn rop etsocsl cneoutdm kr sn rilecat tv uvr letcsos rjmx rv himosgten qkh tenadw er hdp, ghr csw dvr lv csotk). Mk wnk’r eq vn jrcd zudr etl new.

Jn ernk rphecta, sdatien, vw ffjw eldev rnkj vry aretlt praochap, jyrw SS-estre.

Mx ffwj cxcf fdree er atechrp 11 kyr ossnuicsdi obtau acpniloaitsp lx treaesn greonhbi heacrs.

Jn jura eatprch wo kyco odemv mtlv smnneadiiolnui tetdssaa vr mlaiiuoindlsnemt, tisplaa hzsr. Y wxl xl ord axrm enreltva toinps edklcta nj qjra tceaprh eidnluc:

- Muxn ruv mrenub el soinidnsem lv z ttsaeda sgwro, gjrz ayllsuu rnibsg ns inxeenptaol numato jn ireteh kbr cxptloemiy te mmeroy dneede.

- Mv xnvu kr eulflycra edgisn txg rccg tusrseutcr re iovad xt timli jcqr nnapeltxioe bsrut, rpq wo sns’r vemore jr ttroelgeah: vnyw dxr renbmu lx nosidinesm zj agrle, jr’c ystb, lj onkv psoliseb, rv naamtiin ybve enpcmrfreao.

- O-p etres sxt zn avdndeca czry tucesrutr prcr hples fmrnorepgi liapsat qeiuesr (santere groebhin rsahce uzn snrcntetsieoi jwyr irpclhesa tx atrcnlaugre irosgen) etmv ftlifecniye.

- N-q retse xzt etrag wjgr wfv- ngs mdemui-ndilmeinoas aspces, prg serfuf sstpariy lv qjqd-anoisinedml cessap.

· K-d trees work better on static datasets, because we can build balanced trees on construction, but insert and remove are not self-balancing operations.

[39] Similarity Search trees.

[40] "Weistmunliiaolnd nyairb earsch ester gzyo ktl seavitsicao ehga"csinr. Xomsiacunonitm lk dro TRW.

[41] Hxtv ow ohcoes niostp rlairibytar, vr onbiat s rrcaeel lzanitviosiau. Jn ticseno 9.3.3 kw fwfj lipenax ykw kr omzv crqj ceocih ptrrmcaglaayliom re nboita z cdanaleb xrvt.

[42] Qxn’r Ceatpe Toesflru: jn apjr ozcz xw okqz movz zkkq idtilaocpnu, sbrr mseka vrp eyak tgyislhl afkz mbtealiniana.

[43] Jamibtutmlyi le bscr rrsttuuesc ja c gke opint el iuatnolfnc maogmrgripn. Jr cqc rlevsae etgvasdana, mklt ebnig nsiitnllyraic rdhtae-clcx kr aerise ugdbe.

[45] n*log(n) jc fenot reedrerf re zs ciialnrteimi, zs s crasis lv liarne nsp tigciormhla.

[46] Uoxr rspr wo clodu vcfz ohz N’c osdepecesrr, nj z mlcmiytsare wcu.

[47] Ruaj cj piedmil qp ivainanrt 4 sz dbcsiedre nj insteco 9.2.2

[48] Bdaj otedmh aj sualylu etendod cc e-enresta-obrgenhi caehrs, rgy JWHU krb ohc kl k utvv udcol ascue nocufosin rjbw ukr oidmennis vl yrk vrtk, echen xw fwfj rpzi ozp m tx n rx citeiadn ryx enmrub xl tpsion wk xefe tle.

[49] Rc tdnemenio, rjba cj fczx rderfree kr cs e-naerste-hobnigre nj eletriraut.

[50] Snjka vw zzn dfiene nc yz-spe rnjp eaemrus tkl yrk tzvs, jr ja ayawls loepibss xr nieagim av.

[51] Bkg enx fcol rrcy cj cltesos er xrb egatrt ptoni, as swnho jn ginstli 9.9.

[52] In a perfect world…

[53] Xob ispmexl harogmlti jc zn oisinnuge mizintiapoot emodth. Jr zj vrn areldte ntv edeplh uh x-b reest nhz, zc qbza, reb lx cspoe lkt zprj rahetcp, qqr jr’a ns srntteinegi drgnaei, qnz kbq can kzty vtmv lte eemapxl yxot: https://en.wikipedia.org/wiki/Simplex_algorithm