2 Harnessing the power of LLMs

This chapter covers

- Understanding the basics of Large Language Models (LLMs)

- Connecting to and consuming the OpenAI API

- Exploring and using Open Source LLMs with LM Studio

- Prompting LLMs with prompt engineering

- Choosing the optimal LLM for your specific needs

The term large language model (LLM) has become a ubiquitous descriptor for a form of artificial intelligence. These LLMs are built on generative pretrained transformers, or GPTs. While other architectures also power LLMs, the GPT form is currently the most successful.

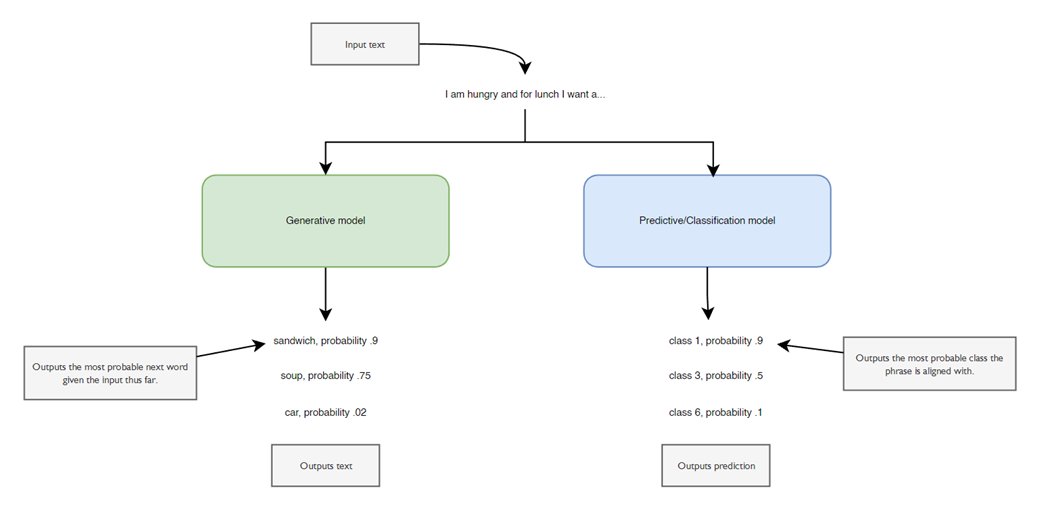

LLMs and GPTs are generative models. That means they are trained to generate rather than predict or classify content. To illustrate this further, consider Figure 2.1, which shows the difference between generative and predictive/classification models. Generative models create something from the input, whereas predictive and classifying models assign it.

Figure 2.1 The difference between generative and predictive models
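The contrast in Figure 2.1 can also be sketched in a few lines of Python. This is a toy illustration only, not how an LLM works internally: the keyword lists, the tiny `CORPUS`, and the function names `classify_sentiment` and `generate` are all hypothetical, chosen just to show that a classifier maps input to a label from a fixed set, while a generator produces new content that continues the input.

```python
import random

# Hypothetical toy corpus, used only for this illustration.
CORPUS = "the cat sat on the mat and the dog sat on the rug".split()


def classify_sentiment(text: str) -> str:
    """Predictive/classification: assign the input one label from a fixed set."""
    positive = {"good", "great", "love"}
    negative = {"bad", "awful", "hate"}
    words = set(text.lower().split())
    score = len(words & positive) - len(words & negative)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


def build_bigrams(tokens: list[str]) -> dict[str, list[str]]:
    """Map each word to the list of words that follow it in the corpus."""
    table: dict[str, list[str]] = {}
    for current, following in zip(tokens, tokens[1:]):
        table.setdefault(current, []).append(following)
    return table


def generate(prompt: str, length: int = 5, seed: int = 0) -> str:
    """Generative: extend the prompt with new words, one at a time."""
    rng = random.Random(seed)  # seeded so repeated runs give the same text
    table = build_bigrams(CORPUS)
    words = prompt.lower().split()
    if not words:
        return ""
    for _ in range(length):
        candidates = table.get(words[-1])
        if not candidates:  # no known continuation, so stop early
            break
        words.append(rng.choice(candidates))
    return " ".join(words)


if __name__ == "__main__":
    print(classify_sentiment("I love this great book"))  # a label
    print(generate("the"))  # new text that continues the prompt
```

The classifier can only ever return one of three labels, whereas the generator returns text that did not exist in that form before. An LLM does the second kind of work at a vastly larger scale, using a learned model rather than a lookup table.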

We can further define an LLM by its constituent parts, as shown in Figure 2.2. Data represents the content used to train the model. Architecture is an attribute of the model itself, such as the number of parameters or the size of the model. Models are then further trained for the desired use case, such as chat, completions, or instruction. Finally, fine-tuning is an additional step that refines the input data and model training to better match a particular use case or domain.
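One way to keep these four parts straight is to write them down as a small record. The sketch below is purely illustrative: the class name `LLMProfile`, its field names, and the example values are assumptions made for this example and do not describe any real model or library.

```python
from dataclasses import dataclass
from typing import Optional

# The three use cases named in the text; the spelling of each is an assumption.
TRAINING_TYPES = {"chat", "completions", "instruction"}


@dataclass(frozen=True)
class LLMProfile:
    """Hypothetical record of the parts that define an LLM."""

    data: str                              # content used to train the model
    parameters_billions: float             # architecture: size of the model
    training: str                          # use case the model was trained for
    fine_tuned_for: Optional[str] = None   # domain refinement, if any

    def __post_init__(self) -> None:
        if self.training not in TRAINING_TYPES:
            raise ValueError(f"unknown training type: {self.training!r}")

    def summary(self) -> str:
        base = (
            f"{self.parameters_billions}B-parameter {self.training} model "
            f"trained on {self.data}"
        )
        if self.fine_tuned_for:
            return f"{base}, fine-tuned for {self.fine_tuned_for}"
        return base


if __name__ == "__main__":
    # Made-up values, for illustration only.
    example = LLMProfile(
        data="public web text",
        parameters_billions=7.0,
        training="chat",
        fine_tuned_for="customer support",
    )
    print(example.summary())
```

Describing a model this way makes comparisons easier later on: two models may share an architecture yet differ in training type or fine-tuning, and those differences often matter more for a given task than raw size.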