10 Model inference & serving

This chapter covers

- BentoML for model serving and building model servers

- Observability and monitoring in BentoML

- Packaging and deploying BentoML services

- Using BentoML and MLflow together

- Using only MLflow for model lifecycles

- Alternatives to BentoML and MLflow

Now that we have a working model training and validation pipeline, it's time to make the model available as a service. In this chapter, we will serve the object detection model and the movie recommendation model using BentoML, a framework for building and serving machine learning models at scale.

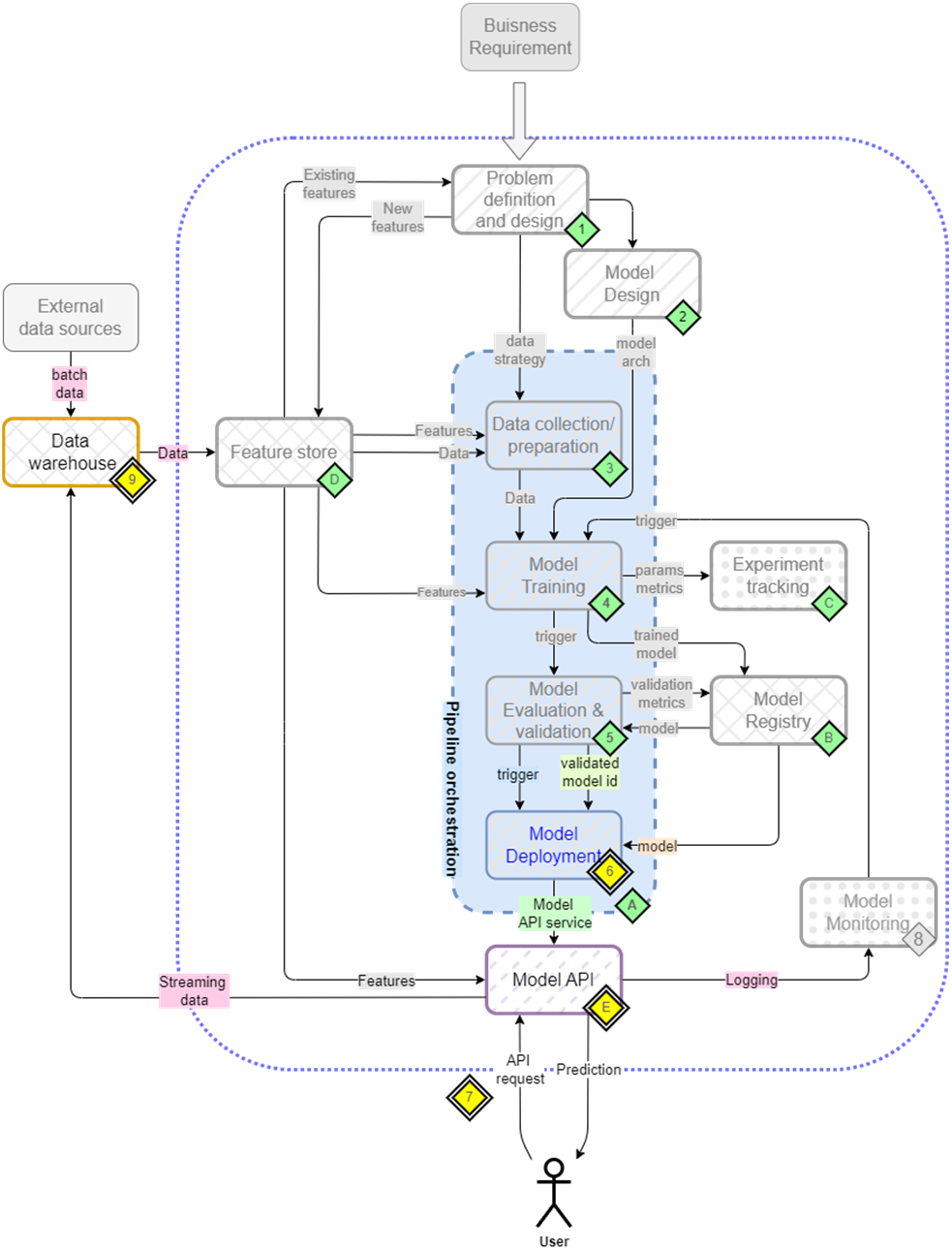

You'll deploy the two models you trained in the previous two chapters, gaining hands-on experience with realistic deployment scenarios. We'll start by building and deploying the service locally, then package the service in a container for deployment and integrate it into your ML workflow (Figure 10.1).

Figure 10.1 The mental map, where we are now focusing on model deployment (6) and making the model available as an API (E)

Self-service model deployment offers several advantages for engineers practicing MLOps: