chapter nine

9 Model training and validation: Part 2

This chapter covers

- Storing and retrieving datasets with Kubernetes Persistant Volumes

- Using MLFLow and Tensorboard to track and visualize training

- Importance of lineage and experiment tracking

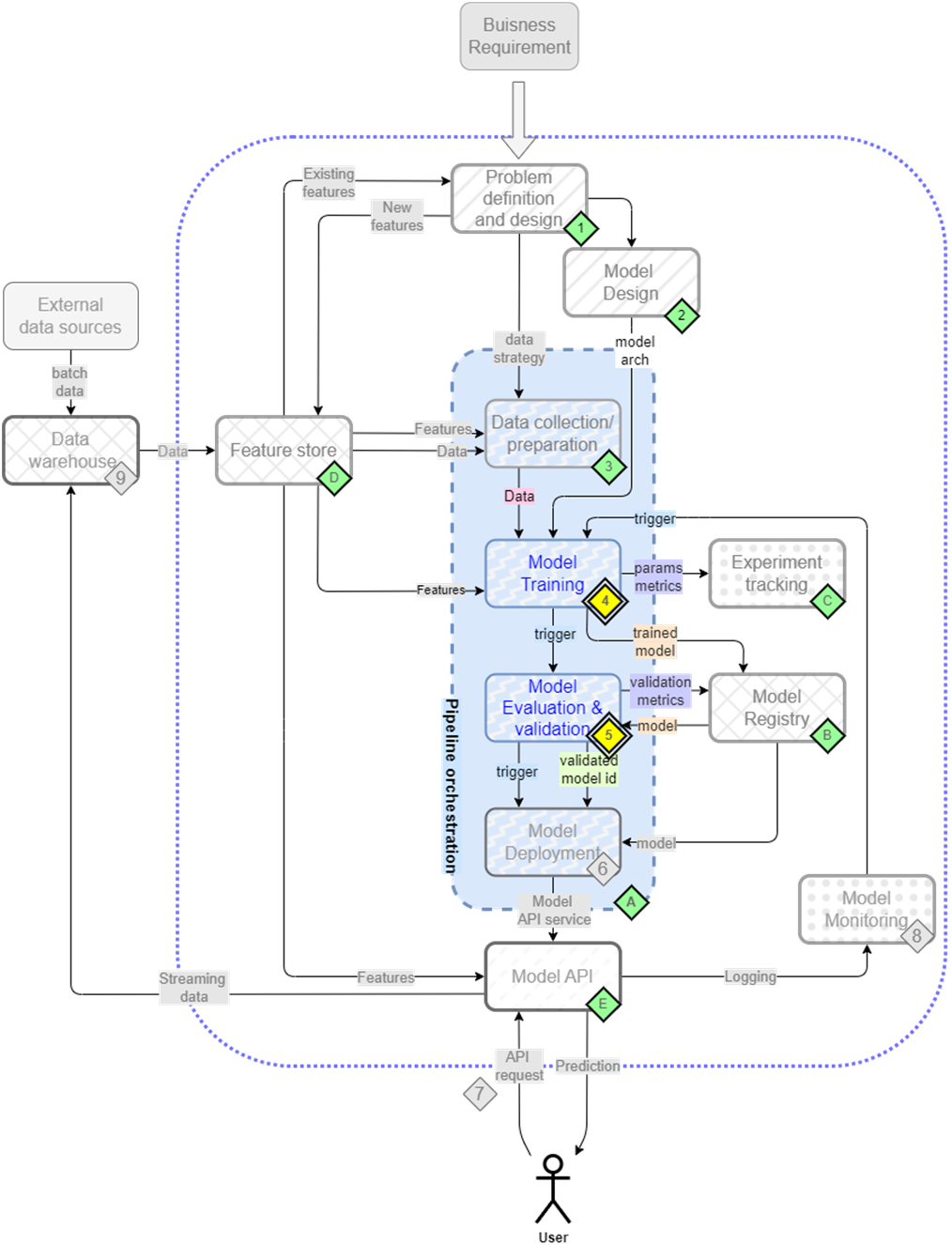

In production ML systems, effective model training extends beyond just algorithms and datasets - it requires robust infrastructure for data management, experiment tracking, and model versioning (Figure 9.1).

Figure 9.1 The mental map where we continue focusing on the second and third step/component of our pipeline - model training(4) and evaluation(5)

While Chapter 8 focused on building basic training pipelines, this chapter tackles the challenges of scaling these pipelines for production use. Through hands-on examples using both our ID card detection and movie recommendation systems, we'll explore how to manage large datasets efficiently with Kubernetes Persistent Volumes, track experiments systematically with MLFlow and Tensorboard, and maintain clear model lineage for production deployments.