Appendix C. Qwen3 LLM source code

As mentioned in the main chapters, the "from scratch" in this book refers to the reasoning techniques, not to the LLM itself. Implementing an LLM entirely from scratch would require a separate book; that is the topic of my book Build A Large Language Model (From Scratch) (http://mng.bz/orYv).

However, for readers interested in the Qwen3 implementation used in this Build A Reasoning Model (From Scratch) book, this appendix lists the source code for the Qwen3Model class. I implemented it in the book's reasoning_from_scratch Python package, and we import it from there as follows:

```python
from reasoning_from_scratch.qwen3 import Qwen3Model, Qwen3Tokenizer
```

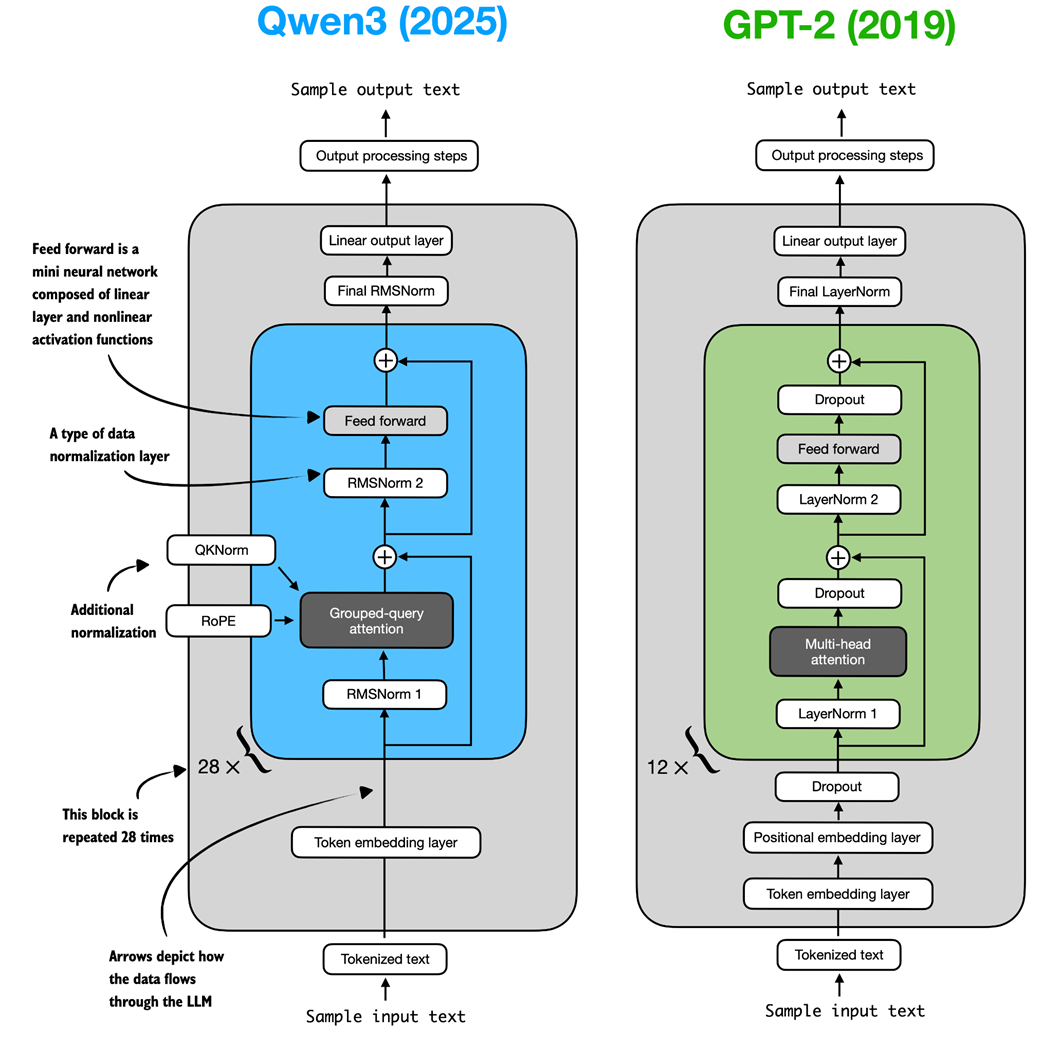

As shown in figure C.1, the Qwen3 architecture is very similar to GPT-2, which is covered in my Build A Large Language Model (From Scratch) book. While familiarity with GPT-2 is not required for this book, this appendix points out comparisons to GPT-2 for readers who know it. In fact, I wrote the Qwen3 implementation by porting the GPT-2 model from my other book to the Qwen3 architecture piece by piece, so that both follow similar style conventions, which improves readability.

Figure C.1 Architectural comparison between Qwen3 and GPT-2. Both models process text through embedding layers and stacked transformer blocks, but they differ in certain design choices.