Appendix F. Common approaches to model evaluation

F.1 Understanding the main evaluation methods for LLMs

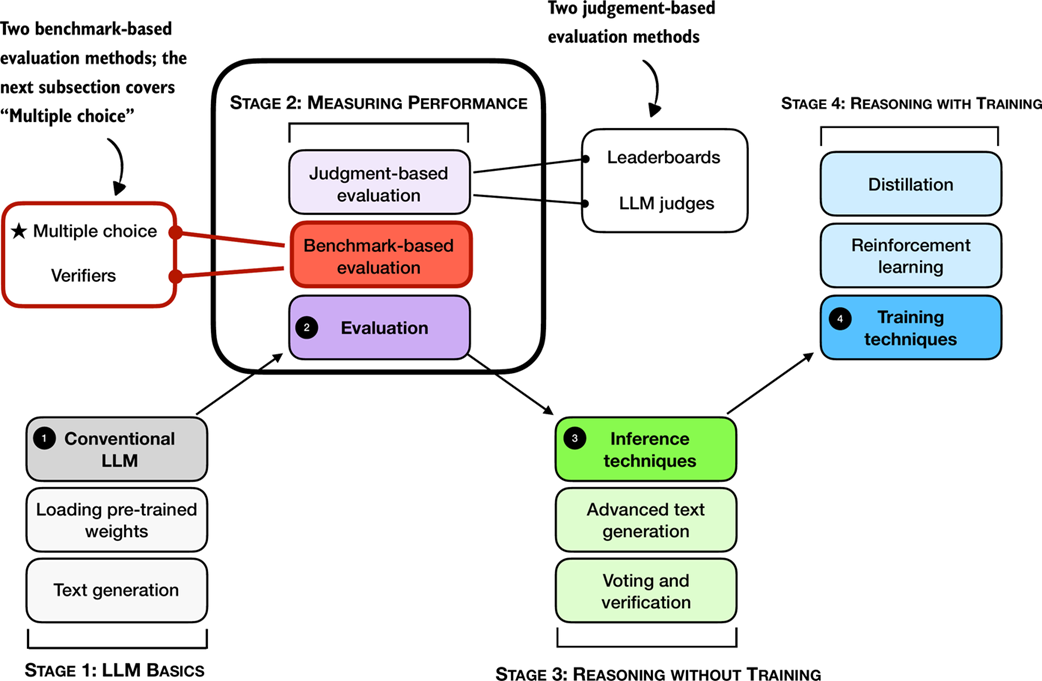

There are four common ways of evaluating trained LLMs in practice: multiple choice, verifiers, leaderboards, and LLM judges, as shown in figure F.1. Research papers, marketing materials, technical reports, and model cards (a term for LLM-specific technical reports) often include results from two or more of these categories.

Figure F.1 A mental model of the topics covered in this book with a focus on the two broad evaluation categories, benchmark-based evaluation and judgment-based evaluation, covered in this appendix.

Furthermore, as shown in figure F.1, the four categories introduced here fall into two groups: benchmark-based evaluation and judgment-based evaluation.

Other measures, such as training loss, perplexity, and rewards, are typically used internally during model development. (They are covered in the model training chapters.)

The following subsections provide brief overviews of each method.

F.2 Evaluating answer-choice accuracy

We begin with a benchmark‑based method: multiple‑choice question answering.

Historically, multiple-choice benchmarks such as MMLU (short for Massive Multitask Language Understanding, https://huggingface.co/datasets/cais/mmlu) have been among the most widely used evaluation methods. An example task from the MMLU dataset is shown in figure F.2.
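As a rough sketch of how answer-choice accuracy can be computed, the following Python snippet formats a question with lettered options, extracts the predicted letter from a model response, and reports the fraction of correct answers. The field names `question`, `choices`, and `answer` (an integer index) are assumed to mirror the layout of the MMLU dataset on the Hugging Face Hub. The helper names, the two toy questions, and the `always_b` stand-in model are hypothetical and serve only as illustration.

```python
import re

LETTERS = "ABCD"


def format_prompt(question, choices):
    """Render a question and its options as a lettered multiple-choice prompt."""
    lines = [question]
    for letter, choice in zip(LETTERS, choices):
        lines.append(f"{letter}. {choice}")
    lines.append("Answer:")
    return "\n".join(lines)


def extract_letter(response):
    """Return the leading answer letter (A-D) of a response, or None.

    Simplified on purpose: it only accepts a letter at the very start,
    optionally wrapped in parentheses and followed by punctuation,
    whitespace, or the end of the string.
    """
    match = re.match(r"\s*\(?([A-D])\)?(?:[.:)\s]|$)", response)
    return match.group(1) if match else None


def accuracy(examples, predict):
    """Fraction of examples where the predicted letter matches the answer key."""
    correct = 0
    for ex in examples:
        prompt = format_prompt(ex["question"], ex["choices"])
        predicted = extract_letter(predict(prompt))
        if predicted == LETTERS[ex["answer"]]:
            correct += 1
    return correct / len(examples)


# Toy examples (NOT taken from MMLU); "answer" is the index of the correct choice.
toy_examples = [
    {"question": "What is 2 + 2?",
     "choices": ["3", "4", "5", "6"], "answer": 1},
    {"question": "Which planet is closest to the Sun?",
     "choices": ["Mercury", "Venus", "Earth", "Mars"], "answer": 0},
]


def always_b(prompt):
    """Hypothetical stand-in for an LLM call; it always answers 'B'."""
    return "B"


if __name__ == "__main__":
    print(f"Accuracy: {accuracy(toy_examples, always_b):.2f}")
```

In practice, `always_b` would be replaced by a function that sends the prompt to the model under evaluation and returns its text output. The answer-extraction step usually needs more care than shown here, because models do not always respond with a bare letter.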