7 Improving GRPO for reinforcement learning

This chapter covers

- Interpreting training curves and evaluation metrics

- Preventing the model from exploiting the reward signal

- Extending task-correctness rewards with additional response-formatting rewards

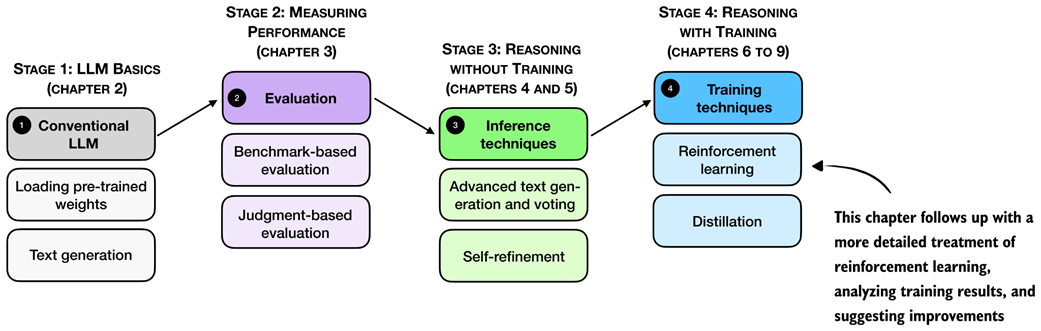

Previously, we implemented the GRPO algorithm for reinforcement learning with verifiable rewards (RLVR) end to end. Now, as shown in figure 7.1, we pick up from that baseline and focus on what happens when we train for longer.

Figure 7.1 A mental model of the topics covered in this book. This chapter provides deeper coverage of the GRPO algorithm for reinforcement learning with verifiable rewards.

In particular, we will discuss which metrics are worth tracking (beyond reward and accuracy), how to spot failure modes early, and why training can become unstable even when the code is "correct." We then introduce practical GRPO extensions and fixes used in reasoning-model training.

7.1 Improving GRPO

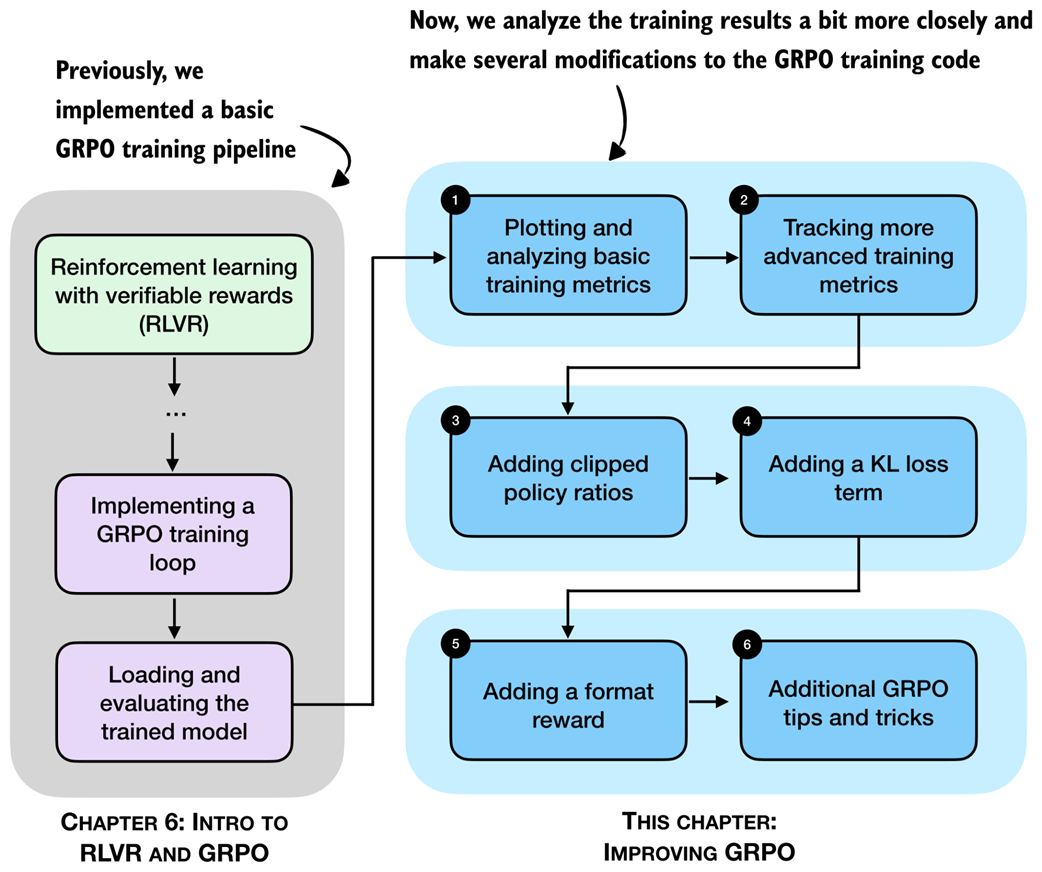

After implementing GRPO (group relative policy optimization) in the previous chapter, we now revisit the training run and analyze it more thoroughly. We also discuss a collection of practical tips and algorithmic choices that become important in real training runs. These topics are summarized in the chapter overview in figure 7.2.

Figure 7.2 An overview of the topics covered in this chapter.