chapter three

3 Enabling actions: Tool use

This chapter covers

- LLM limitations and why they need tools

- Tool calling and execution

- Building and integrating custom tools

- MCP for tool standardization

On their own, LLMs can't access external data or interact with external systems. To do so, they need tools, along with the ability to select and use those tools; this capability is central to implementing agents. A basic agent performs a task step by step, repeatedly selecting the next action to take, and tools are what make those actions possible.
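The step-by-step loop described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a real agent framework. `fake_llm` is a hypothetical stand-in for an actual model call, and `get_weather` is a hypothetical stubbed tool; neither comes from any library. The point is the division of labor: the model only chooses the next action, while the surrounding code executes the chosen tool and feeds the result back for the next step.

```python
from typing import Callable


# Hypothetical tool: a stubbed "external data source".
# A real tool would call an API or query a system here.
def get_weather(city: str) -> str:
    return f"Sunny in {city}"


# Registry of tools the agent is allowed to use, keyed by name.
TOOLS: dict[str, Callable[[str], str]] = {"get_weather": get_weather}


def fake_llm(task: str, history: list[tuple[str, str]]) -> dict:
    # Hypothetical stand-in for a real LLM call: it requests one tool call,
    # then produces a final answer using the tool's result.
    if not history:
        return {"action": "call_tool", "tool": "get_weather", "input": "Paris"}
    _, observation = history[-1]
    return {"action": "finish", "answer": f"Answer to '{task}': {observation}"}


def run_agent(task: str, max_steps: int = 5) -> str:
    history: list[tuple[str, str]] = []
    for _ in range(max_steps):
        decision = fake_llm(task, history)  # the model selects the next action
        if decision["action"] == "finish":
            return decision["answer"]
        tool = TOOLS[decision["tool"]]      # the application looks up the tool...
        result = tool(decision["input"])    # ...and executes it
        history.append((decision["tool"], result))  # result goes back to the model
    return "Stopped: step limit reached"


print(run_agent("What's the weather in Paris?"))
```

The `max_steps` cap is a simple guard against a model that never decides to finish; real agents typically need some similar stopping condition.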

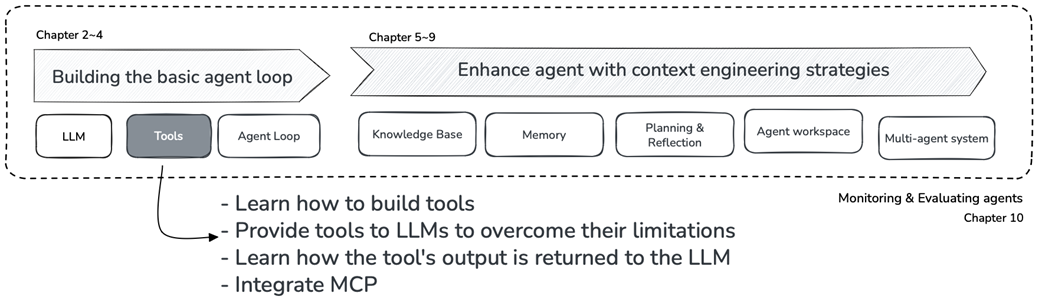

Now, let’s explore how to build tools and tell the LLM how to use them. We'll also look at how the LLM chooses the appropriate tool for a given task and how the results of tool executions are relayed back to the LLM. Then we'll examine the Model Context Protocol (MCP), an initiative introduced by Anthropic to standardize how tools are developed and used. Figure 3.1 shows where this chapter fits in the overall structure of the book.

Figure 3.1 Book structure overview, with chapter 3 in focus