6 Adding memory to your agent

This chapter covers

- The role of memory in LLM agents

- Managing context growth with sliding window, compaction, and summarization

- Implementing sessions for multi-turn conversations

- Building asynchronous human-in-the-loop workflows

- Creating long-term memory for cross-session knowledge retention

Memory is what separates a stateless tool from an intelligent assistant. Without memory, an agent cannot recall previous events within the same task, continue conversations from earlier sessions, or learn from past experiences. Each interaction starts from scratch, forcing users to repeat context and preventing the agent from improving over time.

This chapter addresses memory in three usage patterns. First, we implement context optimization strategies to prevent context explosion during complex problem-solving. Second, we build Session and SessionManager to maintain conversation continuity across multiple interactions, extending this architecture to support asynchronous human-in-the-loop workflows. Finally, we create a long-term memory system that extracts, stores, and retrieves knowledge across session boundaries using vector search.

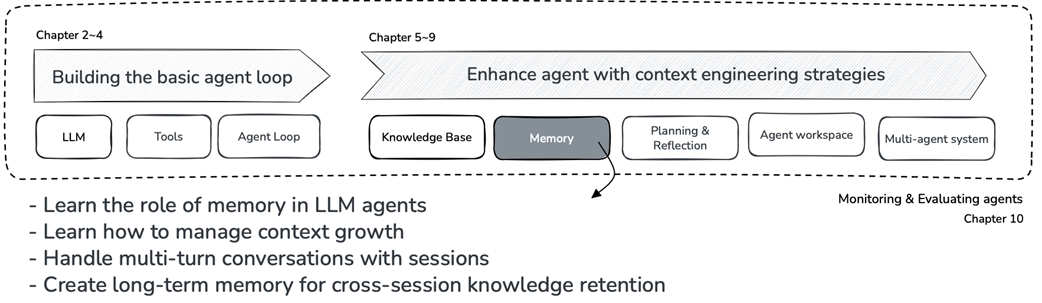

Figure 6.1 Book structure overview: Chapter 6 in focus.