chapter three

3 Grounding Outputs with RAG

This chapter covers

- Retrieval augmented generation (RAG) and how it overcomes limitations of stand-alone LLMs

- Core components of a RAG architecture - retrievers, generators, orchestrators

- Indexing and structuring knowledge sources to enable relevant passage retrieval

- Building sample RAG systems with LangChain for simplifying orchestration

In our prior chapter, a world of possibilities opened in constructing conversational AI through prompting - carefully crafting input texts to large language models (LLMs) to shape helpful, eloquent chatbot responses. However, despite the disruptive potential, major gaps remain in flexibility for real-world assistance.

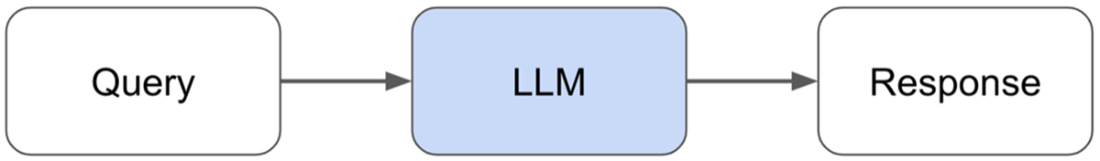

Figure 3.1 Basic prompting through interacting with LLM directly. [1]

With basic prompting (shown in figure 3.1), LLMs have no direct means to access live external data streams beyond their training corpora. However, allowing chatbots to incorporate dynamic knowledge is crucial, as we see below for our retail ecommerce chatbot. Could prompting alone enable a shopper asking:

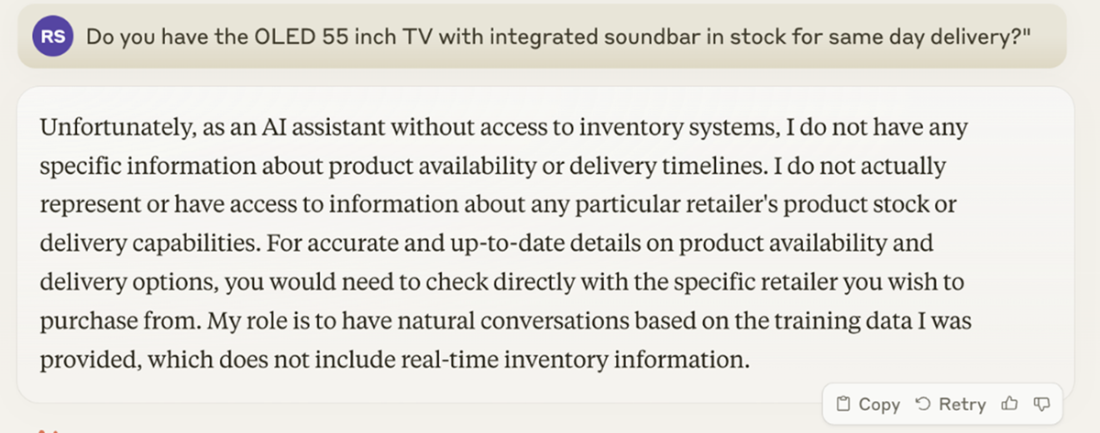

Figure 3.2 Asking an LLM (Claude) about a specific question related to inventory systems