4 Embeddings and Vector Search

This chapter covers

- How embedding models power retrieval-augmented generation systems by mapping text into vector space

- Why embedding quality determines whether the right documents are retrieved

- Choosing between commercial, open-source, and domain-specific embedding models based on your domain, constraints, and goals

- Designing hybrid and multi-stage retrieval pipelines that combine dense, sparse, and reranking components for better precision

- A hands-on walkthrough for building a hybrid retriever for an employee policy chatbot

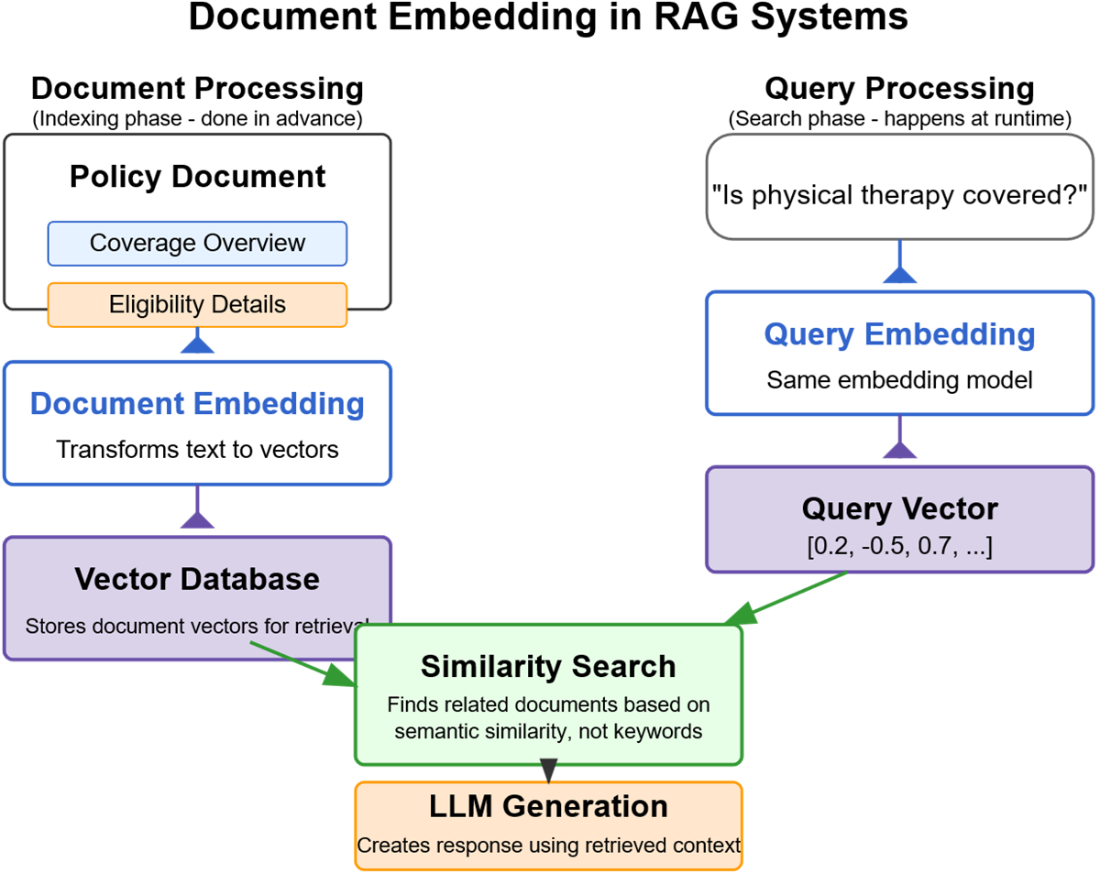

A major healthcare company launched a chatbot designed to help patients navigate their insurance plans. It was built using Retrieval-Augmented Generation, or RAG, backed by a well-trained LLM and connected to internal policy documents (figure 4.1). On paper, everything looked solid. The system had access to accurate information, and the model could generate fluent, helpful responses.

Figure 4.1 Document embedding in RAG systems

But shortly after launch, users began reporting problems. The chatbot frequently failed to answer questions that should have been easy. When someone asked whether physical therapy after surgery was covered, the model gave either a vague reply or an outright “I don’t know”—even though the answer was clearly stated in the documents it had access to. In some cases, it even gave outdated or incorrect information.