I started my career in image processing. I worked in the Missile Systems Division of Hughes Aircraft on infrared images, doing things like edge detection and frame-to-frame correlation (some of the things that you can find in any number of applications on your mobile phone today—this was all the way back in the 80s!).

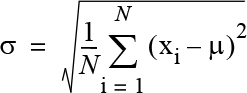

One of the calculations we perform a lot in image processing is standard deviation. I’ve never been particularly shy about asking questions, and one that I often asked in those early days was about that standard deviation. Invariably, a colleague would write down the following:

$$\sigma = \sqrt{\frac{1}{N}\sum_{i=1}^{N}\left(x_i - \mu\right)^2}$$

But I knew the formula for standard deviation. Heck, three months in I had probably already coded it half a dozen times. I was really asking, “What is knowing the standard deviation telling us in this context?” Standard deviation is used to define what is “normal” so we can look for outliers. If I’m calculating standard deviation and then find things outside the norm, is that an indication that my sensor might have malfunctioned and I need to throw out the image frame, or does it expose potential enemy actions?
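
To make the "define normal, then look for outliers" use concrete, here is a minimal Python sketch. It is only an illustration, not the code we ran back then: the function name `flag_outliers`, the threshold `k`, and the `frame_means` values are all hypothetical, and it assumes the population form of standard deviation.

```python
import statistics

def flag_outliers(values, k=3.0):
    """Return indices of values more than k standard deviations from the mean.

    Hypothetical helper for illustration; k is an assumed threshold.
    """
    mean = statistics.fmean(values)
    sigma = statistics.pstdev(values)  # population standard deviation
    if sigma == 0:
        return []  # every value is identical, so nothing stands out
    return [i for i, v in enumerate(values) if abs(v - mean) > k * sigma]

# Made-up per-frame mean intensities; frame 5 is much brighter than the rest.
frame_means = [100.0, 101.0, 99.0, 100.0, 102.0, 180.0, 98.0, 100.0]
suspect_frames = flag_outliers(frame_means, k=2.0)
```

The calculation can flag frame 5, but it cannot say whether that frame reflects a failed sensor or something real in the scene. That judgment is exactly the part the formula does not supply.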

What does all of this have to do with cloud-native? Nothing. But it has everything to do with patterns. You see, I knew the pattern—the standard deviation calculation—but because of my lack of experience at the time, I was struggling with when and why to apply it.