4 Loading data into DataFrames

This chapter covers

- Creating DataFrames from delimited text files and defining data schemas

- Extracting data from a SQL relational database and manipulating it using Dask

- Reading data from distributed filesystems (S3 and HDFS)

- Working with data stored in Parquet format

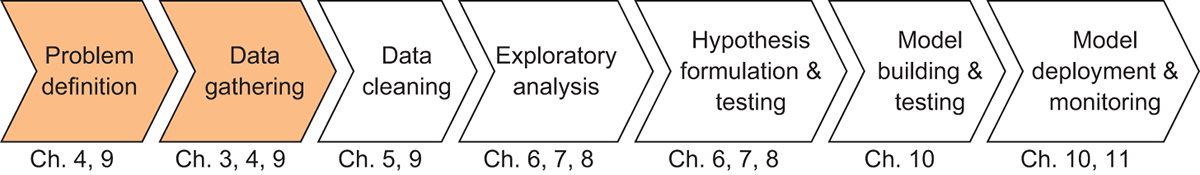

I’ve given you a lot of concepts to chew on over the course of the previous three chapters—all of which will serve you well along your journey to becoming a Dask expert. But, we’re now ready to roll up our sleeves and get into working with some data. As a reminder, figure 4.1 shows the data science workflow we’ll be following as we work through the functionality of Dask.

Figure 4.1 The Data Science with Python and Dask workflow

In this chapter, we remain at the very first steps of our workflow: Problem Definition and Data Gathering. Over the next few chapters, we’ll be working with the NYC Parking Ticket data to answer the following question:

What patterns can we find in the data that are correlated with increases or decreases in the number of parking tickets issued by the New York City parking authority?

We might find that older vehicles are more likely to receive tickets, or that a particular color attracts more attention from the parking authority than others. Using this guiding question, we’ll gather, clean, and explore the relevant data with Dask DataFrames. With that in mind, we’ll begin by learning how to read data into Dask DataFrames.