chapter three

3 Text embeddings

This chapter covers

- Preparing texts for deep learning purposes, using word and document embeddings

- Weighing the benefits and drawbacks of self-developed text embeddings against pre-trained embeddings developed by others

- Implementing word similarity with Word2Vec

- Retrieving documents via document embeddings, using Doc2Vec

After reading this chapter, you will have a practical command of basic and popular text embedding algorithms, and you will have developed insight into how to use embeddings for NLP. We will go through a number of concrete scenarios to reach that goal.

But first, let’s review the basics of embeddings.

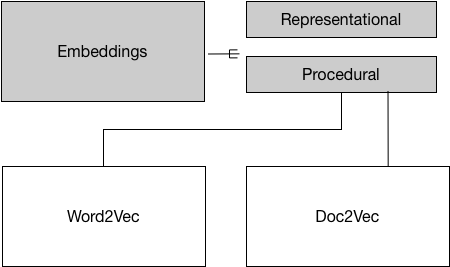

Figure 3.1. There are different types of embeddings: representational and procedural. We will discuss two types of procedural embeddings in detail: Word2Vec and Doc2Vec.

Embeddings are procedures for converting input data into vector representations. As mentioned in Chapter 1, a vector is like a container (such as an array) that holds numbers. Every vector lives in a multidimensional vector space as a single point, and each of its values is interpreted as a coordinate along a specific dimension. Embeddings result from systematic, well-crafted procedures for projecting ('embedding') input data into such a space.
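To make this concrete, here is a minimal Python sketch of an embedding in its simplest possible form: a hand-made lookup table (the hypothetical `toy_embedding`) that projects a few words into a three-dimensional vector space. The vector values are arbitrary numbers chosen for illustration. They are not the output of Word2Vec or any trained model. The sketch only shows that each word becomes a point whose values are coordinates, and that such points can be compared, here with Euclidean distance computed by Python's standard `math.dist`.

```python
import math

# Hypothetical toy embedding: a hand-made lookup table mapping each word to a
# point in a 3-dimensional vector space. The numbers are arbitrary; real
# embeddings such as Word2Vec learn these values from data.
toy_embedding = {
    "cat": [0.9, 0.1, 0.3],
    "dog": [0.8, 0.2, 0.35],
    "car": [0.1, 0.9, 0.7],
}

def embed(words):
    """Project a list of words into the vector space: one vector (point) per word."""
    return [toy_embedding[word] for word in words]

def distance(word_a, word_b):
    """Euclidean distance between the points that two words are embedded as."""
    return math.dist(toy_embedding[word_a], toy_embedding[word_b])

if __name__ == "__main__":
    for word, vector in zip(["cat", "car"], embed(["cat", "car"])):
        # Each value in the vector is the point's coordinate along one dimension.
        for dim, value in enumerate(vector):
            print(f"{word}: dimension {dim} = {value}")
    # With these made-up numbers, "cat" lies closer to "dog" than to "car".
    print(f"cat-dog: {distance('cat', 'dog'):.2f}")
    print(f"cat-car: {distance('cat', 'car'):.2f}")
```

A lookup table is the crudest possible "procedure". The embedding algorithms discussed in this chapter differ in how they arrive at the numbers, not in the idea that every input ends up as a point in a shared vector space.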