chapter seven

This chapter covers:

- How to implement attention in multi-layer perceptrons (static attention) and LSTMs (temporal attention).

- How attention may help to improve the performance of a deep learning model.

- How attention helps to explain model outcomes by highlighting attention patterns over the input data.
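As a rough preview of the first and third points, here is a minimal sketch of one common formulation of static attention, assuming a single input vector `x`. A dense layer (weights `W`, bias `b`, both hypothetical, untrained values here) produces one score per input feature. A softmax turns those scores into attention weights, and the input is multiplied elementwise by the weights. The function names `softmax` and `static_attention` are illustrative, not taken from any library, and this is not necessarily the exact implementation developed later in the chapter.

```python
import math
from typing import List, Tuple


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def static_attention(
    x: List[float], W: List[List[float]], b: List[float]
) -> Tuple[List[float], List[float]]:
    """Hypothetical sketch of feature-wise (static) attention.

    scores  = W @ x + b        (one score per input feature)
    weights = softmax(scores)  (non-negative, sum to 1)
    output  = weights * x      (elementwise reweighting of the input)
    Returns (output, weights) so the weights can be inspected.
    """
    scores = [
        sum(w_ij * x_j for w_ij, x_j in zip(row, x)) + b_i
        for row, b_i in zip(W, b)
    ]
    weights = softmax(scores)
    output = [a_i * x_i for a_i, x_i in zip(weights, x)]
    return output, weights


# Toy example with made-up parameters (not trained values).
x = [1.0, 2.0, 0.5]
W = [[0.0, 0.0, 0.0],
     [0.0, 1.0, 0.0],
     [0.0, 0.0, 0.0]]
b = [0.0, 0.0, 0.0]
out, attn = static_attention(x, W, b)
print([round(a, 3) for a in attn])  # prints [0.107, 0.787, 0.107]
```

In a trained model, `W` and `b` would be learned along with the rest of the network. Because the returned weights are non-negative and sum to 1, they can be read as how strongly the model attends to each input feature, which is the kind of pattern that can help explain a model's outcome. Temporal attention over LSTM states applies a similar weighting across time steps rather than across features; that variant is not shown here.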

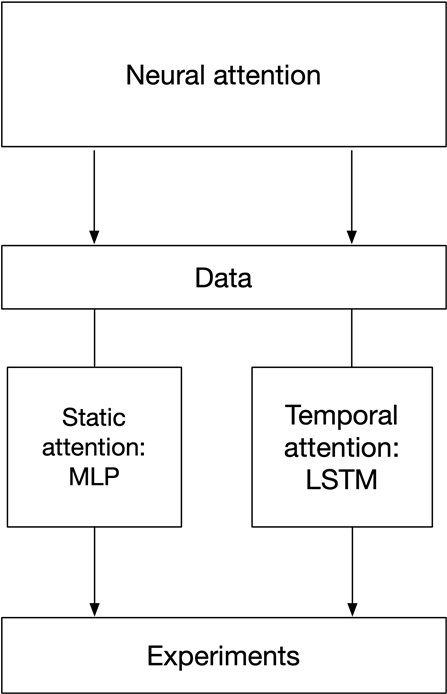

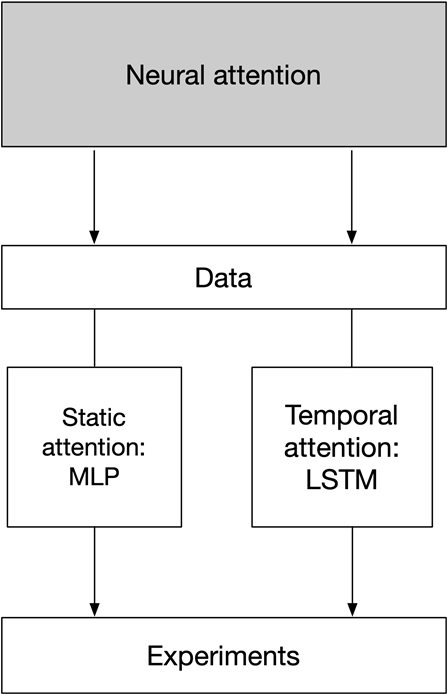

Figure 7.1 shows how this chapter is organized.

Figure 7.1. Chapter organization.