This chapter covers:

- Hard parameter sharing for multitask learning.

- Soft parameter sharing for multitask learning.

- How to combine hard and soft parameter sharing into mixed parameter sharing.

You will learn how to implement three different approaches of multitask learning and to apply these to practical NLP problems. In particular, we will apply multitask learning to three datasets:

- Two sentiment datasets, consisting of consumer product reviews and restaurant reviews.

- The Reuters topic dataset.

- A part-of-speech and named entity tagging dataset.

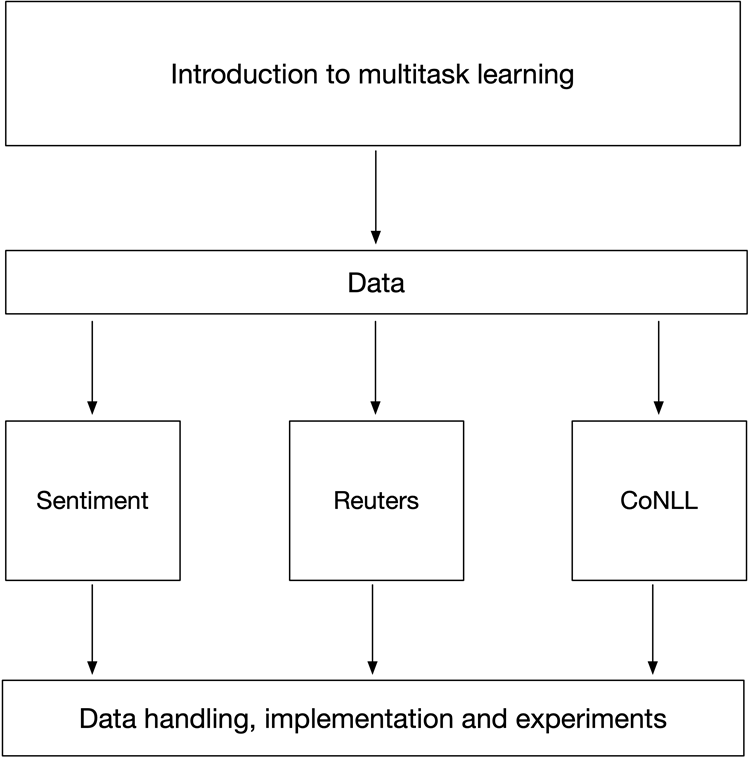

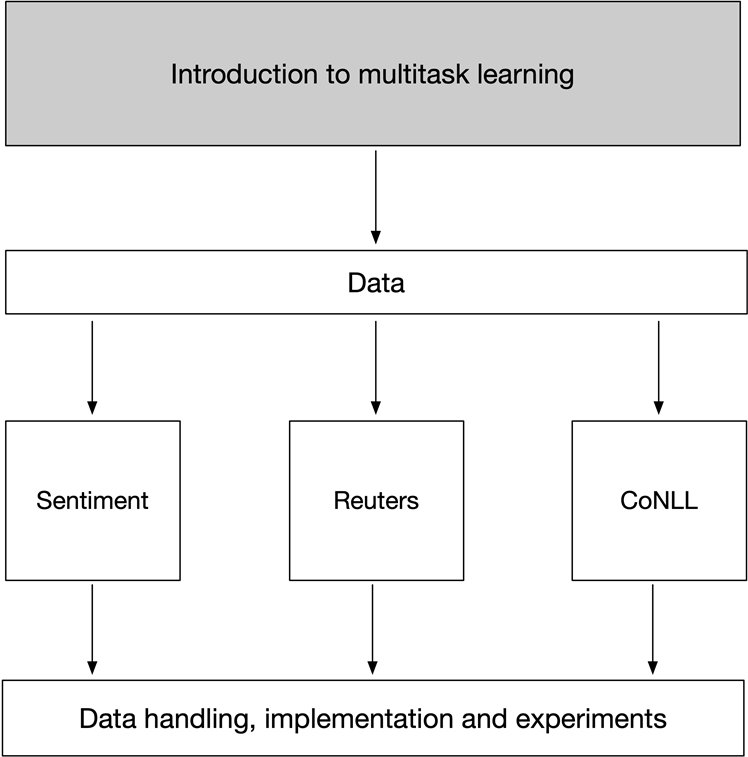

The following picture displays the chapter organization:

Figure 8.1. Chapter organization.

Multitask learning is concerned with learning several things at the same time. An example would be to learn both part of speech tagging and sentiment analysis at the same time. Or learning two topic taggers in one go. Why would that be a good idea? Ample research has demonstrated, for quite some time already, that multitask learning improves the performance on certain tasks separately. This gives rise to the following application scenario:

Scenario: Multitask learning.

You are training classifiers on a number of NLP tasks, but the performance is disappointing. It turns out your tasks can be decomposed into separate subtasks. Can multitask learning be applied here, and, if so, does it improve performance on the separate tasks when learned together?