chapter six

6 Using a neural network to fit the data

This chapter covers

- Activation functions: the key difference between neural networks and linear models

- Working with PyTorch’s `nn` module

- Solving a linear-fit problem with a neural network

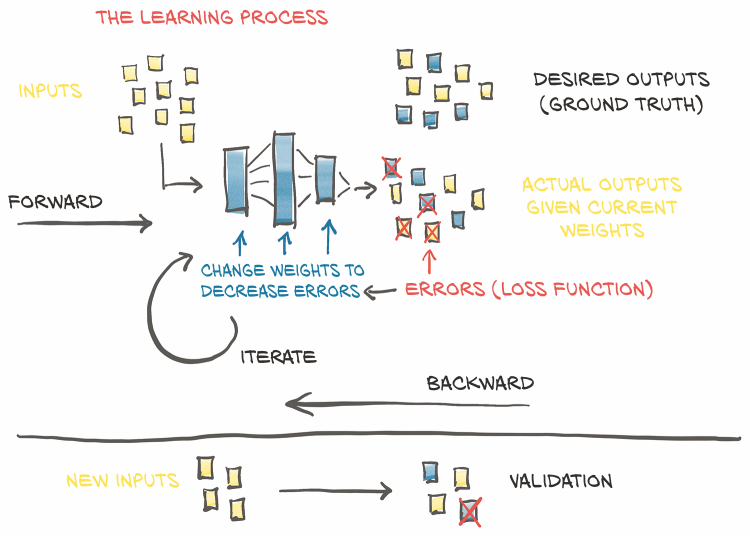

So far, we’ve taken a close look at how a linear model can learn and how to make that happen in PyTorch. We’ve focused on a very simple regression problem that used a linear model with only one input and one output. Such a simple example allowed us to dissect the mechanics of a model that learns without getting distracted by the implementation of the model itself. As we saw in the overview diagram in chapter 5, figure 5.2 (repeated here as figure 6.1), the exact details of a model are not needed to understand the high-level process that trains it. Backpropagating errors to the parameters and then updating those parameters using the gradient of the loss with respect to them is the same no matter what the underlying model is.

Figure 6.1 Our mental model of the learning process, as implemented in chapter 5.
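To make the point about model independence concrete, here is a minimal sketch of a training loop that never inspects the model it trains. It is an illustration under stated assumptions rather than the chapter's own listing: the helper name `training_loop`, the toy data, the learning rate, and the layer sizes are all made up for this example, and it uses `torch.nn` building blocks ahead of their introduction later in the chapter.

```python
import torch


def training_loop(n_epochs, optimizer, model, loss_fn, x, y):
    """Generic loop: forward pass, loss, backward pass, parameter update."""
    for _ in range(n_epochs):
        y_pred = model(x)           # forward pass through whatever model we got
        loss = loss_fn(y_pred, y)   # scalar measure of the error
        optimizer.zero_grad()       # clear gradients left over from the last step
        loss.backward()             # backpropagate: d(loss)/d(parameter)
        optimizer.step()            # update each parameter using its gradient
    return loss.item()


torch.manual_seed(0)

# Toy data (an assumption for this sketch): points on the line y = 2x + 0.5.
x = torch.linspace(-1.0, 1.0, 20).unsqueeze(1)   # shape (20, 1)
y = 2.0 * x + 0.5

# Two different models, one unchanged training loop.
linear_model = torch.nn.Linear(1, 1)
small_net = torch.nn.Sequential(
    torch.nn.Linear(1, 8),
    torch.nn.Tanh(),
    torch.nn.Linear(8, 1),
)

final_losses = {}
for name, model in [("linear", linear_model), ("network", small_net)]:
    optimizer = torch.optim.SGD(model.parameters(), lr=0.1)
    final_losses[name] = training_loop(
        500, optimizer, model, torch.nn.MSELoss(), x, y
    )
```

The loop only requires that `model` be callable on `x` and that its parameters be registered with the optimizer. Swapping the linear model for a small network changes what `model(x)` computes, not how the loss is backpropagated or how the parameters are updated.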