chapter three

3 End-to-end Transformer Fine Tuning

This chapter covers

- How to specialize a very small language model to generate working Manim code.

- The hyperparameter tuning process for Transformer fine tuning.

- How to evaluate the quality of the generated code.

This chapter walks through an end-to-end example of fine tuning a very small model (GPT-2 small) on a specific task. Everything explained here also applies to fine tuning any GPT-like model on generative tasks.

3.1 Data preparation

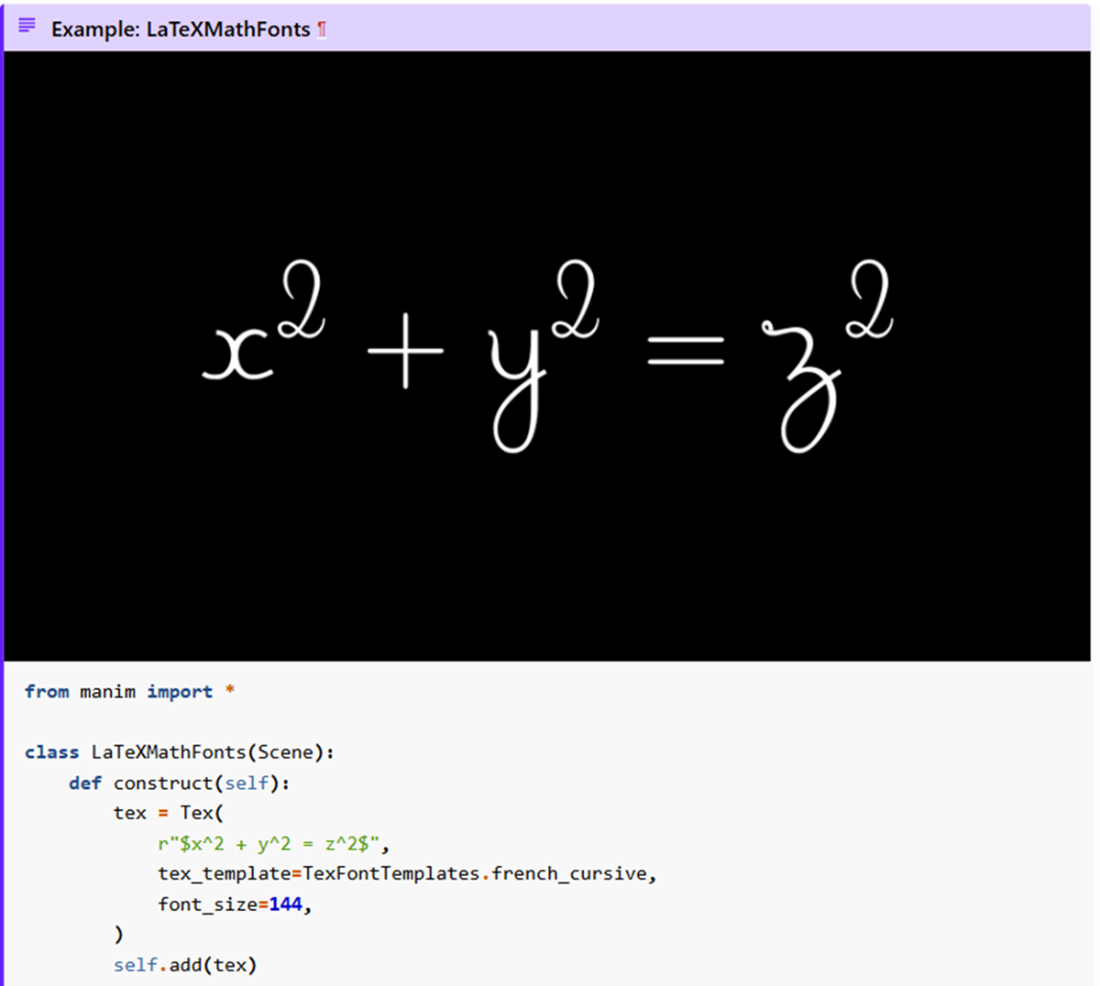

Our goal in this chapter is to fine tune the GPT-2 small model (https://huggingface.co/openai-community/gpt2) to generate working Manim code starting from a prompt in natural language (English). Manim (https://github.com/ManimCommunity/manim) is an Open Source Python animation engine for explanatory math videos. It's used to create precise animations programmatically. Figure 3.1 shows an example of Manim code and the corresponding rendering:

Figure 3.1 An example of Manim code (bottom) and the corresponding rendering (top).
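
To make the task concrete, the following sketch shows one way a single training example could pair an English prompt with the Manim code it should produce. It is an illustration under stated assumptions, not the data format used in this chapter: the `### Prompt:` and `### Manim code:` markers, the `build_example` helper, and the sample prompt and scene are all hypothetical. The only detail taken from GPT-2 itself is its `<|endoftext|>` token, used here to mark the end of an example. The Manim code is kept as a string and only checked for valid Python syntax, so the sketch runs without Manim installed.

```python
import ast

# Hypothetical markers separating the prompt from the code (an assumption,
# not the format used in the chapter).
PROMPT_TAG = "### Prompt:"
CODE_TAG = "### Manim code:"

# GPT-2's end-of-text token, used here to mark the end of one example.
EOS = "<|endoftext|>"


def build_example(prompt: str, code: str) -> str:
    """Join a prompt and its Manim code into one training string."""
    # Reject targets that are not even syntactically valid Python.
    # This does not prove the scene renders, only that it parses.
    ast.parse(code)
    return f"{PROMPT_TAG}\n{prompt.strip()}\n{CODE_TAG}\n{code.strip()}\n{EOS}"


# Illustrative pair; the scene is written against the Manim Community API.
prompt = "Draw a blue circle."
code = '''
from manim import Scene, Circle, Create, BLUE

class BlueCircle(Scene):
    def construct(self):
        circle = Circle(color=BLUE)
        self.play(Create(circle))
'''

example = build_example(prompt, code)
print(example)
```

A syntax check like this is a cheap first filter when assembling prompt and code pairs; whether the code actually renders the requested animation has to be verified separately by running Manim.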