4 Scaling with The Compute Layer

This chapter covers:

- Designing scalable infrastructure that allows data scientists to handle computationally demanding projects.

- Choosing a cloud-based compute layer that matches your needs.

- Configuring and using compute layers in Metaflow.

- Developing robust workflows that handle failures gracefully.

What are the most fundamental building blocks of all data science projects? First, by definition data science projects use data. There isn’t always huge amounts of data involved but arguably you can’t do machine learning and data science without any data. Second, the science part of data science implies that we do something with the data, that is, we execute some computation to process data.

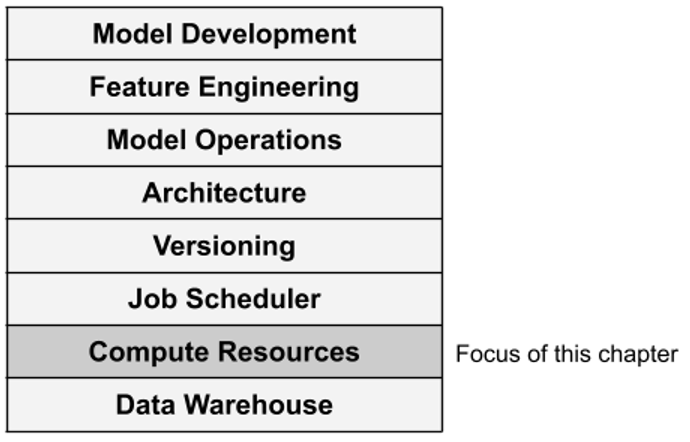

Correspondingly, data and compute are the two most foundational layers of our data science infrastructure stack, depicted in Figure 4.1.

Figure 4.1. Data science infrastructure stack with the compute layer highlighted

How to manage and access data is such a deep and broad topic that we postpone an in-depth discussion about it until Chapter 8. In this chapter, we focus on the compute layer which answers a seemingly simple question: After a data scientist has defined a piece of code, such as a step in a workflow, where should we execute it?