Chapter 1. Deep learning and language: the basics

Chapter 2 from Deep Learning for Natural Language Processing by Stephan Raaijmakers

This chapter covers

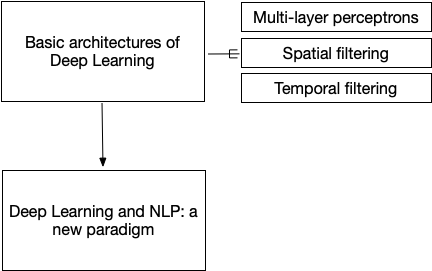

- The fundamental architectures of deep learning: multilayer perceptrons, and spatial and temporal filtering.

- Initial practical exposure to deep learning models for natural language processing.

After reading this chapter, you will have a clear idea of how deep learning works, why it is different from other machine learning approaches, and what it brings to the field of natural language processing. As a prerequisite, you should be familiar with the Keras (Python) library for deep learning. This chapter introduces some high-level Keras concepts and their details through examples. A more in-depth tutorial on Keras can be found in Appendix 1. This book does not pretend to be a full and systematic introduction to deep learning. Such a thorough introduction can be found in the Manning book Deep Learning with Python.

The following diagram shows the organization of this chapter.

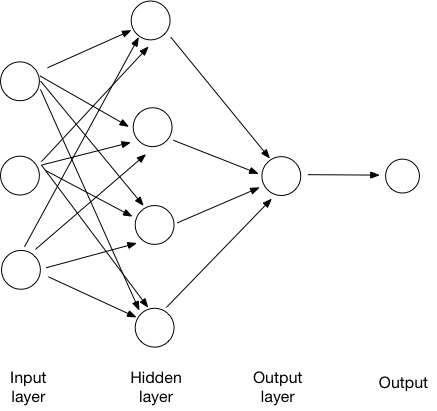

The prototypical deep learning network is a multilayer perceptron (MLP). The figure below shows a simple MLP with a single hidden layer.

Figure 2.1. A generic Multilayer Perceptron (MLP).
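To make the structure of a single-hidden-layer MLP concrete, here is a minimal sketch of its forward pass. It is written in plain NumPy rather than Keras so that every step is visible. The layer sizes, the sigmoid activation, and the random weights are illustrative assumptions, not values taken from the figure.

```python
import numpy as np


def sigmoid(x):
    """Squash values into the open interval (0, 1)."""
    return 1.0 / (1.0 + np.exp(-x))


def mlp_forward(x, w_hidden, b_hidden, w_out, b_out):
    """Forward pass of an MLP with one hidden layer.

    x has shape (batch, n_inputs); the result has shape (batch, n_outputs).
    """
    # Hidden layer: weighted sum of the inputs followed by a nonlinearity.
    hidden = sigmoid(x @ w_hidden + b_hidden)
    # Output layer: weighted sum of the hidden activations, squashed again.
    return sigmoid(hidden @ w_out + b_out)


# Illustrative sizes (assumptions): 3 inputs, 4 hidden units, 1 output.
n_inputs, n_hidden, n_outputs = 3, 4, 1

rng = np.random.default_rng(0)
w_hidden = rng.normal(size=(n_inputs, n_hidden))
b_hidden = np.zeros(n_hidden)
w_out = rng.normal(size=(n_hidden, n_outputs))
b_out = np.zeros(n_outputs)

# A batch of two random input vectors.
batch = rng.normal(size=(2, n_inputs))
output = mlp_forward(batch, w_hidden, b_hidden, w_out, b_out)
print(output.shape)  # (2, 1)
```

The weights here are random and untrained, so the outputs carry no meaning. The sketch only illustrates how data flows from the input layer through the hidden layer to the output layer.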