Appendix A. Fundamentals of classical ML for fraud detection

This appendix covers

- Types of classical machine learning models

- Typical machine learning lifecycle

- Fixing class imbalance in fraud datasets

In this appendix, we explore different types of classical ML models and examine how the ML lifecycle works, particularly in the context of fraud detection. Finally, we take a closer look at the class imbalance problem in fraud detection and ways of tackling it.

A.1 Types of ML models

Many classical machine learning models exist today, suited to a variety of tasks. In this section, we focus on the ones that are particularly useful in fraud detection. This is not an exhaustive survey of all possible fraud detection models, but rather a brief overview of some of the most commonly used ones.

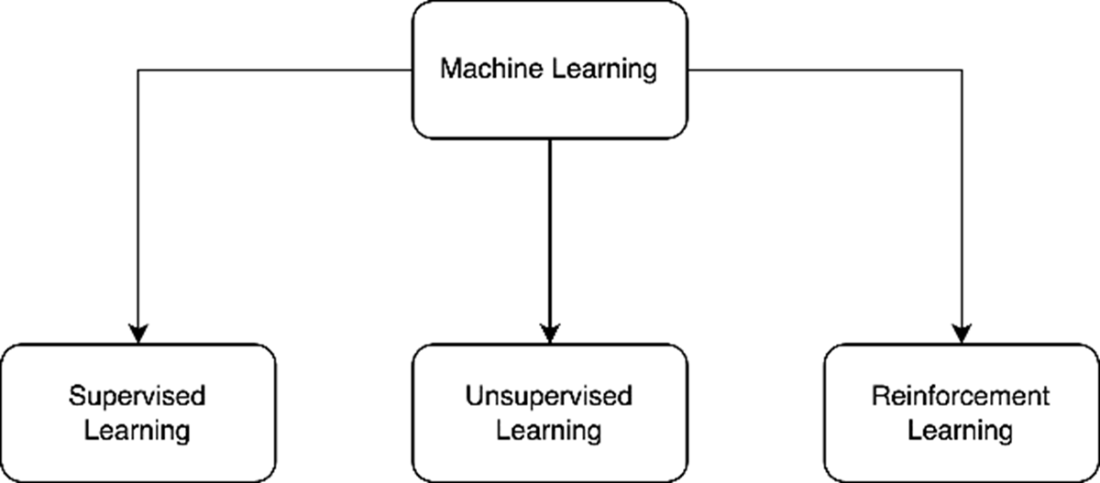

Figure A.1 Subdivisions of machine learning

At its core, machine learning is about learning patterns within data and using these patterns to make decisions or predictions for a given task. Machine learning is divided into a few categories based on how the inputs and outputs are defined, as shown in figure A.1: