Appendix C. Example of a Deep Generative Model

Deep generative models are generative models that use artificial neural networks to model the data space. A classic example of a deep generative model is the autoencoder. In this appendix, we give an overview of autoencoders and show how to use them to train a model for predicting digits in MNIST. This appendix supports Chapter 5 and is intended for readers who have less experience with generative models.

C.1 Autoencoders

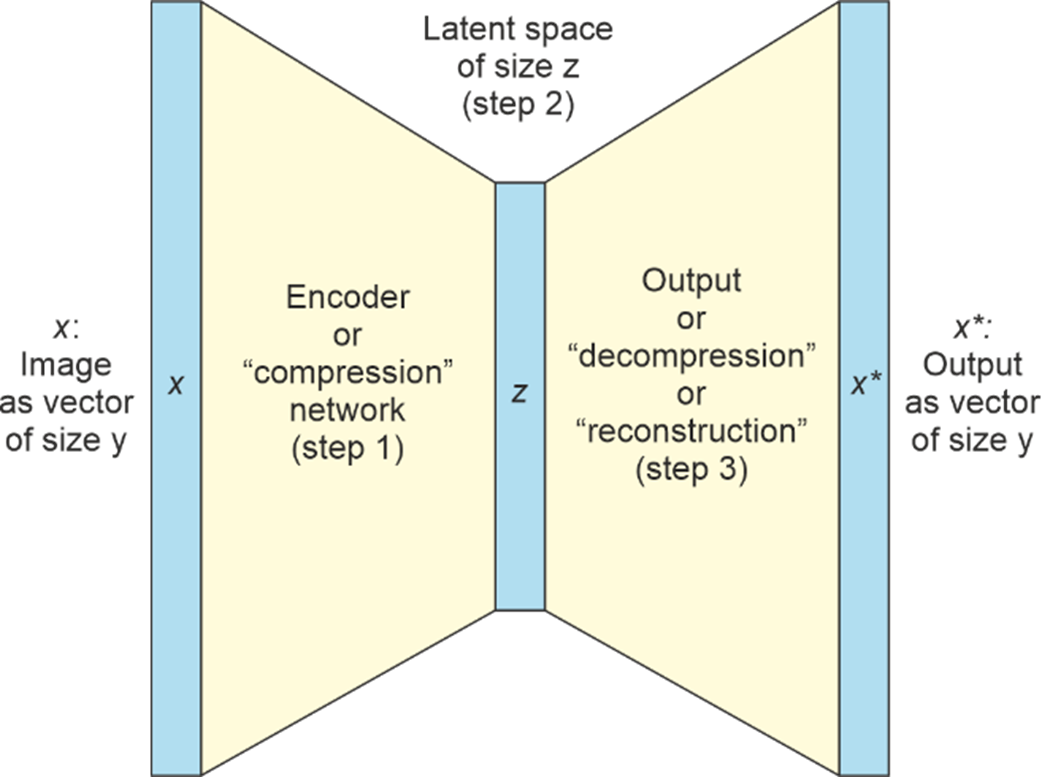

Autoencoders contain two key components, the encoder and the decoder, both represented by neural networks. They learn how to take data and encode (compress) it into a low-dimensional representation, as well as how to decode (uncompress) it again. Figure C.1 shows a basic autoencoder taking a large image as input and compressing it (step 1). This results in the low-dimensional representation, or latent space (step 2). The autoencoder then reconstructs the image (step 3). During training, this process is repeated until the reconstruction error between the input image (x) and the output image (x*) is as small as possible.

Figure C.1 Structure of an autoencoder - taken from “GANs in Action”
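
The encode, reconstruct, and minimise-the-error loop described above can be illustrated with a minimal NumPy sketch. It is not the model used in this appendix. As simplifying assumptions, the encoder and decoder are each a single linear layer, the "images" are synthetic 16-value vectors rather than MNIST digits, and the reconstruction error is taken to be the mean squared error. The names `encode`, `decode`, `reconstruction_error`, and `train_autoencoder`, as well as the learning rate and step count, are hypothetical choices made for illustration.

```python
import numpy as np


def encode(x, w_enc):
    """Step 1: compress the input into the latent space (step 2)."""
    return x @ w_enc


def decode(z, w_dec):
    """Step 3: reconstruct the input x* from the latent representation."""
    return z @ w_dec


def reconstruction_error(x, x_star):
    """Mean squared error between input x and reconstruction x*."""
    return np.mean((x - x_star) ** 2)


def train_autoencoder(x, n_latent, lr=0.02, steps=2000, seed=0):
    """Fit a linear encoder/decoder pair by plain gradient descent."""
    rng = np.random.default_rng(seed)
    n_pixels = x.shape[1]
    w_enc = rng.normal(scale=0.1, size=(n_pixels, n_latent))
    w_dec = rng.normal(scale=0.1, size=(n_latent, n_pixels))
    for _ in range(steps):
        z = encode(x, w_enc)
        x_star = decode(z, w_dec)
        # Gradient of the mean squared error with respect to x*.
        grad_out = 2.0 * (x_star - x) / x.size
        # Backpropagate through the decoder, then through the encoder.
        grad_dec = z.T @ grad_out
        grad_enc = x.T @ (grad_out @ w_dec.T)
        w_dec -= lr * grad_dec
        w_enc -= lr * grad_enc
    return w_enc, w_dec


# Synthetic stand-in for image data (an assumption, not MNIST): 200 samples
# of 16 "pixels" that vary along only 2 hidden directions.
data_rng = np.random.default_rng(1)
x = data_rng.normal(size=(200, 2)) @ data_rng.normal(size=(2, 16))

# Error of an untrained autoencoder (zero training steps), for comparison.
w_enc0, w_dec0 = train_autoencoder(x, n_latent=2, steps=0)
initial_error = reconstruction_error(x, decode(encode(x, w_enc0), w_dec0))

w_enc, w_dec = train_autoencoder(x, n_latent=2)
z = encode(x, w_enc)        # low-dimensional representation
x_star = decode(z, w_dec)   # reconstruction
final_error = reconstruction_error(x, x_star)

print("latent shape:", z.shape)
print("error before training:", initial_error)
print("error after training:", final_error)
```

Each 16-value input is squeezed through a 2-value latent vector, so the decoder can only reconstruct it well if the encoder keeps the directions along which the data actually varies. A practical autoencoder would add non-linear layers and would usually be built with a deep learning framework rather than hand-written gradients.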

To learn more about autoencoders and how they work, we will apply them to one of the most famous datasets in machine learning, the MNIST digits dataset.