4 Graph Attention Networks (GATs)

This chapter covers

- The notion of attention and how it is applied to GNNs

- The GAT and GATv2 layers in PyG and when to use them

- How to use mini-batching via the NeighborLoader class

- Implementing and applying GAT layers in a spam detection problem

In the last chapter, we examined convolutional GNNs, focusing on GCN and GraphSAGE. In this chapter, we extend our discussion of convolutional GNNs by looking at a special variant of such models, the Graph Attention Network.

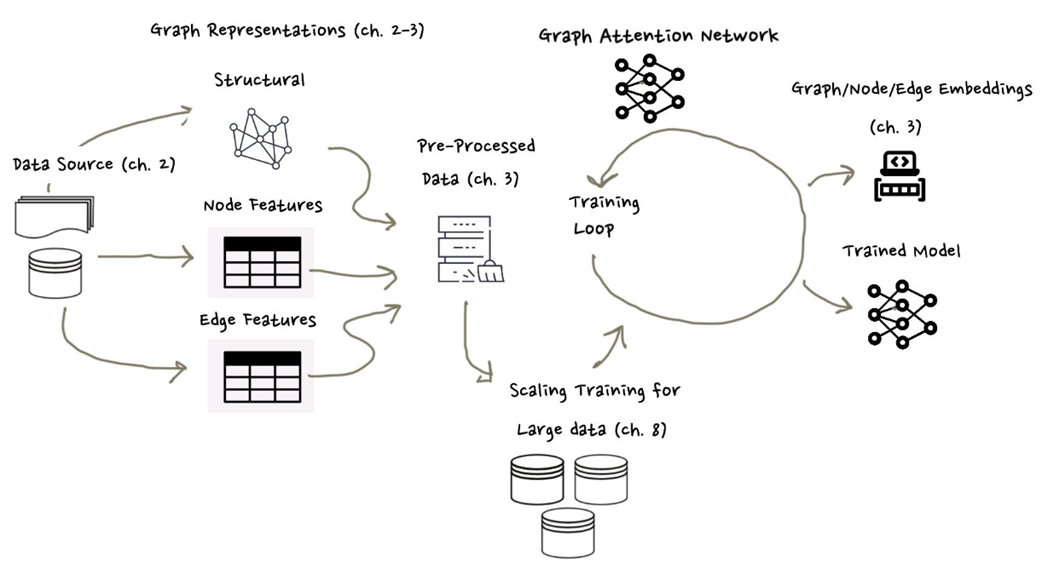

Figure 4.1 Mental model of GNN training process, with the subject of GATs in context.

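As in the previous chapter, our goal is to understand and apply a family of GNNs, in this case Graph Attention Networks (GATs). While these GNNs use convolution as introduced in the previous chapter, they extend this idea with an attention mechanism that learns how much weight to give each neighboring node during aggregation, highlighting the neighbors that matter most for the task. This contrasts with conventional convolutional GNNs, which weight neighbors with fixed coefficients rather than learned ones: equal weights in a mean aggregation, or degree-based normalization in GCN.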
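To make the contrast concrete, here is a minimal plain-Python sketch of single-head attention scoring in the style of the original GAT formulation: each neighbor j of node i gets a score LeakyReLU(aᵀ[W·h_i ‖ W·h_j]), the scores are normalized with a softmax, and the transformed neighbor features are summed with those weights. The toy graph, the transform `W`, and the attention vector `a` are hypothetical values chosen for illustration (in a real GAT layer, such as PyG's `GATConv`, `W` and `a` are learned), and the helper functions are not part of any library. The same neighbors are also aggregated with uniform weights for comparison.

```python
import math

def matvec(W, x):
    """Multiply matrix W (list of rows) by vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def leaky_relu(z, slope=0.2):
    """LeakyReLU with the 0.2 negative slope used in the GAT paper."""
    return z if z > 0 else slope * z

def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def gat_attention(h, neighbors, i, W, a):
    """Single-head, GAT-style attention coefficients of node i over its neighbors."""
    wh_i = matvec(W, h[i])
    scores = []
    for j in neighbors:
        wh_j = matvec(W, h[j])
        # a^T [W h_i || W h_j] (list + list concatenates), then LeakyReLU
        scores.append(leaky_relu(sum(ak * v for ak, v in zip(a, wh_i + wh_j))))
    return softmax(scores)

def aggregate(h, neighbors, W, weights):
    """Weighted sum of the transformed neighbor features."""
    out = [0.0] * len(W)
    for wgt, j in zip(weights, neighbors):
        for k, v in enumerate(matvec(W, h[j])):
            out[k] += wgt * v
    return out

# Hypothetical toy graph: node 0 with neighbors 0 (self-loop), 1, and 2
h = {0: [1.0, 0.0], 1: [0.0, 1.0], 2: [1.0, 1.0]}
neighbors = [0, 1, 2]
W = [[1.0, 0.0], [0.0, 1.0]]  # identity transform, for readability
a = [0.0, 0.0, 1.0, -1.0]     # hypothetical attention vector, length 2 * out_dim

alpha = gat_attention(h, neighbors, 0, W, a)
uniform = [1 / len(neighbors)] * len(neighbors)

print("attention weights:", [round(x, 3) for x in alpha])
print("uniform weights:  ", [round(x, 3) for x in uniform])
print("attention output:", aggregate(h, neighbors, W, alpha))
print("mean output:     ", aggregate(h, neighbors, W, uniform))
```

With these toy values the attention weights come out to roughly 0.60, 0.18, and 0.22, so node 0's own features dominate its update, whereas the mean aggregation gives every neighbor one third. The point of the sketch is that the weights depend on the node features through `W` and `a`, so training can shift them; a fixed-coefficient aggregation has no such knob.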

As with convolution, attention is a widely used mechanism in deep learning outside of GNNs. Architectures that rely on attention (particularly transformers) have seen such success in addressing natural language problems that they now dominate the field. It remains to be seen if attention will have a similar impact in the graph world.