chapter eleven

11 Large Language Models (LLMs)

This chapter covers

- Understanding the intuition of large language models

- Identifying and preparing LLM training data

- Understanding in depth the operations involved in training a large language model

- Exploring implementation details and LLM tuning approaches

11.1 What are large language models?

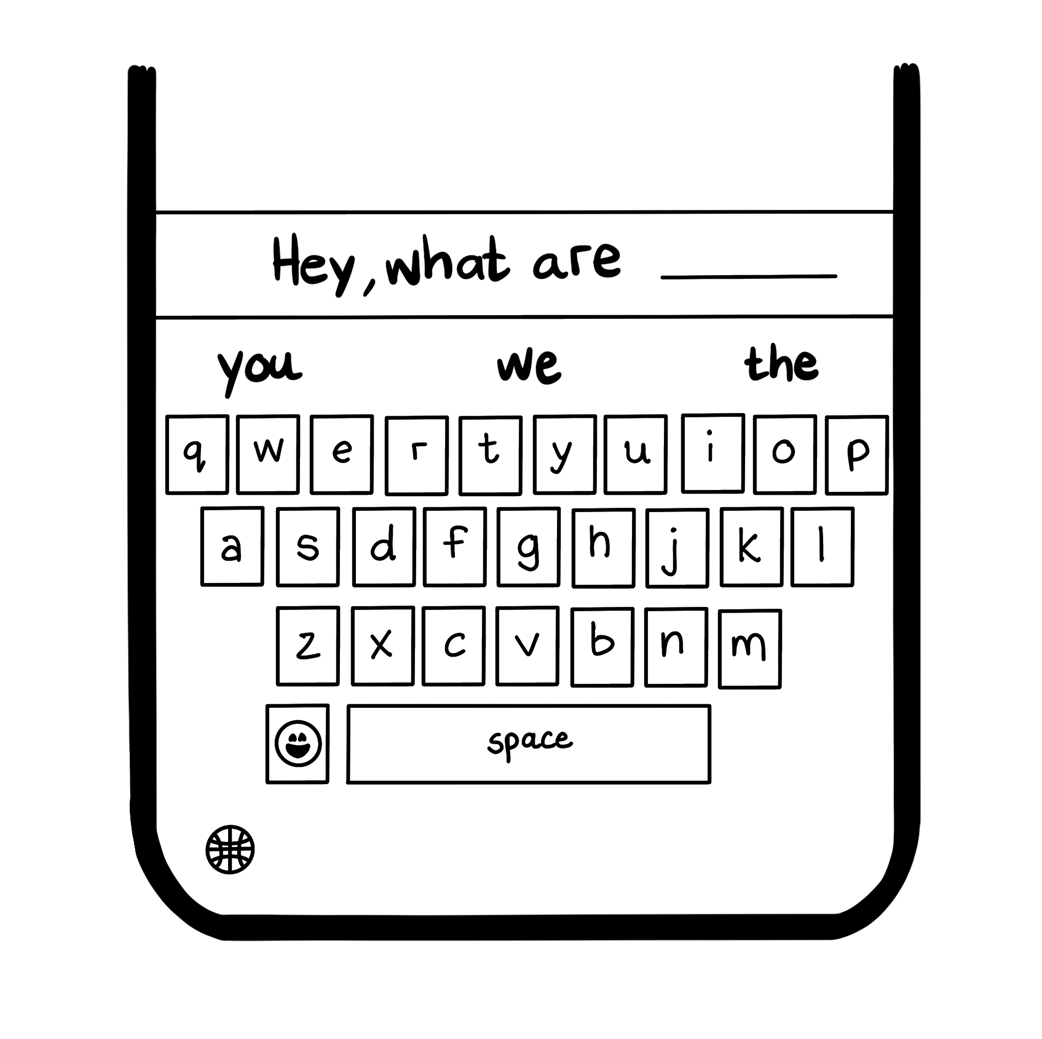

Large language models (LLMs) are machine learning models that are specialized for natural language processing problems, like language generation. Consider the autocomplete feature on your mobile device’s keyboard (figure 11.1). When you start typing “Hey, what are…”, the keyboard likely predicts that the next word is “you”, “we”, or “the”, because these are the most common next words after the phrase. It makes this choice by scanning a table of probabilities that was trained on commonly available pieces of content - this simple table is a language model.

Figure 11.1 Example of autocomplete as a language model
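To make the table idea concrete, here is a minimal sketch of such a lookup table in Python. It is an illustration under stated assumptions, not how any particular keyboard is implemented: the toy `CORPUS`, the helper names `build_table` and `suggest`, and the choice to condition only on the single previous word (a bigram table) are all hypothetical simplifications.

```python
from collections import Counter, defaultdict

# Hypothetical toy corpus; a real keyboard would learn from far more text.
CORPUS = [
    "hey what are you doing",
    "hey what are you up to",
    "what are we having for dinner",
    "what are the plans for today",
    "what are you thinking",
]


def build_table(sentences):
    """Count which word follows each word, then turn counts into probabilities."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for current, following in zip(words, words[1:]):
            counts[current][following] += 1

    table = {}
    for current, followers in counts.items():
        total = sum(followers.values())
        table[current] = {word: n / total for word, n in followers.items()}
    return table


def suggest(table, text, k=3):
    """Return up to k most probable next words, given only the last word typed."""
    words = text.lower().split()
    if not words:
        return []
    followers = table.get(words[-1], {})
    # Sort by descending probability; break ties alphabetically for stable output.
    ranked = sorted(followers.items(), key=lambda item: (-item[1], item[0]))
    return [word for word, _ in ranked[:k]]


table = build_table(CORPUS)
print(table["are"])                       # probabilities of words seen after "are"
print(suggest(table, "Hey what are"))     # ['you', 'the', 'we']
```

In this toy corpus, “you” follows “are” three times out of five, so it ranks first, while “the” and “we” tie at one occurrence each. The table only ever looks one word back and proposes one word forward, which is the limitation worth keeping in mind for the rest of the chapter.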

A large language model (LLM) is exactly the same idea, with some fundamental upgrades to enable interesting capabilities that come with predicting more than one word at a time: