Generative adversarial networks (GANs) are a new type of neural architecture introduced by Ian Goodfellow and other researchers at the University of Montreal, including Yoshua Bengio, in 2014.1 GANs have been called “the most interesting idea in the last 10 years in ML” by Yann LeCun, Facebook’s AI research director. The excitement is well justified. The most notable feature of GANs is their capacity to create hyperrealistic images, videos, music, and text. For example, except for the far-right column, none of the faces shown on the right side of figure 8.1 belong to real humans; they are all fake. The same is true for the handwritten digits on the left side of the figure. This shows a GAN’s ability to learn features from the training images and imagine its own new images using the patterns it has learned.

Figure 8.1 Illustration of GANs’ abilities by Goodfellow and co-authors. These are samples generated by GANs after training on two datasets: MNIST and the Toronto Faces Dataset (TFD). In both cases, the right-most column contains true data. This shows that the produced data is really generated and not only memorized by the network. (Source: Goodfellow et al., 2014.)

We've learned in the past chapters how deep neural networks can be used to understand image features and perform discriminative tasks on them, like object classification and detection. In this part of the book, we will talk about a different type of application for deep learning in the computer vision world: generative models. These are neural network models that are able to imagine and produce new content that hasn't been created before. They can imagine new worlds, new people, and new realities in a seemingly magical way. We train generative models by providing a training dataset in a specific domain; their job is to create images that contain new objects from the same domain and look like the real data.

For a long time, humans have had an advantage over computers: the ability to imagine and create. Computers have excelled at solving problems like regression, classification, and clustering. But with the introduction of generative networks, researchers can make computers generate content of the same or higher quality compared to that created by their human counterparts. By learning to mimic any distribution of data, computers can be taught to create worlds that are similar to our own in any domain: images, music, speech, prose. They are robot artists, in a sense, and their output is impressive. GANs are also seen as an important stepping stone toward achieving artificial general intelligence (AGI), an artificial system capable of matching the human cognitive capacity to acquire expertise in virtually any domain, from images, to language, to the creative skills needed to compose sonnets.

Naturally, this ability to generate new content makes GANs look a little bit like magic, at least at first sight. In this chapter, we will only attempt to scratch the surface of what is possible with GANs. We will look past the apparent magic of GANs and dive into the architectural ideas and math behind these models, in order to provide the theoretical knowledge and practical skills you need to continue exploring whichever facet of this field you find most interesting. Not only will we discuss the fundamental notions that GANs rely on, but we will also implement and train an end-to-end GAN and go through it step by step. Let's get started!

GANs are based on the idea of adversarial training. The GAN architecture basically consists of two neural networks that compete against each other:

- The generator tries to convert random noise into observations that look as if they have been sampled from the original dataset.

- The discriminator tries to predict whether an observation comes from the original dataset or is one of the generator's forgeries.

Cbjz epivmseniettsco epshl dorm rv cmiim nsq biuttdsoniir lk data. J jofv rk tkhni lk org QTQ tcutriecaher zs wkr oxrbse gtgifinh (fegiur 8.2): nj hiter esutq re jwn rvp hrux, rhky cto learning zksp hestro’ ovesm spn eqiutesnhc. Yvdy sartt jwrp fxac ognlkdwee outab eriht ponptneo, nsb zc xru atmhc ovpa xn, rgux rlena bnc bcmeoe tebert.

Another analogy will help drive home the idea: think of a GAN as the opposition of a counterfeiter and a cop in a game of cat and mouse, where the counterfeiter is learning to pass false notes and the cop is learning to detect them (figure 8.3). Both are dynamic: as the counterfeiter learns to perfect creating false notes, the cop is in training and getting better at detecting the fakes. Each side learns the other's methods in a constant escalation.

Figure 8.3 The GAN’s generator and discriminator models are like a counterfeiter and a police officer.

- The generator takes in random numbers and returns an image.

- This generated image is fed into the discriminator alongside a stream of images taken from the actual, ground-truth dataset.

- The discriminator takes in both real and fake images and returns probabilities: numbers between 0 and 1, with 1 representing a prediction of authenticity and 0 representing a prediction of fake.
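To make this data flow concrete, here is a minimal NumPy sketch of a single forward pass. It is not the chapter's Keras model: the "generator" is a hypothetical, untrained random linear map from a 100-dimensional noise vector to a 28 × 28 image, and the "discriminator" is a hypothetical, untrained logistic scorer. The only points it demonstrates are the shapes involved and the fact that a sigmoid output always lies between 0 and 1.

```python
import numpy as np

rng = np.random.default_rng(0)
NOISE_DIM, H, W = 100, 28, 28

# Hypothetical, untrained parameters (illustration only)
gen_weights = rng.normal(0.0, 0.05, size=(NOISE_DIM, H * W))
disc_weights = rng.normal(0.0, 0.05, size=(H * W,))

def toy_generator(noise):
    """Map a batch of noise vectors to a batch of images with pixels in [-1, 1]."""
    return np.tanh(noise @ gen_weights).reshape(-1, H, W)

def toy_discriminator(images):
    """Return one probability per image: near 1 means 'real', near 0 means 'fake'."""
    logits = images.reshape(len(images), -1) @ disc_weights
    return 1.0 / (1.0 + np.exp(-logits))

noise = rng.normal(0.0, 1.0, size=(16, NOISE_DIM))      # 16 random input vectors
fake_images = toy_generator(noise)                      # shape (16, 28, 28)
real_images = rng.uniform(-1.0, 1.0, size=(16, H, W))   # stand-in for real training images
p_fake = toy_discriminator(fake_images)
p_real = toy_discriminator(real_images)
print(fake_images.shape, p_fake.round(2))
```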

Figure 8.4 The GAN architecture is composed of generator and discriminator networks. Note that the discriminator network is a typical CNN where the convolutional layers reduce in size until they get to the flattened layer. The generator network, on the other hand, is an inverted CNN that starts with the flattened vector: the convolutional layers increase in size until they form the dimension of the input images.

If you take a close look at the generator and discriminator networks, you will notice that the generator network is an inverted ConvNet that starts with the flattened vector. The images are upscaled until they are similar in size to the images in the training dataset. We will dive deeper into the generator architecture later in this chapter; for now, just notice this pattern.

In the original GAN paper in 2014, multilayer perceptron (MLP) networks were used to build the generator and discriminator networks. Since then, however, it has been shown that convolutional layers give greater predictive power to the discriminator, which in turn enhances the accuracy of the generator and the overall model. This type of GAN is called a deep convolutional GAN (DCGAN) and was developed by Alec Radford et al. in 2016.2 Now, all GAN architectures contain convolutional layers, so the “DC” is implied when we talk about GANs; for the rest of this chapter, we use both GAN and DCGAN to refer to DCGANs. You can also go back to chapters 2 and 3 to learn more about the differences between MLP and CNN networks and why CNNs are preferred for image problems. Next, let's dive deeper into the architecture of the discriminator and generator networks.

As explained earlier, the goal of the discriminator is to predict whether an image is real or fake. This is a typical supervised classification problem, so we can use the traditional classifier network that we learned about in the previous chapters. The network consists of stacked convolutional layers, followed by a dense output layer with a sigmoid activation function. We use a sigmoid activation function because this is a binary classification problem: the network's goal is to output a prediction probability between 0 and 1, where 0 means the discriminator judges the image to be fake (produced by the generator) and 1 means it is certain the image is real.

The discriminator is a normal, well-understood classification model. As you can see in figure 8.5, training the discriminator is pretty straightforward. We feed the discriminator labeled images: fake (or generated) and real images. The real images come from the training dataset, and the fake images are the output of the generator model.
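As a minimal sketch of what "labeled images" means here, the NumPy helper below (a hypothetical name, not part of the chapter's project) stacks a batch of real images labeled 1 with a batch of fake images labeled 0 and shuffles them together. The image arrays are random stand-ins. The chapter's Keras code instead calls train_on_batch separately on the real and fake batches; concatenating them like this is just one alternative.

```python
import numpy as np

def make_discriminator_batch(real_images, fake_images, rng):
    """Stack real and fake images with labels 1 (real) and 0 (fake), then shuffle."""
    images = np.concatenate([real_images, fake_images], axis=0)
    labels = np.concatenate([np.ones(len(real_images)), np.zeros(len(fake_images))])
    order = rng.permutation(len(images))  # shuffle images and labels with the same order
    return images[order], labels[order]

rng = np.random.default_rng(42)
real = rng.uniform(-1, 1, size=(8, 28, 28))   # stand-in for training images
fake = rng.uniform(-1, 1, size=(8, 28, 28))   # stand-in for generator output
x_batch, y_batch = make_discriminator_batch(real, fake, rng)
print(x_batch.shape, y_batch)
```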

Now, let's implement the discriminator network in Keras. At the end of this chapter, we will compile all the code snippets together to build an end-to-end GAN. We will first implement a discriminator_model function. In this code snippet, the shape of the image input is 28 × 28; you can change it as needed for your problem:

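from tensorflow.keras.models import Sequential, Model
from tensorflow.keras.layers import (Input, Dense, Dropout, Flatten, Conv2D,
                                     ZeroPadding2D, BatchNormalization, LeakyReLU)

def discriminator_model():
    discriminator = Sequential()  # instantiate a sequential model named discriminator
    # Block 1: 32 filters; stride 2 halves the 28 x 28 input to 14 x 14
    discriminator.add(Conv2D(32, kernel_size=3, strides=2,
                             input_shape=(28, 28, 1), padding="same"))
    discriminator.add(LeakyReLU(alpha=0.2))   # leaky ReLU activation
    discriminator.add(Dropout(0.25))          # dropout with a 25% drop probability
    # Block 2: 64 filters, stride 2 (14 x 14 -> 7 x 7), then zero-pad to 8 x 8
    discriminator.add(Conv2D(64, kernel_size=3, strides=2, padding="same"))
    discriminator.add(ZeroPadding2D(padding=((0, 1), (0, 1))))
    discriminator.add(BatchNormalization(momentum=0.8))
    discriminator.add(LeakyReLU(alpha=0.2))
    discriminator.add(Dropout(0.25))
    # Block 3: 128 filters, stride 2 (8 x 8 -> 4 x 4)
    discriminator.add(Conv2D(128, kernel_size=3, strides=2, padding="same"))
    discriminator.add(BatchNormalization(momentum=0.8))
    discriminator.add(LeakyReLU(alpha=0.2))
    discriminator.add(Dropout(0.25))
    # Block 4: 256 filters, stride 1 keeps the 4 x 4 size
    discriminator.add(Conv2D(256, kernel_size=3, strides=1, padding="same"))
    discriminator.add(BatchNormalization(momentum=0.8))
    discriminator.add(LeakyReLU(alpha=0.2))
    discriminator.add(Dropout(0.25))
    # Flatten and add a single-unit dense output layer with a sigmoid activation
    discriminator.add(Flatten())
    discriminator.add(Dense(1, activation='sigmoid'))
    discriminator.summary()            # print the model summary
    img_shape = (28, 28, 1)            # input image shape
    img = Input(shape=img_shape)
    probability = discriminator(img)   # run the discriminator to get the output probability
    return Model(img, probability)     # model: image in, probability out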

The output summary of the discriminator model is shown in figure 8.6. As you might have noticed, there is nothing new: the discriminator model follows the regular pattern of the traditional CNNs that we learned about in chapters 3, 4, and 5. We stack convolutional, batch normalization, activation, and dropout layers to create our model. All of these layers have hyperparameters that we tune when we are training the network. For your own implementation, you can tune these hyperparameters and add or remove layers as you see fit. Tuning CNN hyperparameters is explained in detail in chapters 3 and 4.

In the output summary in figure 8.6, note that the width and height of the output feature maps decrease in size, whereas the depth increases. This is the expected behavior for traditional CNNs, as we've seen in previous chapters. Let's see what happens to the size of the feature maps in the generator network in the next section.
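The shrinking width and height and the growing depth can be checked by hand. This pure-Python sketch (the helper names are hypothetical) traces the shapes through the discriminator_model layers above, using the rule that a Conv2D layer with padding="same" produces ceil(size / stride) along each spatial axis. BatchNormalization, LeakyReLU, and Dropout do not change shapes, so they are skipped.

```python
import math

def conv_same_output(size, stride):
    """Spatial size after a Conv2D with padding='same': ceil(size / stride)."""
    return math.ceil(size / stride)

def discriminator_shapes(input_size=28):
    """Trace (height, width, channels) through the discriminator_model layers."""
    shapes = []
    size = conv_same_output(input_size, 2)   # Conv2D(32, strides=2)
    shapes.append((size, size, 32))
    size = conv_same_output(size, 2)         # Conv2D(64, strides=2)
    shapes.append((size, size, 64))
    size += 1                                # ZeroPadding2D(((0, 1), (0, 1))): one extra row and column
    shapes.append((size, size, 64))
    size = conv_same_output(size, 2)         # Conv2D(128, strides=2)
    shapes.append((size, size, 128))
    size = conv_same_output(size, 1)         # Conv2D(256, strides=1)
    shapes.append((size, size, 256))
    flat = size * size * 256                 # Flatten
    return shapes, flat

shapes, flat = discriminator_shapes()
for s in shapes:
    print(s)
print("flattened:", flat)
```

The trace goes 28 → 14 → 7 → 8 (after padding) → 4 → 4, while the depth grows from 32 to 256.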

The generator takes in some random data and tries to mimic the training dataset to generate fake images. Its goal is to trick the discriminator by trying to generate images that are perfect replicas of the training dataset. As it is trained, it gets better and better after each iteration. But the discriminator is being trained at the same time, so the generator has to keep improving as the discriminator learns its tricks.

As you can see in figure 8.7, the generator model looks like an inverted ConvNet. The generator takes a vector input with some random noise data and reshapes it into a cube volume that has a width, height, and depth. This volume is meant to be treated as a feature map that will be fed to several convolutional layers that will create the final image.

Traditional convolutional neural networks use pooling layers to downsample input images. To scale the feature maps up instead, we use upsampling layers, which enlarge the image dimensions by repeating each row and column of the input pixels.

Keras has an upsampling layer (UpSampling2D) that scales the image dimensions by a scaling factor passed as its size argument:

keras.layers.UpSampling2D(size=(2, 2))

This line of code repeats every row and column of the image matrix two times, because the scaling factor size is set to (2, 2); see figure 8.8. If the scaling factor is (3, 3), the upsampling layer repeats each row and column of the input matrix three times, as shown in figure 8.9.
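The repetition that UpSampling2D performs with its default nearest-neighbor interpolation can be mimicked with NumPy's repeat function. This sketch works on a single 2D array; the real Keras layer operates on batches of (height, width, channels) tensors, and upsample_nearest is a hypothetical helper name.

```python
import numpy as np

def upsample_nearest(image, size=(2, 2)):
    """Repeat each row size[0] times and each column size[1] times."""
    return np.repeat(np.repeat(image, size[0], axis=0), size[1], axis=1)

x = np.array([[1, 2],
              [3, 4]])
print(upsample_nearest(x, (2, 2)))
# [[1 1 2 2]
#  [1 1 2 2]
#  [3 3 4 4]
#  [3 3 4 4]]
print(upsample_nearest(x, (3, 3)).shape)  # (6, 6)
```

No weights are involved: upsampling only enlarges the feature map, and the convolutional layers that follow it learn how to fill in detail.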

When we build the generator model, we keep adding upsampling layers until the size of the feature maps matches the size of the images in the training dataset. Here is how this is implemented in Keras:

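from tensorflow.keras.models import Sequential, Model
from tensorflow.keras.layers import (Input, Dense, Reshape, UpSampling2D, Conv2D,
                                     BatchNormalization, Activation)

def generator_model():
    generator = Sequential()  # instantiate a sequential model named generator
    # Dense layer with 128 * 7 * 7 = 6,272 neurons, fed by a 100-dimensional noise vector
    generator.add(Dense(128 * 7 * 7, activation="relu", input_dim=100))
    generator.add(Reshape((7, 7, 128)))        # reshape the vector into a 7 x 7 x 128 volume
    generator.add(UpSampling2D(size=(2, 2)))   # upsample to 14 x 14
    # convolutional + batch normalization layers
    generator.add(Conv2D(128, kernel_size=3, padding="same"))
    generator.add(BatchNormalization(momentum=0.8))
    generator.add(Activation("relu"))
    generator.add(UpSampling2D(size=(2, 2)))   # upsample to 28 x 28
    # convolutional + batch normalization layers
    generator.add(Conv2D(64, kernel_size=3, padding="same"))
    generator.add(BatchNormalization(momentum=0.8))
    generator.add(Activation("relu"))
    # convolutional layer with filters = 1 (one grayscale channel); tanh keeps pixels in [-1, 1]
    generator.add(Conv2D(1, kernel_size=3, padding="same"))
    generator.add(Activation("tanh"))
    generator.summary()                        # print the model summary
    noise = Input(shape=(100,))                # input noise vector of length 100
    fake_image = generator(noise)              # run the generator to create a fake image
    return Model(noise, fake_image)            # model: noise in, fake image out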

The output summary of the generator model is shown in figure 8.10. In the code snippet, the only new component is the UpSampling2D layer, which doubles its input dimensions by repeating pixels. Similar to the discriminator, we stack convolutional layers on top of each other and add other optimization layers like BatchNormalization. The key difference in the generator model is that it starts with the flattened vector; images are upsampled until they have dimensions similar to the training dataset. All of these layers have hyperparameters that we tune when we are training the network. For your own implementation, you can tune these hyperparameters and add or remove layers as you see fit.

Notice the change in the output shape after each layer. It starts from a 1D vector of 6,272 neurons. We reshape it to a 7 × 7 × 128 volume, and then the width and height are upsampled twice, to 14 × 14 and then to 28 × 28. The depth decreases from 128 to 64 to 1 because this network is built to deal with the grayscale MNIST dataset project that we will implement later in this chapter. If you are building a generator model to generate color images, then you should set the number of filters in the last convolutional layer to 3.
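The same shape progression can be traced without building the model. This pure-Python sketch (generator_shapes is a hypothetical helper) follows the generator_model layers above; every Conv2D uses padding="same" with the default stride of 1, so only the UpSampling2D layers change the width and height, and the out_channels parameter shows the color-image variant described above.

```python
def generator_shapes(start=7, channels=128, out_channels=1):
    """Trace output shapes through the generator_model layers (upsampling factor 2)."""
    shapes = [(channels * start * start,)]     # Dense(128 * 7 * 7)
    size = start
    shapes.append((size, size, channels))      # Reshape((7, 7, 128))
    size *= 2
    shapes.append((size, size, channels))      # UpSampling2D(size=(2, 2))
    shapes.append((size, size, 128))           # Conv2D(128, padding="same")
    size *= 2
    shapes.append((size, size, 128))           # UpSampling2D(size=(2, 2))
    shapes.append((size, size, 64))            # Conv2D(64, padding="same")
    shapes.append((size, size, out_channels))  # Conv2D(out_channels): 1 for grayscale, 3 for color
    return shapes

for shape in generator_shapes():
    print(shape)
```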

Now that we've learned about the discriminator and generator models separately, let's put them together to train an end-to-end generative adversarial network. The discriminator is being trained to become a better classifier that maximizes the probability of assigning the correct label to both training examples (real) and images generated by the generator (fake): for example, the police officer becomes better at differentiating between fake and real currency. The generator, on the other hand, is being trained to become a better forger, maximizing its chances of fooling the discriminator. Both networks are getting better at what they do.

- Train the discriminator. This is a straightforward supervised training process. The network is given labeled images coming from the generator (fake) and the training data (real), and it learns to classify real versus fake images with a sigmoid prediction output. Nothing new here.

- Train the generator. This process is a little tricky. The generator model cannot be trained alone like the discriminator: it needs the discriminator model to tell it whether it did a good job of faking images. So, we create a combined network, composed of both the generator and discriminator models, to train the generator.

Think of the training processes as two parallel lanes. One lane trains the discriminator alone, and the other lane is the combined model that trains the generator. The GAN training process is illustrated in figure 8.11.

As you can see in figure 8.11, when training the combined model, we freeze the weights of the discriminator because this model focuses only on training the generator. We will discuss the intuition behind this idea when we explain the generator training process. For now, just know that we need to build and train two models: one for the discriminator alone and the other for both the discriminator and generator models.

Both processes follow the traditional neural network training process explained in chapter 2. It starts with the feedforward process, makes predictions, and then calculates and backpropagates the error. When training the discriminator, the error is backpropagated to the discriminator model to update its weights; in the combined model, the error is backpropagated to the generator to update its weights.

During the training iterations, we follow the same neural network training procedure: we observe the network's performance and tune its hyperparameters until we see that the generator is achieving satisfying results for our problem. This is when we can stop the training and deploy the generator model. Now, let's see how we compile the discriminator and the combined networks to train the GAN model.

As we said before, this is a straightforward process. First, we build the model from the discriminator_model method that we created earlier in this chapter. Then we compile the model with the binary_crossentropy loss function and an optimizer of your choice (we use Adam in this example).

Let's see the Keras implementation that builds and compiles the discriminator. Note that this code snippet is not meant to run on its own; it is here for illustration. At the end of this chapter, you can find the full code of this project:

discriminator = discriminator_model()
discriminator.compile(loss='binary_crossentropy', optimizer='adam', metrics=['accuracy'])

We can train the model on random training batches using the Keras train_on_batch method, which runs a single gradient update on a single batch of data:

import numpy as np
noise = np.random.normal(0, 1, (batch_size, 100))             # sample a batch of noise vectors
gen_imgs = generator.predict(noise)                            # generate a batch of new (fake) images
d_loss_real = discriminator.train_on_batch(imgs, valid)        # train the discriminator: real images labeled as ones
d_loss_fake = discriminator.train_on_batch(gen_imgs, fake)     # ...and generated images labeled as zeros
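Each train_on_batch call above minimizes binary cross-entropy against a label array: valid (ones) for real images and fake (zeros) for generated ones. The NumPy sketch below uses made-up discriminator outputs to show what those two losses compute; the clipping constant eps is an assumption added to avoid log(0), and the shapes of valid and fake are assumed to be (batch_size, 1).

```python
import numpy as np

def binary_crossentropy(y_true, y_pred, eps=1e-7):
    """Mean binary cross-entropy, clipping predictions away from 0 and 1."""
    y_pred = np.clip(y_pred, eps, 1 - eps)
    return float(np.mean(-(y_true * np.log(y_pred) + (1 - y_true) * np.log(1 - y_pred))))

batch_size = 4
valid = np.ones((batch_size, 1))    # label 1 = real
fake = np.zeros((batch_size, 1))    # label 0 = fake

# Hypothetical discriminator outputs for one batch of each kind
pred_on_real = np.array([[0.9], [0.8], [0.95], [0.7]])
pred_on_fake = np.array([[0.1], [0.3], [0.05], [0.2]])

d_loss_real = binary_crossentropy(valid, pred_on_real)  # equals -mean(log D(x))
d_loss_fake = binary_crossentropy(fake, pred_on_fake)   # equals -mean(log(1 - D(G(z))))
print(round(d_loss_real, 4), round(d_loss_fake, 4))
```

With a label of 1 only the log D(x) term survives, and with a label of 0 only the log(1 - D(G(z))) term survives, which is why these two calls together train the discriminator on both parts of the GAN objective.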

Here is the one tricky part in training GANs: training the generator. While the discriminator can be trained in isolation from the generator model, the generator needs the discriminator in order to be trained. For this, we build a combined model that contains both the generator and the discriminator, as shown in figure 8.12.

Figure 8.12 Illustration of the combined model that contains both the generator and discriminator models

When we want to train the generator, we freeze the weights of the discriminator model, because the generator and discriminator have different loss functions pulling in different directions. If we don't freeze the discriminator weights, they will be pulled in the same direction the generator is learning, so the discriminator will become more likely to predict generated images as real, which is not the desired outcome. Freezing the weights of the discriminator model doesn't affect the existing discriminator model that we compiled earlier for training the discriminator. Think of it as having two discriminator models: this is not actually the case, but it is easier to imagine.

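from tensorflow.keras.layers import Input
from tensorflow.keras.models import Model
generator = generator_model()        # build the generator
z = Input(shape=(100,))              # the generator takes noise as input...
img = generator(z)                   # ...and generates an image
discriminator.trainable = False      # freeze the discriminator's weights inside the combined model
validity = discriminator(img)        # the discriminator judges whether the generated images are real
combined = Model(z, validity)        # stacked generator + discriminator: trains the generator to fool the discriminator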
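Keras handles the freezing through discriminator.trainable = False. To show what freezing means mechanically, here is a hypothetical one-dimensional toy in NumPy (not the chapter's model): the generator is G(z) = a·z + b, the discriminator is D(x) = sigmoid(w·x + c), and one gradient step on the generator's term of the GAN objective, log(1 - D(G(z))), updates only a and b. The discriminator's parameters w and c are read but never written.

```python
import numpy as np

def sigmoid(u):
    return 1.0 / (1.0 + np.exp(-u))

def generator_loss(gen, disc, z):
    """Mean of log(1 - D(G(z))), the generator's term of the GAN objective."""
    u = disc["w"] * (gen["a"] * z + gen["b"]) + disc["c"]
    return float(np.mean(np.log(1.0 - sigmoid(u))))

def generator_step(gen, disc, z, lr=0.1):
    """One gradient-descent step on generator_loss that updates only the generator.

    disc is read but never modified: this is what freezing the discriminator means.
    """
    u = disc["w"] * (gen["a"] * z + gen["b"]) + disc["c"]
    s = sigmoid(u)
    # d/du log(1 - sigmoid(u)) = -sigmoid(u); chain rule through u = w * (a * z + b) + c
    grad_a = np.mean(-s * disc["w"] * z)
    grad_b = np.mean(-s * disc["w"])
    return {"a": gen["a"] - lr * grad_a, "b": gen["b"] - lr * grad_b}

rng = np.random.default_rng(0)
z = rng.normal(size=256)
gen = {"a": 1.0, "b": 0.0}       # hypothetical generator: G(z) = a * z + b
disc = {"w": 2.0, "c": -1.0}     # hypothetical frozen discriminator: D(x) = sigmoid(w * x + c)
disc_before = dict(disc)

new_gen = generator_step(gen, disc, z)
print("generator:", gen, "->", new_gen)
print("loss:", generator_loss(gen, disc, z), "->", generator_loss(new_gen, disc, z))
print("discriminator unchanged:", disc == disc_before)
```

Because this frozen discriminator scores larger x as "more real" (w > 0), the step shifts the generator's outputs upward, and the generator's loss goes down while the discriminator stays exactly as it was.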

Now that we have built the combined model, we can proceed with the training process as normal. We compile the combined model with a binary_crossentropy loss function and an Adam optimizer:

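from tensorflow.keras.optimizers import Adam
optimizer = Adam(learning_rate=0.0002, beta_1=0.5)   # assumed settings (the values recommended in the DCGAN paper)
combined.compile(loss='binary_crossentropy', optimizer=optimizer)
g_loss = combined.train_on_batch(noise, valid)       # train the generator: fake images are labeled valid (1) so it learns to fool the discriminator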
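Passing valid (ones) as the target for generated images means the combined model minimizes the binary cross-entropy -log D(G(z)), rather than minimizing log(1 - D(G(z))) directly. Goodfellow et al. (2014) recommend this "non-saturating" variant because it gives much stronger gradients early in training, when the discriminator easily rejects the fakes. The NumPy sketch below compares the two gradients with respect to the discriminator's logit u, where D(G(z)) = sigmoid(u); the logit values are made up.

```python
import numpy as np

def sigmoid(u):
    return 1.0 / (1.0 + np.exp(-u))

# Logits the discriminator might produce for fake images early in training,
# when it confidently rejects them (D(G(z)) close to 0). Values are made up.
logits = np.array([-6.0, -4.0, -2.0])
d_fake = sigmoid(logits)

# Gradient of each generator loss with respect to the logit u
grad_minimax = -d_fake           # d/du log(1 - sigmoid(u))
grad_nonsat = -(1.0 - d_fake)    # d/du [-log(sigmoid(u))], i.e. BCE with label 1

for p, g1, g2 in zip(d_fake, grad_minimax, grad_nonsat):
    print(f"D(G(z))={p:.4f}  minimax grad={g1:.4f}  valid-label grad={g2:.4f}")
```

When D(G(z)) is near 0, the minimax gradient almost vanishes, while the valid-label gradient stays close to 1 in magnitude, so the generator keeps receiving a useful learning signal.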

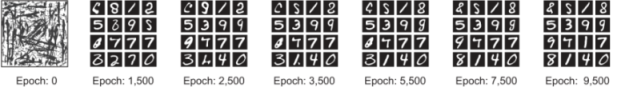

In the project at the end of the chapter, you will see that the previous code snippets are put inside a loop that performs the training for a certain number of epochs. In each epoch, the two compiled models (discriminator and combined) are both trained, one after the other. During the training process, both the generator and the discriminator improve. You can observe the performance of your GAN by printing out the results after each epoch (or set of epochs) to see how well the generator is producing synthetic images. Figure 8.13 shows an example of how the generator's performance evolves throughout its training process on the MNIST dataset.

Figure 8.13 The generator gets better at mimicking the handwritten digits of the MNIST dataset throughout its training from epoch 0 to epoch 9,500.

In the example, epoch 0 starts with random noise data that doesn't yet represent the features in the training dataset. As the GAN model goes through the training, its generator gets better and better at creating high-quality imitations of the training dataset that can fool the discriminator. Manually observing the generator's performance is a good way to evaluate system performance and decide on the number of epochs and when to stop training. We'll look more at GAN evaluation techniques in section 8.2.

GAN training is more like a zero-sum game than an optimization problem. In zero-sum games, the total utility score is divided among the players: an increase in one player's score results in a decrease in another player's score. In AI, this is called minimax game theory. Minimax is a decision-making algorithm typically used in turn-based, two-player games. The goal of the algorithm is to find the optimal next move. One player, called the maximizer, works to get the maximum possible score; the other player, called the minimizer, tries to get the lowest score by counter-moving against the maximizer.
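A two-move toy game makes the minimax idea concrete. In this hypothetical payoff table, the maximizer chooses a row, the minimizer then answers with a column, and each entry is the maximizer's score (equivalently, the minimizer's loss, since the game is zero-sum). The maximizer should pick the row whose worst-case outcome is best.

```python
def minimax_value(payoffs):
    """Maximizer picks a row; the minimizer then picks the column worst for the maximizer.

    payoffs[i][j] is the maximizer's score for row i and column j.
    Returns (best_row, guaranteed_score).
    """
    worst_case = [min(row) for row in payoffs]   # minimizer's best counter-move for each row
    best_row = max(range(len(payoffs)), key=lambda i: worst_case[i])
    return best_row, worst_case[best_row]

payoffs = [[3, -1],
           [2, 1]]
print(minimax_value(payoffs))  # (1, 1)
```

Row 0 looks tempting because of the 3, but the minimizer would answer with -1; row 1 guarantees a score of at least 1. A GAN plays the same kind of game, except that both players are neural networks adjusting continuous weights rather than picking from a table.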

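GANs play a minimax game in which the entire network attempts to optimize the value function V(D,G) in the following equation:

$$\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}(x)}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z(z)}\big[\log\big(1 - D(G(z))\big)\big]$$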

The goal of the discriminator (D) is to maximize the probability of assigning the correct label to each image. The generator's (G) goal, on the other hand, is to minimize the chances of getting caught. So, we train D to maximize the probability of assigning the correct label to both training examples and samples from G. We simultaneously train G to minimize log(1 - D(G(z))). In other words, D and G play a two-player minimax game with the value function V(D,G).

Like any other mathematical equation, the preceding one looks terrifying to anyone who isn't well versed in the math behind it, but the idea it represents is simple yet powerful. It's just a mathematical representation of the two competing objectives of the discriminator and generator models. Let's go through the symbols first (table 8.1) and then explain it.

Table 8.1 Symbols used in the minimax equation

| Symbol | Meaning |
|---|---|
| G(z) | The generator takes the random noise data (z) and tries to reconstruct the real images. |

The discriminator takes its input from two sources:

- Data from the generator, G(z): this is fake data generated from the noise z. The discriminator's output for it is denoted D(G(z)).

- Real input from the real training data (x): the discriminator's output for it is denoted D(x), and the equation uses its logarithm, log D(x).

To simplify the minimax equation, the best way to look at it is to break it down into two components: the discriminator training function and the generator training (combined model) function. During the training process, we created two training flows, and each has its own error function:

- One for the discriminator alone, represented by the following term, which aims to maximize the minimax function by making the discriminator's predictions on real images, D(x), as close as possible to 1: $\mathbb{E}_{x \sim p_{\text{data}}(x)}\big[\log D(x)\big]$

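- One for the combined model that trains the generator, represented by the following term, which aims to minimize the minimax function by pushing the discriminator's predictions on generated images, D(G(z)), as close as possible to 1 (so that 1 - D(G(z)) approaches 0): $\mathbb{E}_{z \sim p_z(z)}\big[\log\big(1 - D(G(z))\big)\big]$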

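Now that we understand the equation's symbols and have a better idea of how the minimax function works, let's look at the function again:

$$\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}(x)}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z(z)}\big[\log\big(1 - D(G(z))\big)\big]$$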

The goal of the minimax objective function V(D, G) is to maximize D(x) for samples from the true data distribution and minimize D(G(z)) for samples from the fake data distribution. To achieve this, we use the log-likelihoods of D(x) and 1 - D(G(z)) in the objective function. Taking the log makes sure that the closer a prediction is to the incorrect value, the more heavily it is penalized: log p goes to negative infinity as p approaches 0.
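The value function can be estimated from a batch of discriminator outputs by replacing each expectation with a sample mean. In the NumPy sketch below, the probabilities are made up, and value_function is a hypothetical helper name. Goodfellow et al. (2014) show that at the global optimum, where the generator reproduces the data distribution, the best discriminator outputs 1/2 everywhere and V equals -log 4; the second call reproduces that value.

```python
import math
import numpy as np

def value_function(d_real, d_fake):
    """Sample estimate of V(D, G) = E[log D(x)] + E[log(1 - D(G(z)))]."""
    d_real = np.asarray(d_real, dtype=float)
    d_fake = np.asarray(d_fake, dtype=float)
    return float(np.mean(np.log(d_real)) + np.mean(np.log(1.0 - d_fake)))

# A confident, correct discriminator: V is close to its upper bound of 0
print(value_function([0.99, 0.98], [0.01, 0.02]))
# A discriminator that cannot tell real from fake outputs 0.5 everywhere
print(value_function([0.5, 0.5], [0.5, 0.5]), -math.log(4))
```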

Early in the GAN training process, the discriminator will reject fake data from the generator with high confidence, because the fake images are very different from the real training data: the generator hasn't learned yet. As we train the discriminator to maximize the probability of assigning the correct labels to both real examples and fake images from the generator, we simultaneously train the generator to minimize the discriminator's classification error on the generated fake data. The discriminator wants to maximize the objective such that D(x) is close to 1 for real data and D(G(z)) is close to 0 for fake data. The generator, on the other hand, wants to minimize the objective such that D(G(z)) is close to 1, so that the discriminator is fooled into thinking the generated G(z) is real. We stop the training when the fake data generated by the generator is recognized as real data.

Deep learning models used for classification and detection problems are trained with a loss function until convergence. A GAN generator model, on the other hand, is trained using a discriminator that learns to classify images as real or generated. As we learned in the previous section, the generator and discriminator models are trained together to maintain an equilibrium. As such, no objective loss function is used to train the GAN generator model, and there is no way to objectively assess the progress of the training or the relative or absolute quality of the model from the loss alone. This means models must be evaluated using the quality of the generated synthetic images and by manually inspecting them.

A good way to identify evaluation techniques is to review research papers and the techniques their authors used to evaluate their GANs. Tim Salimans et al. (2016) evaluated their GAN's performance by having human annotators manually judge the visual quality of the synthesized samples.3 They created a web interface and hired annotators on Amazon Mechanical Turk (MTurk) to distinguish between generated data and real data.

One downside of using human annotators is that the metric varies depending on the setup of the task and the motivation of the annotators. The team also found that results changed drastically when they gave annotators feedback about their mistakes: by learning from such feedback, annotators became better at pointing out the flaws in generated images, giving a more pessimistic quality assessment.

Other, non-manual approaches were used by Salimans et al. and by other researchers, as we will discuss in this section. In general, there is no consensus about a correct way to evaluate a given GAN generator model. This makes it challenging for researchers and practitioners to do the following:

- Select the best GAN generator model during a training run; in other words, decide when to stop training.

- Choose generated images to demonstrate the capability of a GAN generator model.

- Compare and benchmark GAN model architectures.

- Tune the model hyperparameters and configuration and compare results.

Finding quantifiable ways to understand a GAN's progress and output quality is still an active area of research. A suite of qualitative and quantitative techniques has been developed to assess the performance of a GAN model based on the quality and diversity of the generated synthetic images. Two commonly used evaluation metrics for image quality and diversity are the inception score and the Fréchet inception distance (FID). In this section, you will discover techniques for evaluating GAN models based on the synthetic images they generate.

The inception score is based on the heuristic that realistic samples should be classifiable when passed through a pretrained network such as Inception trained on ImageNet (hence the name inception score). The idea is really simple. The heuristic relies on two values:

- High predictability of the generated image: we apply a pretrained Inception classifier to every generated image and get its softmax prediction. If the generated image is good enough, the classifier should predict one class with high confidence.

- Diverse generated samples: no single class should dominate the distribution of the generated images.

A large number of generated images are classified using the model. Specifically, the probability of each image belonging to each class is predicted. The probabilities are then summarized in the score to capture both how much each image looks like a known class and how diverse the set of images is across the known classes. If both these traits are satisfied, the inception score should be large. A higher inception score indicates better-quality generated images.
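The summary described above is usually written as IS = exp(E_x[KL(p(y|x) || p(y))]): the KL divergence is large when each image's class distribution p(y|x) is sharp while the marginal p(y) over all images is spread out. This minimal NumPy sketch assumes the softmax outputs are already available (it does not run an Inception network) and skips the averaging over several splits that published implementations commonly perform.

```python
import numpy as np

def inception_score(class_probs, eps=1e-12):
    """IS = exp(mean over images of KL(p(y|x) || p(y))).

    class_probs: array of shape (num_images, num_classes); each row is assumed to be
    the softmax output of a pretrained classifier for one generated image.
    """
    p_yx = np.asarray(class_probs, dtype=float)
    p_y = p_yx.mean(axis=0, keepdims=True)    # marginal class distribution over all images
    kl = np.sum(p_yx * (np.log(p_yx + eps) - np.log(p_y + eps)), axis=1)
    return float(np.exp(kl.mean()))

confident_and_diverse = np.eye(10)                          # each image clearly a different class
confident_but_collapsed = np.tile(np.eye(10)[0], (10, 1))   # every image is class 0
uncertain = np.full((10, 10), 0.1)                          # no image looks like any class
print(inception_score(confident_and_diverse))    # close to 10
print(inception_score(confident_but_collapsed))  # close to 1
print(inception_score(uncertain))                # close to 1
```

The score ranges from 1 up to the number of classes, and it only reaches the top when both traits hold: a collapsed generator fails the diversity test, and blurry, unrecognizable images fail the predictability test.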

The FID score was proposed and used by Martin Heusel et al. in 2017.4 It was proposed as an improvement over the existing inception score.

Like the inception score, the FID score uses the Inception model to capture specific features of an input image. These activations are calculated for a collection of real images and a collection of generated images. The activations of each collection are summarized as a multivariate Gaussian, and the distance between these two distributions is then calculated using the Fréchet distance, also called the Wasserstein-2 distance.

An important note is that the FID needs a decent sample size to give good results (the suggested size is 50,000 samples). If you use too few samples, you will end up overestimating your actual FID, and the estimates will have a large variance. A lower FID score indicates more realistic images that match the statistical properties of real images.
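The Fréchet distance between two Gaussians with means mu1, mu2 and covariances sigma1, sigma2 is ||mu1 - mu2||² + Tr(sigma1 + sigma2 - 2(sigma1·sigma2)^(1/2)). Here is a minimal NumPy sketch. The feature vectors are random stand-ins for Inception activations (the standard metric uses the 2,048-dimensional pool layer of Inception v3, which is not computed here). Reference implementations commonly use scipy.linalg.sqrtm for the matrix square root; this sketch instead obtains the trace term as the sum of square roots of the eigenvalues of sigma1·sigma2, which is equivalent for covariance matrices.

```python
import numpy as np

def frechet_distance(mu1, sigma1, mu2, sigma2):
    """||mu1 - mu2||^2 + Tr(sigma1 + sigma2 - 2 * sqrt(sigma1 @ sigma2))."""
    mu1, mu2 = np.asarray(mu1, float), np.asarray(mu2, float)
    sigma1, sigma2 = np.asarray(sigma1, float), np.asarray(sigma2, float)
    diff = mu1 - mu2
    # Eigenvalues of sigma1 @ sigma2 are real and non-negative for covariance matrices;
    # tiny negative or imaginary parts from round-off are discarded.
    eigvals = np.linalg.eigvals(sigma1 @ sigma2)
    tr_sqrt = np.sum(np.sqrt(np.clip(eigvals.real, 0.0, None)))
    return float(diff @ diff + np.trace(sigma1) + np.trace(sigma2) - 2.0 * tr_sqrt)

def fid_from_activations(act_real, act_fake):
    """Fit a Gaussian (mean, covariance) to each set of feature vectors, then compare them."""
    mu_r, mu_f = act_real.mean(axis=0), act_fake.mean(axis=0)
    sig_r = np.cov(act_real, rowvar=False)
    sig_f = np.cov(act_fake, rowvar=False)
    return frechet_distance(mu_r, sig_r, mu_f, sig_f)

rng = np.random.default_rng(0)
real_feats = rng.normal(0.0, 1.0, size=(500, 8))    # stand-in for real-image activations
shifted_feats = real_feats + 3.0                     # same spread, mean shifted by 3 in every dimension
print(fid_from_activations(real_feats, real_feats))      # close to 0
print(fid_from_activations(real_feats, shifted_feats))   # close to 8 * 3**2 = 72

# Two independent samples from the SAME distribution: the estimate is biased upward
# when the sample is small, which is why large sample sizes are recommended.
small = fid_from_activations(rng.normal(size=(50, 8)), rng.normal(size=(50, 8)))
large = fid_from_activations(rng.normal(size=(5000, 8)), rng.normal(size=(5000, 8)))
print(small, large)
```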

Both measures (inception score and FID) are easy to implement and calculate on batches of generated images. As such, the practice of systematically generating images and saving models during training can and should continue to be used to allow post hoc model selection. Diving deep into the inception score and FID is out of the scope of this book. As mentioned earlier, this is an active area of research, and at the time of writing there is no consensus in the industry about the one best approach to evaluating GAN performance. Different scores assess various aspects of the image-generation process, and it is unlikely that a single score can cover all aspects. The goal of this section is to expose you to some techniques that have been developed in recent years to automate the GAN evaluation process, but manual evaluation is still widely used.

When you are getting started, it is a good idea to begin with manual inspection of generated images in order to evaluate and select generator models. Developing GAN models is complex enough for both beginners and experts; manual inspection can get you a long way while you refine your model implementation and test model configurations.

Other researchers are taking different approaches by using domain-specific evaluation metrics. For example, Konstantin Shmelkov and his team (2018) used two measures based on image classification, GAN-train and GAN-test, which approximate the recall (diversity) and precision (image quality) of GANs, respectively.5

Generative modeling has come a long way in the last five years. The field has developed to the point where the next generation of generative models is expected to be more comfortable creating art than humans. GANs now have the power to solve problems in industries like healthcare, automotive, fine arts, and many others. In this section, we will learn about some of the use cases of adversarial networks and which GAN architecture is used for each application. The goal of this section is not to implement these variations of the GAN network, but to provide some exposure to potential applications of GAN models and resources for further reading.

Synthesis of high-quality images from text descriptions is a challenging problem in CV. Samples generated by existing text-to-image approaches can roughly reflect the meaning of the given descriptions, but they fail to contain necessary details and vivid object parts.

The GAN network built for this application is the stacked generative adversarial network (StackGAN).6 Zhang et al. were able to generate 256 × 256 photorealistic images conditioned on text descriptions. StackGAN works in two stages (figure 8.14):

- Stage-I: StackGAN sketches the primitive shape and colors of the object based on the given text description, yielding low-resolution images.

- Stage-II: StackGAN takes the output of Stage-I and a text description as input and generates high-resolution images with photorealistic details. This refinement process can correct defects in the Stage-I images and add compelling details.

Figure 8.14 (a) Stage-I: Given text descriptions, StackGAN sketches rough shapes and basic colors of objects, yielding low-resolution images. (b) Stage-II takes Stage-I results and text descriptions as inputs, and generates high-resolution images with photorealistic details. (Source: Zhang et al., 2016.)
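The two stages differ mainly in their inputs and outputs:

- Stage-I sees the text and a noise vector and produces a low-resolution image.
- Stage-II sees that image plus the same text and produces a 256 × 256 image.

The shape-level sketch below uses fixed random projections as hypothetical stand-ins for the two trained generators. It illustrates only the data flow, not the StackGAN architecture. The 64 × 64 Stage-I resolution follows the StackGAN paper. The embedding and noise sizes are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)
EMBED_DIM, NOISE_DIM = 128, 100   # illustrative sizes (assumption)

# Hypothetical stand-ins for the two trained generators
W1 = rng.normal(scale=0.01, size=(EMBED_DIM + NOISE_DIM, 64 * 64 * 3))
W2 = rng.normal(scale=0.01, size=(EMBED_DIM, 3))

def stage_one(text_embedding, z):
    """Stage-I: text + noise -> rough 64 x 64 RGB image in [-1, 1]."""
    x = np.concatenate([text_embedding, z], axis=-1) @ W1
    return np.tanh(x).reshape(-1, 64, 64, 3)

def stage_two(low_res, text_embedding):
    """Stage-II: Stage-I image + the same text -> 256 x 256 RGB image."""
    up = low_res.repeat(4, axis=1).repeat(4, axis=2)       # 64 -> 256
    detail = (text_embedding @ W2)[:, None, None, :]       # text-dependent correction
    return np.tanh(up + detail)
```

Stage-II is conditioned on the text again, not only on the Stage-I image. This is what allows it to add details that the description mentions but the rough sketch missed.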

Image-to-image translation is defined as translating one representation of a scene into another, given sufficient training data. It is inspired by an analogy with language translation: just as an idea can be expressed in many different languages, a scene can be rendered as a grayscale image, an RGB image, a semantic label map, an edge sketch, and so on. Figure 8.15 demonstrates image-to-image translation on a range of applications:

- converting street-scene segmentation labels to real images
- converting grayscale images to color images
- converting sketches of products to product photographs
- converting day photographs to night ones

Pix2Pix is a member of the GAN family designed by Phillip Isola et al. in 2016 for general-purpose image-to-image translation.7 The Pix2Pix network architecture follows the GAN concept:

- a generator model that outputs new synthetic images that look realistic
- a discriminator model that classifies images as real (from the dataset) or fake (generated)

The training process is also similar to that of GANs. The discriminator model is updated directly, whereas the generator model is updated via the discriminator model. The two models are therefore trained simultaneously in an adversarial process: the generator seeks to better fool the discriminator, and the discriminator seeks to better identify the counterfeit images.

The novel idea of Pix2Pix networks is that they learn a loss function adapted to the task and data at hand, which makes them applicable in a wide variety of settings. They are a type of conditional GAN (cGAN), in which generating the output image is conditioned on an input source image. The discriminator is given both a source image and a target image and must determine whether the target is a plausible transformation of the source image.
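Concretely, the conditional discriminator never judges a target image on its own; it judges a (source, target) pair. A common way to build those inputs, used in the Pix2Pix paper, is to stack the source and target images along the channel axis.

In the numpy sketch below, pairs with the real target are labeled 1 and pairs with the generator's output are labeled 0. It assumes source and target share height and width. The function name is hypothetical.

```python
import numpy as np

def discriminator_batch(sources, real_targets, fake_targets):
    """Paired inputs and labels for a conditional (Pix2Pix-style) discriminator."""
    real_pairs = np.concatenate([sources, real_targets], axis=-1)  # plausible translations
    fake_pairs = np.concatenate([sources, fake_targets], axis=-1)  # generator outputs
    x = np.concatenate([real_pairs, fake_pairs], axis=0)
    y = np.concatenate([np.ones(len(real_pairs)), np.zeros(len(fake_pairs))])
    return x, y
```

In a grayscale-to-color task, for example, each pair has 1 + 3 = 4 channels. A target that looks realistic but does not match its source still gets the "fake" label. That is how the discriminator learns to judge whether the target is a plausible transformation of the source, not just whether it looks real.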

The Pix2Pix network produces very promising results on many image-to-image translation tasks. Visit https://affinelayer.com/pixsrv to experiment further with the Pix2Pix network. The site has an interactive demo, created by Isola and team, in which you can convert sketched edges of cats or products into photos and façades into realistic images.

A certain type of GAN model can convert low-resolution images into high-resolution images. This type is called a super-resolution generative adversarial network (SRGAN) and was introduced by Christian Ledig et al. in 2016.8 Figure 8.16 shows how SRGAN was able to create a very high-resolution image.

Figure 8.16 SRGAN converting a low-resolution image to a high-resolution image. (Source: Ledig et al., 2016.)
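Super-resolution models are trained on (low-resolution, high-resolution) pairs made by downsampling real images. SRGAN was evaluated at a 4× upscaling factor.

The numpy sketch below builds such a pair. It uses block averaging as a simple stand-in for the bicubic downsampling usually used for this. It also shows the naive nearest-neighbor upscaling that a super-resolution generator has to beat. The helper names are hypothetical.

```python
import numpy as np

def make_sr_pair(high_res, factor=4):
    """(low-res, high-res) pair from one (H, W, C) image via block averaging."""
    h, w, c = high_res.shape
    h, w = h - h % factor, w - w % factor              # crop to a multiple of factor
    hr = high_res[:h, :w]
    # Split each axis into (blocks, factor) and average within each block
    lr = hr.reshape(h // factor, factor, w // factor, factor, c).mean(axis=(1, 3))
    return lr, hr

def nearest_upscale(low_res, factor=4):
    """Naive baseline: repeat each pixel factor x factor times."""
    return low_res.repeat(factor, axis=0).repeat(factor, axis=1)
```

The generator sees lr as input and is trained to reproduce hr. The nearest-neighbor baseline has the right size but none of the lost detail.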

GAN models have huge potential for creating and imagining new realities that have never existed before. The applications mentioned in this chapter are just a few examples to give you an idea of what GANs can do today. New applications appear every few weeks and are worth trying. If you want to get your hands dirty with more GAN applications, visit the excellent Keras-GAN repository at https://github.com/eriklindernoren/Keras-GAN, maintained by Erik Linder-Norén. It includes many GAN models built with Keras and is an excellent source of Keras examples. Much of the code in this chapter was inspired by and adapted from this repository.

In this project, you’ll build a GAN that uses convolutional layers in both the generator and the discriminator. This is called a deep convolutional GAN (DCGAN). The DCGAN architecture was first explored by Alec Radford et al. (2016), as discussed in section 8.1.1, and has produced impressive results in generating new images. You can follow along with the implementation in this chapter or run the code in the project notebook included with this book’s downloadable code.

In this project, you’ll train a DCGAN on the Fashion-MNIST dataset (https://github.com/zalandoresearch/fashion-mnist). Fashion-MNIST consists of 60,000 grayscale images for training and a test set of 10,000 images (figure 8.17). Each 28 × 28 grayscale image is associated with a label from 10 classes. Fashion-MNIST is intended as a direct drop-in replacement for the original MNIST dataset for benchmarking machine learning algorithms. I chose grayscale images for this project because training convolutional networks on one-channel grayscale images requires less computational power than training on three-channel color images. This makes it easier for you to train on a personal computer without a GPU.
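The labels are integers from 0 to 9. The mapping below follows the class list published in the Fashion-MNIST repository linked above. The DCGAN in this project ignores the labels, but the names are handy when you inspect training images or describe generated ones. label_name is a hypothetical helper.

```python
# Class names for Fashion-MNIST labels 0-9, in label order
FASHION_MNIST_CLASSES = [
    "T-shirt/top", "Trouser", "Pullover", "Dress", "Coat",
    "Sandal", "Shirt", "Sneaker", "Bag", "Ankle boot",
]

def label_name(label):
    """Map an integer label (0-9) to its Fashion-MNIST class name."""
    return FASHION_MNIST_CLASSES[int(label)]
```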

from keras.datasets import fashion_mnist        # Fashion-MNIST loader
from keras.layers import (Input, Dense, Reshape, Flatten, Dropout,
                          BatchNormalization, Activation, ZeroPadding2D,
                          LeakyReLU, UpSampling2D, Conv2D)   # layers used by both models
from keras.models import Sequential, Model      # model containers
from keras.optimizers import Adam               # optimizer for both networks
import numpy as np                              # array manipulation
import matplotlib.pyplot as plt                 # plotting images

Keras lets us download the Fashion-MNIST dataset with a single command: fashion_mnist.load_data(). Here, we download the dataset and rescale the training set to the range -1 to 1 to help the model converge faster (see the “Data normalization” section in chapter 4 for more details on image scaling):

(training_data, _), (_, _) = fashion_mnist.load_data()   # keep only the training images
X_train = training_data / 127.5 - 1.                      # rescale from [0, 255] to [-1, 1]
X_train = np.expand_dims(X_train, axis=3)                 # add channel axis: (60000, 28, 28, 1)
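Dividing by 127.5 and subtracting 1 maps 0 to -1 and 255 to 1. That range is the output range of a tanh activation, the usual choice for a DCGAN generator's final layer when the data is scaled this way.

When you want to save or view generated images as ordinary 8-bit pixels, you have to invert the scaling. Below is a small numpy sketch of both directions. The function names are hypothetical.

```python
import numpy as np

def to_tanh_range(images):
    """uint8 pixels in [0, 255] -> float32 values in [-1, 1] (same formula as above)."""
    return images.astype(np.float32) / 127.5 - 1.0

def to_pixel_range(images):
    """Invert the scaling: [-1, 1] -> uint8 pixels, clipping out-of-range values."""
    return np.clip(np.round((images + 1.0) * 127.5), 0, 255).astype(np.uint8)
```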

# Display one grayscale training image with each pixel value written on top of it
def visualize_input(img, ax):
    ax.imshow(img, cmap='gray')
    width, height = img.shape
    thresh = img.max()/2.5                    # pick white or black text for contrast
    for x in range(width):
        for y in range(height):
            ax.annotate(str(round(img[x][y],2)), xy=(y,x),
                        horizontalalignment='center',
                        verticalalignment='center',
                        color='white' if img[x][y]<thresh else 'black')

fig = plt.figure(figsize = (12,12))
ax = fig.add_subplot(111)
visualize_input(training_data[3343], ax)     # raw (unscaled) pixel values, 0-255

Now, let’s build the generator model. Its input is our noise vector (z), as explained in section 8.1.5. Figure 8.19 shows the generator architecture.

The first layer is a fully connected layer whose output is reshaped into a deep, narrow layer, something like 7 × 7 × 128. (In the original DCGAN paper, the team reshaped the input to 4 × 4 × 1024.) We then use upsampling layers to double the feature-map dimensions from 7 × 7 to 14 × 14, and then again to 28 × 28. This network uses three convolutional layers, together with batch normalization and ReLU activations. For the hidden layers, the general scheme is convolution ⇒ batch normalization ⇒ ReLU. We keep stacking layers like this until we reach the final convolutional layer, which has shape 28 × 28 × 1:

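def build_generator():
    generator = Sequential()                            # layers are stacked in order

    # Project the 100-d noise vector and reshape it into a 7 x 7 x 128 volume
    generator.add(Dense(128 * 7 * 7, activation="relu", input_dim=100))
    generator.add(Reshape((7, 7, 128)))

    # Upsample to 14 x 14, then convolution => batch normalization => ReLU
    generator.add(UpSampling2D())
    generator.add(Conv2D(128, kernel_size=3, padding="same"))
    generator.add(BatchNormalization(momentum=0.8))
    generator.add(Activation("relu"))

    # Upsample to 28 x 28, then convolution => batch normalization => ReLU
    generator.add(UpSampling2D())
    generator.add(Conv2D(64, kernel_size=3, padding="same"))
    generator.add(BatchNormalization(momentum=0.8))
    generator.add(Activation("relu"))

    # Final convolutional layer with 1 filter gives a 28 x 28 x 1 image.
    # tanh keeps outputs in [-1, 1], the range of the rescaled training data
    # (a ReLU here could never produce the negative values in X_train).
    generator.add(Conv2D(1, kernel_size=3, padding="same", activation="tanh"))

    generator.summary()                                 # print the architecture

    noise = Input(shape=(100,))                         # noise vector z
    fake_image = generator(noise)                       # generated image
    return Model(inputs=noise, outputs=fake_image)      # wrap as a functional Model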
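The UpSampling2D layers in build_generator() have no weights. With default settings, each one doubles the height and width by repeating every row and column (nearest-neighbor interpolation). That is how the 7 × 7 volume grows to 28 × 28.

The numpy sketch below reproduces that operation. It assumes a single (height, width, channels) array rather than a batch. upsample_nearest is a hypothetical helper, not a Keras function.

```python
import numpy as np

def upsample_nearest(feature_map, size=2):
    """Repeat each row and column `size` times (nearest-neighbor upsampling)."""
    return feature_map.repeat(size, axis=0).repeat(size, axis=1)

# Only the spatial size matters here: the Conv2D layers (padding="same")
# change the number of channels, not the height and width.
x = np.zeros((7, 7, 1))
for _ in range(2):            # two UpSampling2D layers in the generator
    x = upsample_nearest(x)
print(x.shape)                # (28, 28, 1)
```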

The discriminator is just a convolutional classifier like the ones we have built before (figure 8.20). Its inputs are 28 × 28 × 1 images. We want a few convolutional layers followed by a fully connected layer for the output. As before, we want a sigmoid output, which gives the probability that the input image is real.

For the depth of the convolutional layers, I suggest starting with 32 or 64 filters in the first layer and then doubling the depth as you add layers. In this implementation, we start with 32 filters, then use 64, 128, and finally 256. For downsampling, we do not use pooling layers. Instead, as in Radford et al.’s implementation, we use only strided convolutional layers.

We also use batch normalization and dropout to improve training, as we learned in chapter 4. For each of the four convolutional layers, the general scheme is convolution ⇒ batch normalization ⇒ leaky ReLU ⇒ dropout (the first layer skips batch normalization). Now, let’s build the build_discriminator function:

def build_discriminator():
    discriminator = Sequential()                  # layers are stacked in order

    # 28 x 28 x 1 -> 14 x 14 x 32: strided convolution instead of pooling,
    # no batch normalization on the first layer
    discriminator.add(Conv2D(32, kernel_size=3, strides=2,
                             input_shape=(28, 28, 1), padding="same"))
    discriminator.add(LeakyReLU(alpha=0.2))
    discriminator.add(Dropout(0.25))

    # 14 x 14 x 32 -> 7 x 7 x 64, then pad the bottom/right edges to 8 x 8 x 64
    discriminator.add(Conv2D(64, kernel_size=3, strides=2, padding="same"))
    discriminator.add(ZeroPadding2D(padding=((0, 1), (0, 1))))
    discriminator.add(BatchNormalization(momentum=0.8))
    discriminator.add(LeakyReLU(alpha=0.2))
    discriminator.add(Dropout(0.25))

    # 8 x 8 x 64 -> 4 x 4 x 128
    discriminator.add(Conv2D(128, kernel_size=3, strides=2, padding="same"))
    discriminator.add(BatchNormalization(momentum=0.8))
    discriminator.add(LeakyReLU(alpha=0.2))
    discriminator.add(Dropout(0.25))

    # 4 x 4 x 128 -> 4 x 4 x 256 (stride 1: no further downsampling)
    discriminator.add(Conv2D(256, kernel_size=3, strides=1, padding="same"))
    discriminator.add(BatchNormalization(momentum=0.8))
    discriminator.add(LeakyReLU(alpha=0.2))
    discriminator.add(Dropout(0.25))

    # Flatten and output the probability that the image is real
    discriminator.add(Flatten())
    discriminator.add(Dense(1, activation='sigmoid'))

    img = Input(shape=(28, 28, 1))                # input image
    probability = discriminator(img)              # P(image is real)
    return Model(inputs=img, outputs=probability) # wrap as a functional Model
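To see where the final Dense(1) layer gets its input size, here is the spatial arithmetic for build_discriminator(). It assumes Keras's rule that padding="same" produces ceil(input / stride) outputs per spatial dimension. conv_same_output is a hypothetical helper.

```python
import math

def conv_same_output(size, stride):
    """Spatial output size of a Conv2D layer with padding="same"."""
    return math.ceil(size / stride)

size = 28
size = conv_same_output(size, 2)   # Conv2D(32, strides=2)  -> 14
size = conv_same_output(size, 2)   # Conv2D(64, strides=2)  -> 7
size += 1                          # ZeroPadding2D(((0, 1), (0, 1))) -> 8
size = conv_same_output(size, 2)   # Conv2D(128, strides=2) -> 4
size = conv_same_output(size, 1)   # Conv2D(256, strides=1) -> 4
flat_features = size * size * 256  # what Flatten() hands to Dense(1)
print(size, flat_features)         # 4 4096
```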

As explained in section 8.1.3, to train the generator we need to build a combined network that contains both the generator and the discriminator (figure 8.21). The combined model takes the noise signal (z) as input and outputs the discriminator’s prediction of whether the image is fake or real.

Remember that we want to disable discriminator training in the combined model, as explained in detail in section 8.1.3. When training the generator, we don’t want the discriminator’s weights to be updated as well, but we still want the discriminator model to be part of the generator’s training. So we create a combined network that includes both models but freezes the discriminator’s weights:

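# Build and compile the discriminator (Adam settings from the DCGAN paper)
discriminator = build_discriminator()
discriminator.compile(loss='binary_crossentropy',
                      optimizer=Adam(learning_rate=0.0002, beta_1=0.5),
                      metrics=['accuracy'])

# Freeze the discriminator's weights inside the combined model
discriminator.trainable = False

# Build the generator
generator = build_generator()

# The generator turns noise z into an image; the frozen discriminator scores it
z = Input(shape=(100,))
img = generator(z)
valid = discriminator(img)

# Combined model (noise -> generator -> discriminator) updates only the generator;
# each model gets its own optimizer instance
combined = Model(inputs=z, outputs=valid)
combined.compile(loss='binary_crossentropy',
                 optimizer=Adam(learning_rate=0.0002, beta_1=0.5))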

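Training the GAN model means training two networks: the discriminator and the combined network we created in the previous section. Let’s build the train function. It takes three arguments:

- epochs: the number of training iterations
- batch_size: the number of images per batch
- save_interval: how often, in epochs, to save a grid of generated images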

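def train(epochs, batch_size=128, save_interval=50):
    # Target labels: 1 for real images, 0 for generated images
    valid = np.ones((batch_size, 1))
    fake = np.zeros((batch_size, 1))

    # Note: each "epoch" here is one batch update, not a full pass over X_train
    for epoch in range(epochs):

        ## Train Discriminator network
        # Sample a random batch of real images
        idx = np.random.randint(0, X_train.shape[0], batch_size)
        imgs = X_train[idx]

        # Generate a batch of fake images from random noise
        noise = np.random.normal(0, 1, (batch_size, 100))
        gen_imgs = generator.predict(noise, verbose=0)

        # Train on the real and fake batches separately, then average the results
        d_loss_real = discriminator.train_on_batch(imgs, valid)
        d_loss_fake = discriminator.train_on_batch(gen_imgs, fake)
        d_loss = 0.5 * np.add(d_loss_real, d_loss_fake)

        ## Train the combined network (Generator)
        # Label generated images as real so the generator learns to fool the discriminator
        g_loss = combined.train_on_batch(noise, valid)

        # Report discriminator loss and accuracy, and generator loss
        print("%d [D loss: %f, acc.: %.2f%%] [G loss: %f]" %
              (epoch, d_loss[0], 100*d_loss[1], g_loss))

        # Save a grid of generated images every save_interval epochs
        if epoch % save_interval == 0:
            plot_generated_images(epoch, generator)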
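Both models are compiled with binary cross-entropy, so you can reproduce the loss values printed during training by hand:

- The discriminator loss averages its error on real images (label 1) and on generated images (label 0).
- The combined model labels generated images as valid (1), so the generator's loss is -mean(log D(G(z))). It falls as the discriminator becomes more convinced that fakes are real.

The numpy sketch below mirrors, but does not call, Keras's loss. The clipping epsilon is an assumption chosen to match Keras's default. The discriminator outputs in the example are made-up probabilities.

```python
import numpy as np

def binary_crossentropy(labels, probs, eps=1e-7):
    """Mean binary cross-entropy with probabilities clipped away from 0 and 1."""
    probs = np.clip(probs, eps, 1 - eps)
    return float(-np.mean(labels * np.log(probs) + (1 - labels) * np.log(1 - probs)))

def discriminator_loss(d_real, d_fake):
    """Average of the real-batch and fake-batch losses, as d_loss in train()."""
    real = binary_crossentropy(np.ones_like(d_real), d_real)
    fake = binary_crossentropy(np.zeros_like(d_fake), d_fake)
    return 0.5 * (real + fake)

def generator_loss(d_fake):
    """Loss of combined.train_on_batch(noise, valid): fakes labeled as real."""
    return binary_crossentropy(np.ones_like(d_fake), d_fake)
```

If the discriminator outputs 0.5 for every image, both losses equal log 2 ≈ 0.693. That is a useful reference point when reading the progress output.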

Before you run the train() function, you need to define the following plot_generated_images() function:

def plot_generated_images(epoch, generator, examples=100, dim=(10, 10),
                          figsize=(10, 10), latent_dim=100):
    # latent_dim must match the generator's input size (100 in this project)
    noise = np.random.normal(0, 1, size=[examples, latent_dim])
    generated_images = generator.predict(noise, verbose=0)
    generated_images = generated_images.reshape(examples, 28, 28)

    # Draw the images on a dim[0] x dim[1] grid of subplots
    plt.figure(figsize=figsize)
    for i in range(generated_images.shape[0]):
        plt.subplot(dim[0], dim[1], i+1)
        plt.imshow(generated_images[i], interpolation='nearest', cmap='gray_r')
        plt.axis('off')
    plt.tight_layout()
    plt.savefig('gan_generated_image_epoch_%d.png' % epoch)
    plt.close()    # free the figure; a new one is created at every save interval

Now that the implementation is complete, we are ready to start training the DCGAN. To train the model, run the following code snippet:

train(epochs=1000, batch_size=32, save_interval=50)

This runs the training for 1,000 epochs and saves images every 50 epochs. When you run the train() function, the training progress is printed as shown in figure 8.22.

I ran this training myself for 10,000 epochs. Figure 8.23 shows my results after 0, 50, 1,000, and 10,000 epochs.

As you can see in figure 8.23, at epoch 0 the images are just random noise, with no patterns or meaningful data.

At epoch 50, patterns have started to form. One very apparent pattern is bright pixels forming at the center of the image, surrounded by darker pixels. This happens because all of the shapes in the training data are located at the center of the image.

Later in the training process, at epoch 1,000, you can see clear shapes and can probably guess what kind of training data was fed to the GAN model.

Fast-forward to epoch 10,000, and you can see that the generator has become very good at creating new images that are not present in the training dataset. For example, pick any of the objects created at this epoch; let’s say the top-left image (a dress). This is a totally new dress design that does not appear in the training dataset. The GAN model created it after learning dress patterns from the training set.

You can train longer or make the generator network even deeper to get more refined results.

For this project, I used the Fashion-MNIST dataset because its images are very small and grayscale (one channel). This makes it computationally inexpensive to train on your local computer without a GPU. Fashion-MNIST is also very clean data: all of the images are centered and contain little noise, so they need little preprocessing before you kick off GAN training. This makes it a good toy dataset for jump-starting your first GAN project.

If you are excited to get your hands dirty with more advanced datasets, here are some options:

- CIFAR (https://www.cs.toronto.edu/~kriz/cifar.html) is a good next step.
- Google’s Quick, Draw! dataset (https://quickdraw.withgoogle.com) was the world’s largest doodle dataset at the time of writing.
- Stanford’s Cars Dataset (https://ai.stanford.edu/~jkrause/cars/car_dataset.html) is a more serious dataset. It contains more than 16,000 images of 196 classes of cars. You can try to train your GAN model to create a completely new design for your dream car!
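Moving this project from Fashion-MNIST to CIFAR-10 is mostly a matter of changing shapes, because CIFAR-10 images are 32 × 32 × 3 instead of 28 × 28 × 1. The generator in this chapter has two UpSampling2D layers. Its reshaped Dense output must therefore be a quarter of the target height and width.

Below is a hypothetical helper for that arithmetic. It assumes the two-upsampling generator from this project is kept unchanged otherwise.

```python
def generator_start_size(target_size, num_upsamplings=2, factor=2):
    """Height/width of the reshaped Dense output so that `num_upsamplings`
    UpSampling2D layers (each scaling by `factor`) reach `target_size`."""
    scale = factor ** num_upsamplings
    if target_size % scale:
        raise ValueError(f"{target_size} is not divisible by {scale}")
    return target_size // scale

print(generator_start_size(28))  # 7  (Fashion-MNIST: 7 x 7 -> 28 x 28)
print(generator_start_size(32))  # 8  (CIFAR-10: 8 x 8 -> 32 x 32)
```

Under the same assumptions, a CIFAR-10 version would need these changes:

- Dense(128 * 8 * 8) followed by Reshape((8, 8, 128)) in the generator
- a final Conv2D with 3 filters instead of 1
- input_shape=(32, 32, 3) in the discriminator's first layer and its Input
- Input shape (32, 32, 3) and reshape size 32 × 32 × 3 in plot_generated_images()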

- GANs learn patterns from the training dataset and create new images whose distribution is similar to that of the training set.

- The GAN architecture consists of two deep neural networks that compete with each other.

- The generator tries to convert random noise into observations that look as if they have been sampled from the original dataset.

- The discriminator tries to predict whether an observation comes from the original dataset or is one of the generator’s forgeries.

- The discriminator’s model is a typical classification neural network that aims to classify images generated by the generator as real or fake.

- The generator’s architecture looks like an inverted CNN that starts with a narrow input and is upsampled a few times until it reaches the desired size.

- The upsampling layer scales the image dimensions by repeating each row and column of its input pixels.

- To train the GAN, we alternate batch updates between two networks: the discriminator, and a combined network in which the discriminator’s weights are frozen so that only the generator’s weights are updated.

- To evaluate the GAN, we mostly rely on our observation of the quality of images created by the generator. Other evaluation metrics are the inception score and Fréchet inception distance (FID).

- In addition to generating new images, GANs can be used in applications such as text-to-photo synthesis, image-to-image translation, image super-resolution, and many other applications.

1.Ian J. Goodfellow, Jean Pouget-Abadie, Mehdi Mirza, Bing Xu, David Warde-Farley, Sherjil Ozair, Aaron Courville, and Yoshua Bengio, “Generative Adversarial Networks,” 2014, http://arxiv.org/abs/1406.2661.

2.Alec Radford, Luke Metz, and Soumith Chintala, “Unsupervised Representation Learning with Deep Convolutional Generative Adversarial Networks,” 2016, http://arxiv.org/abs/1511.06434.

3.Tim Salimans, Ian Goodfellow, Wojciech Zaremba, Vicki Cheung, Alec Radford, and Xi Chen, “Improved Techniques for Training GANs,” 2016, http://arxiv.org/abs/1606.03498.

4.Martin Heusel, Hubert Ramsauer, Thomas Unterthiner, Bernhard Nessler, and Sepp Hochreiter, “GANs Trained by a Two Time-Scale Update Rule Converge to a Local Nash Equilibrium,” 2017, http://arxiv.org/abs/1706.08500.

5.Konstantin Shmelkov, Cordelia Schmid, and Karteek Alahari, “How Good Is My GAN?” 2018, http://arxiv.org/abs/1807.09499.

6.Han Zhang, Tao Xu, Hongsheng Li, Shaoting Zhang, Xiaogang Wang, Xiaolei Huang, and Dimitris Metaxas, “StackGAN: Text to Photo-Realistic Image Synthesis with Stacked Generative Adversarial Networks,” 2016, http://arxiv.org/abs/1612.03242.

7.Phillip Isola, Jun-Yan Zhu, Tinghui Zhou, and Alexei A. Efros, “Image-to-Image Translation with Conditional Adversarial Networks,” 2016, http://arxiv.org/abs/1611.07004.

8.Christian Ledig, Lucas Theis, Ferenc Huszar, Jose Caballero, Andrew Cunningham, Alejandro Acosta, Andrew Aitken, et al., “Photo-Realistic Single Image Super-Resolution Using a Generative Adversarial Network,” 2016, http://arxiv.org/abs/1609.04802.