chapter five

5 Introduction to value-based deep reinforcement learning

In this chapter

- You'll understand the inherent challenges of training reinforcement learning agents with non-linear function approximators.

- You'll learn to approximate value-functions using neural networks.

- You'll create a deep reinforcement learning agent that when trained from scratch with minimal adjustments to hyperparameters can solve different kinds of problems.

- You'll identify the advantages and disadvantages of using valuebased methods when solving reinforcement learning problems."Human behavior flows from three main sources: desire, emo-tion, and knowledge."

"Knowledge is of no value unless you put it into practice."

· — Anton Chekhov

A Russian playwright and short-story writer, considered among the greatest writers in history

5.1 The kind of feedback a deep reinforcement learning agent deals with

Deep reinforcement learning deals with sequential feedback

Deep reinforcement learning agents deal with sequential, evaluative and sampled feedback. Up until now, you studied two of the three properties (sequential and evaluative) both in isolation (MDPs is sequential and Bandits is evaluative) and then in interplay ('tabular' reinforcement learning is both sequential and evaluative).

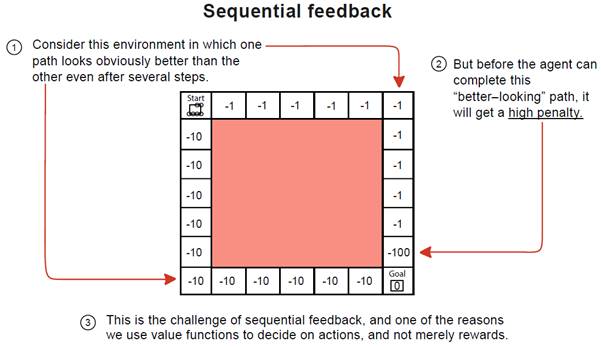

Initially, we examined the issues with sequential feedback in which actions have not only immediate but also long-term consequences. Remember MDPs? Value Iteration? Policy Iteration?

Sequential feedback