12 Combining models to maximize results: Ensemble learning

This chapter covers

- What ensemble learning is, and how it is used to combine weak classifiers into a stronger one.

- Using bagging to combine classifiers in a random way.

- Using boosting to combine classifiers in a more clever way.

- Some of the most popular ensemble methods: random forests, AdaBoost, gradient boosting, and XGBoost.

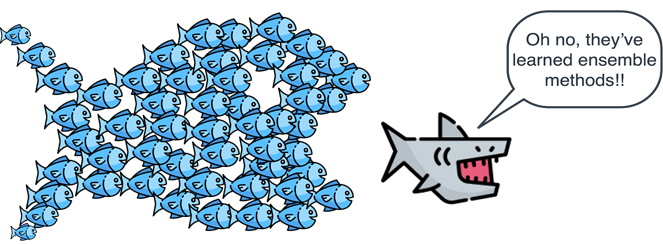

After learning many interesting and useful machine learning models, it is natural to wonder whether we can combine these classifiers. Thankfully, we can, and in this chapter I show you several ways to build stronger models by combining weaker ones. The two main methods we learn in this chapter are bagging and boosting. In a nutshell, bagging consists of constructing a few models in a random way and joining them together. Boosting, on the other hand, consists of building these models in a smarter way, by picking each model strategically to focus on the previous models’ mistakes. Ensemble methods have produced tremendous results on important machine learning problems. For example, the Netflix Prize, which was awarded to the model that best predicted user ratings on a large dataset of Netflix movie ratings, was won by a group that used ensemble methods.
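To make the difference between bagging and boosting concrete, here is a minimal sketch in plain Python. It is only an illustration under stated assumptions, not the implementation used in this chapter: the toy dataset, the choice of a decision stump (a single threshold) as the weak learner, and the names `fit_stump`, `bagging`, and `boosting` are all hypothetical. The boosting part follows the standard AdaBoost weight update.

```python
import math
import random

# Hypothetical 1-D toy dataset: no single threshold separates the labels.
XS = [0, 1, 2, 3, 4, 5, 6, 7, 8, 9]
YS = [1, 1, 1, -1, -1, -1, 1, 1, 1, -1]


def stump_predict(stump, x):
    """A stump predicts `polarity` when x < threshold, and -polarity otherwise."""
    threshold, polarity = stump
    return polarity if x < threshold else -polarity


def fit_stump(xs, ys, weights):
    """Weak learner: return (weighted error, stump) with the lowest weighted error."""
    values = sorted(set(xs))
    thresholds = [v - 0.5 for v in values] + [values[-1] + 0.5]
    best = None
    for threshold in thresholds:
        for polarity in (1, -1):
            stump = (threshold, polarity)
            err = sum(w for x, y, w in zip(xs, ys, weights)
                      if stump_predict(stump, x) != y)
            if best is None or err < best[0]:
                best = (err, stump)
    return best


def bagging(xs, ys, n_models=25, seed=0):
    """Bagging: fit each stump on a random bootstrap sample, then vote equally."""
    rng = random.Random(seed)
    n = len(xs)
    stumps = []
    for _ in range(n_models):
        idx = [rng.randrange(n) for _ in range(n)]  # sample with replacement
        sample_x = [xs[i] for i in idx]
        sample_y = [ys[i] for i in idx]
        _, stump = fit_stump(sample_x, sample_y, [1.0 / n] * n)
        stumps.append(stump)

    def predict(x):
        votes = sum(stump_predict(s, x) for s in stumps)
        return 1 if votes >= 0 else -1

    return predict


def boosting(xs, ys, n_models=3):
    """AdaBoost-style boosting: each new stump focuses on earlier mistakes."""
    n = len(xs)
    weights = [1.0 / n] * n
    ensemble = []
    for _ in range(n_models):
        err, stump = fit_stump(xs, ys, weights)
        err = min(max(err, 1e-10), 1 - 1e-10)  # guard against log(0)
        alpha = 0.5 * math.log((1 - err) / err)  # better stumps get a larger say
        ensemble.append((alpha, stump))
        # Raise the weight of misclassified points and lower the rest.
        weights = [w * math.exp(-alpha * y * stump_predict(stump, x))
                   for x, y, w in zip(xs, ys, weights)]
        total = sum(weights)
        weights = [w / total for w in weights]

    def predict(x):
        score = sum(alpha * stump_predict(s, x) for alpha, s in ensemble)
        return 1 if score >= 0 else -1

    return predict


def accuracy(model, xs, ys):
    return sum(model(x) == y for x, y in zip(xs, ys)) / len(xs)


if __name__ == "__main__":
    _, single = fit_stump(XS, YS, [1.0 / len(XS)] * len(XS))
    print("single stump:", accuracy(lambda x: stump_predict(single, x), XS, YS))
    print("bagging:     ", accuracy(bagging(XS, YS), XS, YS))
    print("boosting:    ", accuracy(boosting(XS, YS), XS, YS))
```

On this toy dataset, the best single stump classifies 7 of the 10 points correctly, whereas three boosted stumps classify all 10. The bagged ensemble's score depends on the random seed, because each of its stumps sees a different bootstrap sample and all of them vote with equal weight.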