1 Introduction to Large Language Models

This chapter covers

- Introducing large language models (LLMs)

- Defining the limitations of LLMs

- Finetuning a model

- Introducing retrieval augmented generation (RAG)

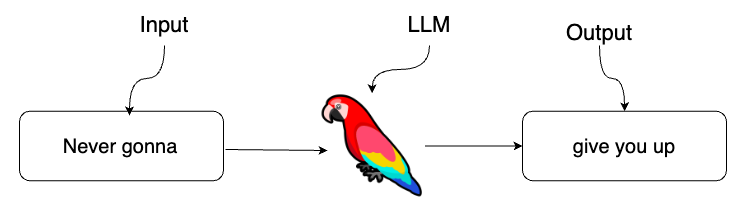

Undoubtedly, 2023 was the year of large language models (LLMs). Even if you are not a technical person, you have probably heard of ChatGPT, a conversational user interface developed by OpenAI and powered by LLMs such as GPT-4 [OpenAI et al., 2024]. LLMs are built on the transformer architecture [Vaswani et al., 2017], which enables them to process and generate text efficiently. These models are trained on vast amounts of textual data, allowing them to learn patterns, grammar, context, and even some degree of reasoning. The training process involves feeding the model large, diverse text datasets, with the primary objective of enabling it to accurately predict the next word in a sequence. This extensive exposure enables the models to understand and generate human-like text based on the patterns they have learned from the data. For example, if you use "Never gonna" as input to an LLM, you might get a response similar to the one shown in figure 1.1.

Figure 1.1 LLMs are trained to predict the next word
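To make the next-word objective concrete, here is a minimal sketch in Python. It is not how an LLM is implemented: real models use transformer neural networks trained on enormous datasets, whereas this toy simply counts which word most often follows another. The corpus, the function names `train_bigram_model` and `predict_next_word`, and the counting approach are all assumptions chosen for illustration.

```python
from collections import Counter, defaultdict


def train_bigram_model(sentences):
    """Count, for every word, which words follow it in the training text."""
    follower_counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.lower().split()
        # Pair each word with the word that comes right after it.
        for current_word, next_word in zip(words, words[1:]):
            follower_counts[current_word][next_word] += 1
    return follower_counts


def predict_next_word(model, prompt):
    """Return the most frequent follower of the prompt's last word, or None."""
    words = prompt.lower().split()
    if not words:
        return None
    followers = model.get(words[-1])
    if not followers:
        return None  # The last word never appeared with a follower in training.
    return followers.most_common(1)[0][0]


# Hypothetical toy corpus, far smaller than anything a real LLM is trained on.
toy_corpus = [
    "never gonna give you up",
    "never gonna give in",
    "never gonna stop learning",
]

model = train_bigram_model(toy_corpus)
print(predict_next_word(model, "Never gonna"))  # prints "give"
```

In this toy corpus, "give" follows "gonna" twice and "stop" only once, so "give" is the prediction. An LLM performs a loosely analogous step at a vastly larger scale: it assigns a probability to every possible next token given the entire preceding context, not just the last word.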