15 Building a QA agent with LangGraph

This chapter covers

- Implementing the expert-emulating approach

- Implementing investigation through question answering

- Adapting and improving the system

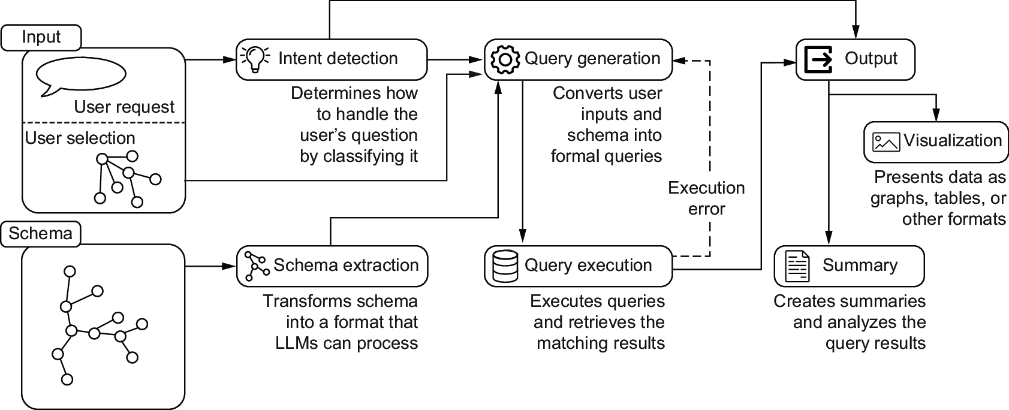

In this chapter, we’ll build a practical application that queries knowledge graphs using LLMs. It brings together the concepts and techniques from chapter 14, summarized in the mental model in figure 15.1, into one integrated solution. LangGraph serves as the orchestration framework that chains each stage into a single pipeline, and Streamlit provides the frontend that makes the system accessible to users. The book’s code repository contains the complete implementation and configuration files, so you can follow along and consult the code as we work through the concepts.

Figure 15.1 Overview of the system architecture introduced in chapter 14. Streamlit handles user input (questions and user selections) and output (visualizations and summaries), while LangGraph orchestrates the core pipeline.

15.1 Building the LangGraph pipeline

LangGraph is a library for building stateful, multi-actor applications powered by LLMs. It represents a workflow as a graph of nodes that read and update a shared state, with edges (including conditional ones) determining which node runs next. This makes it well suited to orchestrating the multistep reasoning and decision-making at the core of our KG querying pipeline.