5 Resource Management

This chapter covers

- How Kubernetes allocates the resources in your cluster

- Configuring your workload to request just the resources it needs

- Overcommitting resources to improve your performance-to-cost ratio

- Balancing Pod replica count with internal concurrency

Chapter 2 covered how containers are the new level of isolation, each with their own resources, and Chapter 3 covered how the schedulable unit in Kubernetes is a Pod (itself a collection of containers). This chapter covers how Pods are allocated to machines based on their resource requirements, and the information you need to give the system so that your Pod receives the resources it needs. Knowing how Pods are allocated to Nodes helps you make better architectural decisions around resource requests, bursting, overcommit, availability, and reliability.

5.1 Pod Scheduling

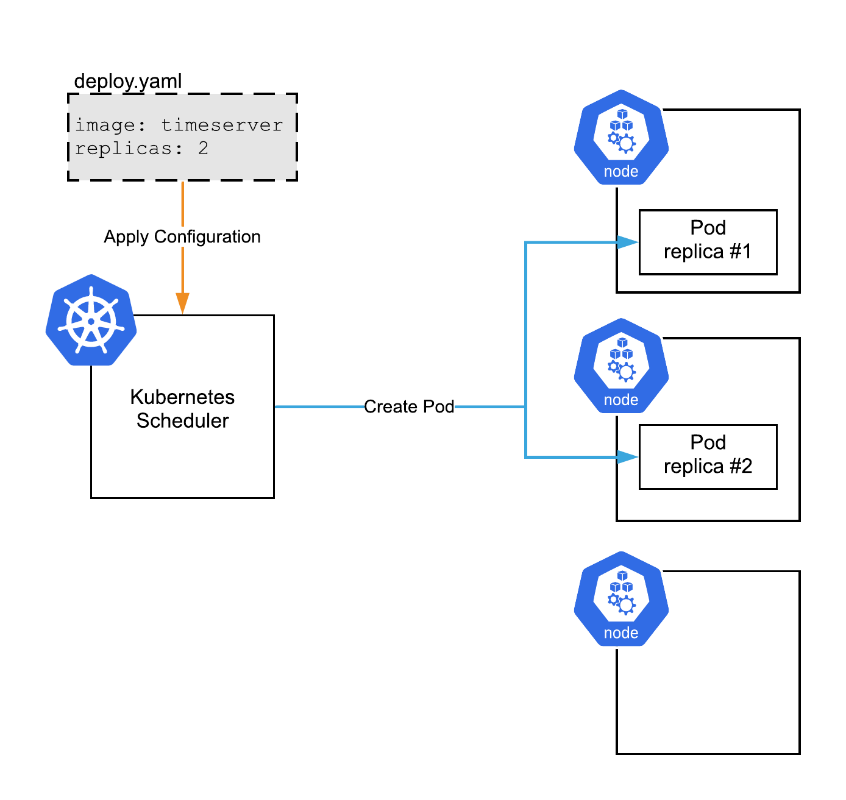

The Kubernetes scheduler performs resource-based allocation of Pods to Nodes and is the brains of the whole system. When you submit your configuration to Kubernetes (as we did in Chapters 3 and 4), it's the scheduler that does the heavy lifting of finding a Node in your cluster with enough resources, then tasks that Node with booting and running the containers in your Pods.

Figure 5.1 In response to the user applying configuration, the scheduler issues a Create Pod command to the Node.
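To make the idea of resource-based allocation concrete, here is a toy sketch of the scheduler's core decision. It is not how kube-scheduler is implemented: the real scheduler runs many filtering and scoring plugins and considers far more than CPU and memory. The `Node`, `PodRequest`, `fits`, and `schedule` names are hypothetical, and the "most free capacity left" scoring rule is just one simple heuristic chosen for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical, simplified model; these are not Kubernetes API types.

@dataclass
class Node:
    name: str
    cpu_millicores: int  # CPU still available for new Pods (1000 = 1 core)
    memory_mib: int      # memory still available for new Pods


@dataclass
class PodRequest:
    name: str
    cpu_millicores: int  # CPU the Pod asks for
    memory_mib: int      # memory the Pod asks for


def fits(node: Node, pod: PodRequest) -> bool:
    """Filter step: the Node must have enough remaining CPU and memory."""
    return (node.cpu_millicores >= pod.cpu_millicores
            and node.memory_mib >= pod.memory_mib)


def schedule(pod: PodRequest, nodes: List[Node]) -> Optional[str]:
    """Pick a Node for the Pod, or return None if nothing fits.

    A None result corresponds to a Pod that would stay Pending.
    """
    candidates = [n for n in nodes if fits(n, pod)]
    if not candidates:
        return None
    # Score step (illustrative heuristic): prefer the Node with the most
    # CPU left after placement, breaking ties on remaining memory.
    best = max(
        candidates,
        key=lambda n: (n.cpu_millicores - pod.cpu_millicores,
                       n.memory_mib - pod.memory_mib),
    )
    # Reserve the requested resources on the chosen Node.
    best.cpu_millicores -= pod.cpu_millicores
    best.memory_mib -= pod.memory_mib
    return best.name


if __name__ == "__main__":
    cluster = [Node("node-a", 500, 1024), Node("node-b", 2000, 4096)]
    print(schedule(PodRequest("web", 1000, 512), cluster))     # node-b
    print(schedule(PodRequest("worker", 1500, 256), cluster))  # None
    print(schedule(PodRequest("tiny", 250, 256), cluster))     # node-b
```

Note that the second Pod fits on no Node even though the cluster has 1,500 millicores free in total. Requests must fit on a single Node, which is one reason the size of your Nodes relative to your Pods matters.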