In part 1, we focused on aligning ML project work to business problems. This is, after all, the most critical aspect of making a solution viable. Those earlier chapters covered communication before and during development, up to a production release. This chapter covers project communication after release. We’ll cover how to present, discuss, and accurately report on the long-term health of ML projects, specifically in language and with methodologies that the business will understand.

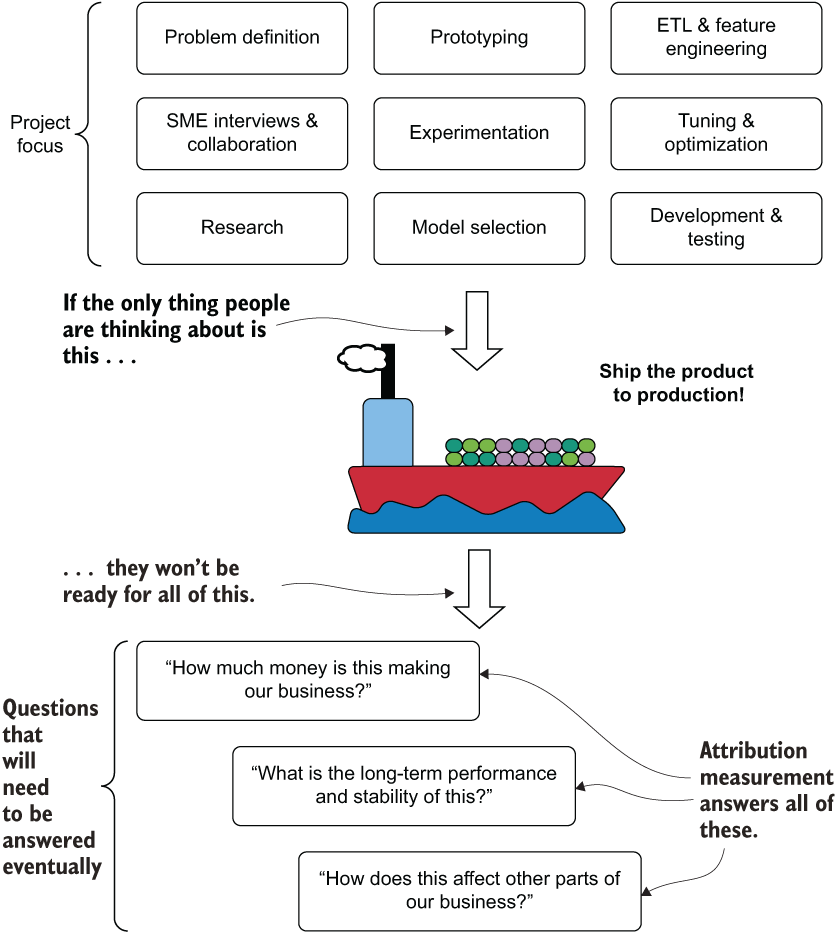

Discussions about model performance are complex. The business focuses on measurable attributes of business performance. The ML team focuses on measures of model efficacy, which reflect how strongly the model’s output correlates with a target variable. These differing goals implicitly create a language barrier, but a solution is available. If you frame communication with the business around the business’s own metrics, you can answer the question that business leaders really want answered: “How is this solution helping the company?” By ensuring that analytics are performed on the business metrics that the internal customer actually cares about, a DS team can avoid the situation that figure 11.1 illustrates.