chapter fifteen

In the preceding chapter, we focused on broad, foundational technical topics for successful ML project work. Building on those foundations, a project also needs monitoring and validation infrastructure to ensure its continued health and relevance. This chapter focuses on these ancillary processes and the infrastructure tooling that enable not only more efficient development but also easier maintenance of the project once it is in production.

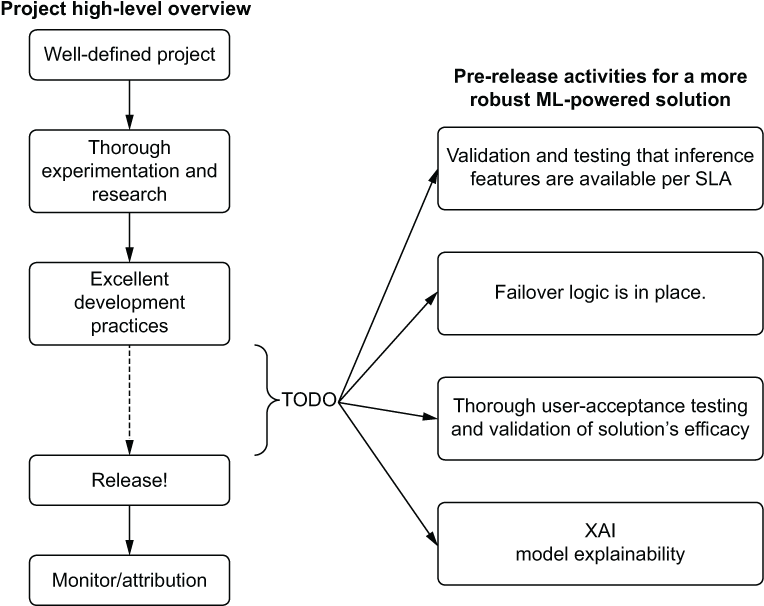

- Data availability and consistency verifications

- Cold-start (fallback or default) logic development

- User-acceptance testing (subjective quality assurance)

- Solution interpretability (explainable AI, or XAI)

To show where these elements fit within a project’s development path, figure 15.1 illustrates the post-modeling phase work covered in this chapter.