Chapter 3

This chapter covers

- Setting up the infrastructure backbone of your ML platform

- Containerizing applications with Docker

- Orchestrating deployments with Kubernetes

- Automating builds and deployments

- Implementing monitoring for production applications

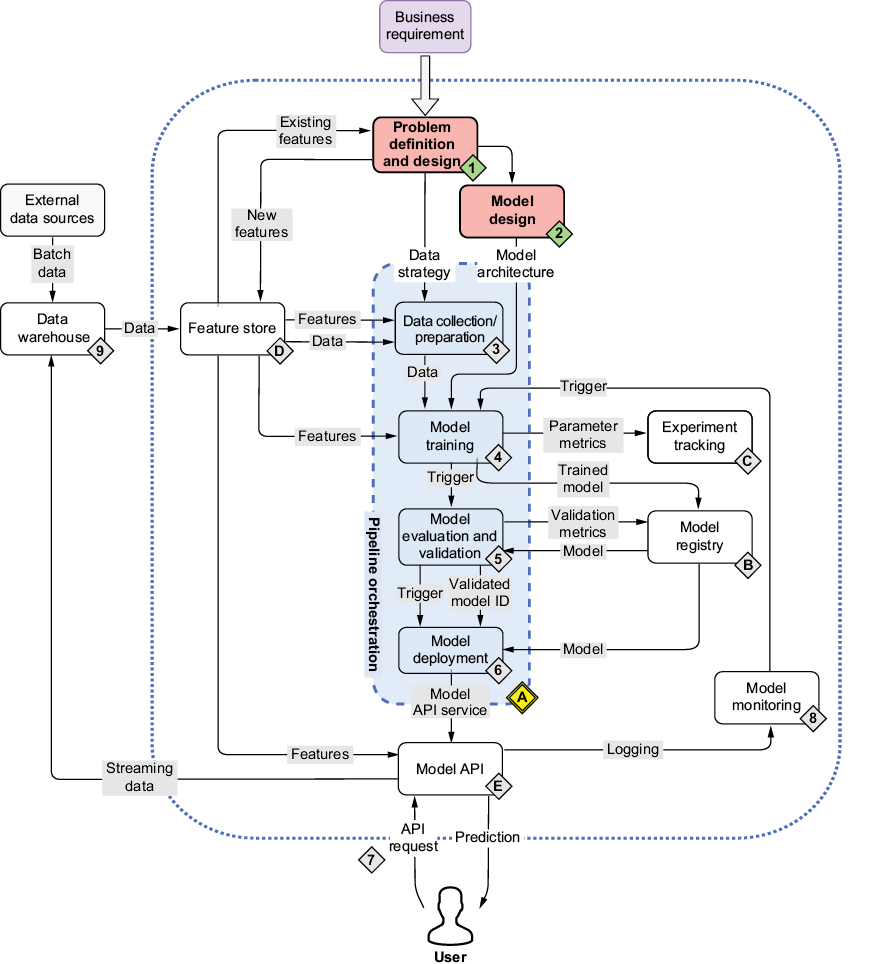

As an ML engineer, one of your primary responsibilities is to build and maintain the infrastructure that powers ML systems. Whether you’re deploying models, setting up pipelines, or managing a complete ML platform, you need a solid foundation in modern infrastructure tools and practices (figure 3.1).

Figure 3.1 The mental map now shifts focus to the foundation of the ML platform, primarily Kubernetes, along with key practices such as continuous integration/continuous deployment and monitoring, which are essential for deploying and maintaining ML systems.

In this chapter, we’ll tackle the essential DevOps tools and practices you need to build reliable ML systems. We’ll start with the basics and progressively build your knowledge through hands-on examples. By the end, you’ll understand how to do the following:

- Package applications consistently with Docker

- Deploy and manage applications on Kubernetes

- Automate workflows with continuous integration/continuous deployment (CI/CD)

- Monitor application health and performance

While these tools aren’t specific to ML, they form the foundation that enables us to build robust ML systems at scale. Let’s begin with Docker, the tool that helps us package our applications consistently.