Chapter 6. Evaluating models

This chapter covers

- Calculating model metrics

- Training versus testing data

- Recording model metrics as messages

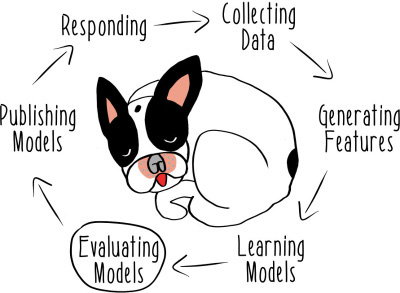

We’re over halfway through our exploration of the phases of a machine learning system (figure 6.1). In this chapter, we’ll consider how to evaluate models. In the context of a machine learning system, evaluating a model means assessing its performance before making it available for use in predictions. Along the way, we’re going to ask a lot of questions about models.

Much of the work of evaluating models may not sound necessary at first. If you’re in a hurry to build a prototype system, you might try to get by with something quite crude. But there’s real value in understanding the output of the upstream components of your machine learning system before you use that output, because the data in a machine learning system is intrinsically and pervasively uncertain.

When you decide whether to use a model to make predictions, you face that uncertainty head on. There are no answers you can look up elsewhere. You need to implement the components of your system so that they make the right decisions, or suffer the consequences.