2 Evaluating generated responses

This chapter covers

- Getting started with Spring AI evaluators

- Checking for relevancy

- Judging response correctness

- Applying evaluators at runtime

Writing tests against your code is an important practice. Not only can automated tests ensure that nothing is broken in your application, but they can also provide feedback that informs the design and implementation. Tests against the generative AI components in an application are no less important than tests for other parts of the application.

There’s only one problem. If you send the same prompt to an LLM multiple times, you’re likely to get a different answer each time. Because generative AI is non-deterministic, an "assert equals" approach that compares the response against a fixed expected string won’t work.

Fortunately, Spring AI provides another way to decide whether a generated response is acceptable: evaluators.

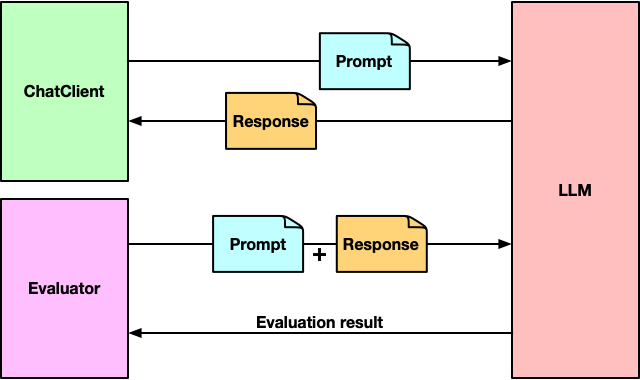

An evaluator takes the user text from the prompt that was submitted to the LLM, along with the content from the response, and decides whether the response content meets some criteria. Under the covers, an evaluator can be implemented in any way that suits the kind of evaluation it performs. But as illustrated in Figure 2.1, evaluators typically rely on an LLM to judge how well the response fits the submitted prompt.

Figure 2.1 Spring AI evaluators send a prompt and a generated response to the LLM to assess the quality of the response.
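
To make the flow in Figure 2.1 concrete, here is a minimal plain-Java sketch of the idea. It is an illustration only and does not reproduce Spring AI's actual evaluator types: the names `EvaluatorSketch`, `Request`, `Result`, and `llmJudge` are hypothetical, the judge prompt wording is an assumption, and the LLM is modeled as a simple `Function<String, String>` so the example can run without a model.

```java
import java.util.Locale;
import java.util.function.Function;

// Hypothetical names throughout: this models the concept, not Spring AI's API.
final class EvaluatorSketch {

    /** What gets evaluated: the user's text and the content the model generated. */
    record Request(String userText, String responseContent) {}

    /** The verdict: whether the response passed, plus the judge's raw reply. */
    record Result(boolean pass, String feedback) {}

    /** An evaluator turns a request into a verdict. */
    interface Evaluator {
        Result evaluate(Request request);
    }

    /** Builds an evaluator that delegates the judgment to an LLM (here, any String -> String function). */
    static Evaluator llmJudge(Function<String, String> llm) {
        return request -> {
            // Assumed prompt wording; a real judge prompt would be tuned to the criteria being checked.
            String judgePrompt = """
                    Does the RESPONSE answer the QUESTION? Reply YES or NO.
                    QUESTION: %s
                    RESPONSE: %s
                    """.formatted(request.userText(), request.responseContent());
            String reply = llm.apply(judgePrompt).strip();
            boolean pass = reply.toUpperCase(Locale.ROOT).startsWith("YES");
            return new Result(pass, reply);
        };
    }

    public static void main(String[] args) {
        // Stub standing in for a real model call so the sketch runs offline.
        Function<String, String> stubLlm =
                prompt -> prompt.contains("RESPONSE: Paris") ? "YES" : "NO";

        Evaluator evaluator = llmJudge(stubLlm);
        Result result = evaluator.evaluate(
                new Request("What is the capital of France?", "Paris is the capital of France."));
        System.out.println("pass = " + result.pass());
    }
}
```

The point of the sketch is the shape of the interaction: a test no longer asserts on the exact response text, but on the evaluator's pass/fail verdict, which stays meaningful even when the wording of the response changes between runs.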