This chapter covers

- Basics of prompt engineering

- Integrating external knowledge into prompts

- Helping language models reason and act

- Organizing the process of prompt engineering

- Automating prompt optimization

Prompts bring language models (LMs) to life. Prompt engineering is a powerful way to steer a model's behavior without updating its internal weights through expensive fine-tuning. Whether you're a technical expert or work in a nontechnical role on an AI product team, mastering this skill is essential for using LMs effectively. Prompt engineering lets you start working with language models immediately, so you can quickly explore what they can do and improve their output without deep technical expertise. With well-designed prompts, you can make LMs perform the specific tasks your application requires, delivering functionality tailored to your users' needs.

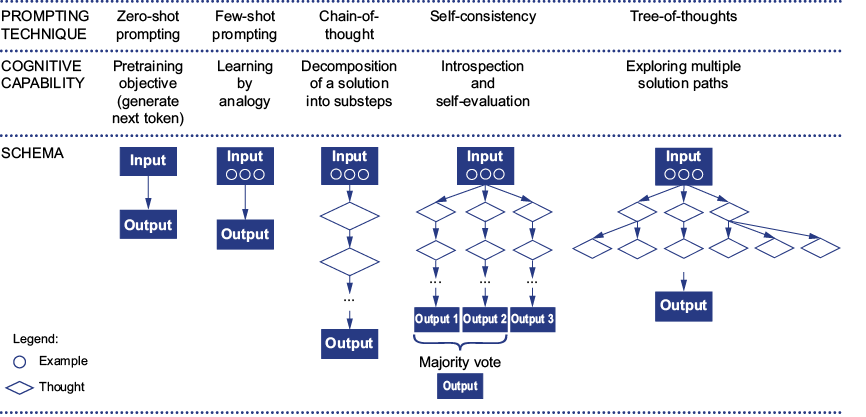

In this chapter, we'll follow Alex again as he explores prompt engineering to improve the content his app generates. He begins with simple zero-shot prompts and progresses to more advanced techniques, such as chain-of-thought (CoT) and reflection prompting. Each method draws on a different capability of LMs, from learning by analogy from examples to breaking complex problems down into manageable steps. Figure 6.1 shows this progression.

Figure 6.1 Overview of the most popular prompting techniques