8 Systems

This chapter covers:

- Computer architecture: Fundamental concepts that help us build “mechanical sympathy”, meaning intuition for how hardware executes our code.

- Linux: Core concepts that matter when diagnosing performance and reliability issues.

8.1 Computer Architecture

In this section, we will explore how modern CPUs execute instructions and why details such as pipelines, caches, and SMT matter to us as software engineers.

8.1.1 Instruction Pipelining

Rather than opening with a definition of instruction pipelining, let’s first look at how a CPU processes instructions. This will show us the problem that pipelining solves and why it is a fundamental technique in modern CPUs.

A CPU typically processes an instruction through four stages:

- Fetch: Fetch the instruction from memory.

- Decode: Decode the instruction to understand what operation is required.

- Execute: Execute the operation (e.g., arithmetic or logic operations).

- Write: Write the result back to memory or a register.

NOTE

This is a simplified view, but it is enough to understand the concept.

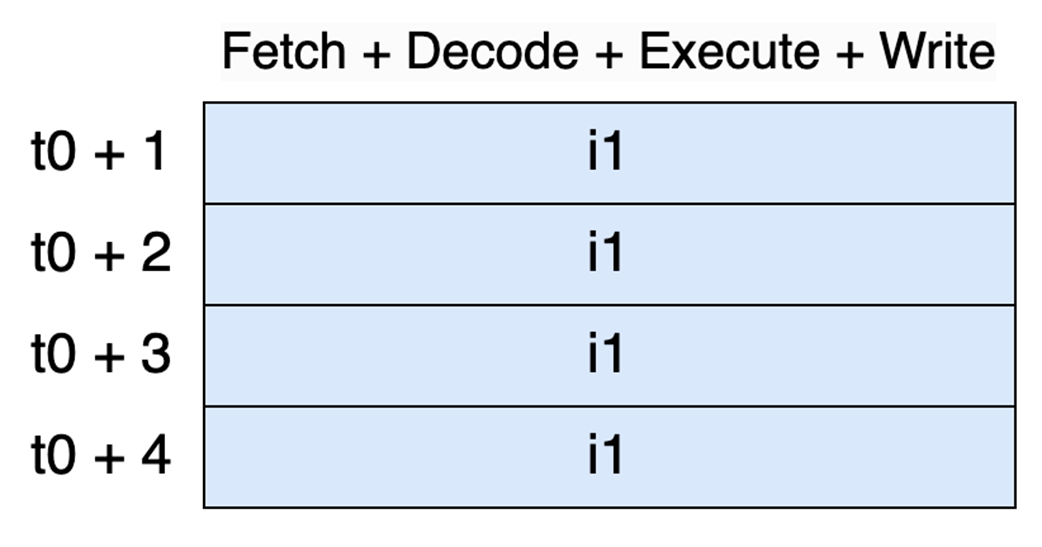

Assume that each of these stages takes one cycle to complete. Therefore, processing a single instruction would take four cycles (i1 stands for instruction 1):

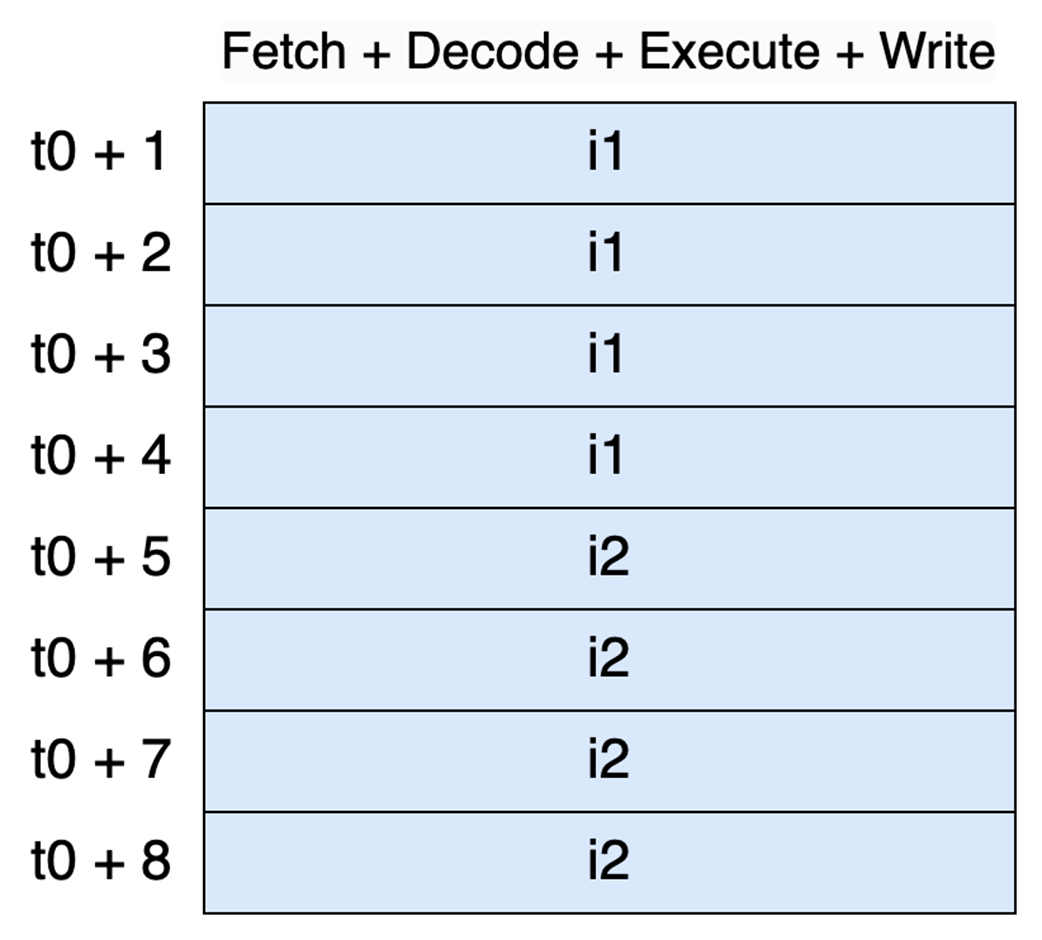

And processing two instructions would then take eight cycles:
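The same arithmetic can be reproduced with a small sketch. It assumes the simplified model used in this section: four stages, one cycle per stage, and each instruction finishing all of its stages before the next one starts. The names `STAGES`, `sequential_cycles`, and `sequential_timeline` are hypothetical and chosen only for illustration.

```python
# Simplified model from this section: four stages, one cycle each,
# and no overlap between instructions.
STAGES = ["Fetch", "Decode", "Execute", "Write"]


def sequential_cycles(num_instructions, cycles_per_stage=1):
    """Total cycles when instructions are processed one after another."""
    return num_instructions * len(STAGES) * cycles_per_stage


def sequential_timeline(num_instructions):
    """Return (cycle, instruction, stage) tuples for non-overlapping execution."""
    timeline = []
    cycle = 1
    for i in range(1, num_instructions + 1):
        for stage in STAGES:
            timeline.append((cycle, f"i{i}", stage))
            cycle += 1
    return timeline


# Show which stage is active in each cycle for two instructions.
for cycle, instruction, stage in sequential_timeline(2):
    print(f"cycle {cycle}: {instruction} {stage}")

print("total cycles:", sequential_cycles(2))
```

In this model, only one stage does useful work in any given cycle, so the total grows by four cycles for every additional instruction.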

If we were a CPU designer, how could we make things faster?