concept utility function in category machine learning

This is an excerpt from Manning's book Machine Learning with TensorFlow, Second Edition MEAP V08.

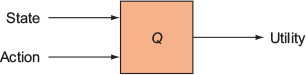

The utility of performing an action (a) at a state (s) is written as a function Q(s, a), called the utility function, shown in figure 13.4.

Figure 13.4. Given a state and the action taken, applying a utility function Q predicts the expected total reward: the immediate reward for moving to the next state, plus the rewards gained later by following an optimal policy.
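The decomposition in the caption (immediate reward plus later rewards under an optimal policy) can be sketched on a toy problem. The following is a minimal illustration in plain Python, not the book's code: the three-state chain, the action names, the reward values, and the discount factor `GAMMA` are all assumptions made up for this example.

```python
# Hypothetical 3-state chain: states 0 and 1 are ordinary, state 2 is terminal.
# Every name and number below is illustrative, not taken from the book.
GAMMA = 0.9  # assumed discount factor applied to later rewards

# TRANSITIONS[state][action] = (next_state, immediate_reward)
TRANSITIONS = {
    0: {"left": (0, 0.0), "right": (1, 0.0)},
    1: {"left": (0, 0.0), "right": (2, 1.0)},
}
TERMINAL = {2}


def q_values(transitions, terminal, gamma, sweeps=100):
    """Repeatedly apply Q(s, a) = reward + gamma * max over a' of Q(s', a')."""
    q = {s: {a: 0.0 for a in acts} for s, acts in transitions.items()}
    for _ in range(sweeps):
        for s, acts in transitions.items():
            for a, (s_next, reward) in acts.items():
                # Later rewards: best utility available from the next state,
                # or nothing if the episode ends there.
                future = 0.0 if s_next in terminal else max(q[s_next].values())
                q[s][a] = reward + gamma * future
    return q


Q = q_values(TRANSITIONS, TERMINAL, GAMMA)
best_action_from_start = max(Q[0], key=Q[0].get)
```

In this sketch, moving right from state 0 earns no immediate reward, yet it still scores higher than moving left because it leads toward the rewarding transition out of state 1.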

In this chapter, you’re going to model a task from human demonstrations while avoiding both imitation learning and the correspondence problem. Lucky you! You’ll achieve this by studying a way to rank states of the world with a utility function, which is a function that takes a state and returns a real value representing its desirability. Not only will you steer clear of imitation as a measure of success, but you’ll also bypass the complications of mapping a robot’s set of actions to that of a human (the correspondence problem).

In the following section, you’ll learn how to implement a utility function over the states of the world obtained through videos of human demonstrations of a task. The learned utility function is a model of preferences.

Figure 19.8. Videos of folding a shirt reveal how the cloth changes form through time. You can extract the first state and the last state of the shirt in each video as training data to learn a utility function that ranks states. The final state of the shirt in each video should receive a higher utility than the states near the beginning of that video.
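One way to picture learning from such (final state, initial state) pairs is a pairwise ranking loss that pushes the utility of the preferred state above the other. The sketch below is a plain-Python stand-in under stated assumptions, not the book's TensorFlow model: the linear utility, the two-number state features, the helper names `utility` and `train_ranker`, and the sample values are all hypothetical.

```python
import math
import random


def utility(weights, state):
    """Linear utility: maps a state's feature vector to one real number."""
    return sum(w * x for w, x in zip(weights, state))


def train_ranker(pairs, n_features, lr=0.1, epochs=200, seed=0):
    """Fit weights so that utility(better) > utility(worse) for each pair.

    Minimizes the pairwise logistic loss log(1 + exp(-margin)), where
    margin = utility(better) - utility(worse), by gradient descent.
    """
    rng = random.Random(seed)
    weights = [0.0] * n_features
    pairs = list(pairs)
    for _ in range(epochs):
        rng.shuffle(pairs)
        for better, worse in pairs:
            margin = utility(weights, better) - utility(weights, worse)
            # Derivative of log(1 + exp(-margin)) with respect to margin.
            grad_scale = -1.0 / (1.0 + math.exp(margin))
            for i in range(n_features):
                weights[i] -= lr * grad_scale * (better[i] - worse[i])
    return weights


# Hypothetical features per state: (visible cloth area, wrinkle score).
# Each pair is (last frame of a video, first frame of the same video).
training_pairs = [
    ((0.20, 0.10), (0.90, 0.60)),
    ((0.25, 0.20), (1.00, 0.50)),
    ((0.30, 0.10), (0.80, 0.70)),
]
weights = train_ranker(training_pairs, n_features=2)

folded_state = (0.22, 0.15)    # unseen state resembling a folded shirt
unfolded_state = (0.95, 0.55)  # unseen state resembling an unfolded shirt
folded_ranks_higher = utility(weights, folded_state) > utility(weights, unfolded_state)
```

Only the ordering of the utilities matters here; their absolute values carry no meaning, which is why the training signal is the difference between the two states in each pair.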

This is an excerpt from Manning's book Machine Learning with TensorFlow.

The utility of performing an action a at a state s is written as a function Q(s, a), called the utility function, shown in figure 8.4.
