Chapter 6. Scaling web roles

This chapter covers

- What happens to your web server under extreme load

- Scaling your web role

- The load balancer

- Session management

- Caching

One of the coolest things about Windows Azure is that you can dynamically scale your application. Whenever you need more computing power, you can just ask for it and get it (as long as you can afford it). The downside is that in order to harness such power, you need to design your application correctly. In this chapter, we’ll look at what happens when your application is under pressure and how you can use Windows Azure to effectively scale your web application.

Back in chapter 1, we talked about the challenges of handling and predicting growth for typical websites. In this section, we want to show you what happens to a web server when it’s under extreme load and how it handles itself. Using the Ashton Kutcher example from chapter 1, what would happen to your web server if Ashton Kutcher twittered about your little Hawaiian Shirt Shop and you suddenly found thousands of users trying to access your website at the same time?

In an ideal world, if your website (or service) reached its maximum operating capacity, all other requests would be queued and the application could handle the load at a graceful, yet throttled, rate. In the real world, your website is likely to explode into a ball of flames because the web server will continue to attempt to process all requests (regardless of the rate at which they occur). The processing time of the requests will increase, which results in a longer response time to the client. Eventually, the server will become flooded with requests and it won’t be able to service those requests anymore. The server is effectively rendered useless until the requests reduce in volume.

In this section, we’ll look at how a web server performs both under normal and extreme load by doing the following:

- Building a sample application that can run under extreme load

- Building an application that can simulate extreme load on your web server

- Looking at how your sample application responds when the server is under load

- Increasing the ability to process requests by scaling up or out

To do all this, you need to build a small ASP.NET web page sample application that you can use in all these scenarios.

The web page that you’re about to build will perform an AJAX poll that returns the time a request was made. Under normal operation, the page should return the current server time every 5 seconds. Figure 6.1 shows this AJAX web page adequately handling the load during normal operation.

Figure 6.1. An ASP.NET web page making an AJAX request and returning the server time every 5 seconds

Let’s take a look at how you can build this web page. The following listing gives the code for the ASP.NET AJAX web poll shown in figure 6.1.

The listing contains a standard ASP.NET AJAX update panel that holds a timer, which is set to poll the web server every 5 seconds. When the timer expires, the resultLabel is concatenated with the current date and time using the following code:

So far, everything is fine and dandy. Let’s now try to simulate what would happen if your server came under extreme load.

To simulate extreme load, you’re going to build a web page that will put your server on an extreme diet (you’re going to starve it). You’ll build an ASP.NET web form that will send the current thread to sleep for 10 seconds. If you then make enough requests to your web server, you should starve the thread pool, making your website behave like a server under extreme load.

The following code shows the markup for your empty ASP.NET page:

This code is for a simple web page; you don’t need your web page to display anything exciting. You just need the code-behind for your page to simulate a long-running request by sending the current thread to sleep for 10 seconds, as shown below:

Now that you have a web page that simulates long-running requests, you need to put your web server under extreme load by hammering it with requests.

To simulate lots of users accessing your website, create a new console application that will spawn 100 threads. Each thread will make 30 asynchronous calls to your new web page.

Note

Your mileage might vary! You might need to increase the number of threads or calls to effectively hammer the web server.

The following listing shows the code for the console application.

In listing 6.2, you define the URI of your long-running web page. Then you iterate through a loop 100 times, creating and spawning a new thread on each pass, so you spawn 100 threads in total.

Each thread executes the code defined within the lambda expression. The thread loops 30 times, making an asynchronous request to the web page on each iteration. In the end, you should be firing off around 3,000 (100 × 30) requests at your website in quick succession. How is the web server going to perform?
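As a rough illustration of the same structure, here's a Python sketch. It is not the book's listing. TARGET_URI is a hypothetical address for the sleeping page, and the asynchronous calls are modeled by handing each request to a thread pool so that the calling thread doesn't wait for it. The `request` parameter is an assumption added so that you can swap in a fake when experimenting.

```python
import threading
import urllib.request
from concurrent.futures import Future, ThreadPoolExecutor
from typing import Callable, List

# Hypothetical address of the page that sleeps for 10 seconds; adjust as needed.
TARGET_URI = "http://127.0.0.1:81/LongRunning.aspx"


def fetch(uri: str) -> int:
    """Issue one GET request, read the body, and return the HTTP status code."""
    with urllib.request.urlopen(uri, timeout=120) as response:
        response.read()
        return response.status


def hammer(uri: str, thread_count: int = 100, calls_per_thread: int = 30,
           request: Callable[[str], object] = fetch) -> List[Future]:
    """Spawn `thread_count` threads; each starts `calls_per_thread` requests
    without waiting for earlier ones to finish. Returns one future per call."""
    futures: List[Future] = []
    lock = threading.Lock()
    # One executor worker per call so that every request can be in flight at once.
    executor = ThreadPoolExecutor(max_workers=thread_count * calls_per_thread)

    def worker() -> None:
        for _ in range(calls_per_thread):
            future = executor.submit(request, uri)  # fire and move on
            with lock:
                futures.append(future)

    threads = [threading.Thread(target=worker) for _ in range(thread_count)]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    executor.shutdown(wait=True)  # block until every request has completed
    return futures
```

Calling `hammer(TARGET_URI)` with the defaults issues thread_count × calls_per_thread requests. Any request that fails is recorded in its future rather than raised, so you can count failures afterward with `f.exception()`. With the default values, this creates a large number of OS threads. Treat the numbers as a starting point to tune, as the earlier note suggests.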

In figure 6.1, you saw the response time of a simple AJAX website being polled every 5 seconds under normal load. In that example, there were no real issues and the page was served up with ease.

Figure 6.2 shows that same web application, this time coughing and spluttering as it struggles to cope with the simulated extreme load. The extreme load that this page is suffering from is the result of running your console application (listing 6.2) at the same time as your polling application.

Figure 6.2 shows that your polling web page is no longer consistently taking 5 seconds to return a result. At one point (between 18:40:33 and 18:41:04), it took more than 20 seconds to return the result. This type of response is typical of a web server under extreme load. Because the web server is under extreme load, it attempts to service all requests at once until it’s so loaded that it can’t effectively service any requests. What you need to do now is scale out your application.
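Why does the poll in figure 6.2 stall for 20 seconds or more? One rough way to reason about it is to treat the server as a fixed pool of worker threads that serves requests first come, first served. The following Python sketch models only that assumption. It isn't how IIS or the CLR thread pool actually schedule work: the real pool grows over time, and IIS queues requests separately. The function name poll_start_time and the pool size of 100 used below are hypothetical.

```python
import heapq


def poll_start_time(pool_size: int, queued_requests: int,
                    request_seconds: float) -> float:
    """Return when a poll request starts executing if it arrives just after
    `queued_requests` long-running requests, assuming a fixed pool of
    `pool_size` worker threads serving requests first come, first served."""
    if pool_size < 1:
        raise ValueError("pool_size must be at least 1")
    # Min-heap of the times at which each worker thread next becomes free.
    free_at = [0.0] * pool_size
    heapq.heapify(free_at)
    for _ in range(queued_requests):
        start = heapq.heappop(free_at)  # earliest free thread takes the request
        heapq.heappush(free_at, start + request_seconds)
    return free_at[0]  # earliest moment a thread is free for the poll


print(poll_start_time(100, 300, 10.0))  # 30.0
```

In this model, a poll that arrives behind 300 ten-second requests can't start for 30 seconds, even though the poll itself is trivial. Anything that adds worker capacity, whether a bigger box or more boxes, shortens that wait.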

If you’re hitting the limits of a single instance, then you should consider hosting your website across multiple instances for those busy periods (you can always scale back down when you’re not busy). Let’s take a quick look at how you can do that manually in Windows Azure.

By default, a Windows Azure web role is configured to run in a single instance. If you want to manually set the number of instances that your web role is configured to run on, set the instances count value in your service configuration file in the following way:

This configuration shows that your web role is configured to run in a single instance. If you need to increase the number of active web roles to two, you could just modify that value from 1 to 2:
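If you script your deployments, you might prefer to change this value programmatically rather than by hand. Here's a minimal Python sketch using the standard library's ElementTree. It assumes the .cscfg layout of this SDK era: a Role element containing an Instances element with a count attribute, under the namespace shown. The service name HawaiianShirtShop and the role name WebRole1 are hypothetical, so check all of these against your own file.

```python
import xml.etree.ElementTree as ET

# Assumed default namespace of ServiceConfiguration.cscfg in the SDK of this
# era; copy the xmlns value from your own file if it differs.
NS = "http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceConfiguration"


def set_instance_count(cscfg_xml: str, role_name: str, count: int) -> str:
    """Return a copy of the configuration with <Instances count="..."/>
    for `role_name` set to `count`."""
    if count < 1:
        raise ValueError("a role needs at least one instance")
    ET.register_namespace("", NS)  # serialize without an ns0: prefix
    root = ET.fromstring(cscfg_xml)
    for role in root.findall(f"{{{NS}}}Role"):
        if role.get("name") == role_name:
            instances = role.find(f"{{{NS}}}Instances")
            if instances is None:
                raise ValueError(f"role {role_name!r} has no <Instances> element")
            instances.set("count", str(count))
            return ET.tostring(root, encoding="unicode")
    raise KeyError(role_name)


# Hypothetical configuration used for illustration.
sample = f"""<ServiceConfiguration serviceName="HawaiianShirtShop" xmlns="{NS}">
  <Role name="WebRole1">
    <Instances count="1" />
  </Role>
</ServiceConfiguration>"""

print(set_instance_count(sample, "WebRole1", 2))
```

Parsing the XML, rather than using a text search-and-replace, ensures that only the named role's count changes when the file defines several roles.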

Because the number of instances that a web role should run on is stored in the service configuration file, you can modify the configured value at runtime via the Windows Azure portal. For more information about how to modify the service configuration file at runtime, see chapter 5.

Scaling out automatically

If you don’t fancy increasing your web role instances manually, but you want to take advantage of the ability to increase and decrease the number of instances depending on the load, you can do this automatically by using Windows Azure APIs.

You can use the diagnostics API to monitor the number of requests, CPU usage, and so on. Then, if you hit a threshold, use the service management APIs to increase the number of instances of your web role.

Alternatively, you could increase or decrease the number of instances, based on the time of day, using a model derived from your web logs. For example, you could run one instance between 3:00 a.m. and 4:00 a.m., but run four instances between 7:00 p.m. and 9:00 p.m. To use this kind of schedule, you create a Windows Scheduler job to call the service management API at those times.

In chapter 15, we’ll look at how you can automatically scale worker roles. The techniques used in that chapter also apply to scaling web roles.
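The decision logic behind both approaches in this sidebar can be small. The Python sketch below shows two rules:

- a time-of-day schedule that mirrors the example above;
- a simple CPU threshold rule.

The thresholds, the default count of 2, and the function names are hypothetical. Applying the result would still require a call to the service management API, which isn't shown.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class Window:
    start_hour: int  # inclusive, 0-23
    end_hour: int    # exclusive, 1-24
    instances: int


# Hypothetical schedule mirroring the sidebar: one instance 3-4 a.m.,
# four instances 7-9 p.m.
SCHEDULE = [Window(3, 4, 1), Window(19, 21, 4)]


def scheduled_instances(hour: int, schedule: Sequence[Window],
                        default: int = 2) -> int:
    """Instance count for a given hour; `default` applies outside all windows."""
    if not 0 <= hour <= 23:
        raise ValueError("hour must be between 0 and 23")
    for window in schedule:
        if window.start_hour <= hour < window.end_hour:
            return window.instances
    return default


def threshold_instances(current: int, avg_cpu_percent: float,
                        scale_out_at: float = 75.0, scale_in_at: float = 25.0,
                        minimum: int = 1, maximum: int = 10) -> int:
    """Add or remove one instance when average CPU crosses a threshold."""
    if avg_cpu_percent >= scale_out_at:
        return min(current + 1, maximum)
    if avg_cpu_percent <= scale_in_at:
        return max(current - 1, minimum)
    return current
```

Stepping one instance at a time and clamping to a minimum and maximum is a common way to avoid oscillating or runaway scaling. The numbers here are placeholders to be tuned from your own diagnostics data.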

You’ve increased the number of instances that host your web role. Now, if you were to rerun your AJAX polling sample and your console application, you would see your polling application responding every 5 seconds as if it were under normal load (rather than taking 30 seconds or so to respond).

Scaling out your web role is great if you designed your application to use this method, but what if you didn’t think that far ahead? Well, then, you can scale it up.

Uh-oh. You didn’t design your application to scale out. Maybe your application has an affinity to a particular instance of a web role (it uses in-process sessions, in-memory caches, or the like); in that case, you might not be able to scale out your web role instance. What you can do is run your web application on a bigger box by modifying the vmsize value in the service definition file:

By default, the web role is hosted on a small VM that has 1 GB of memory and one CPU core. In the example above, you’ve upgraded your web role to run under a medium VM, which means that your web role has an extra CPU core and more memory. For full details about the available VM sizes, see chapter 3, section 3.4.4.

By increasing the size of the VM, your ASP.NET AJAX web polling application should be able to handle the extreme load being placed on the server (or at least process requests a little quicker).

Try to avoid scaling up and scale out instead

Although scaling up will get you out of a hole, it’s not an effective long-term strategy. Wherever possible, you should scale out rather than up. When you scale up, you can only go as far as the largest VM size (and no further), and even that might not be enough for your most extreme needs.

Also, it’s not easy to dynamically scale your application up and down, based on load. Scaling out requires only a change to the service configuration file. Scaling up requires you to upgrade your application (because it requires a change to the service definition file).

Now you understand what happens when your server is placed under extreme load and how to effectively handle that situation by scaling your application. What you need to know now is how requests are distributed across multiple web role instances via the load balancer.

In this section, we’ll look at how the load balancer distributes requests across multiple servers and how it reacts under failover conditions. In the end, you’ll understand how your application will react and behave when you use multiple instances of your web role.

We’re going to look at two load balancers: the development fabric load balancer and the production load balancer. Before we do that, you’re going to build a sample application to demonstrate the effects of the load balancer coordinating requests between multiple servers.

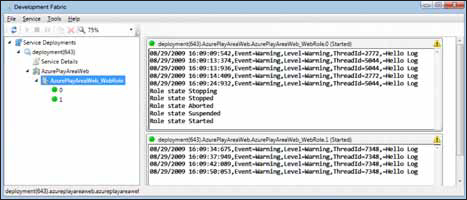

The sample application that you’re about to build is a web page that consists of a label that displays the name of the web server that processed the request, and a button that posts back to the server when it’s clicked. Every time the page is loaded (either on first load or when the button is clicked), the page writes a message to the diagnostic log. Figure 6.3 shows the web page and its log output in the development fabric.

Now we’ll walk you through the steps of creating this simple web role so that you can see the kind of output shown in figure 6.3.

To build the sample application shown in figure 6.3, create a new ASP.NET web role (if you’re unsure how to do this, refer to chapter 1). In the web role project that you just created, add a new ASP.NET web page called MultipleInstances.aspx.

Figure 6.3. A single instance of your web role running in the development fabric, writing out to the diagnostic log

Before you can run this web page, you must enable native execution in your service definition file in your Cloud Service project:

Now that you’ve added the page, add the following markup in MultipleInstances.aspx:

This code represents the page displayed in figure 6.3. There’s a label that displays the name of the machine and a button that will post back to the web server when it’s clicked.

If you look at figure 6.3, you can see that each time the page is loaded, the name of the web server that processed the request is displayed. To display the web server name in the lblMachineName label, add the following code to the Page_Load event of the ASP.NET page:

Finally, in order to write some data to the log as shown in figure 6.3, you need to write a message to the log on page load. Add the following code to the Page_Load event of the ASP.NET page:

Now, fire up the application in the development fabric. You’ll see a message written to the log displayed in the development fabric UI every time the page is loaded.

Great! You’ve got your application working. Now let’s go back and take a look at how the load balancers route requests.

In typical ASP.NET web farms, it’s not easy to simulate the load balancing of requests. With Windows Azure, a development version of the load balancer is provided so you can simulate the effects of the real load balancer. The development fabric load balancer helps you find and debug any potential issues that you might have in your development environment (yep, there’s a debugger, too). So let’s take a look at how the development fabric load balancer behaves.

If you were to fire up your web application in the development fabric with two web role instances configured, you would see two instances of your web role displayed in the UI. As in the live production system, the development fabric distributes requests between the instances of your web role: each time someone clicks the button on the web page, the request is sent to one of the web role instances. Figure 6.4 shows the two instances of the web role in the development fabric UI.

You can see two instances of your web role in the development fabric, but how is this represented on your development machine?

In chapter 4, you discovered that Windows Azure hosts the IIS 7.0 runtime in-process in the WaWebHost.exe process using the Hostable Web Core feature of IIS 7.0 rather than using the default w3wp.exe process. Because your web application is hosted by the WaWebHost.exe process, multiple instances of WaWebHost.exe are instantiated if you increase the number of instances of your web role that need to be hosted (one process per instance is instantiated). Figure 6.5 shows the process list of a development machine when it’s running multiple instances of the web role.

In figure 6.5 there are two instances of WaWebHost in the Processes list, one per web role instance.

Development fabric load balancer process

To help simulate the live production environment, the Windows Azure SDK includes a development load balancer that’s used to simulate the hardware load balancers that run in the Windows Azure data centers. Without the development fabric load balancer, it would be difficult to simulate issues that occur across multiple requests (you can’t attach a debugger in the live production environment, but you can in the development environment).

The process that simulates the load balancer in the development fabric is called DFLoadBalancer.exe, which is shown in figure 6.5. All HTTP requests that you make in the development fabric are sent to this process first and then distributed to the appropriate WaWebHost instance of the web role.

If you were to kill one of the WaWebHost instances, all requests made to the development fabric load balancer would be redistributed to the other instance of WaWebHost, and the killed WaWebHost process would be restarted automatically. By performing this test, you can simulate what will happen to your application if there’s a hardware or software failure in the live environment.

If you were to kill your DFLoadBalancer.exe process, the entire development fabric on your machine would be shut down and would require restarting.

When you’re testing your application in the development fabric to see how it responds when requests occur across multiple instances, you should check that your requests haven’t just been processed by a single instance of the process.

In the development fabric, the load balancer tends to favor a particular instance (per browser) unless that instance is under load. If you look at figure 6.4, you’ll see that although there are two instances of your web role running, the development load balancer seems to be routing all traffic to a single instance.

Because each browser window in the development fabric tends to have affinity with a particular web role instance, you should test your application using multiple browser instances. Figure 6.6 shows the outcome of a test in which both instances of the web role are being used by alternately clicking the button in two different browser instances.

Now that the page is running in two different browser instances, the requests are distributed across both instances of the web role in the development fabric.

Although spinning up multiple instances of your web application with multiple browser instances will allow you to test, in your development fabric, that your application will work with multiple roles, it won’t test the effects of requests being redistributed to another server. To test that your application behaves as expected in your development fabric when running multiple instances, there are two things that you can do: you can restart one of the WaWebHost instances, or you can test your application under load.

Although the development fabric load balancer creates affinity between your browser and an instance of WaWebHost, if you kill that WaWebHost instance, the affinity is broken. When the affinity is broken, the request is redistributed to another instance of WaWebHost, which creates a new affinity between the web browser and that instance of the web role. Figure 6.7 shows the changing of affinity between a browser and a web role instance when a WaWebHost.exe process is killed.

In the example shown in figure 6.7, two instances of the web role are running (instance 0 and instance 1), and there’s a single web browser. Instance 1 didn’t process any requests prior to the restarting of the WaWebHost.exe process of instance 0; the browser had affinity with instance 0. When instance 0 was restarted, instance 1 then processed all incoming requests and instance 0 no longer processed any requests from the browser because a new affinity was created.
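One way to internalize this behavior is a toy model. The Python class below is a hypothetical simulation of what figure 6.7 shows, not the development fabric’s real algorithm. It assumes three rules:

- Each browser sticks to one instance.
- Killing an instance breaks every affinity that points at it.
- The next request from an affected browser picks another instance round-robin.

```python
import itertools
from typing import Dict, Set


class StickyBalancer:
    """Toy model of the affinity behavior described above; not the actual
    development fabric algorithm. Each client sticks to one instance until
    that instance's process dies."""

    def __init__(self, instance_count: int) -> None:
        self.alive: Set[int] = set(range(instance_count))
        self.affinity: Dict[str, int] = {}
        self._order = itertools.cycle(range(instance_count))
        self._count = instance_count

    def _pick(self) -> int:
        # Round-robin over instances, skipping any that are down.
        for _ in range(self._count):
            candidate = next(self._order)
            if candidate in self.alive:
                return candidate
        raise RuntimeError("no web role instances are running")

    def route(self, client: str) -> int:
        instance = self.affinity.get(client)
        if instance is None or instance not in self.alive:
            instance = self._pick()
            self.affinity[client] = instance  # create (or re-create) affinity
        return instance

    def kill(self, instance: int) -> None:
        # Killing the process breaks every affinity that pointed at it.
        self.alive.discard(instance)
        self.affinity = {c: i for c, i in self.affinity.items() if i != instance}

    def restart(self, instance: int) -> None:
        self.alive.add(instance)


balancer = StickyBalancer(2)
print([balancer.route("browser") for _ in range(3)])  # [0, 0, 0]
balancer.kill(0)
balancer.restart(0)  # instance 0 comes back, but the affinity has moved
print([balancer.route("browser") for _ in range(3)])  # [1, 1, 1]
```

As in figure 6.7, instance 0 receives no further traffic from the browser after its restart, because the browser now has affinity with instance 1.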

This test is fairly important for you to perform in the development fabric. Restarting one of your WaWebHost instances helps to ensure that your application can truly run against multiple instances of your web role and can recover from a disaster. We’ll look at these situations in more detail when we look at session, cache, and local storage later in this chapter.

The second way to test that your application behaves correctly when it’s redistributed to multiple instances is to test your application under load. The best method of testing this scenario is to use a load testing tool such as Visual Studio Team System Web Load Tester. If you want to ensure that the development fabric load balancer redistributes requests under load, you can simulate this by modifying the sample application that you built earlier. Now, you’re going to simulate load by sending the thread to sleep for 10 seconds on page load. You can do this by modifying your Page_Load event to include the following code:

If you now open the page in multiple browser instances, you’ll see that, as a result of the increased load, the development fabric load balancer doesn’t maintain affinity and starts redistributing the requests more evenly.

Asynchronous AJAX requests

Although the development fabric load balancer keeps affinity between your browser instance and a web role (unless under load), it tends to redistribute requests evenly when performing AJAX requests.

In section 6.1, you created an ASP.NET AJAX web page that asynchronously calls the backend web page every 5 seconds and displays the time of each request. If you modify that sample to display the name of the server that processed each request and to write to the log, you’ll see that the development fabric load balancer distributes the requests evenly rather than maintaining affinity with a particular web role instance.

Now that you have an understanding of how the development fabric load balancer behaves and how you can effectively test how your application will behave in failover situations, let’s look at how things happen in the live environment.

We’ve spent quite a bit of time looking at the development fabric load balancer. Hopefully you have a good understanding of how the load balancer works and how it interacts with multiple instances of your web role. Although the development fabric load balancer doesn’t behave exactly like the load balancer in the real environment, there are some tricks you can use to gain confidence that your application will behave correctly in the live environment. That said, there are some cases in which your application might not behave as expected when it’s distributed across physical servers, which isn’t something you can easily test for in the development fabric. To ensure that your application will behave correctly before you make it live, you’ll need to perform some testing in the staging environment.

In chapter 2, we showed you how to deploy your application to the staging environment and how to move your staging web application to the production environment via the Windows Azure portal. In that chapter, you also learned that when you switch your application from staging to production, the application continues to run on the same server as before and the load balancers simply redirect traffic to the correct servers. If you want to, you can prove this by deploying the sample that you built earlier in this chapter to the staging environment (noting the machine name) and then switching over to the production environment (noting the machine name once again). Figure 6.8 shows your application running in the staging environment and in the live environment.

Figure 6.8. Your web application running in the staging environment (left) and in the production environment (right)

In figure 6.8, the browser on the left is pointed to the staging environment and is running on machine RD00155D3021EB. The browser on the right is your web application running in production after the switchover. Notice that your application is still running on the same server even though you’re now running in production (rather than in staging).

Because the staging servers will eventually become the production servers, you should be able to iron out, during your staging testing phase, any errors that might occur when you’re running multiple instances of your web role.

Nothing beats production environments

You can’t always be sure that an application that works in your development environment will work in your staging environment. On your development machine, WaWebHost runs under your user account; anything you can do, it can do. That’s not necessarily true with the production servers.

If you modify your ASP.NET web polling application from section 6.1 to display the machine name, and then run it in the staging environment, you get a result that’s similar to what’s shown in figure 6.9.

The requests are distributed between two different servers, which is great news. At the time of writing, the staging environment hosts each instance of your web role on a separate physical machine (a test that you can’t perform in your development environment). This behavior could change over time as Windows Azure matures and expands. The point is that you shouldn’t rely on any apparent behavior when designing your application. You should design it to be as stateless as possible, with the understanding that successive trips to the server won’t necessarily go to the same server.

Quirky affinitization

Both the staging and the production environments can be a little quirky in how they distribute requests between servers. In some instances, requests will be evenly distributed between all servers (for example, AJAX requests are distributed this way), but generally both environments will maintain affinity between a connection and a web role. If you’re testing whether your application works with multiple instances of your web role, you should capture the machine name in your request to ensure that your server can handle requests distributed across instances. Then run your sample applications in both the staging and production environments and monitor how the machine name changes.
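Checking the captured machine names by eye works for a handful of requests. For longer runs, a tiny tally helps. This Python sketch assumes you’ve already collected the machine name from each response into a list, in request order; how you collect them is up to you. The function names and the sample machine names are made up.

```python
from collections import Counter
from typing import Sequence


def requests_per_machine(machine_names: Sequence[str]) -> Counter:
    """How many responses each machine served."""
    return Counter(machine_names)


def machine_switches(machine_names: Sequence[str]) -> int:
    """How often consecutive responses came from different machines.
    Zero means the run stayed on one instance (affinity held throughout)."""
    return sum(1 for previous, current in zip(machine_names, machine_names[1:])
               if previous != current)


observed = ["RD-A", "RD-A", "RD-B", "RD-A"]  # made-up sample
print(requests_per_machine(observed))  # Counter({'RD-A': 3, 'RD-B': 1})
print(machine_switches(observed))      # 2
```

If the switch count stays at zero in staging and production, your multi-instance test hasn’t actually exercised more than one instance.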

In the development fabric, you tested how your application handles failover by killing the WaWebHost.exe process and then monitoring the application’s behavior. If you need to, you can perform the same test in the staging and production environments. In the live environment, the web role is also hosted in a process called WaWebHost.exe, so you can kill the process in the live environment using the following command (remember that native execution must be enabled for this to work):

Create a new web page with a button that executes the above command when it’s clicked. Then you can run the AJAX polling application in one browser, kill the WaWebHost process in another browser, and watch how the load balancer handles the redirection of traffic. Typically, on the live system (at the time of writing), all traffic is redirected to the remaining node. When the fabric controller (FC) is convinced that the failed role is behaving again, the load balancer resumes directing requests to that server.

If you want to test what happens when all roles are killed, you can execute the command on each role until they’re all dead. If no web roles are running in the live environment, your web application won’t process requests anymore and your end user will be faced with an error. Typically, your service will be automatically restarted and will be able to service requests again within about a minute.

That’s how requests are load balanced across multiple web roles. Now let’s take a look at those aspects of a website that are generally affected when running with multiple roles, namely:

- Session management

- Caching

- Local storage

Let’s start with session management.

HTTP is a stateless protocol. Each HTTP request is an independent call that has no knowledge of state from any previous requests. Using sessions is one method of persisting data so that it can be accessed across multiple requests. In ASP.NET, you can use the following methods of persisting data across requests:

- Sessions

- ViewState

- Cookies

- Application state

- Profile

- Database

Throughout this chapter (and future chapters), we’ll look at the methods of persisting data that are affected when you scale to multiple web roles. We won’t look at ViewState or cookies in this book; these methods aren’t used differently in a Windows Azure environment.

In this section, we’ll look at how running Windows Azure across multiple roles affects your ASP.NET session and the different types of session providers that you can use. Specifically, we’re going to talk about how a session works, and you’re going to build a sample session application. We’ll also discuss in-process sessions and Table storage sessions.

Although the concept of sessions is probably familiar to most of you, we want to recap the purpose of the session and the Session object.

A session is effectively a temporary store that’s created server-side for a limited window of time for a particular browser instance. Your ASP.NET web application can use this temporary store to store and retrieve data throughout the course of that session.

If we go back to the Hawaiian Shirt Shop example, you can store the shopping cart in a session. Using a session as a storage area lets you add items to the cart and still have access to the cart across multiple requests. When the session ends (typically when it times out after the user closes the browser), the session is destroyed and the data stored in it is no longer accessible. Similarly, if the user opens a new browser instance, a new independent session is assigned to that browser instance, and it has no access to session data associated with other browser instances (and vice versa). Figure 6.10 shows how a session is treated with respect to the browser instance and the web server.

In figure 6.10, browser instance 1 is associated with session ID 12345, in which the session key Foo has the value Bar; browser instance 2 is associated with session ID 12346, in which Foo has the value Kung. If you were to look at the output of browser instance 1 and browser instance 2, each would display the correct value from its associated temporary store.

When a browser makes a request to an ASP.NET website, it passes a session ID in a cookie as part of the request. This session ID is used to marry the request to a session store. For example, in figure 6.10, browser instance 1 has a session ID of 12345 and browser instance 2 has a session ID of 12346. If no session ID is passed in the request, then a new session is created and that session ID is passed back to the browser in the response, to be used by future requests.
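The session ID lookup described above boils down to a dictionary keyed by session ID. The following Python sketch is a simplified model of an in-memory store, not ASP.NET’s implementation. The class and method names are hypothetical, and expiry and timeouts are omitted.

```python
import secrets
from typing import Dict, Optional, Tuple

Session = Dict[str, object]


class InProcessSessionStore:
    """Simplified sketch of an in-memory session store: session data lives in
    a dictionary inside the worker process, keyed by session ID."""

    def __init__(self) -> None:
        self._sessions: Dict[str, Session] = {}

    def begin_request(self, session_id: Optional[str]) -> Tuple[str, Session]:
        """Return (session ID to send back in the cookie, session data)."""
        if session_id is None:
            # No session cookie on the request: create a new session and ID.
            session_id = secrets.token_hex(12)
        return session_id, self._sessions.setdefault(session_id, {})


store = InProcessSessionStore()
id_1, session_1 = store.begin_request(None)  # browser instance 1, first visit
session_1["Foo"] = "Bar"
id_2, session_2 = store.begin_request(None)  # browser instance 2, first visit
session_2["Foo"] = "Kung"
print(store.begin_request(id_1)[1]["Foo"])  # Bar
```

Because the dictionary lives inside one process, this model also shows why the data disappears when that process restarts or when a request lands on a different process.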

If you need to be able to access data beyond a browser session, then you should consider a more permanent storage mechanism such as Table storage or the SQL Azure Database.

Now that we’ve reminded you how sessions work in ASP.NET, you’re going to build a small sample application that you can use to demonstrate the effects of using sessions on your web applications in Windows Azure.

To get started, you need a web page where you can store a value in the session for later retrieval. Add this new ASP.NET web page, called SessionAdd.aspx, to an ASP.NET web role project. Add the following markup to the page:

The markup shows that the page consists of a text box and a button. Use the following code to set the value of the session key Foo to what the text box contains when the button is clicked:

Now that you can store some session data in your page, you need a page that you can use to display whatever is stored in Foo. For this example, you’ll use an ASP.NET AJAX polling timer (similar to the one that you used earlier) that will display whatever is stored in Foo every 5 seconds. Figure 6.11 shows how this web page looks (prior to running this page, we used the SessionAdd.aspx page to set the value of Foo to bar).

To create the page displayed in figure 6.11, add a new ASP.NET page to the project called SessionTimer.aspx that contains the following markup:

To display the result of Foo in the web page, add the following code-behind to the SessionTimer.aspx page:

Every 5 seconds, the SessionTimer.aspx page makes an AJAX request back to the web server, where the request is logged. Then the name of the computer, the time of the request, and the value stored in the session for Foo are returned, and all of them are displayed in the SessionTimer.aspx page.

Using this sample, you can see in both the development and live environments which machine processed the request and what the value of Foo is at any particular time.

By default, ASP.NET uses an in-process session state provider to store session data. The in-process session state provider stores all session data in memory that’s scoped to the web worker process (w3wp in standard web servers, or WaWebHost in Windows Azure). Let’s see how this session provider works.

If the worker process were to be restarted, you would lose any session data because that data is stored in memory. You can simulate this situation in your development environment using your SessionTimer page.

Tip

Before you attempt to lose your session, ensure that your ASP.NET web role is running with a single instance.

Go ahead and fire up the SessionAdd.aspx page that you created earlier and set the value of Foo to bar. After you set this value, open SessionTimer.aspx in the same browser instance. Let the session value display a few times and then kill the WaWebHost process. As you discovered earlier, if you kill the WaWebHost process, the development fabric automatically restarts the process, but all session data is lost. Figure 6.12 shows the result of killing the process.

In figure 6.12, bar was displayed up until 13:49:55; just after that point, you killed the WaWebHost process. From that point on, the session was lost and no data was returned for any subsequent request.
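To make the failure concrete, here is a toy C# model of why killing WaWebHost wipes the session. It is not how ASP.NET implements its in-process provider. It only assumes what the text states: the session data lives in the worker process's memory. The class name `InProcSessionHost` and its members are hypothetical.

```csharp
using System.Collections.Generic;

// Toy model of the default in-process session provider (hypothetical names):
// a dictionary owned by this object stands in for WaWebHost process memory.
public class InProcSessionHost
{
    // sessionId -> (session key -> value), held only in this "process"
    private Dictionary<string, Dictionary<string, string>> _sessions =
        new Dictionary<string, Dictionary<string, string>>();

    public void Set(string sessionId, string key, string value)
    {
        if (!_sessions.TryGetValue(sessionId, out var items))
        {
            items = new Dictionary<string, string>();
            _sessions[sessionId] = items;
        }
        items[key] = value;
    }

    // Returns null when the session or key doesn't exist, like Session["Foo"]
    public string Get(string sessionId, string key) =>
        _sessions.TryGetValue(sessionId, out var items) &&
        items.TryGetValue(key, out var value) ? value : null;

    // Killing WaWebHost: the development fabric starts a new process whose
    // memory starts out empty, so every stored session disappears.
    public void Restart() =>
        _sessions = new Dictionary<string, Dictionary<string, string>>();
}
```

Calling `Set("session1", "Foo", "bar")`, then `Restart()`, then `Get("session1", "Foo")` returns null. That matches the empty responses that follow 13:49:55 in figure 6.12.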

There are some issues with using the in-process session provider in Windows Azure, but this one is the real killer: if you’re using multiple web role instances, Windows Azure doesn’t consistently implement sticky sessions in the production environment. Consecutive requests from the same user aren’t guaranteed to be routed to the same web role instance.

As we noted earlier, the production systems generally maintain affinity with a web role but will sometimes evenly distribute requests among roles. In the case of AJAX applications, because requests are likely to be distributed across multiple roles, an in-process session state provider can’t be used; the other role won’t have access to session data stored in a previous request. Figure 6.13 shows your AJAX polling application running across multiple web roles.

In figure 6.13, you can see that any request made to the first role returns the session data, but any time the request is distributed to another web role, the session data stored in the first web role is no longer accessible. When you’re testing your applications in the development environment, you need to keep in mind that sticky sessions aren’t always implemented by Windows Azure.
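The following toy C# model reproduces the pattern in figure 6.13. It makes one simplifying assumption: a strict round-robin balancer with no affinity. This is the worst case. As noted above, the real load balancer usually keeps affinity, and its exact algorithm isn't described here. All type names are hypothetical, and each instance holds a single user's in-process session.

```csharp
using System.Collections.Generic;

// One web role instance with its own in-process session (single user for brevity)
public class RoleInstance
{
    private readonly Dictionary<string, string> _session = new Dictionary<string, string>();
    public string Name { get; }
    public RoleInstance(string name) { Name = name; }
    public void Set(string key, string value) => _session[key] = value;
    public string Get(string key) => _session.TryGetValue(key, out var v) ? v : null;
}

// Assumed round-robin balancer with no sticky sessions
public class RoundRobinBalancer
{
    private readonly IReadOnlyList<RoleInstance> _instances;
    private int _next;
    public RoundRobinBalancer(IReadOnlyList<RoleInstance> instances) { _instances = instances; }
    public RoleInstance Route()
    {
        var instance = _instances[_next];
        _next = (_next + 1) % _instances.Count;
        return instance;
    }
}

public static class PollingDemo
{
    // Stores Foo=bar through the balancer, then polls; returns what each poll saw
    public static List<string> Run(int polls)
    {
        var balancer = new RoundRobinBalancer(new[] { new RoleInstance("IN_0"), new RoleInstance("IN_1") });
        balancer.Route().Set("Foo", "bar");   // the SessionAdd.aspx postback lands on IN_0
        var results = new List<string>();
        for (int i = 0; i < polls; i++)
        {
            var instance = balancer.Route();  // one SessionTimer.aspx AJAX poll
            results.Add($"{instance.Name}: {instance.Get("Foo") ?? "(no session data)"}");
        }
        return results;
    }
}
```

`PollingDemo.Run(4)` alternates between `IN_1: (no session data)` and `IN_0: bar`. Only the instance that handled the original postback can see the value.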

If you need to run your web role on only a single instance at first, you can get better performance by running your application with in-process session management. You should consider this option if you’re unconcerned that a user’s session might be trashed if the web role is moved to another server (if, for example, the role instance was moved because of a hardware failure). If you need to scale out to multiple servers at a later date, you can always move to a session state provider that works across multiple web roles (such as Table storage).

Before you decide to run with the in-process session state provider, there’s one other issue that you should be aware of. If you have a large number of users on your website and they’re storing a large amount of session data, you might quickly run into Out of Memory exceptions. The web role host doesn’t free up any session data until those sessions expire. Remember that the smallest (default) VM size has only about 1.75 GB of memory, so you can run out of memory quite quickly.
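Here is a rough, hypothetical illustration. The user count and per-session size are invented, not measured. Suppose 5,000 users each have about 500 KB of session data in memory at the same time:

```latex
\[
5000 \times 500\,\text{KB} = 2{,}500{,}000\,\text{KB}
  = \frac{2{,}500{,}000}{1024 \times 1024}\,\text{GB} \approx 2.38\,\text{GB}
\]
```

That total already exceeds the memory of the smallest VM size, before counting anything else the WaWebHost process needs.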

If you want to test how your application responds to adding a large amount of session data, you can modify the SessionAdd.aspx page to include a button that will add a large amount of data to the session when clicked. Add the following markup to your SessionAdd.aspx page:

The following code will add a lot of data to the session when the button is clicked:

protected void btnLarge_Click(object sender, EventArgs e)

By repeatedly clicking this new button on your website, you’ll find that the memory usage of your WaWebHost.exe process increases until you start getting Out of Memory exceptions.

Note

In Windows Azure, the state server, or out-of-process session state provider, isn’t supported.

To maintain session state that can be accessed by multiple web roles, even when requests are evenly distributed between them, you need to use a persistence mechanism that all web roles can reach. In a typical ASP.NET web farm, SQL Server fills this role, mainly because ASP.NET has a built-in provider that supports it.
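The fix is structural: every instance reads and writes through the same store. The following C# sketch models that idea. A `ConcurrentDictionary` stands in for the shared backing store, which would be Table storage in Windows Azure or SQL Server in a classic web farm. `ISessionStore`, `SharedSessionStore`, and `WebRoleInstance` are hypothetical names, not part of ASP.NET or the Table-storage sample.

```csharp
using System.Collections.Concurrent;

// Hypothetical abstraction over a store that every instance can reach
public interface ISessionStore
{
    void Save(string sessionId, string key, string value);
    string Load(string sessionId, string key);
}

// Stand-in for Table storage / SQL Server: one copy of the data for all instances
public class SharedSessionStore : ISessionStore
{
    private readonly ConcurrentDictionary<(string, string), string> _rows =
        new ConcurrentDictionary<(string, string), string>();

    public void Save(string sessionId, string key, string value) =>
        _rows[(sessionId, key)] = value;

    public string Load(string sessionId, string key) =>
        _rows.TryGetValue((sessionId, key), out var value) ? value : null;
}

// A web role instance that keeps no session data in its own memory
public class WebRoleInstance
{
    private readonly ISessionStore _store;
    public string Name { get; }
    public WebRoleInstance(string name, ISessionStore store) { Name = name; _store = store; }

    public void HandleAdd(string sessionId, string value) => _store.Save(sessionId, "Foo", value);

    public string HandlePoll(string sessionId) =>
        $"{Name}: {_store.Load(sessionId, "Foo") ?? "(no session data)"}";
}
```

Because both instances share one `SharedSessionStore`, a value saved through `IN_0` is visible when the next poll lands on `IN_1`. The cost is that every read now goes to the external store, which is the performance trade-off measured later in this section.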

There’s a sample online for a Table-storage session state provider that you can use in your ASP.NET web applications.

To start using the Table-storage session state provider, you need to build the sample provider and then reference that provider in your project. You can get the sample provider at http://code.msdn.microsoft.com/windowsazuresamples.

To build the project, double-click the buildme.cmd file in the directory. After you’ve built the sample project, add a reference to the assembly in your web role project. Because the Table-storage session state provider is implemented as a custom provider, you’ll need to modify your web.config file to include the provider in the system.web settings:

The above configuration is for using the Table-storage and BLOB-storage providers in the development fabric. The creation of the appropriate tables in the Table-storage account is automatically taken care of for you by the provider. On deployment of your application, you’ll need to modify web.config to use your live Table-storage account.

If you now run your application in either the development fabric or the live environment, you’ll find that you can store and retrieve session data across multiple instances of your web role.

Ever-growing tables

One word of warning about the Table-storage provider: it doesn’t clean up after itself with respect to expired sessions. Because Table storage is a paid, metered service, we advise you to have either a worker role, a simulated worker role (discussed later in this chapter), or a background thread that cleans up any expired sessions from the table. If you don’t clean up this data, you’ll be paying storage costs for data that is no longer used.
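The cleanup job boils down to "delete every session row whose expiry has passed." The C# sketch below shows that logic against an in-memory list standing in for the table. It assumes each row records an expiry timestamp; the `SessionRow` shape is hypothetical, so check the sample provider's actual schema before adapting it. Against real Table storage you would query with a filter on the expiry property and delete the returned entities.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical row shape; the sample provider's real schema may differ
public class SessionRow
{
    public string SessionId { get; set; }
    public DateTime ExpiresUtc { get; set; }
}

public class SessionTableCleaner
{
    private readonly List<SessionRow> _table; // stand-in for the session table

    public SessionTableCleaner(List<SessionRow> table) { _table = table; }

    // Removes rows that expired at or before utcNow; returns how many were removed
    public int RemoveExpired(DateTime utcNow) =>
        _table.RemoveAll(row => row.ExpiresUtc <= utcNow);
}
```

A worker role (or background thread) would call `RemoveExpired(DateTime.UtcNow)` on a fixed interval. Running it every few minutes is usually enough, because expired rows only cost storage, not correctness.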

Although Table storage gives you session state that’s accessible across multiple server instances in a load-balanced environment, it does incur a performance hit. To test the performance of the live system, we modified the Table-storage provider to record the time that elapsed between requesting an item from the session and retrieving a response. Because session state is reloaded from the session provider on every page load, this test will allow you to see the impact of the Table-storage session state provider on your website. Figure 6.14 is a modified version of the SessionTimer.aspx page that you built earlier that also displays the time recorded to load the session during the page load.
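One simple way to capture that timing is to wrap the load call in a `System.Diagnostics.Stopwatch`. The helper below is a hypothetical sketch of that approach. The authors instrumented the provider itself, and this is not their code.

```csharp
using System;
using System.Diagnostics;

// Hypothetical helper: times any session-load call
public static class SessionLoadTimer
{
    public static T Measure<T>(Func<T> load, out TimeSpan elapsed)
    {
        var stopwatch = Stopwatch.StartNew();
        T result = load();          // e.g. the provider's read of the session data
        stopwatch.Stop();
        elapsed = stopwatch.Elapsed;
        return result;
    }
}
```

Logging `elapsed.TotalSeconds` alongside the machine name on each poll gives you figures comparable to those in figures 6.14 and 6.15.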

You can see in figure 6.14 that although you’re storing only one item in the session (bar in session key Foo), it still takes between 0.1 and 0.2 seconds to retrieve the session state. This load time is probably acceptable for most applications. Table storage is a good solution for session state in Windows Azure until a faster solution, such as a cache-based session provider, is available.

If you store a large amount of data in the session, you might find the performance of the Table-storage provider a little too slow at the moment. Figure 6.15 shows the SessionTimer.aspx page after we added a large amount of data to the session by clicking the large-session button (which we built earlier) twice.

In figure 6.15, you can see that the performance of the session provider seriously degrades when a large amount of data is stored in the session. In this example, it took 1 to 2 seconds just to load the session. In cases when you need minimal session load times or when you’re storing large amounts of data, you should consider another session provider solution (for example, SQL Azure Database or a cache-based session provider).

SQL Azure session state provider

In typical ASP.NET web farms, the SQL Server session state provider is the usual choice. Although this works, it’s not the best use of a SQL database: the provider doesn’t query across sessions, but simply uses the database as a central storage area.

To date, there isn’t a SQL Azure session state provider available (although this could change). Rather than trying to mess with SQL Azure to make it work with sessions, it’s probably best to either stick to Table storage, use a cache-based session provider, use an in-process provider, or architect your application so it’s not so reliant on sessions.

In any typical website, there’s usually some element of static reference data in the system. This static reference data might never change or might change infrequently. Rather than continually querying for the same data from the database, storing the data in a cache can provide great performance benefits.

A cache is a temporary, in-memory store that contains duplicated data populated from a persisted backing store, such as a database. Because the cache lives in memory, retrieving data from it is fast compared to database retrieval. For the same reason, if the host process or underlying hardware dies, the cached data is lost and the cache needs to be rebuilt from its persistent store.

Never rely on data being present in a cache. Always populate the cache from a persistent storage medium, such as Table storage, so that you can fall back to that medium if the data isn’t in the cache.
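This "check the cache, fall back to the store" rule is usually called the cache-aside pattern. The C# sketch below uses a `ConcurrentDictionary` as the in-memory cache. The loader delegate stands in for a read from Table storage or SQL Azure. The class name `CacheAside` is hypothetical, and this is not the ASP.NET `Cache` API.

```csharp
using System;
using System.Collections.Concurrent;

// Cache-aside sketch: serve from memory, load from the persistent store on a miss
public class CacheAside<TKey, TValue>
{
    private readonly ConcurrentDictionary<TKey, TValue> _cache =
        new ConcurrentDictionary<TKey, TValue>();
    private readonly Func<TKey, TValue> _loadFromStore; // e.g. a Table-storage query

    public CacheAside(Func<TKey, TValue> loadFromStore) { _loadFromStore = loadFromStore; }

    // On a miss, calls the loader and caches its result; never assumes the entry exists
    public TValue Get(TKey key) => _cache.GetOrAdd(key, _loadFromStore);

    // Simulates a process recycle: the cache empties and must be repopulated
    public void Clear() => _cache.Clear();
}
```

Under concurrent misses for the same key, `GetOrAdd` may invoke the loader more than once, although only one result is kept. For static reference data, an occasional duplicate read of the store is usually harmless.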

Note

For small sets of static reference data, a copy of the cached data resides on each server instance. Because the data resides on the actual server, there’s no latency with cross-server roundtrips, resulting in the fastest possible response time.

In most systems, there are typically two layers of cache: the in-process cache and the distributed cache. Let’s take a look at the first and simplest type of cache you can have in Windows Azure, which is the ASP.NET in-process cache.

As of the PDC 2009 release, the only caching technology available to Windows Azure web roles is the built-in ASP.NET cache, which is an in-process individual-server cache. Figure 6.16 shows how the cache is related to your web role instances within Windows Azure.

Figure 6.16 shows that server A and server B each maintain their own cache of the data that’s been retrieved from the data store (either from Table storage or from SQL Azure Database). Although there’s some duplication of data, the performance gains make using this cache worthwhile.

In-process memory cache

You should also notice in figure 6.16 that the default ASP.NET cache is an individual-server cache that’s tied to the web server worker process (WaWebHost). In Windows Azure, any data you cache is held in the WaWebHost process memory space. If you were to kill the WaWebHost process, the cache would be destroyed and would need to be repopulated as part of the process restart.

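Because the smallest VM size has only about 1.75 GB of memory, and the cache competes for it with everything else in the WaWebHost process (including any in-process session data), you should keep your server cache as lean as possible.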

Although in-memory caching is suitable for static data, it’s not so useful when you need to cache data across multiple load-balanced web servers. To make that scenario possible, we need to turn to a distributed cache such as Memcached.

Memcached is an open source caching provider that was originally developed for the blogging site LiveJournal. Essentially it’s a big hash table that you can distribute across multiple servers. Figure 6.17 shows three Windows Azure web roles accessing data from a cache hosted in two Windows Azure worker roles.
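Memcached servers don’t coordinate with each other. The client decides which server holds a given key, typically by hashing the key. The C# sketch below illustrates that with simple modulo hashing over a hypothetical list of cache servers. It is not the code in Microsoft’s accelerator or any particular Memcached client. Production clients commonly use consistent hashing instead, so that adding or removing a server remaps fewer keys.

```csharp
using System;
using System.Collections.Generic;
using System.Text;

// Picks the cache server for a key; every web role computes the same answer
public class CacheServerSelector
{
    private readonly IReadOnlyList<string> _servers;

    public CacheServerSelector(IReadOnlyList<string> servers)
    {
        if (servers == null || servers.Count == 0)
            throw new ArgumentException("At least one server is required", nameof(servers));
        _servers = servers;
    }

    // 32-bit FNV-1a over the UTF-8 bytes: stable across processes and machines,
    // unlike string.GetHashCode, which may differ between processes
    private static uint Fnv1a(string key)
    {
        uint hash = 2166136261;
        foreach (byte b in Encoding.UTF8.GetBytes(key))
        {
            hash ^= b;
            hash *= 16777619; // wraps modulo 2^32 in an unchecked context (the C# default)
        }
        return hash;
    }

    public string ServerFor(string key) =>
        _servers[(int)(Fnv1a(key) % (uint)_servers.Count)];
}
```

With two servers, a web role that stores `"myKey"` and another that later reads it both resolve to the same server, which is why the data is reachable regardless of which web role handles the request.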

Tip

In figure 6.17, you can see that your web roles can communicate directly with worker roles. In chapter 15, we’ll look at how you can do this in Windows Azure.

Microsoft has developed a solution accelerator that you can use as an example of how to use Memcached in Windows Azure. This accelerator contains a sample website and the worker roles that host Memcached. You can download this accelerator from http://code.msdn.microsoft.com/winazurememcached. Be aware that memcached.exe isn’t included in the download. Use version 1.2.1 from http://jehiah.cz/projects/memcached-win32/.

Hosting Memcached

In chapter 7, we’ll look at how you can launch executables (such as Memcached) from a Windows Azure role.

Although we’re using worker roles to host the cache, you could also host Memcached in your web role (which saves a bit of cash).

To get started with the solution accelerator, you just need to download the code and follow the instructions to build the solution. Although we won’t go through the downloaded sample, let’s take a peek at how you store and retrieve data using the accelerator.

If you want to store some data in Memcached, you can use the following code:

In this example, the value "Hello World" is stored against the key "myKey". Now that you have data stored, let’s take a look at how you can get it back (regardless of which web role you’re load balanced to).

Retrieving data from the cache is pretty simple. The following code will retrieve the contents of the cache using the AzureMemcached library:

In this example, the value "Hello World" that you set earlier for the key "myKey" would be returned.

Although our Memcached example is cool, you’ll notice that we’re not using the ASP.NET Cache object to access and store the data. The reason for this is that unlike the Session object, the Cache object (in .NET Framework 3.5 SP1, 3.5, or 2.0) doesn’t use the provider factory model; the Cache object can be used only in conjunction with the ASP.NET cache provider.

Using ASP.NET 4.0, you’ll be able to specify a cache provider other than the standard ASP.NET in-memory cache. Although this feature was introduced to support Microsoft’s new distributed cache product, Windows Server AppFabric Caching (which was code-named Velocity), it can be used to support other cache providers, such as Memcached. The configuration of a cache provider is similar to the configuration of a session provider. You could use the following configuration to configure your cache to use AppFabric caching:

Although the Windows Azure team will allow you to hook into any cache provider that you like, the real intention is for you to use a Windows Azure-hosted shared-cache role (that’s probably based on AppFabric caching).

Cache-based session provider

Now that you can see the benefits of a distributed cache, it’s worth revisiting session providers. As stated earlier, most ASP.NET web farms tend to use SQL Server as the session provider. With the increasing popularity of distributed caches, it’s now becoming more common for web farms to use a cache-based session provider rather than a SQL Server–based provider.

When a distributed cache is used in a web farm, it makes sense to leverage it whenever possible to maximize its value. Typically, distributed caches perform better than do databases such as SQL Server; you can improve the performance of your web application by using a faster session provider. Because session state is ultimately volatile data (not unlike cached data), a transactional data storage mechanism such as SQL Server is typically overkill for the job required.

If you want to use a Memcached-based session provider (your session data is stored in your Memcached instance), you can download a ready-made provider from http://www.codeplex.com/memcachedproviders.
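At its core, a cache-based session provider is a keyed store with a sliding timeout: every access pushes the session’s expiry forward, and ASP.NET’s default timeout is 20 minutes. The C# sketch below shows only that behavior. A `Dictionary` stands in for the distributed cache, and the clock is passed in so the expiry logic is easy to follow. `CacheSessionStore` is a hypothetical name, and this is not the CodePlex provider’s implementation.

```csharp
using System;
using System.Collections.Generic;

// Sliding-expiration session store; the Dictionary stands in for a distributed cache
public class CacheSessionStore
{
    private readonly Dictionary<string, (string Value, DateTime ExpiresUtc)> _entries =
        new Dictionary<string, (string Value, DateTime ExpiresUtc)>();
    private readonly TimeSpan _timeout;

    public CacheSessionStore(TimeSpan timeout) { _timeout = timeout; }

    public void Set(string sessionId, string value, DateTime utcNow) =>
        _entries[sessionId] = (value, utcNow + _timeout);

    // Returns null for missing or expired sessions; a hit slides the expiry forward
    public string Get(string sessionId, DateTime utcNow)
    {
        if (!_entries.TryGetValue(sessionId, out var entry)) return null;
        if (entry.ExpiresUtc <= utcNow)
        {
            _entries.Remove(sessionId); // expired: behave like a cache eviction
            return null;
        }
        _entries[sessionId] = (entry.Value, utcNow + _timeout);
        return entry.Value;
    }
}
```

Because expired entries simply disappear, a cache-based provider doesn’t accumulate stale rows the way the Table-storage provider does. The trade-off is that a cache node failure loses the sessions it held.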

OK, so you’ve probably learned everything that’s relevant about scaling your web applications from this chapter (and even from some of the earlier chapters). You should’ve come to realize that a single web server can’t cope with extreme load, and that the best thing you can do is design your web application to scale out. If, for whatever reason (and there isn’t a good one that we can think of), you can’t scale out, you can always host your website on a bigger box until you can.

In this chapter, we also looked at how Windows Azure distributes requests and how you can test that behavior in your own environment using the development fabric load balancer. Although you can’t test every scenario, you can get a sense of how your application will behave when you run under multiple instances. Finally, you learned how to handle sessions and caching across multiple servers (if you want to do that).

Now that we’re starting to look at some of the more advanced web scenarios, in the next chapter we’re going to take a peek at how you can use Windows Azure’s support for full-trust applications, how to build non-ASP.NET–based websites, and how to execute non-.NET Framework applications.