5 Cleaning and transforming DataFrames

This chapter covers

- Selecting and filtering data

- Creating and dropping columns

- Finding and fixing columns with missing values

- Indexing and sorting DataFrames

- Combining DataFrames using join and union operations

- Writing DataFrames to delimited text files and Parquet

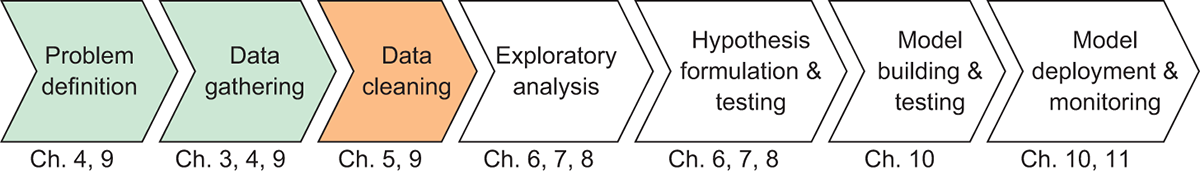

In the previous chapter, we created a schema for the NYC Parking Ticket dataset and successfully loaded the data into Dask. Now we’re ready to get the data cleaned up so we can begin analyzing and visualizing it! As a friendly reminder, figure 5.1 shows what we’ve done so far and where we’re going next within our data science workflow.

Figure 5.1 The Data Science with Python and Dask workflow

Data cleaning is an important part of any data science project because anomalies and outliers in the data can distort many statistical analyses. Left uncorrected, they could lead us to draw incorrect conclusions about the data and build machine learning models that don’t stand up over time. Therefore, it’s important that we clean the data as thoroughly as possible before moving on to exploratory analysis.
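To make the effect of an outlier concrete, here is a minimal sketch using only Python's standard library. The fine amounts are hypothetical values invented for illustration and are not taken from the NYC Parking Ticket dataset; the single extreme value stands in for a data-entry error.

```python
from statistics import mean, median

# Hypothetical fine amounts in dollars (illustrative only, not real data).
fines = [35, 45, 50, 65, 115]

# The same values plus one anomalous record, such as a mistyped amount.
fines_with_outlier = fines + [65000]

clean_mean = mean(fines)
skewed_mean = mean(fines_with_outlier)
clean_median = median(fines)
skewed_median = median(fines_with_outlier)

# The mean shifts by orders of magnitude; the median barely moves.
print("mean:  ", clean_mean, "->", skewed_mean)
print("median:", clean_median, "->", skewed_median)
```

A single bad record is enough to make the average fine look wildly larger than any typical ticket, which is why we want to find and handle such values before summarizing the data.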