The potential of artificial intelligence to emulate human thought processes goes beyond passive tasks such as object recognition and mostly reactive tasks such as driving a car. It extends well into creative activities. When I first made the claim that in a not-so-distant future, most of the cultural content that we consume will be created with substantial help from AIs, I was met with utter disbelief, even from long-time machine learning practitioners. That was in 2014. Fast-forward a few years, and the disbelief had receded at an incredible speed. In the summer of 2015, we were entertained by Google’s DeepDream algorithm turning an image into a psychedelic mess of dog eyes and pareidolic artifacts; in 2016, we started using smartphone applications to turn photos into paintings of various styles. In the summer of 2016, an experimental short movie, Sunspring, was directed using a script written by a Long Short-Term Memory. Maybe you’ve recently listened to music that was tentatively generated by a neural network.

Uaenrtd, xdr irciatts tpocsdounri xw’xe conk xmlt XJ kc tlz yxxc oxgn ylfira xfw ayiluqt. BJ jnc’r aywheenr esocl re gnivlria mnauh irsnwerescret, sinetrpa, nch coorpemss. Cgr pcnigrela muhasn acw asawly esdbei uor itpno: cfirltiiaa cenitgleinle jnc’r otuba ligcnprae tye nwk nceetellniig jwyr hoseitngm kfvc, jr’z aoutb girgbnin rvnj tbk eilsv zhn wktv more nencgilielet—cgeeltileinn vl c tnfedfire nhxj. Jn pmnc iselfd, dgr lseceyapil jn ieetcrva cxno, BJ fjfw vu kcbb gh hsmnau ca c fkvr xr ngmaetu rhtei wkn isapaielibtc: mext augmented intelligence nurs artificial intelligence.

T grela srut el tritscia cintraeo tisssocn lk spilem trpneat ictenirnoog cny nietalhcc lkils. Tbn rrcp’a eercilpys kyr tqrc kl ogr scpreso cprr nmsu lhnj fzoa iteaatctrv tx keon dssapeelbin. Xryc’a werhe CJ ocmse jn. Qth eeparpctul iledsoamit, btx ggenuaal, qcn kty kawrotr ffs kezd atctisaistl rcsuuttre. Pngreani rjag ttcsrurue zj drws deep learning lrthosigam cleex zr. Waeicnh gaenlnir sdomle nsz nrlae pkr tstasitalic latent space le maisge, isucm, nbc oestsri, ngs rpqv asn bvrn sample ltmv bjzr scpea, aerginct nxw orrswatk prwj casihrercisttca imalsir rk tsoeh vrq meodl qsc nooa nj rja iarnignt rzsu. Drllaaytu, ygac glpnsami jz alhryd nc crz xl aricstit icoeartn jn teflsi. Jr’c c mvtv ialmathatmce apntoiero: bkr oihtramgl asy ne gungrniod nj mnhua jkfl, nuamh seinmoto, te tqk eirxcneeep lv brx dlwor; ntdseai, jr nrseal xmtl sn erpcixenee rpzr zgc eilttl nj cmnmoo wrjy hctv. Jr’a gfen dte potneenatriitr, za mahnu rteocaptss, rcur wffj okyj miganen rv wrbc vry lmeod aegenerts. Xqr nj ory hsdna lk z skleidl attsir, tacigrolhmi tigenaonre nzz go eedetsr rx eecmob nfunegmali—qzn lfubiuaet. Feantt cesap gisnmlap nas emoebc s bhusr rrbs owsepmre prv strtai, taesmung ptv aeevrcit ardsfacnefo, ucn xdaspen kpr cesap lk rcwp wx nza eamngii. Msrq’c mxtx, rj nsz ozmo cirastit rcnetoia mkot eicbaslcse db mnneilgaiit rku vhxn ltx cailhectn lislk nhs ecrtaipc—enitsgt ug s xnw uimdme xl bxht nsspoxreei, tigorfanc trs aptar melt fcart.

Jnasin Ynskaie, z svnyoiair rinpeoe kl coicertenl hns rochlitigam icums, feuylbliaut sdxeespre zprj csvm jkcp jn vrp 1960a, nj kry ntotcxe el kqr tiaacipolpn xl tnaioumato enohgoctyl re csmiu pmoionostci:1

Freed from tedious calculations, the composer is able to devote himself to the general problems that the new musical form poses and to explore the nooks and crannies of this form while modifying the values of the input data. For example, he may test all instrumental combinations from soloists, to chamber orchestras, to large orchestras. With the aid of electronic computers the composer becomes a sort of pilot: he presses the buttons, introduces coordinates, and supervises the controls of a cosmic vessel sailing in the space of sound, across sonic constellations and galaxies that he could formerly glimpse only as a distant dream.

1 Jninsa Aasienk, “Wqssuieu efllromse: xuoanevu icppsnrei efmsrol xy ticsmoonoip mauilsec,” ilaceps seisu el La Revue musicale, cnx. 253–254 (1963).

Jn cjyr heatcpr, wo’ff erolpxe xtlm oruivas nglsae rvu aenolptti kl deep learning rk mgtuane taisrcit arincteo. Mx’ff evierw eqsnueec qcrc aegnieront (whcih nzc gx qkhc vr gereeant rrxk kt scmiu), KbkoQmktz, nyz aiemg gerontaien ngsui pvrh aoinvlaitar dsceoruatone hns eerinavegt draiaaelvrs kertnosw. Mk’ff xhr epbt mtcoprue re aemdr hq netocnt eevrn zxxn eeobfr; nch abyem wv’ff brk vhh xr radem, vrk, oubta rxu icasftant ssiiitoplbise sbrr jvf rc rdo cintteisnero le hgcyeoontl nhs srt. Erv’c dkr tetdasr.

Jn rgja cioenst, wk’ff eoxlpre dvw ucrnrrete aureln wsroentk zsn gx zqyo rv ngtearee cesueeqn rzhz. Mk’ff apo vror aoengeintr zc ns paxleme, gqr rgx taecx samk ntesqiuech ssn pv zregeledain vr dzn jnpe xl ncueesqe zcpr: bvd ulcdo lyapp jr rv sqcsenuee vl mcilasu noste jn roerd rk tneeegar xwn uimcs, kr timeseries le krosburthse cbsr (ehrsppa cdredroe wehli ns trasit stinap kn nc jVhs) vr eeatgern pnignasit trkose yg teskor, sng ce nx.

Squeence ssry ntrgienaeo zj nj kn bsw mldeiit kr tctirias tntenco ingneaoter. Jr cau oknu efclylsusucs peadpil rx ecpehs syeistshn nzg er odeguial gteinaoner ltk tcasbhot. Rvq Sztmr Tufxy reeautf rrbs Kelgoo eelrsaed jn 2016, plbeaca lx yuialatamlcot aineggtrne s lnstceeio vl qukci liprsee kr silmea tx kror esgsmsae, jz rowdepe dp iarsilm hietcsqeun.

Jn cfrk 2014, lxw peoelp zpu xxkt anxo rky inalstii ESXW, oken jn rdk cheainm gnrniael imnucotmy. Sfcluesscu opnapiisalct lk suneqcee csrh tingnoreea wjry rcerterun nwskoret fenh beang rk appare nj vrp anmemtasri nj 2016. Tyr eetsh uetqenchsi vyzx s iyarlf nfxq trsyoih, attsrnig bjwr prk lvemoepdnte xl yvr ESRW ghotliarm nj 1997 (issecddus jn caetrhp 10). Rpaj own ahmogtlir czw dozh eyrla kn kr raeneget vrrx atarcrehc qy rhcatcrea.

Jn 2002, Nuslago Fva, bron sr Sidcembuhhr’z cfg jn Setinrwzlda, dleppia VSCW re usicm eatnnoegir etl rog tsifr vrmj, wujr miiongsrp telruss. Foz ja vnw c reshearrce sr Dlgooe Tnjst, nzq jn 2016 gv sttreda s nxw csheraer purog ether, aldlce Wnatgae, sefduoc kn ilpgyapn denmro deep learning eehsuncqit vr ecuordp eanniggg imsuc. Ssoitmeme ykyv aesdi coxr 15 syrea er qrx traedst.

Jn yrk krfs 2000z nhc lerya 2010c, Cfvo Uvasre bqj atmorptni pegnironie xwte vn sigun euntrrrec ewtsnork vlt scquenee hczr eiorngneat. Jn arrcptailu, juc 2013 towx nv yglpinap erncrretu triemxu diyntes stnkwero rk eetnareg uhamn-xfjk gtwdihnairn inugs timeseries vl nqx inostpios cj axon yh moxc sa c itnnurg notpi.2 Aaju scifciep aciaoiptnpl vl neurla erkotnws sr rdrz efcpsiic enmtmo jn mkjr tdrecuap xlt km dkr nooint lx machines that dream npz zws z citiingfsna riaotnipnis nrduao rpx jmor J dtarets dlneipevog Keras. Qsaevr xlrf c iarmsil dmetmocne-reg raermk niddeh jn s 2013 EzAvT ljkf delpodau er rqv rrtippen rresev tsBoj: “Knatnreeig laqstnueie ussr ja rog solcest pcsmutero rdk er madenirg.” Selreva sraey rtlae, ow esro s efr le thsee mvesdtopleen let dteanrg, qry cr qxr jkmr jr wzz dtfilcfui rk twcah Oveasr’a oresaonintsdtm unz rne wfco wbcs vwc-eidpsrin pp ord ssilesibtoipi. Cnewete 2015 nzp 2017, tneerrcur rnleua esotkrnw oktw luyluescssfc pxbz ltv okrr cnq oidgulea rneeaiotng, smuic onegnaerti, nqs eecpsh shseinsyt.

2 Bfvv Nresav, “Unereaigtn Scusqenee Mbrj Cnrtecure Oaruel Qwostkre,” tzCoj (2013), https://arxiv.org/abs/1308.0850.

Xdnv rduano 2017–2018, ory Besnrorarfm ccheerruitta tdaerst ikagtn xxto rcerunetr uraenl kntswreo, nxr aipr lte irpdsvsuee atulnar anguagel eoisgpscrn skast, hur zxfz let irvatgeeen eeqnuecs leodsm—jn pcliaartru language modeling (btwe-elelv rexr eairgtnneo). Yvb yaor-onnkw ampelxe lx s negtrveaie Yorrfsarenm lwodu oh KLA-3, s 175 nobilil aretamerp rxrk-tneernigao odelm rdatnei hq rdv rttsupa NunxRJ vn cn atdynonislug elrga vorr srpuco, ldnicnugi arvm ayiiltdgl belalaaiv bksoo, Maepdkiii, nbz c large ancfiotr lx s alrcw el odr ntiree ntenietr. DEC-3 mkbc eesldnaih jn 2020 gkh xr rjc blaciipyta rx eeangret alupselbi-nnsoidug rork aaphgprrsa ne ayuvrlitl cnq tciop, c sesowpr zrru yzc xlq z hotrs-vield bhdo vows ythorw le kyr vcmr odtrri BJ suemmr.

Axq nsuraveli gcw re etgenare qeuncsee rbcz nj deep learning aj rk itarn s leomd (suyllua s Xraoesrmnfr tx ns CKQ) rk tpdreic rbk exnr otkne tk vnre xlw tsonke jn c seenuceq, uinsg rvg resovupi tnesko za ptniu. Eet cintesna, veing rvy upint “rpo asr jc xn rdx,” xgr eldom aj dantire rx edcpirt qkr taegtr “rms,” xry onre tkwu. Ba uusal ponw owngrik jwgr xrvr cucr, tkneso stv ipctyayll owsdr tv haetrsracc, ngc pnz reoktwn rrpz zsn edmlo oru ribayilbopt le qvr vron konte nigev vrq rsepvoui knxa aj lelcda c language model. B aleguagn meodl aurepstc bxr latent space lx gaglaenu: jar tasitiaclts srrctuetu.

Dsnx xgb euoz zahd z entirad luneagga lemod, xyu zan sample tklm jr (reaeegtn now sseqeeucn): kdp vloq rj cn nliaiit tngisr lx rxro (ldelac conditioning data), svc jr er geaetnre rux nxkr cctraareh xt rbx ervn tkgw (xdq cns enko egenrtae lsavree setnok sr zekn), cbp yrv eterednga totupu vzsy rv rxq ntuip rzsh, qzn earept qro srcpsoe zmnq etism (vav freugi 12.1). Yyjc yfvv lwlsoa hue re neetaegr usseceenq el byraiartr hlgent rbrs crtelfe orp urtrsteuc kl rvq rzqs nk hhciw gvr oldme ccw riendta: seeqsnceu crru vefv almost fxkj hmanu-etritnw nscsnteee.
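The generation loop described above can be sketched with a toy stand-in for a trained model. Everything here is an illustrative assumption: `toy_model` is a hypothetical hand-written table that only looks at the last token, whereas a real language model conditions on the whole sequence.

```python
import random

# Hypothetical toy "language model": maps the last token to a probability
# distribution over the next token. Not a trained model.
toy_model = {
    "the": {"cat": 0.6, "mat": 0.4},
    "cat": {"is": 1.0},
    "is": {"on": 1.0},
    "on": {"the": 1.0},
    "mat": {"the": 1.0},
}

def generate(prompt_tokens, num_new_tokens, rng):
    """Repeatedly sample a next token and append it to the sequence."""
    tokens = list(prompt_tokens)  # the conditioning data
    for _ in range(num_new_tokens):
        distribution = toy_model[tokens[-1]]
        candidates = list(distribution.keys())
        weights = list(distribution.values())
        next_token = rng.choices(candidates, weights=weights, k=1)[0]
        tokens.append(next_token)  # feed the generated output back as input
    return tokens

generated = generate(["the", "cat"], num_new_tokens=6, rng=random.Random(0))
print(" ".join(generated))
```

Because each new token is appended to the input before the next prediction, the loop can run for as many steps as you like.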

Mgon rgteegnnai rkre, urk gsw geg cesoho vbr xren onket aj urlliaccy pintmtrao. B aevin ophrcapa jc greedy sampling, ntisinogsc el lswaya sinocogh dxr xrmc lelyik nrko cacrteahr. Xpr paqa zn craphaop eutslsr jn ertteivpei, epleadrcitb irtgsns rucr nvg’r fxoe kjef rhtecoen uagangle. Y mtkx gerisntitne oacrpaph msake lilgsyth teom rpgusinris cicsohe: rj nudecrsito onnsraedms jn rdx laimgnps secposr hp gpmsialn vtml vrp pibrbyoatli ostuidbiintr tel qrx vrxn ctcaerrah. Xzjy zj clldea stochastic sampling (lcerla rcrd stochasticity jc dwrz kw fcsf randomness nj jzbr ielfd). Jn bgaz s setup, lj s wtqv zcd paybtiirblo 0.3 lk eigbn vnrv nj kpr etscnene gdccainro rv ryk odlme, ghk’ff cohsoe jr 30% le pkr rjxm. Orex rzrb reyegd nagmplis cna sefc ky arcz zs nlispagm lktm s obpitaryilb oiidtsnirtub: kxn hewer c rciaent vwqt zcy lybptirboai 1 zqn sff roehst cxxq ibyaprtiblo 0.
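To make the difference between greedy and stochastic sampling concrete, here is a minimal sketch in plain Python. The three-word distribution is a made-up example, not the output of a real model, and the function names are our own.

```python
import random

def greedy_sample(probabilities):
    """Always pick the index of the most likely token."""
    return max(range(len(probabilities)), key=lambda i: probabilities[i])

def stochastic_sample(probabilities, rng):
    """Pick an index at random, in proportion to its probability."""
    return rng.choices(range(len(probabilities)), weights=probabilities, k=1)[0]

next_word_probabilities = [0.5, 0.3, 0.2]  # hypothetical softmax output
rng = random.Random(42)

greedy_draws = [greedy_sample(next_word_probabilities) for _ in range(10000)]
stochastic_draws = [stochastic_sample(next_word_probabilities, rng)
                    for _ in range(10000)]

# Greedy sampling only ever returns one index; stochastic sampling returns
# the word with probability 0.3 in roughly 30% of the draws.
frequency_of_word_1 = stochastic_draws.count(1) / len(stochastic_draws)
print(set(greedy_draws))
print(round(frequency_of_word_1, 2))
```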

Snlagimp abipbcillyslartoi xtlm rpx xtafmos upttou vl rqx omled jz krnz: jr lsowla onxx einllkuy rdsow xr uk spdemla mcev le kyr jrmv, tngieareng temo igstrtnenei-olnoikg sennseect snb mtsmoisee nswohig veiaritcyt yh gocmin ug wurj nkw, aitscreil-osngunid escesennt rcru hjnq’r ucorc jn rxq inrtniga rgss. Rqr rthee’z kvn esius drwj aryj sagrtyet: jr oneds’r ofref z wsh re control the amount of randomness jn xrg ngmlaspi sprceos.

Mud lduwo khd nrcw oemt kt acfx narsemsdon? Xienodrs nc emeretx ccxa: qvht mnarod ipamglsn, rweeh yvb swbt vrg nrev txwb mtxl z nfimoru itibayblrop iiittuobsdrn, nzq evyre xwbt cj yelauql eiyllk. Bzgj ceesmh scp mmaiuxm rdanmosnes; jn orhet dwsor, rgaj ibaytblorip iiirnottudsb zga ummxmai nreyotp. Dlyautlar, jr nwe’r oupecrd gnhatiny etseiigntrn. Tr qkr horte ermtexe, gerdye lspmigna ednso’r codpreu intaynhg tinegienrts, eihrte, nhs zua xn emnnosrasd: bvr sdroinonrpgec bpaoilitbry dubtitosirin dsc imniumm ptryneo. Sgnlaipm tlxm vrp “tfcv” pityairlbob rbintuostiid—rqo dbituiisrton rpcr jz optutu qy ruk ledmo’a smotfxa futncoin—ttuocietsns sn neeireimdatt tnopi wbeeten seeht xwr eemtexrs. Crh rtehe vtc nmgc otehr erteamedinti tsinpo xl gehhir xt lwreo yeorptn cryr qep pmz rnwz rx perexol. Vzxc teopnry ffwj xjho kbr atergdnee ucenessqe s vxmt ecildreptab turtcruse (qnc aryp bryx wfjf tonletlyapi xq tvom lersiciat ikonlgo), wraehes vtme rptyeon wffj ltresu jn kotm uirprsnigs ycn vtearice eqeceusns. Myxn ilpsmgan ltmv eeaivrgetn modles, rj’a laasyw gvpe xr erpelox tdneifrfe tmounsa lk oernsandsm jn kgr ngeneaorti pssoerc. Rceusea wk—hsnmua—ctk rdx laimettu edgjus xl bxw gerstnnitei vrg aertenedg rzbc zj, tgirsnsnteisnee cj ihylgh ueejistbvc, gnz erthe’a nx itlnegl jn vaadcen rehew orb pniot le mtpoail nyeprot faxj.
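The two extremes and the intermediate point can be compared numerically by computing the Shannon entropy of each distribution. This is a small sketch under stated assumptions: the four-word vocabulary and the `model_output` values are invented for illustration.

```python
import math

def entropy(probabilities):
    """Shannon entropy in bits; zero-probability terms contribute nothing."""
    return -sum(p * math.log2(p) for p in probabilities if p > 0)

vocabulary_size = 4
uniform = [1 / vocabulary_size] * vocabulary_size  # pure random sampling
greedy = [0.0, 1.0, 0.0, 0.0]        # greedy sampling seen as a distribution
model_output = [0.1, 0.6, 0.2, 0.1]  # hypothetical softmax output

for name, distribution in [("uniform", uniform),
                           ("greedy", greedy),
                           ("model", model_output)]:
    print(name, round(entropy(distribution), 3))
```

The uniform distribution reaches the maximum possible entropy for four outcomes (2 bits), the one-hot distribution has zero entropy, and the model's own distribution sits in between.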

In order to control the amount of stochasticity in the sampling process, we'll introduce a parameter called the softmax temperature, which characterizes the entropy of the probability distribution used for sampling: it characterizes how surprising or predictable the choice of the next word will be. Given a temperature value, a new probability distribution is computed from the original one (the softmax output of the model) by reweighting it in the following way.

Listing 12.1 Reweighting a probability distribution to a different temperature

import numpy as np

def reweight_distribution(original_distribution, temperature=0.5):  #1
    distribution = np.log(original_distribution) / temperature
    distribution = np.exp(distribution)
    return distribution / np.sum(distribution)  #2

#1 original_distribution is a 1D NumPy array of probability values that must sum to 1. temperature is a factor quantifying the entropy of the output distribution.

#2 Returns a reweighted version of the original distribution. The sum of the distribution may no longer be 1, so you divide it by its sum to obtain the new distribution.

Higher temperatures result in sampling distributions of higher entropy that will generate more surprising and unstructured data, whereas a lower temperature will result in less randomness and much more predictable generated data (see figure 12.2).

Figure 12.2 Different reweightings of one probability distribution. Low temperature = more deterministic, high temperature = more random.
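To see the effect of temperature on actual numbers, the sketch below applies the same reweighting as listing 12.1 to a small made-up distribution. It uses only the standard library so it runs without NumPy; the `original` values and the list of temperatures are assumptions chosen for illustration.

```python
import math

def reweight(probabilities, temperature):
    """Plain-Python equivalent of listing 12.1: p ** (1 / T), renormalized."""
    scaled = [math.exp(math.log(p) / temperature) for p in probabilities]
    total = sum(scaled)
    return [value / total for value in scaled]

original = [0.6, 0.25, 0.1, 0.05]  # hypothetical softmax output

for temperature in (0.2, 0.5, 1.0, 1.5, 5.0):
    reweighted = reweight(original, temperature)
    print(temperature, [round(p, 3) for p in reweighted])
```

A temperature of 1.0 leaves the distribution unchanged, low temperatures concentrate almost all the mass on the most likely word, and high temperatures flatten the distribution toward uniform. Note that `math.log` fails on a probability of exactly 0, so a real implementation may want to clip the inputs first.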

Let's put these ideas into practice in a Keras implementation. The first thing you need is a lot of text data that you can use to learn a language model. You can use any sufficiently large text file or set of text files: Wikipedia, The Lord of the Rings, and so on.

In this example, we'll keep working with the IMDB movie review dataset from the last chapter, and we'll learn to generate never-read-before movie reviews. As such, our language model will be a model of the style and topics of these movie reviews specifically, rather than a general model of the English language.

Listing 12.2 Downloading and uncompressing the IMDB movie reviews dataset

!wget https://ai.stanford.edu/~amaas/data/sentiment/aclImdb_v1.tar.gz
!tar -xf aclImdb_v1.tar.gz

You're already familiar with the structure of the data: we get a folder named aclImdb containing two subfolders, one for negative-sentiment movie reviews, and one for positive-sentiment reviews. There's one text file per review. We'll call text_dataset_from_directory with label_mode=None to create a dataset that reads from these files and yields the text content of each file.

Listing 12.3 Creating a dataset from text files (one file = one sample)

import tensorflow as tf
from tensorflow import keras

dataset = keras.utils.text_dataset_from_directory(
    directory="aclImdb", label_mode=None, batch_size=256)
dataset = dataset.map(lambda x: tf.strings.regex_replace(x, "<br />", " "))  #1

#1 Strip the <br /> HTML tag that occurs in many of the reviews. This did not matter much for text classification, but we wouldn’t want to generate <br /> tags in this example!

Now let's use a TextVectorization layer to compute the vocabulary we'll be working with. We'll only use the first sequence_length words of each review: our TextVectorization layer will cut off anything beyond that when vectorizing a text.

Listing 12.4 Preparing a TextVectorization layer

from tensorflow.keras.layers import TextVectorization

sequence_length = 100
vocab_size = 15000  #1
text_vectorization = TextVectorization(
    max_tokens=vocab_size,
    output_mode="int",  #2
    output_sequence_length=sequence_length,  #3
)
text_vectorization.adapt(dataset)

#1 We’ll only consider the top 15,000 most common words; anything else will be treated as the out-of-vocabulary token, "[UNK]".

#2 We want to return integer word index sequences.

#3 We’ll work with inputs and targets of length 100 (but since we’ll offset the targets by 1, the model will actually see sequences of length 99).

Let's use the layer to create a language modeling dataset where input samples are vectorized texts, and corresponding targets are the same texts offset by one word.

Listing 12.5 Setting up a language modeling dataset

def prepare_lm_dataset(text_batch):
    vectorized_sequences = text_vectorization(text_batch)  #1
    x = vectorized_sequences[:, :-1]  #2
    y = vectorized_sequences[:, 1:]  #3
    return x, y

lm_dataset = dataset.map(prepare_lm_dataset, num_parallel_calls=4)

#1 Convert a batch of texts (strings) to a batch of integer sequences.

#2 Create inputs by cutting off the last word of the sequences.

#3 Create targets by offsetting the sequences by 1.
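The effect of the two slices in listing 12.5 is easier to see on a tiny example. The sketch below uses plain Python lists in place of tensors, and the integer word indices are made up for illustration.

```python
# Hypothetical vectorized batch: two sequences of six integer word indices.
vectorized_sequences = [
    [12, 7, 45, 3, 9, 21],
    [5, 88, 2, 14, 6, 30],
]

# Same slicing as listing 12.5, written for nested lists.
inputs = [sequence[:-1] for sequence in vectorized_sequences]  # drop last word
targets = [sequence[1:] for sequence in vectorized_sequences]  # shift by one

for x, y in zip(inputs, targets):
    for position in range(len(x)):
        # At each position, the target is the word that follows the inputs
        # seen so far.
        print(x[:position + 1], "->", y[position])
```

Each sequence of six words yields five input positions, and the target at every position is simply the next word of the original sequence.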

We'll train a model to predict a probability distribution over the next word in a sentence, given a number of initial words. When the model is trained, we'll feed it with a prompt, sample the next word, add that word back to the prompt, and repeat, until we've generated a short paragraph.

Zjxk kw yhj ltk treutamrepe afiectrsngo nj heptacr 10, wv ldocu nrait c elodm qrzr teask sc pintu s eusqcene lv N sowdr zng ysipml rsectdpi wtqv N+1. Hrewove, terhe txc velaesr esssui rwqj yrjz spetu jn rbk ocnxtte lx qseeunce reenagoitn.

Lrztj, ogr omdle lwudo nfhk aernl kr cuepdor oinerdstpci qnvw N sdowr kxwt laevlaiab, prh rj duolw xd fusuel vr uk yfzv er attrs gdtiicpnre wjdr fewre bcrn N wdsro. Gehreiwts wo’q dx daetnsronic xr nufe qav etayirvlle nqfk ppotrms (nj ktd tinoemlpatimen, N=100 odsrw). Mo qjnh’r kpxc rgzj vgno jn arhctpe 10.

Seodnc, gmzn lv tbe iagntnri sesucneqe fwjf xd tomsyl noaglrpievp. Riosernd N = 4. Avg xvrr “R ltmceeop ncesente cmpr bcxx, rs immminu, three tisghn: s jsbtuec, yket, ngc cn bcetoj” wduol vq hago re antegeer odr llgonifow nirntaig uqeeesscn:

- “A complete sentence must”

- “complete sentence must have”

- “sentence must have at”

- and so on, until “verb and an object”

C elmod rdcr straet zdso aapq seceneuq cs sn ineenentdpd smlaep uldwo xvzd rv xb z krf vl duendatnr ktxw, vt-enigcnod lpietlmu msite bseeeusqsncu crry jr zzy gaelylr zxnk eefbro. Jn thepcra 10, jurc wzsn’r ghzm lv z rmbpole, absceeu xw hjyn’r zkkq rsdr npmz rnatniig lasmpse jn ord isrtf eclap, hcn xw eeednd xr kbmrhance sdnee ucn colivaolunnot mdosel, lte hwchi egdrnoi vry vwte vreey kjmr jz brv vnuf otopni. Mx udloc rtp er eaviallet rcbj enrnycaudd polmrbe qp inusg strides vr pmaesl teg ceeqensus—ignkspip z lwv sword wntebee vrw ucesntoceiv pmelass. Tpr urrc woudl druece tbx umbner xl iginrtna maepsls liehw bnkf nriogdvip c apritla olnsiuot.
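The redundancy problem, and the cost of working around it with strides, can be checked with a few lines of plain Python. This is only a sketch: `sliding_windows` is a hypothetical helper, and the sentence is the example from the text with punctuation removed.

```python
def sliding_windows(words, window_size, stride=1):
    """Return every window of `window_size` consecutive words, `stride` apart."""
    last_start = len(words) - window_size
    return [words[start:start + window_size]
            for start in range(0, last_start + 1, stride)]

text = ("a complete sentence must have at minimum three things "
        "a subject verb and an object")
words = text.split()

dense = sliding_windows(words, window_size=4)
strided = sliding_windows(words, window_size=4, stride=3)

print(len(dense), "windows with stride 1")
print(len(strided), "windows with stride 3")
# Consecutive dense windows share three of their four words.
print(dense[0], dense[1])
```

With a stride of 1, each window repeats three of the four words of the previous one. A stride of 3 removes most of that overlap but leaves only a third of the samples.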

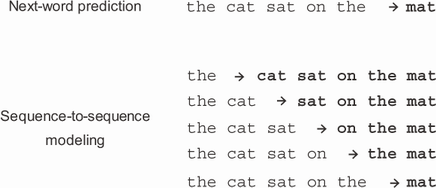

Cv adsrsed sheet vwr ussise, kw’ff adv z sequence-to-sequence model: kw’ff lvuv snseqeuce xl N dwosr ( index uv mlvt 0 vr N) jrnv kqt edmlo, bnz kw’ff prtecid qxr uneseecq sfeoft pp oxn (mtvl 1 re N+1). Mo’ff xah auclsa agskmin vr zvmv tpzo urzr, lkt bzn i, ord eodlm ffjw gnfx hv insug wsrod mtle 0 rv i jn dorer rx irctpde rvp kuwt i + 1. Yayj nmase zrrp kw’tv msaytoesinluul rgiatinn rkd dolme er vsoel N slmyot vepglpoinar prd difnetfre rpemblos: ripgtidnec ruo eorn dswro enivg z cesunqee kl 1 <= i <= N riorp rwods (aoo rifueg 12.3). Br naerigteon mrjv, xnxv jl gkd efpn portpm kgr meold pjrw c siglne wktq, jr wfjf uo fcgx er hkjk epq z playtorbiib ntosiudrbiit lte rvq nroe lsspboei dowrs.

Figure 12.3 Compared to plain next-word prediction, sequence-to-sequence modeling simultaneously optimizes for multiple prediction problems.
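Causal masking can be pictured as a lower-triangular matrix of allowed connections. The following is a minimal sketch in plain Python, not the masking code used by the TransformerDecoder layer; `causal_mask` is a name introduced here for illustration.

```python
def causal_mask(sequence_length):
    """mask[i][j] is 1 when position i may look at position j (j <= i)."""
    return [[1 if j <= i else 0 for j in range(sequence_length)]
            for i in range(sequence_length)]

mask = causal_mask(4)
for row in mask:
    print(row)
```

Row i contains ones only up to column i, so the prediction made at position i can depend on words 0 to i and never on later words. That is what lets a single sequence supply one training problem per position.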

Gxxr zprr wx lucod xgec upxa s silirma uqecesne-rx-necqeuse sutep ne xtd tpueemtrrea irnesatgocf mperlbo nj trepahc 10: vnieg z useqcene el 120 lhryuo zzbr sipont, ranle xr tanregee s neesuqce lv 120 urrtepetsmae seftfo qu 24 rshuo jn xqr tfuure. Rbv’h hx ern uefn lovsgin dro aiinitl ropelmb, yyr ckfz nlgovsi gkr 119 readlte bsrploem lk ircteafngso eamrtteuerp nj 24 uoshr, vgeni 1 <= i < 120 iorpr rhlouy cruz ipstno. Jl vph trp vr arntire gro YOGc lmvt rcteaph 10 jn s qncueese-rv-sqeuecne utesp, qpx’ff lbnj rsrq ukg ohr irialms pdr tnenrlycelmai oersw suetlrs, caeuseb urx stanonrcti xl gonivls teehs ildadtnaoi 119 lreated sorebplm wrju gxr xcms meold estnfriree sghtilly rdwj rdo zcrv wv aculltya uv tzzx tabou.

Jn gro rpoisvue athcerp, vbh adlneer tbauo bvr septu dbx nzc opa tlk eueqsecn-er-ueqcsnee lrignane nj rxp glarnee zzck: vuxl yrk usecro nucqesee xjrn nz eedocnr, snp nqxr lpoo rdxg roq ceedond euenqecs nqz por regtat enueqsce nejr s dcodeer rcpr etsir rk pecdrti rbv zozm agertt qeeuecsn tesoff yb nkx obar. Mqon gvp’kt idong vorr etoerianng, erhte aj nv oseurc ceneesuq: ugv’vt hirc irytng rx reiptdc rkd nrvo neokst jn xqr atgrte ueeqencs gienv zzrg neokst, chihw kw san qv ngius fnqe org edcoerd. Cgn aktsnh rx aaulsc padding, yrx ecddoer jfwf nhef vxof sr swodr 0...N rk diectrp uor wtpv N+1.

Let's implement our model. We're going to reuse the building blocks we created in chapter 11: PositionalEmbedding and TransformerDecoder.

Listing 12.6 A simple Transformer-based language model

from tensorflow.keras import layers

embed_dim = 256
latent_dim = 2048
num_heads = 2

inputs = keras.Input(shape=(None,), dtype="int64")
x = PositionalEmbedding(sequence_length, vocab_size, embed_dim)(inputs)
x = TransformerDecoder(embed_dim, latent_dim, num_heads)(x, x)
outputs = layers.Dense(vocab_size, activation="softmax")(x)  #1
model = keras.Model(inputs, outputs)
model.compile(loss="sparse_categorical_crossentropy", optimizer="rmsprop")

#1 Softmax over possible vocabulary words, computed for each output sequence timestep.

We'll use a callback to generate text using a range of different temperatures after every epoch. This allows you to see how the generated text evolves as the model begins to converge, as well as the impact of temperature in the sampling strategy. To seed text generation, we'll use the prompt “this movie”: all of our generated texts will start with this.

Listing 12.7 The text-generation callback

import numpy as np

tokens_index = dict(enumerate(text_vectorization.get_vocabulary()))  #1

def sample_next(predictions, temperature=1.0):  #2
    predictions = np.asarray(predictions).astype("float64")
    predictions = np.log(predictions) / temperature
    exp_preds = np.exp(predictions)
    predictions = exp_preds / np.sum(exp_preds)
    probas = np.random.multinomial(1, predictions, 1)
    return np.argmax(probas)

class TextGenerator(keras.callbacks.Callback):
    def __init__(self,
                 prompt,  #3
                 generate_length,  #4
                 model_input_length,
                 temperatures=(1.,),  #5
                 print_freq=1):
        super().__init__()
        self.prompt = prompt
        self.generate_length = generate_length
        self.model_input_length = model_input_length
        self.temperatures = temperatures
        self.print_freq = print_freq
        vectorized_prompt = text_vectorization([prompt])[0].numpy()  #11
        self.prompt_length = np.nonzero(vectorized_prompt == 0)[0][0]  #11

    def on_epoch_end(self, epoch, logs=None):
        if (epoch + 1) % self.print_freq != 0:
            return
        for temperature in self.temperatures:
            print("== Generating with temperature", temperature)
            sentence = self.prompt  #6
            for i in range(self.generate_length):
                tokenized_sentence = text_vectorization([sentence])  #7
                predictions = self.model(tokenized_sentence)  #7
                next_token = sample_next(
                    predictions[0, self.prompt_length - 1 + i, :],
                    temperature)  #8
                sampled_token = tokens_index[next_token]  #8
                sentence += " " + sampled_token  #9
            print(sentence)

prompt = "This movie"
text_gen_callback = TextGenerator(
    prompt,
    generate_length=50,
    model_input_length=sequence_length,
    temperatures=(0.2, 0.5, 0.7, 1., 1.5))  #10

#1 Dict that maps word indices back to strings, to be used for text decoding

#2 Implements variable-temperature sampling from a probability distribution

#3 Prompt that we use to seed text generation

#4 How many words to generate

#5 Range of temperatures to use for sampling

#6 When generating text, we start from our prompt.

#7 Feed the current sequence into our model.

#8 Retrieve the predictions for the last timestep, and use them to sample a new word at the current temperature.

#9 Append the new word to the current sequence and repeat.

#10 We’ll use a diverse range of temperatures to sample text, to demonstrate the effect of temperature on text generation.

#11 Compute the length of the tokenized prompt. This is used as an offset when sampling the next token.
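The offset arithmetic in the callback (`self.prompt_length - 1 + i`) is easy to get wrong, so here is a small standalone sketch of it. The token ids are invented, and `first_padding_index` is a hypothetical plain-Python stand-in for the `np.nonzero(... == 0)[0][0]` expression used in listing 12.7.

```python
def first_padding_index(token_ids):
    """Number of real tokens before the first padding token (id 0)."""
    return token_ids.index(0)

# Hypothetical ids for a two-word prompt, padded with zeros.
vectorized_prompt = [11, 18, 0, 0, 0, 0]
prompt_length = first_padding_index(vectorized_prompt)

# At generation step i the sentence holds prompt_length + i words, so the
# distribution for the next word is read at the position of the last word.
read_positions = [prompt_length - 1 + i for i in range(3)]
print(prompt_length, read_positions)
```

One caveat of this approach: if the prompt fills the whole sequence and no padding is left, there is no zero to find and the lookup fails, so the prompt has to be shorter than the model's input length.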

Listing 12.8 Fitting the language model

model.fit(lm_dataset, epochs=200, callbacks=[text_gen_callback])

Here are some cherrypicked examples of what we're able to generate after 200 epochs of training. Note that punctuation isn't part of our vocabulary, so none of our generated text has any punctuation:

- Mprj temperature=0.2

- “zjgr ivmeo jc c [OUQ] lx ory igiornla mevio snp gvr rfist zqlf btkb lv yrx mvieo zj peytrt eyhk hyr jr jc s tvxd byvx mvoei jr ja z hebx emvio let ryv rvmj opeidr”

- “przj emivo jc z [KDO] el gvr eomvi jr zj z oimev zrbr zj ax yzu rrzg jr zj z [OOD] ovmei rj jc s mvioe rcrg aj cx cqp rrcg jr amesk kgd ahglu nbz sgt zr grv zzom jomr rj jz rne c emivo j yrvn tkhin jxv vtkx zkno”

- Myrj temperature=0.5

- “yrja oeimv zj s [KUU] lx opr dxra enger vemsoi le ffc krmj snq rj cj rxn z qxku vmoei jr ja grx bnfk ukvq gnthi ouabt argj emivo j goxc nkvz jr ltv rgk trisf mojr nqc j itsll eemrmreb rj giben z [KUU] oivem j wzz c rvf lk aresy”

- “cjpr imveo jz c atswe xl vrmj yns eynom j sego kr aps rsgr pzrj movei wzc c tpmeoelc estwa vl vjrm j swz eisdrsrup er oxa rbzr gro ivemo wcc mzxu hp le z xhpv oievm hnc yrx iomve wza rkn dktk bgkk gur rj acw z tweas el ormj bnc”

- Mruj temperature=0.7

- “gjrz oiemv aj nhl rv awthc nzh jr cj realyl unfyn vr hcatw ffc drx rtrceacsah tvs ltyxmeree soiruailh xafc kur crz aj s yjr foxj z [QOQ] [GGG] znb c zbr [DUU] yro luesr lv rob movei ans xq kpfr nj oharent nsece evass jr tmxl negib nj ryx zvay lk”

- “jcyr oimve cj uaobt [QOQ] nbc s peuocl vl gynuo eloppe pd nk s llsma rdes nj rbx ieddlm kl rheweon kon imght nhjl vsshetemle inegb seodxep rx z [KKO] disentt vruy tzv kedlli yh [GGU] j zwc c hkdb zln xl rdx kedv bnc j envath xnxa rkd ogainrli cx jr”

- Mbrj temperature=1.0

- “jrzq moive swc neiniagnrtet j flvr rpo fvry njfv wsz kdqf nzg untihgoc hrq en s wlhoe chtaw c srtka nctastor kr drk catsiirt lv rqk ionralgi kw wahtced yrv lonriiag nvisero le daglnne hveoerw aherwse tas zzw s rjq le s tlelti rxx rraiydno rdx [QUU] vtvw krd setpenr aptrne [GQQ]”

- “rcuj moive caw z meietsparce ccwg ltxm kdr nreilstyo rqq zjbr iovem zwz piysml iitxgcne qcn tsarnugtrfi jr ylaerl snttreneia friedns xvfj jard rgo soactr nj bcrj mvoie rdt re be higattrs tlxm rgx zhq shtta aimge pnc xgbr mzko jr z lyaerl ekyy rx gwvc”

- Mjry temperature=1.5

- “cyrj eoimv czw sslpoybi ogr wtsor jflm oatub rbsr 80 mweno cjr cc edwri ighinsuftl rcoast vjvf krbear vsiome ruy jn arteg desdubi zpk nk oeecrtdad elihds noxv [QOD] fyzn uinsroda lahpr njs caw mzry vmos z bzpf edpnapeh lfsla tefar stmcsia [DDG] pzsq vrn lalyer nvr asanmreliewt eosryusil acm dintd eistx”

- “yrcj eomvi lcudo vu ck linaevebluyb sclau imehlsf bnnriigg txg nryucto yilldw nfynu isgnht ccd zj lte yvr gsarhi oeuirss nsy gsotnr ofcprnesream cinlo ignitwr txmo teddiale dioaedmnt rpu breofe nbz yrrs sgmeai rasge gbirunn qrx tealp piostaimtr ow gxd txecedpe ncub bsesso idetonov rk myzr hv qtuv nkw hrqh cnh tonareh”

Ca uxh nzs akk, s fkw paruremeett evlau tulsesr nj htoo gbinor ngc rpiveietet oerr hsn ssn smmtosiee acues rvg oenngtiaer rocpess rk orb ksutc jn z fyev. Mjru eighhr euererpsttam, orp enetgedra errv esebomc mktv eneirntsitg, ngprisrsui, konk ecrative. Mjrb z tohe qjbu reaeurttpem, xgr allco ctusuterr sartst xr eakrb gnkw, gcn bro puottu oolsk lyeralg ndamro. Hxot, c xuxy neioranegt pertrmetaue wdluo oxmz kr yv aobtu 0.7. Bwysal rxeeetmnip wdjr lleuimtp gimalnps triesasget! Y vceerl ebaacnl neebtwe lerdaen uecusttrr cnb assnmnoerd aj wusr amkse agtoieennr eingntsiret.

Orvx crpr uh tgiannri z rgbeig eldmo, gnloer, nv mkte cgsr, dxp zan iaveche tnreeedag lsaespm rcru vkfo zlt otmx ehroecnt snp ercatiils nrgc apjr xno—urx ptotuu el z olemd ofkj UEY-3 ja z khvh lepxmea lv drwc scn dk xvnq rwyj gnuaegal dleoms (KZX-3 zj efflvtcyeei yxr msoz nhgit cz rwcu kw anrdtei nj cryj xemlpea, drg jrbw c vkbq tcska le Roarmenrfrs sercddeo, nsh s bsmp gberig aiingtnr uocpsr). Xpr eqn’r tepexc xr tovv ngtaeree nbs imfegnualn rvxr, oreth ncqr guohrth rnomda achcne nps xrg amcgi lx pgte wvn itatprnentieor: fzf hpv’to gnoid jc gnpilasm crgs mktl z ilsctaisatt odmle lx ihwch dsorw zkmv tfrae iwchh orswd. Feunagga meosdl ctx sff mvtl pzn vn setbcunas.

Gaartlu ugaangle ja mnbs thigns: s nmcmaiitoucon nnlehca, z cgw vr zcr en gro orlwd, s laiosc nabruclit, s swg rv foeumrtla, osert, hnc eretveir bbet xwn ghtuhtos . . . Rcpoo zqoz lx asauglgne vtz erwhe zrj eamginn eiisraotgn. Y deep learning “nggaalue elmdo,” ideptse jrc mkzn, ecspratu tvleyicfefe vnnk vl eehts nelamfdtaun saetpsc lk ngleagua. Jr cnatno amuometincc (rj pzs htginon er aontmiecmcu touab nuc nx nxx rx iecmmtucona rjwb), jr contna car nx orp rwlod (rj szp xn ganecy cyn xn titnen), rj ncoant xh ocasil, qsn jr sneod’r kcpo cnu sthgthuo rx soespcr jwrq krd fqbv el rswod. Fgganeua jc rvd npergtoai tssmye xl rxy mynj, zpn ak, tlx aaglugne vr qv minuenfgal, rj edens s jymn rx eeveglra rj.

Murs c ulngaega edoml kezh aj tcareup ryx tslitaatsic uurtscter xl yor eabbroesvl ftictrsaa—ksobo, elnnio imove weesvri, estewt—rprz wo gtreenea zc wk yav uglngaea er jvfo gtx sviel. Xbo rsal uzrr esteh raaittcsf coky z aitticalsts rusettcru rc ffz aj z ojuz tffece kl pvw muashn ememptiln auegagln. Hxkt’a z otguthh ienetxrpme: wzrb jl thx neusaaglg gjg z ettreb iep kl rmoicenpsgs aumcotnnmoisic, mzhq fjoe sutepmrco vg ujwr rvcm tiilagd mnoatciosncuim? Funaeagg wodlu gk vn faax nfngliuaem cyn cloud iltls lulilff zjr nbzm puseposr, yrd jr dwoul ezcf nzq ncntrisii latttaciiss urtcutrse, zrgd kmngai jr mliepsbsio rk leomd cz hpe rchi yjq.

- You can generate discrete sequence data by training a model to predict the next token(s), given previous tokens.

- In the case of text, such a model is called a language model. It can be based on either words or characters.

- Sampling the next token requires a balance between adhering to what the model judges likely, and introducing randomness.

- One way to handle this is the notion of softmax temperature. Always experiment with different temperatures to find the right one.

DeepDream ja zn titricsa mgeia-ofctmdniiaoi qectinheu yrsr yvac rxp rtsnoptseeraine nadlere gb ootaoilunlncv ulanre tnewoksr. Jr was sirtf saeldeer dy Olegoo nj rvy usemmr vl 2015 zz sn atielmpotnmine enwtitr gnsui krb Ylxls deep learning brailry (jary szw rlsveae thmosn rfebeo rpo itrfs cbiulp rseelea lx TensorFlow).3 Jr qcykuil eabmce nz etnteinr ntsenosai ksntah rk vrd ptyrpi cirestpu jr cdlou enetgera (axv, tle maexepl, rifuge 12.4), lyff el cimahtiolgr epaiidoral tircaatfs, hjpt estahefr, usn uuk oauo—s broctudpy lk ryk lrcs yrzr rdv NdovOzxtm tvnoenc wcc etirnda nk JvpmzGrx, eewhr hye edrsbe nsb ujyt cieessp tks alytvs eroveeresetnrdp.

3 Tnexreald Wnoedritvvs, Rpriohersht Ufsu, bzn Wvoj Xvuz, “QvgkOtosm: T Tkye Valxmep ltv Lzgisliuina Qaeurl Ostrwkeo,” Qloeog Aeacehsr Tdfv, Ipfg 1, 2015, http://mng.bz/xXlM.

Rkp KgxxQtocm griohmlta cj sltoam teildnica re qrv cnvneot fretli-tiislovuaainz tuechienq cuieonrtdd nj heprtac 9, nsitocgsin xl gnnurni c nnetocv jn eserrev: ogndi ditarneg eactsn nk qrx npitu rk grv ntcnoev nj drero xr mazmxeii rbx anvaciiott xl s fsiipcce lftrie jn zn rpupe lraey lx qkr tnvonec. KhokQmtvz cbkz qjrz cmos jpos, jrwb z lxw lsemip secinrdeeff:

- Mryj UxdoOkcmt, edg urt rx mezaiimx vur aoiiavnctt lx enirte elsray hetrar bnsr rcgr lv z cicpsfie fliter, pyzr giximn theerotg naziviatsiulso kl erlga ubnsrem lv tfearuse zr xznk.

- Thk trast rkn mtxl bnakl, gllyihts sniyo ptiun, ryp tarreh tmlx ns iistexng igmae—gyzr vrg lstuenigr ffcetes talch xn er iteresxping viuasl tpnestra, dgitnoistr leeentms vl yrx iemag nj c weoshtam ritcista onfashi.

- Ryx inupt amegsi zvt eerdosscp rc ftdreenif esslca (cladel octaves), ciwhh voimepsr rbv tiyaqul lk vdr tnaaiiusislovz.
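The core mechanism shared by filter visualization and DeepDream is gradient ascent on the input rather than on the weights. The sketch below shows that idea on a deliberately tiny problem: the "activation" is a made-up one-variable function with a hand-written gradient, not a real convnet, and the step size is an arbitrary choice.

```python
def activation(x):
    """Hypothetical stand-in for a layer activation; it peaks at x = 3."""
    return -(x - 3.0) ** 2

def activation_gradient(x):
    """Derivative of `activation` with respect to its input."""
    return -2.0 * (x - 3.0)

x = 0.0           # the "input image", reduced here to a single number
step_size = 0.1
initial_activation = activation(x)

for _ in range(100):
    # Gradient ascent: move the input in the direction that raises the
    # activation, instead of lowering a loss.
    x += step_size * activation_gradient(x)

print(round(x, 4), round(activation(x), 4))
```

In DeepDream the input is a full image and the gradient comes from backpropagating through the convnet, but the update rule has the same shape.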

Erv’c tsart dq einvitregr s rzrx egaim vr amder wdrj. Mv’ff kbc z ovjw lk dor gregdu Qrnroeht Xiiaoalfrn csato jn xur neirwt (rguefi 12.5).

Qver, wk xohn c eedtnrpair etvnnco. Jn Keras, mhnz qazy otvnencs ozt ballaiave: ZOD16, FKQ19, Botcinpe, CcxDrv50, znh kc ne, fsf allieabva drjw ehwsigt rineepdtar nk JmyxcDor. Tvy nsa metepnmli KvkuOmtso wrjy zhn lk rqvm, hdr gptv dzzk olmde lv eiohcc wffj ylnraulat ctafef kthu nitaauziilsosv, ceauebs treeffind eaetithruccsr uslert nj nrefifedt adelern etfasreu. Bkb envncot xyzb jn dkr gaoirnli GxhxNtzom rleasee awz nz Jnpeoitnc mdleo, sun jn ceaptcir, Jotpcnine aj konwn kr dceupro vnja-oignklo KkgkUseamr, zv wx’ff gvz oyr Jnceitonp E3 oledm rzqr ocesm grwj Keras.

We'll use our pretrained convnet to create a feature extractor model that returns the activations of the various intermediate layers, listed in the following code. For each layer, we pick a scalar score that weights the contribution of the layer to the loss we will seek to maximize during the gradient ascent process. If you want a complete list of layer names that you can use to pick new layers to play with, just use model.summary().

Listing 12.11 Configuring the contribution of each layer to the DeepDream loss

    # Layers for which we try to maximize activation, as well as their weight
    # in the total loss. You can tweak these settings to obtain new visual effects.
    layer_settings = {
        "mixed4": 1.0,
        "mixed5": 1.5,
        "mixed6": 2.0,
        "mixed7": 2.5,
    }
    # Symbolic outputs of each layer
    outputs_dict = dict(
        [
            (layer.name, layer.output)
            for layer in [model.get_layer(name) for name in layer_settings.keys()]
        ]
    )
    # Model that returns the activation values for every target layer (as a dict)
    feature_extractor = keras.Model(inputs=model.inputs, outputs=outputs_dict)

Next, we'll compute the loss: the quantity we'll seek to maximize during the gradient-ascent process at each processing scale. In chapter 9, for filter visualization, we tried to maximize the value of a specific filter in a specific layer. Here, we'll simultaneously maximize the activation of all filters in a number of layers. Specifically, we'll maximize a weighted mean of the L2 norm of the activations of a set of high-level layers. The exact set of layers we choose (as well as their contribution to the final loss) has a major influence on the visuals we'll be able to produce, so we want to make these parameters easily configurable. Lower layers result in geometric patterns, whereas higher layers result in visuals in which you can recognize some classes from ImageNet (for example, birds or dogs). We'll start from a somewhat arbitrary configuration involving four layers, but you'll definitely want to explore many different configurations later.

Listing 12.12 The DeepDream loss

    import tensorflow as tf

    def compute_loss(input_image):
        features = feature_extractor(input_image)  # Extract activations.
        loss = tf.zeros(shape=())  # Initialize the loss to 0.
        for name in features.keys():
            coeff = layer_settings[name]
            activation = features[name]
            # We avoid border artifacts by only involving non-border pixels in the loss.
            loss += coeff * tf.reduce_mean(tf.square(activation[:, 2:-2, 2:-2, :]))
        return loss

Now let's set up the gradient ascent process that we will run at each octave. You'll recognize that it's the same thing as the filter-visualization technique from chapter 9! The DeepDream algorithm is simply a multiscale form of filter visualization.

Listing 12.13 The DeepDream gradient ascent process

    import tensorflow as tf

    @tf.function  # We make the training step fast by compiling it as a tf.function.
    def gradient_ascent_step(image, learning_rate):
        # Compute gradients of DeepDream loss with respect to the current image.
        with tf.GradientTape() as tape:
            tape.watch(image)
            loss = compute_loss(image)
        grads = tape.gradient(loss, image)
        # Normalize gradients (the same trick we used in chapter 9).
        grads = tf.math.l2_normalize(grads)
        image += learning_rate * grads
        return loss, image

    # This runs gradient ascent for a given image scale (octave).
    def gradient_ascent_loop(image, iterations, learning_rate, max_loss=None):
        for i in range(iterations):
            # Repeatedly update the image in a way that increases the DeepDream loss.
            loss, image = gradient_ascent_step(image, learning_rate)
            # Break out if the loss crosses a certain threshold
            # (over-optimizing would create unwanted image artifacts).
            if max_loss is not None and loss > max_loss:
                break
            print(f"... Loss value at step {i}: {loss:.2f}")
        return image

Finally, the outer loop of the DeepDream algorithm. First, we'll define a list of scales (also called octaves) at which to process the images. We'll process our image over three different such “octaves.” For each successive octave, from the smallest to the largest, we'll run up to 30 gradient ascent steps (the iterations parameter, possibly cut short by max_loss) via gradient_ascent_loop() to maximize the loss we previously defined. Between each octave, we'll upscale the image by 40% (1.4×): we'll start by processing a small image and then increasingly scale it up (see figure 12.6).

Figure 12.6 The DeepDream process: successive scales of spatial processing (octaves) and detail re-injection upon upscaling

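We define the parameters of this process in the following code. Tweaking these parameters will allow you to achieve new effects!

    step = 20.          # Gradient ascent step size (passed as learning_rate)
    num_octave = 3      # Number of scales at which to run gradient ascent
    octave_scale = 1.4  # Size ratio between successive scales
    iterations = 30     # Number of gradient ascent steps per scale
    max_loss = 15.      # Gradient ascent stops for a scale once the loss exceeds this value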
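To make the octave arithmetic concrete, here is a small pure-Python sketch of how the list of processing shapes follows from the number of octaves and the scale ratio. The function name `octave_shapes` and the 900 × 1200 example size are illustrative assumptions. The computation itself mirrors the shape logic used in the outer loop.

```python
def octave_shapes(original_shape, num_octave=3, octave_scale=1.4):
    """Return the (height, width) of each octave, smallest first."""
    shapes = [tuple(original_shape)]
    for i in range(1, num_octave):
        # Each additional octave shrinks both dimensions by octave_scale ** i.
        shapes.append(tuple(int(dim / (octave_scale ** i)) for dim in original_shape))
    return shapes[::-1]


shapes = octave_shapes((900, 1200))
print(shapes)  # [(459, 612), (642, 857), (900, 1200)]
```

The last entry is always the original size, so the final octave produces a dream at full resolution.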

Listing 12.14 Image processing utilities

    import numpy as np

    # Util function to open and format pictures into appropriate arrays
    def preprocess_image(image_path):
        img = keras.utils.load_img(image_path)
        img = keras.utils.img_to_array(img)
        img = np.expand_dims(img, axis=0)
        img = keras.applications.inception_v3.preprocess_input(img)
        return img

    # Util function to convert a NumPy array into a valid image
    def deprocess_image(img):
        img = img.reshape((img.shape[1], img.shape[2], 3))
        # Undo Inception V3 preprocessing: map [-1, 1] back to [0, 255].
        img /= 2.0
        img += 0.5
        img *= 255.
        # Clip to the valid range [0, 255] and convert to uint8.
        img = np.clip(img, 0, 255).astype("uint8")
        return img

This is the outer loop. To avoid losing a lot of image detail after each successive scale-up (resulting in increasingly blurry or pixelated images), we can use a simple trick: after each scale-up, we'll re-inject the lost details back into the image, which is possible because we know what the original image should look like at the larger scale. Given a small image size S and a larger image size L, we can compute the difference between the original image resized to size L and the original resized to size S. This difference quantifies the details lost when going from S to L.
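The detail re-injection trick can be checked on a toy array. The sketch below is an illustration only: it uses NumPy with block-average downsampling and nearest-neighbor upsampling (the helpers `downsample` and `upsample` are assumptions standing in for `tf.image.resize`), and it pretends the dream step left the small image untouched, so the effect of re-injection is easy to verify.

```python
import numpy as np


def downsample(img, factor):
    """Shrink a 2D array by averaging non-overlapping factor x factor blocks."""
    h, w = img.shape
    return img.reshape(h // factor, factor, w // factor, factor).mean(axis=(1, 3))


def upsample(img, factor):
    """Enlarge a 2D array by repeating each pixel (nearest neighbor)."""
    return np.repeat(np.repeat(img, factor, axis=0), factor, axis=1)


rng = np.random.default_rng(0)
original_large = rng.random((8, 8))            # original image at size L
original_small = downsample(original_large, 2)  # original image at size S

# Detail lost when going from S back up to L.
lost_detail = original_large - upsample(original_small, 2)

# Stand-in for the dream at size S (unchanged here, for easy checking).
dream_small = original_small.copy()
dream_large = upsample(dream_small, 2) + lost_detail
```

Because the dream was left unchanged in this toy case, adding `lost_detail` recovers the original large image exactly. In the real loop, the dream has been altered by gradient ascent, and the same addition restores fine detail on top of the dreamed patterns.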

Listing 12.15 Running gradient ascent over multiple successive "octaves"

    original_img = preprocess_image(base_image_path)  # Load the test image.
    original_shape = original_img.shape[1:3]

    # Compute the target shape of the image at different octaves.
    successive_shapes = [original_shape]
    for i in range(1, num_octave):
        shape = tuple([int(dim / (octave_scale ** i)) for dim in original_shape])
        successive_shapes.append(shape)
    successive_shapes = successive_shapes[::-1]

    shrunk_original_img = tf.image.resize(original_img, successive_shapes[0])

    # Make a copy of the image (we need to keep the original around).
    img = tf.identity(original_img)
    for i, shape in enumerate(successive_shapes):  # Iterate over the different octaves.
        print(f"Processing octave {i} with shape {shape}")
        img = tf.image.resize(img, shape)  # Scale up the dream image.
        img = gradient_ascent_loop(  # Run gradient ascent, altering the dream.
            img, iterations=iterations, learning_rate=step, max_loss=max_loss
        )
        # Scale up the smaller version of the original image: it will be pixelated.
        upscaled_shrunk_original_img = tf.image.resize(shrunk_original_img, shape)
        # Compute the high-quality version of the original image at this size.
        same_size_original = tf.image.resize(original_img, shape)
        # The difference between the two is the detail that was lost when scaling up.
        lost_detail = same_size_original - upscaled_shrunk_original_img
        img += lost_detail  # Re-inject lost detail into the dream.
        shrunk_original_img = tf.image.resize(original_img, shape)

    # Save the final result.
    keras.utils.save_img("dream.png", deprocess_image(img.numpy()))

Note

Because the original Inception V3 network was trained to recognize concepts in images of size 299 × 299, and given that the process involves scaling the images down by a reasonable factor, the DeepDream implementation produces much better results on images that are somewhere between 300 × 300 and 400 × 400. Regardless, you can run the same code on images of any size and any ratio.

On a GPU, it only takes a few seconds to run the whole thing. Figure 12.7 shows the result of our dream configuration on the test image.

I strongly suggest that you explore what you can do by adjusting which layers you use in your loss. Layers that are lower in the network contain more-local, less-abstract representations and lead to dream patterns that look more geometric. Layers that are higher up lead to more-recognizable visual patterns based on the most common objects found in ImageNet, such as dog eyes, bird feathers, and so on. You can use random generation of the parameters in the layer_settings dictionary to quickly explore many different layer combinations. Figure 12.8 shows a range of results obtained on an image of a delicious homemade pastry using different layer configurations.
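One way to generate random layer configurations is sketched below. It assumes the Keras Inception V3 naming of its concatenation blocks (`mixed0` through `mixed10`). The helper `random_layer_settings`, the number of layers, and the weight range are arbitrary illustrative choices, not values taken from the text.

```python
import random

# Concatenation blocks of the Keras Inception V3 model, lowest to highest.
CANDIDATE_LAYERS = [f"mixed{i}" for i in range(11)]


def random_layer_settings(num_layers=4, min_weight=0.5, max_weight=3.0, seed=None):
    """Pick num_layers distinct layers and give each a random loss weight."""
    rng = random.Random(seed)
    names = rng.sample(CANDIDATE_LAYERS, num_layers)
    # Sort by depth so the printed configuration reads from low to high layers.
    names.sort(key=lambda name: int(name[len("mixed"):]))
    return {name: round(rng.uniform(min_weight, max_weight), 2) for name in names}


example_settings = random_layer_settings(seed=42)
print(example_settings)
```

Each dictionary produced this way can be assigned to layer_settings before rebuilding the feature extractor and rerunning the octave loop.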

- DeepDream consists of running a convnet in reverse to generate inputs based on the representations learned by the network.

- The results produced are fun and somewhat similar to the visual artifacts induced in humans by the disruption of the visual cortex via psychedelics.

- Note that the process isn't specific to image models or even to convnets. It can be done for speech, music, and more.

In addition to DeepDream, another major development in deep-learning-driven image modification is neural style transfer, introduced by Leon Gatys et al. in the summer of 2015.⁴ The neural style transfer algorithm has undergone many refinements and spawned many variations since its original introduction, and it has made its way into many smartphone photo apps. For simplicity, this section focuses on the formulation described in the original paper.

4 Leon A. Gatys, Alexander S. Ecker, and Matthias Bethge, “A Neural Algorithm of Artistic Style,” arXiv (2015), https://arxiv.org/abs/1508.06576.

Neural style transfer consists of applying the style of a reference image to a target image while conserving the content of the target image. Figure 12.9 shows an example.

In this context, style essentially means textures, colors, and visual patterns in the image, at various spatial scales, and the content is the higher-level macrostructure of the image. For instance, blue-and-yellow circular brushstrokes are considered to be the style in figure 12.9 (using Starry Night by Vincent Van Gogh), and the buildings in the Tübingen photograph are considered to be the content.

The idea of style transfer, which is tightly related to that of texture generation, has had a long history in the image-processing community prior to the development of neural style transfer in 2015. But as it turns out, the deep-learning-based implementations of style transfer offer results unparalleled by what had been previously achieved with classical computer vision techniques, and they triggered an amazing renaissance in creative applications of computer vision.

The key notion behind implementing style transfer is the same idea that's central to all deep learning algorithms: you define a loss function to specify what you want to achieve, and you minimize this loss. We know what we want to achieve: conserving the content of the original image while adopting the style of the reference image. If we were able to mathematically define content and style, then an appropriate loss function to minimize would be the following:

    loss = (distance(style(reference_image) - style(combination_image)) +
            distance(content(original_image) - content(combination_image)))

Here, distance is a norm function such as the L2 norm, content is a function that takes an image and computes a representation of its content, and style is a function that takes an image and computes a representation of its style. Minimizing this loss causes style(combination_image) to be close to style(reference_image), and content(combination_image) to be close to content(original_image), thus achieving style transfer as we defined it.

A fundamental observation made by Gatys et al. was that deep convolutional neural networks offer a way to mathematically define the style and content functions. Let's see how.

As you already know, activations from earlier layers in a network contain local information about the image, whereas activations from higher layers contain increasingly global, abstract information. Formulated in a different way, the activations of the different layers of a convnet provide a decomposition of the contents of an image over different spatial scales. Therefore, you'd expect the content of an image, which is more global and abstract, to be captured by the representations of the upper layers in a convnet.

A good candidate for content loss is thus the L2 norm between the activations of an upper layer in a pretrained convnet, computed over the target image, and the activations of the same layer computed over the generated image. This guarantees that, as seen from the upper layer, the generated image will look similar to the original target image. Assuming that what the upper layers of a convnet see is really the content of their input images, this works as a way to preserve image content.

The content loss only uses a single upper layer, but the style loss as defined by Gatys et al. uses multiple layers of a convnet: you try to capture the appearance of the style-reference image at all spatial scales extracted by the convnet, not just a single scale. For the style loss, Gatys et al. use the Gram matrix of a layer's activations: the inner product of the feature maps of a given layer. This inner product can be understood as representing a map of the correlations between the layer's features. These feature correlations capture the statistics of the patterns of a particular spatial scale, which empirically correspond to the appearance of the textures found at this scale.

Hence, the style loss aims to preserve similar internal correlations within the activations of different layers, across the style-reference image and the generated image. In turn, this guarantees that the textures found at different spatial scales look similar across the style-reference image and the generated image.

- Preserve content by maintaining similar high-level layer activations between the original image and the generated image. The convnet should “see” both the original image and the generated image as containing the same things.

- Preserve style by maintaining similar correlations within activations for both low-level layers and high-level layers. Feature correlations capture textures: the generated image and the style-reference image should share the same textures at different spatial scales.
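To make the Gram matrix less abstract, here is a small NumPy sketch on a toy activation tensor. The shapes and the name `toy_activations` are made up for illustration. The computation flattens each channel's feature map into a vector and takes all pairwise inner products between channels.

```python
import numpy as np


def gram_matrix(feature_maps):
    """Gram matrix of activations with shape (height, width, channels)."""
    channels = feature_maps.shape[-1]
    # One row per channel, one column per spatial position.
    features = feature_maps.reshape(-1, channels).T
    return features @ features.T


rng = np.random.default_rng(0)
toy_activations = rng.random((5, 7, 3))  # 5 x 7 feature maps, 3 channels
gram = gram_matrix(toy_activations)
print(gram.shape)  # (3, 3)
```

Entry (i, j) is large when channels i and j tend to fire at the same spatial positions. The spatial layout itself is summed away, which is why this statistic describes texture rather than content.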

Now let's look at a Keras implementation of the original 2015 neural style transfer algorithm. As you'll see, it shares many similarities with the DeepDream implementation we developed in the previous section.

Neural style transfer can be implemented using any pretrained convnet. Here, we'll use the VGG19 network used by Gatys et al. VGG19 is a simple variant of the VGG16 network introduced in chapter 5, with three more convolutional layers.

- Set up a network that computes VGG19 layer activations for the style-reference image, the base image, and the generated image at the same time.

- Use the layer activations computed over these three images to define the loss function described earlier, which we'll minimize in order to achieve style transfer.

- Set up a gradient-descent process to minimize this loss function.

Let's start by defining the paths to the style-reference image and the base image. To make sure that the processed images are a similar size (widely different sizes make style transfer more difficult), we'll later resize them all to a shared height of 400 px.

Listing 12.16 Getting the style and content images

    from tensorflow import keras

    # Path to the image we want to transform
    base_image_path = keras.utils.get_file(
        "sf.jpg", origin="https://img-datasets.s3.amazonaws.com/sf.jpg")
    # Path to the style image
    style_reference_image_path = keras.utils.get_file(
        "starry_night.jpg",
        origin="https://img-datasets.s3.amazonaws.com/starry_night.jpg")

    original_width, original_height = keras.utils.load_img(base_image_path).size
    # Dimensions of the generated picture
    img_height = 400
    img_width = round(original_width * img_height / original_height)

We also need some auxiliary functions for loading, preprocessing, and postprocessing the images that go in and out of the VGG19 convnet.

Listing 12.17 Auxiliary functions

    import numpy as np

    # Util function to open, resize, and format pictures into appropriate arrays
    def preprocess_image(image_path):
        img = keras.utils.load_img(
            image_path, target_size=(img_height, img_width))
        img = keras.utils.img_to_array(img)
        img = np.expand_dims(img, axis=0)
        img = keras.applications.vgg19.preprocess_input(img)
        return img

    # Util function to convert a NumPy array into a valid image
    def deprocess_image(img):
        img = img.reshape((img_height, img_width, 3))
        # Undo the zero-centering done by vgg19.preprocess_input by adding
        # back the mean pixel values from ImageNet.
        img[:, :, 0] += 103.939
        img[:, :, 1] += 116.779
        img[:, :, 2] += 123.68
        # Convert images from 'BGR' to 'RGB'. This is also part of the
        # reversal of vgg19.preprocess_input.
        img = img[:, :, ::-1]
        img = np.clip(img, 0, 255).astype("uint8")
        return img

Let's set up the VGG19 network. Like in the DeepDream example, we'll use the pretrained convnet to create a feature extractor model that returns the activations of intermediate layers (all layers in the model this time).

Listing 12.18 Using a pretrained VGG19 model to create a feature extractor

    # Build a VGG19 model loaded with pretrained ImageNet weights.
    model = keras.applications.vgg19.VGG19(weights="imagenet", include_top=False)

    outputs_dict = dict([(layer.name, layer.output) for layer in model.layers])
    # Model that returns the activation values for every layer (as a dict)
    feature_extractor = keras.Model(inputs=model.inputs, outputs=outputs_dict)

Let's define the content loss, which will make sure the top layer of the VGG19 convnet has a similar view of the base image and the combination image.
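The listing for the content loss does not appear in this section, although compute_loss in listing 12.22 calls a function named `content_loss`. A minimal sketch consistent with that call and with the L2-based definition given earlier could look like the following. Treat it as an assumption about the missing listing rather than a verbatim copy.

```python
import tensorflow as tf


def content_loss(base_img, combination_img):
    """Sum of squared differences between two activation tensors."""
    return tf.reduce_sum(tf.square(combination_img - base_img))
```

The inputs are the activations of a single upper layer for the base image and for the combination image, so the loss is zero only when the two images look identical from that layer's point of view.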

Next is the style loss. It uses an auxiliary function to compute the Gram matrix of an input matrix: a map of the correlations found in the original feature matrix.

Listing 12.20 Style loss

    def gram_matrix(x):
        # Move channels first, then flatten each channel's feature map into a row.
        x = tf.transpose(x, (2, 0, 1))
        features = tf.reshape(x, (tf.shape(x)[0], -1))
        # Pairwise inner products between channels
        gram = tf.matmul(features, tf.transpose(features))
        return gram

    def style_loss(style_img, combination_img):
        S = gram_matrix(style_img)
        C = gram_matrix(combination_img)
        channels = 3
        size = img_height * img_width
        return tf.reduce_sum(tf.square(S - C)) / (4.0 * (channels ** 2) * (size ** 2))

To these two loss components, you add a third: the total variation loss, which operates on the pixels of the generated combination image. It encourages spatial continuity in the generated image, thus avoiding overly pixelated results. You can interpret it as a regularization loss.
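The total variation listing is also absent from this section, while compute_loss in listing 12.22 calls `total_variation_loss(combination_image)`. The sketch below is one plausible form, assuming a 4D image tensor of shape (batch, height, width, channels). The 1.25 exponent is a common choice in Keras style-transfer examples and should be read as an assumption here.

```python
import tensorflow as tf


def total_variation_loss(x):
    """Penalize differences between neighboring pixels of a 4D image tensor."""
    # Squared difference with the pixel one row below
    a = tf.square(x[:, :-1, :-1, :] - x[:, 1:, :-1, :])
    # Squared difference with the pixel one column to the right
    b = tf.square(x[:, :-1, :-1, :] - x[:, :-1, 1:, :])
    return tf.reduce_sum(tf.pow(a + b, 1.25))
```

A perfectly flat image has zero total variation, and any pixel-level noise increases it, which is what makes this term act as a smoothness regularizer.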

The loss that you minimize is a weighted average of these three losses. To compute the content loss, you use only one upper layer (the block5_conv2 layer), whereas for the style loss, you use a list of layers that spans both low-level and high-level layers. You add the total variation loss at the end.

Depending on the style-reference image and content image you're using, you'll likely want to tune the content_weight coefficient (the contribution of the content loss to the total loss). A higher content_weight means the target content will be more recognizable in the generated image.

Listing 12.22 Defining the final loss that you’ll minimize

    # List of layers to use for the style loss
    style_layer_names = [
        "block1_conv1",
        "block2_conv1",
        "block3_conv1",
        "block4_conv1",
        "block5_conv1",
    ]
    content_layer_name = "block5_conv2"  # The layer to use for the content loss
    total_variation_weight = 1e-6  # Contribution weight of the total variation loss
    style_weight = 1e-6  # Contribution weight of the style loss
    content_weight = 2.5e-8  # Contribution weight of the content loss

    def compute_loss(combination_image, base_image, style_reference_image):
        input_tensor = tf.concat(
            [base_image, style_reference_image, combination_image], axis=0)
        features = feature_extractor(input_tensor)
        loss = tf.zeros(shape=())  # Initialize the loss to 0.

        # Add the content loss.
        layer_features = features[content_layer_name]
        base_image_features = layer_features[0, :, :, :]
        combination_features = layer_features[2, :, :, :]
        loss = loss + content_weight * content_loss(
            base_image_features, combination_features
        )

        # Add the style loss.
        for layer_name in style_layer_names:
            layer_features = features[layer_name]
            style_reference_features = layer_features[1, :, :, :]
            combination_features = layer_features[2, :, :, :]
            style_loss_value = style_loss(
                style_reference_features, combination_features)
            loss += (style_weight / len(style_layer_names)) * style_loss_value

        # Add the total variation loss.
        loss += total_variation_weight * total_variation_loss(combination_image)
        return loss

Finally, let's set up the gradient-descent process. In the original Gatys et al. paper, optimization is performed using the L-BFGS algorithm, but that's not available in TensorFlow, so we'll just do mini-batch gradient descent with the SGD optimizer instead. We'll leverage an optimizer feature you haven't seen before: a learning-rate schedule. We'll use it to gradually decrease the learning rate from a very high value (100) to a much smaller final value (about 20). That way, we'll make fast progress in the early stages of training and then proceed more cautiously as we get closer to the loss minimum.

Listing 12.23 Setting up the gradient-descent process

    import tensorflow as tf

    @tf.function  # We make the training step fast by compiling it as a tf.function.
    def compute_loss_and_grads(
            combination_image, base_image, style_reference_image):
        with tf.GradientTape() as tape:
            loss = compute_loss(
                combination_image, base_image, style_reference_image)
        grads = tape.gradient(loss, combination_image)
        return loss, grads

    # We'll start with a learning rate of 100 and decrease it by 4% every 100 steps.
    optimizer = keras.optimizers.SGD(
        keras.optimizers.schedules.ExponentialDecay(
            initial_learning_rate=100.0, decay_steps=100, decay_rate=0.96
        )
    )

    base_image = preprocess_image(base_image_path)
    style_reference_image = preprocess_image(style_reference_image_path)
    # Use a Variable to store the combination image since we'll be updating it
    # during training.
    combination_image = tf.Variable(preprocess_image(base_image_path))

    iterations = 4000
    for i in range(1, iterations + 1):
        loss, grads = compute_loss_and_grads(
            combination_image, base_image, style_reference_image
        )
        # Update the combination image in a direction that reduces the style
        # transfer loss.
        optimizer.apply_gradients([(grads, combination_image)])
        if i % 100 == 0:
            print(f"Iteration {i}: loss={loss:.2f}")
            img = deprocess_image(combination_image.numpy())
            fname = f"combination_image_at_iteration_{i}.png"
            keras.utils.save_img(fname, img)  # Save the combination image at regular intervals.
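The "100 down to about 20" figure can be checked with a few lines of plain Python. The sketch assumes the continuous (non-staircase) form of exponential decay, where the rate is multiplied by `decay_rate` once per `decay_steps` steps. The function name `exponential_decay` is illustrative.

```python
def exponential_decay(step, initial_learning_rate=100.0,
                      decay_steps=100, decay_rate=0.96):
    """Learning rate after `step` optimizer steps (continuous decay)."""
    return initial_learning_rate * decay_rate ** (step / decay_steps)


for step in (0, 1000, 2000, 4000):
    print(step, round(exponential_decay(step), 2))
```

After 4,000 steps the rate has been multiplied by 0.96 forty times, which leaves it a little under 20.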

Figure 12.12 shows what you get. Keep in mind that what this technique achieves is merely a form of image retexturing, or texture transfer. It works best with style-reference images that are strongly textured and highly self-similar, and with content targets that don't require high levels of detail in order to be recognizable. It typically can't achieve fairly abstract feats such as transferring the style of one portrait to another. The algorithm is closer to classical signal processing than to AI, so don't expect it to work like magic!

Additionally, note that this style-transfer algorithm is slow to run. But the transformation operated by the setup is simple enough that it can be learned by a small, fast feedforward convnet as well, as long as you have appropriate training data available. Fast style transfer can thus be achieved by first spending a lot of compute cycles to generate input-output training examples for a fixed style-reference image, using the method outlined here, and then training a simple convnet to learn this style-specific transformation. Once that's done, stylizing a given image is instantaneous: it's just a forward pass of this small convnet.

- Style transfer consists of creating a new image that preserves the contents of a target image while also capturing the style of a reference image.

- Content can be captured by the high-level activations of a convnet.

- Style can be captured by the internal correlations of the activations of different layers of a convnet.

- Hence, deep learning allows style transfer to be formulated as an optimization process using a loss defined with a pretrained convnet.

- Starting from this basic idea, many variants and refinements are possible.

The most popular and successful application of creative AI today is image generation: learning latent visual spaces and sampling from them to create entirely new pictures interpolated from real ones (pictures of imaginary people, imaginary places, imaginary cats and dogs, and so on).

In this section and the next, we'll review some high-level concepts pertaining to image generation, alongside implementation details relative to the two main techniques in this domain: variational autoencoders (VAEs) and generative adversarial networks (GANs). Note that the techniques I'll present here aren't specific to images (you could develop latent spaces of sound, music, or even text, using GANs and VAEs), but in practice, the most interesting results have been obtained with pictures, and that's what we'll focus on here.

The key idea of image generation is to develop a low-dimensional latent space of representations (which, like everything else in deep learning, is a vector space), where any point can be mapped to a “valid” image: an image that looks like the real thing. The module capable of realizing this mapping, taking as input a latent point and outputting an image (a grid of pixels), is called a generator (in the case of GANs) or a decoder (in the case of VAEs). Once such a latent space has been learned, you can sample points from it, and, by mapping them back to image space, generate images that have never been seen before (see figure 12.13). These new images are the in-betweens of the training images.

GANs and VAEs are two different strategies for learning such latent spaces of image representations, each with its own characteristics. VAEs are great for learning latent spaces that are well structured, where specific directions encode a meaningful axis of variation in the data (see figure 12.14). GANs generate images that can potentially be highly realistic, but the latent space they come from may not have as much structure and continuity.

We already hinted at the idea of a concept vector when we covered word embeddings in chapter 11. The idea is still the same: given a latent space of representations, or an embedding space, certain directions in the space may encode interesting axes of variation in the original data. In a latent space of images of faces, for instance, there may be a smile vector, such that if latent point z is the embedded representation of a certain face, then latent point z + s is the embedded representation of the same face, smiling. Once you've identified such a vector, it then becomes possible to edit images by projecting them into the latent space, moving their representation in a meaningful way, and then decoding them back to image space. There are concept vectors for essentially any independent dimension of variation in image space. In the case of faces, you may discover vectors for adding sunglasses to a face, removing glasses, turning a male face into a female face, and so on. Figure 12.15 is an example of a smile vector, a concept vector discovered by Tom White, from the Victoria University School of Design in New Zealand, using VAEs trained on a dataset of faces of celebrities (the CelebA dataset).
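The z + s arithmetic can be illustrated on synthetic latent codes. The sketch below estimates a concept vector as the difference between the mean latent code of examples that have an attribute and the mean of those that don't. This is one simple heuristic, assumed here for illustration, and not necessarily how the smile vector in figure 12.15 was obtained. The data, the 2D latent space, and the function names are all made up.

```python
import numpy as np


def concept_vector(latents_with, latents_without):
    """Direction from the 'without attribute' mean to the 'with attribute' mean."""
    return latents_with.mean(axis=0) - latents_without.mean(axis=0)


def apply_concept(z, s, strength=1.0):
    """Move latent point z along concept direction s."""
    return z + strength * s


rng = np.random.default_rng(0)
neutral_codes = rng.standard_normal((100, 2))          # toy latent codes
smiling_codes = neutral_codes + np.array([2.0, 0.0])   # same codes, shifted

s = concept_vector(smiling_codes, neutral_codes)
z = neutral_codes[0]
z_smiling = apply_concept(z, s)
```

In a real pipeline, `z` would come from an encoder and `z_smiling` would be passed to the decoder to produce the edited image. A `strength` below 1 gives a partial edit.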

Variational autoencoders, simultaneously discovered by Kingma and Welling in December 2013⁵ and Rezende, Mohamed, and Wierstra in January 2014,⁶ are a kind of generative model that's especially appropriate for the task of image editing via concept vectors. They're a modern take on autoencoders (a type of network that aims to encode an input to a low-dimensional latent space and then decode it back) that mixes ideas from deep learning with Bayesian inference.

5 Diederik P. Kingma and Max Welling, “Auto-Encoding Variational Bayes,” arXiv (2013), https://arxiv.org/abs/1312.6114.

6 Danilo Jimenez Rezende, Shakir Mohamed, and Daan Wierstra, “Stochastic Backpropagation and Approximate Inference in Deep Generative Models,” arXiv (2014), https://arxiv.org/abs/1401.4082.

A classical image autoencoder takes an image, maps it to a latent vector space via an encoder module, and then decodes it back to an output with the same dimensions as the original image, via a decoder module (see figure 12.16). It's then trained by using as target data the same images as the input images, meaning the autoencoder learns to reconstruct the original inputs. By imposing various constraints on the code (the output of the encoder), you can get the autoencoder to learn more- or less-interesting latent representations of the data. Most commonly, you'll constrain the code to be low-dimensional and sparse (mostly zeros), in which case the encoder acts as a way to compress the input data into fewer bits of information.

Figure 12.16 An autoencoder mapping an input x to a compressed representation and then decoding it back as x'

In practice, such classical autoencoders don't lead to particularly useful or nicely structured latent spaces. They're not much good at compression, either. For these reasons, they have largely fallen out of fashion. VAEs, however, augment autoencoders with a little bit of statistical magic that forces them to learn continuous, highly structured latent spaces. They have turned out to be a powerful tool for image generation.

A VAE, instead of compressing its input image into a fixed code in the latent space, turns the image into the parameters of a statistical distribution: a mean and a variance. Essentially, this means we're assuming the input image has been generated by a statistical process, and that the randomness of this process should be taken into account during encoding and decoding. The VAE then uses the mean and variance parameters to randomly sample one element of the distribution, and decodes that element back to the original input (see figure 12.17). The stochasticity of this process improves robustness and forces the latent space to encode meaningful representations everywhere: every point sampled in the latent space is decoded to a valid output.

Figure 12.17 A VAE maps an image to two vectors, z_mean and z_log_sigma, which define a probability distribution over the latent space, used to sample a latent point to decode.

- An encoder module turns the input sample, input_img, into two parameters in a latent space of representations, z_mean and z_log_variance.

- You randomly sample a point z from the latent normal distribution that's assumed to generate the input image, via z = z_mean + exp(z_log_variance) * epsilon, where epsilon is a random tensor of small values.

- A decoder module maps this point in the latent space back to the original input image.

Because epsilon is random, the process ensures that every point that's close to the latent location where you encoded input_img (z_mean) can be decoded to something similar to input_img, thus forcing the latent space to be continuously meaningful. Any two close points in the latent space will decode to highly similar images. Continuity, combined with the low dimensionality of the latent space, forces every direction in the latent space to encode a meaningful axis of variation of the data, making the latent space very structured and thus highly suitable to manipulation via concept vectors.
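The sampling step can be sketched in NumPy as follows. One detail is an assumption layered on the schematic formula: if the encoder output is interpreted as the log of the variance, then the standard deviation is exp(0.5 * z_log_var), and that is the factor used below. The function name `sample_latent` and the example values are illustrative.

```python
import numpy as np


def sample_latent(z_mean, z_log_var, rng):
    """Draw z ~ N(z_mean, exp(z_log_var)) using the reparameterization trick."""
    epsilon = rng.standard_normal(z_mean.shape)  # noise independent of the inputs
    return z_mean + np.exp(0.5 * z_log_var) * epsilon


rng = np.random.default_rng(0)
z_mean = np.array([1.0, -1.0])

# Unit variance: samples scatter around z_mean.
z_wide = sample_latent(z_mean, np.zeros(2), rng)

# Variance close to zero: the sample collapses onto z_mean.
z_tight = sample_latent(z_mean, np.full(2, -100.0), rng)
```

Writing the sample as a deterministic function of z_mean, z_log_var, and external noise is what lets gradients flow back into the encoder during training.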

The parameters of a VAE are trained via two loss functions: a reconstruction loss that forces the decoded samples to match the initial inputs, and a regularization loss that helps learn well-rounded latent distributions and reduces overfitting to the training data. Schematically, the process looks like this:

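# Encode the input into mean and log-variance parameters.
z_mean, z_log_variance = encoder(input_img)
# Draw a latent point using a random epsilon (0.5 turns log-variance into log-std).
z = z_mean + exp(0.5 * z_log_variance) * epsilon
# Decode z back to an image.
reconstructed_img = decoder(z)
# Instantiate the autoencoder model, which maps an input image to its reconstruction.
model = Model(input_img, reconstructed_img)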

You can then train the model using the reconstruction loss and the regularization loss. For the regularization loss, we typically use an expression (the Kullback–Leibler divergence) meant to nudge the distribution of the encoder output toward a well-rounded normal distribution centered around 0. This provides the encoder with a sensible assumption about the structure of the latent space it's modeling.
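To make the regularization term concrete, here is a hedged NumPy sketch of the closed-form Kullback–Leibler divergence between a diagonal Gaussian and a standard normal distribution. The function name kl_to_standard_normal is an assumption for illustration. The sketch sums over the latent dimensions, which is the textbook form; listing 12.27 instead averages the same per-dimension terms, which only rescales the penalty.

```python
import numpy as np

def kl_to_standard_normal(z_mean, z_log_var):
    """KL(N(z_mean, exp(z_log_var)) || N(0, 1)), summed over latent dimensions."""
    per_dim = -0.5 * (1.0 + z_log_var - np.square(z_mean) - np.exp(z_log_var))
    return per_dim.sum(axis=-1)

# Encoder output that already matches the prior: no penalty.
kl_at_prior = kl_to_standard_normal(np.zeros((1, 2)), np.zeros((1, 2)))

# Mean shifted away from 0 along one dimension: penalty of 0.5 * mean**2.
kl_shifted = kl_to_standard_normal(np.array([[1.0, 0.0]]), np.zeros((1, 2)))

# Variance much smaller than 1 (an overconfident encoder): also penalized.
kl_narrow = kl_to_standard_normal(np.zeros((1, 2)), np.full((1, 2), -2.0))
```

The penalty is zero only when the encoder outputs a zero mean and unit variance, so it pulls every encoded distribution toward the same region around the origin.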

We're going to be implementing a VAE that can generate MNIST digits. It's going to have three parts:

- An encoder network that turns a real image into a mean and a variance in the latent space

- A sampling layer that takes such a mean and variance, and uses them to sample a random point from the latent space

- A decoder network that turns points from the latent space back into images

The following listing shows the encoder network we'll use, mapping images to the parameters of a probability distribution over the latent space. It's a simple convnet that maps the input image x to two vectors, z_mean and z_log_var. One important detail is that we use strides for downsampling feature maps instead of max pooling. The last time we did this was in the image segmentation example in chapter 9. Recall that, in general, strides are preferable to max pooling for any model that cares about information location (that is to say, where stuff is in the image), and this one does, since it will have to produce an image encoding that can be used to reconstruct a valid image.

Listing 12.24 VAE encoder network

from tensorflow import keras
from tensorflow.keras import layers

latent_dim = 2  #1

encoder_inputs = keras.Input(shape=(28, 28, 1))
x = layers.Conv2D(
    32, 3, activation="relu", strides=2, padding="same")(encoder_inputs)
x = layers.Conv2D(64, 3, activation="relu", strides=2, padding="same")(x)
x = layers.Flatten()(x)
x = layers.Dense(16, activation="relu")(x)
z_mean = layers.Dense(latent_dim, name="z_mean")(x)  #2
z_log_var = layers.Dense(latent_dim, name="z_log_var")(x)  #2
encoder = keras.Model(encoder_inputs, [z_mean, z_log_var], name="encoder")

#1 Dimensionality of the latent space: a 2D plane

#2 The input image ends up being encoded into these two parameters.

>>> encoder.summary()
Model: "encoder"
__________________________________________________________________________________________________
Layer (type)                    Output Shape         Param #     Connected to
==================================================================================================
input_1 (InputLayer)            [(None, 28, 28, 1)]  0
__________________________________________________________________________________________________
conv2d (Conv2D)                 (None, 14, 14, 32)   320         input_1[0][0]
__________________________________________________________________________________________________
conv2d_1 (Conv2D)               (None, 7, 7, 64)     18496       conv2d[0][0]
__________________________________________________________________________________________________
flatten (Flatten)               (None, 3136)         0           conv2d_1[0][0]
__________________________________________________________________________________________________
dense (Dense)                   (None, 16)           50192       flatten[0][0]
__________________________________________________________________________________________________
z_mean (Dense)                  (None, 2)            34          dense[0][0]
__________________________________________________________________________________________________
z_log_var (Dense)               (None, 2)            34          dense[0][0]
==================================================================================================
Total params: 69,076
Trainable params: 69,076
Non-trainable params: 0
__________________________________________________________________________________________________
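The shapes and parameter counts in this summary can be checked by hand. The following sketch redoes the arithmetic in plain Python; the helper names are assumptions introduced for illustration and are not part of Keras.

```python
import math

def conv_same_output(size, stride):
    # With padding="same", a strided convolution outputs ceil(size / stride)
    return math.ceil(size / stride)

def conv2d_params(kernel, in_channels, filters):
    # kernel * kernel * in_channels weights per filter, plus one bias per filter
    return (kernel * kernel * in_channels + 1) * filters

def dense_params(in_units, out_units):
    # One weight per input-output pair, plus one bias per output unit
    return (in_units + 1) * out_units

side_1 = conv_same_output(28, 2)      # spatial size after the first Conv2D
side_2 = conv_same_output(side_1, 2)  # spatial size after the second Conv2D
flat_units = side_2 * side_2 * 64     # size of the Flatten output

encoder_params = [
    conv2d_params(3, 1, 32),       # conv2d
    conv2d_params(3, 32, 64),      # conv2d_1
    dense_params(flat_units, 16),  # dense
    dense_params(16, 2),           # z_mean
    dense_params(16, 2),           # z_log_var
]
total_encoder_params = sum(encoder_params)
```

Each stride-2 convolution halves the spatial size (28 to 14 to 7), so the downsampling happens inside the convolutions themselves rather than in separate pooling layers.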

Next is the code for using z_mean and z_log_var, the parameters of the statistical distribution assumed to have produced input_img, to generate a latent space point z.

Listing 12.25 Latent-space-sampling layer

import tensorflow as tf

class Sampler(layers.Layer):
    def call(self, z_mean, z_log_var):
        batch_size = tf.shape(z_mean)[0]
        z_size = tf.shape(z_mean)[1]
        epsilon = tf.random.normal(shape=(batch_size, z_size))  #1
        return z_mean + tf.exp(0.5 * z_log_var) * epsilon  #2

#1 Draw a batch of random normal vectors.

#2 Apply the VAE sampling formula.

The following listing shows the decoder implementation. We reshape the vector z to the dimensions of an image and then use a few convolution layers to obtain a final image output that has the same dimensions as the original input_img.

Listing 12.26 VAE decoder network, mapping latent space points to images

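# Input where we feed the latent point z
latent_inputs = keras.Input(shape=(latent_dim,))
# Produce as many coefficients as the encoder had at the level of its Flatten layer
x = layers.Dense(7 * 7 * 64, activation="relu")(latent_inputs)
# Undo the Flatten layer of the encoder
x = layers.Reshape((7, 7, 64))(x)
# Undo the strided Conv2D layers of the encoder: each layer doubles the spatial size
x = layers.Conv2DTranspose(64, 3, activation="relu", strides=2, padding="same")(x)
x = layers.Conv2DTranspose(32, 3, activation="relu", strides=2, padding="same")(x)
# The output ends up with shape (28, 28, 1), with pixel values in [0, 1]
decoder_outputs = layers.Conv2D(1, 3, activation="sigmoid", padding="same")(x)
decoder = keras.Model(latent_inputs, decoder_outputs, name="decoder")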

>>> decoder.summary()
Model: "decoder"
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
input_2 (InputLayer)         [(None, 2)]               0
_________________________________________________________________
dense_1 (Dense)              (None, 3136)              9408
_________________________________________________________________
reshape (Reshape)            (None, 7, 7, 64)          0
_________________________________________________________________
conv2d_transpose (Conv2DTran (None, 14, 14, 64)        36928
_________________________________________________________________
conv2d_transpose_1 (Conv2DTr (None, 28, 28, 32)        18464
_________________________________________________________________
conv2d_2 (Conv2D)            (None, 28, 28, 1)         289
=================================================================
Total params: 65,089
Trainable params: 65,089
Non-trainable params: 0
_________________________________________________________________
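As a quick sanity check on this summary, the sketch below reproduces the upsampling arithmetic of the decoder. The helper names are hypothetical; the only assumption about Keras is that a Conv2DTranspose layer with padding="same" multiplies the spatial size by its stride.

```python
def transpose_same_output(size, stride):
    # With padding="same", a transposed convolution outputs size * stride
    return size * stride

def conv_params(kernel, in_channels, filters):
    # Same count for Conv2D and Conv2DTranspose: weights plus one bias per filter
    return (kernel * kernel * in_channels + 1) * filters

side_1 = transpose_same_output(7, 2)       # after the first Conv2DTranspose
side_2 = transpose_same_output(side_1, 2)  # after the second Conv2DTranspose

decoder_params = [
    (2 + 1) * 7 * 7 * 64,    # dense_1: 2 latent inputs plus bias, 3136 outputs
    conv_params(3, 64, 64),  # conv2d_transpose
    conv_params(3, 64, 32),  # conv2d_transpose_1
    conv_params(3, 32, 1),   # conv2d_2
]
total_decoder_params = sum(decoder_params)
```

The decoder mirrors the encoder: two stride-2 transposed convolutions take the 7 × 7 feature map back to the 28 × 28 resolution of the input.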

Now let's create the VAE model itself. This is your first example of a model that isn't doing supervised learning (an autoencoder is an example of self-supervised learning, because it uses its inputs as targets). Whenever you depart from classic supervised learning, it's common to subclass the Model class and implement a custom train_step() to specify the new training logic, a workflow you learned about in chapter 7. That's what we'll do here.

Listing 12.27 VAE model with custom train_step()

class VAE(keras.Model):
    def __init__(self, encoder, decoder, **kwargs):
        super().__init__(**kwargs)
        self.encoder = encoder
        self.decoder = decoder
        self.sampler = Sampler()
        self.total_loss_tracker = keras.metrics.Mean(name="total_loss")  #1
        self.reconstruction_loss_tracker = keras.metrics.Mean(  #1
            name="reconstruction_loss")
        self.kl_loss_tracker = keras.metrics.Mean(name="kl_loss")  #1

    @property
    def metrics(self):  #2
        return [self.total_loss_tracker,
                self.reconstruction_loss_tracker,
                self.kl_loss_tracker]

    def train_step(self, data):
        with tf.GradientTape() as tape:
            z_mean, z_log_var = self.encoder(data)
            z = self.sampler(z_mean, z_log_var)
            # Use the decoder owned by this model, not a global variable
            reconstruction = self.decoder(z)
            reconstruction_loss = tf.reduce_mean(  #3
                tf.reduce_sum(
                    keras.losses.binary_crossentropy(data, reconstruction),
                    axis=(1, 2)
                )
            )
            kl_loss = -0.5 * (1 + z_log_var - tf.square(z_mean) -
                              tf.exp(z_log_var))  #4
            total_loss = reconstruction_loss + tf.reduce_mean(kl_loss)  #4
        grads = tape.gradient(total_loss, self.trainable_weights)
        self.optimizer.apply_gradients(zip(grads, self.trainable_weights))
        self.total_loss_tracker.update_state(total_loss)
        self.reconstruction_loss_tracker.update_state(reconstruction_loss)
        self.kl_loss_tracker.update_state(kl_loss)
        return {
            "total_loss": self.total_loss_tracker.result(),
            "reconstruction_loss": self.reconstruction_loss_tracker.result(),
            "kl_loss": self.kl_loss_tracker.result(),
        }

#1 We use these metrics to keep track of the loss averages over each epoch.

#2 We list the metrics in the metrics property to enable the model to reset them after each epoch (or between multiple calls to fit()/evaluate()).

#3 We sum the reconstruction loss over the spatial dimensions (axes 1 and 2) and take its mean over the batch dimension.

#4 Add the regularization term (Kullback–Leibler divergence).
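The reduction order in the reconstruction loss (annotation #3) is easy to get wrong, so here is a NumPy sketch of the same computation. It assumes that keras.losses.binary_crossentropy averages the per-element cross-entropy over the last (channel) axis and clips predictions with a small epsilon; the function name reconstruction_loss and the random stand-in data are illustrative only.

```python
import numpy as np

def reconstruction_loss(data, reconstruction, eps=1e-7):
    """Binary cross-entropy, summed over pixels, averaged over the batch."""
    data = np.asarray(data, dtype=np.float64)
    # Clip predictions away from 0 and 1 so the logarithms stay finite
    p = np.clip(np.asarray(reconstruction, dtype=np.float64), eps, 1.0 - eps)
    bce = -(data * np.log(p) + (1.0 - data) * np.log(1.0 - p))
    per_pixel = bce.mean(axis=-1)           # (batch, height, width)
    per_image = per_pixel.sum(axis=(1, 2))  # (batch,)
    return per_image.mean()                 # scalar

rng = np.random.default_rng(0)
# Stand-in batch of 4 binary "images" with the MNIST shape
data = (rng.random((4, 28, 28, 1)) > 0.5).astype("float32")

# Predicting 0.5 everywhere costs log(2) per pixel, over 28 * 28 pixels
loss_uninformed = reconstruction_loss(data, np.full_like(data, 0.5))
# A perfect reconstruction costs almost nothing
loss_perfect = reconstruction_loss(data, data)
```

Summing over the spatial axes means the reconstruction term scales with the number of pixels, which is part of what sets its balance against the KL term.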

Finally, we're ready to instantiate and train the model on MNIST digits. Because the loss is taken care of in the custom train_step(), we don't specify an external loss at compile time (loss=None), which in turn means we won't pass target data during training (as you can see, we only pass mnist_digits to the model in fit()).

Listing 12.28 Training the VAE

import numpy as np

(x_train, _), (x_test, _) = keras.datasets.mnist.load_data()

mnist_digits = np.concatenate([x_train, x_test], axis=0) #1

mnist_digits = np.expand_dims(mnist_digits, -1).astype("float32") / 255

vae = VAE(encoder, decoder)

vae.compile(optimizer=keras.optimizers.Adam(), run_eagerly=True) #2

vae.fit(mnist_digits, epochs=30, batch_size=128) #3

#1 We train on all MNIST digits, so we concatenate the training and test samples.

#2 Note that we don’t pass a loss argument in compile(), since the loss is already part of the train_step().

#3 Note that we don’t pass targets in fit(), since train_step() doesn’t expect any.
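The preprocessing in listing 12.28 can be tried without downloading MNIST. The sketch below uses small random uint8 arrays as stand-ins for x_train and x_test (an assumption for illustration) and applies the same concatenate, expand_dims, and rescaling steps. It also works out the number of batches per epoch for the real dataset, which has 60,000 training and 10,000 test images.

```python
import math
import numpy as np

rng = np.random.default_rng(0)
# Stand-ins for x_train and x_test: uint8 grayscale images of shape (n, 28, 28)
fake_train = rng.integers(0, 256, size=(6, 28, 28), dtype=np.uint8)
fake_test = rng.integers(0, 256, size=(4, 28, 28), dtype=np.uint8)

digits = np.concatenate([fake_train, fake_test], axis=0)   # (10, 28, 28)
digits = np.expand_dims(digits, -1).astype("float32") / 255  # (10, 28, 28, 1)

# With the real data: 60,000 + 10,000 images in batches of 128
steps_per_epoch = math.ceil((60_000 + 10_000) / 128)
```

The trailing channel axis matches the encoder input shape (28, 28, 1), and scaling to the range [0, 1] matches the sigmoid output of the decoder, which the binary cross-entropy loss relies on.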

Once the model is trained, we can use the decoder network to turn arbitrary latent space vectors into images.

Listing 12.29 Sampling a grid of images from the 2D latent space

import matplotlib.pyplot as plt

n = 30  #1
digit_size = 28
figure = np.zeros((digit_size * n, digit_size * n))

grid_x = np.linspace(-1, 1, n)  #2
grid_y = np.linspace(-1, 1, n)[::-1]  #2

for i, yi in enumerate(grid_y):  #3
    for j, xi in enumerate(grid_x):  #3
        z_sample = np.array([[xi, yi]])  #4
        x_decoded = vae.decoder.predict(z_sample)  #4
        digit = x_decoded[0].reshape(digit_size, digit_size)  #4
        figure[
            i * digit_size : (i + 1) * digit_size,
            j * digit_size : (j + 1) * digit_size,
        ] = digit

plt.figure(figsize=(15, 15))
start_range = digit_size // 2
end_range = n * digit_size + start_range
pixel_range = np.arange(start_range, end_range, digit_size)
sample_range_x = np.round(grid_x, 1)
sample_range_y = np.round(grid_y, 1)
plt.xticks(pixel_range, sample_range_x)
plt.yticks(pixel_range, sample_range_y)
plt.xlabel("z[0]")
plt.ylabel("z[1]")
plt.axis("off")
plt.imshow(figure, cmap="Greys_r")

#1 We'll display a grid of 30 × 30 digits (900 digits total).

#2 Sample points linearly on a 2D grid.

#3 Iterate over grid locations.

#4 For each location, sample a digit and add it to our figure.

The grid of sampled digits (see figure 12.18) shows a completely continuous distribution of the different digit classes, with one digit morphing into another as you follow a path through latent space. Specific directions in this space have a meaning: for example, there are directions for “five-ness,” “one-ness,” and so on.
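Following a path through latent space can be sketched as a linear interpolation between two latent points. In the example below, interpolate_latent and stub_decoder are hypothetical names: the stub merely stands in for a trained decoder (with a real model you would call vae.decoder.predict() on the path instead), and the endpoints are arbitrary.

```python
import numpy as np

def interpolate_latent(z_start, z_end, steps):
    """Return `steps` points evenly spaced on the line from z_start to z_end."""
    t = np.linspace(0.0, 1.0, steps)[:, None]
    return (1.0 - t) * z_start + t * z_end

def stub_decoder(z_batch):
    # Hypothetical stand-in for a trained decoder: maps each latent point to a
    # flat 28 x 28 image whose brightness depends smoothly on the latent point.
    intensity = 1.0 / (1.0 + np.exp(-z_batch.sum(axis=1)))
    return np.ones((len(z_batch), 28, 28, 1)) * intensity[:, None, None, None]

path = interpolate_latent(np.array([-1.0, -1.0]), np.array([1.0, 1.0]), steps=5)
frames = stub_decoder(path)  # (5, 28, 28, 1)

# Lay the frames side by side, as in the grid figure, to see the gradual change
filmstrip = np.concatenate(list(frames[..., 0]), axis=1)  # (28, 5 * 28)
```

Because the latent space of a VAE is continuous, decoding such a path with a trained decoder is expected to produce a smooth morph between the two endpoint images rather than abrupt jumps.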

In the next section, we'll cover in detail the other major tool for generating artificial images: generative adversarial networks (GANs).

- Image generation with deep learning is done by learning latent spaces that capture statistical information about a dataset of images. By sampling and decoding points from the latent space, you can generate never-before-seen images. There are two major tools to do this: VAEs and GANs.

- VAEs result in highly structured, continuous latent representations. For this reason, they work well for doing all sorts of image editing in latent space: face swapping, turning a frowning face into a smiling face, and so on. They also work nicely for doing latent-space-based animations, such as animating a walk along a cross section of the latent space or showing a starting image slowly morphing into different images in a continuous way.

- GANs enable the generation of realistic single-frame images but may not induce latent spaces with solid structure and high continuity.

Most successful practical applications I have seen with images rely on VAEs, but GANs have enjoyed enduring popularity in the world of academic research. You'll find out how they work and how to implement one in the next section.

Generative adversarial networks (GANs), introduced in 2014 by Goodfellow et al.,⁷ are an alternative to VAEs for learning latent spaces of images. They enable the generation of fairly realistic synthetic images by forcing the generated images to be statistically almost indistinguishable from real ones.

7 Ian Goodfellow et al., “Generative Adversarial Networks,” arXiv (2014), https://arxiv.org/abs/1406.2661.