Chapter 7. Packaging software in images

This chapter covers

- Manual image construction and practices

- Images from a packaging perspective

- Working with flat images

- Image versioning best practices

The goal of this chapter is to help you understand the concerns of image design, learn the tools for building images, and discover advanced image patterns. You will accomplish these things by working through a thorough real-world example. Before getting started, you should have a firm grasp of the concepts in part 1 of this book.

You can create a Docker image by either modifying an existing image inside a container or defining and executing a build script called a Dockerfile. This chapter focuses on the process of manually changing an image, the fundamental mechanics of image manipulation, and the artifacts that are produced. Dockerfiles and build automation are covered in chapter 8.

It’s easy to get started building images if you’re already familiar with using containers. Remember, a union file system (UFS) mount provides a container’s file system. Any changes that you make to the file system inside a container will be written as new layers that are owned by the container that created them.

Before you work with real software, walk through the typical workflow with a Hello World example in the next section.

The basic workflow for building an image from a container includes three steps. First, you need to create a container from an existing image. You will choose the image based on what you want to be included with the new finished image and the tools you will need to make the changes.

The second step is to modify the file system of the container. These changes will be written to a new layer on the union file system for the container. We’ll revisit the relationship between images, layers, and repositories later in this chapter.

Once the changes have been made, the last step is to commit those changes. Once the changes are committed, you’ll be able to create new containers from the resulting image. Figure 7.1 illustrates this workflow.

With these steps in mind, work through the following commands to create a new image named hw_image.

If that seems stunningly simple, you should know that it does become a bit more nuanced as the images you produce become more sophisticated, but the basic steps will always be the same.

Now that you have an idea of the workflow, you should try to build a new image with real software. In this case, you’ll be packaging a program called Git.

Git is a popular, distributed version-control tool. Whole books have been written about the topic. If you’re unfamiliar with it, I recommend that you spend some time learning how to use Git. At the moment, though, you only need to know that it’s a program you’re going to install onto an Ubuntu image.

To get started building your own image, the first thing you’ll need is a container created from an appropriate base image:

This will start a new container running the bash shell. From this prompt, you can issue commands to customize your container. Ubuntu ships with a Linux tool for software installation called apt-get. This will come in handy for acquiring the software that you want to package in a Docker image. You should now have an interactive shell running with your container. Next, you need to install Git in the container. Do that by running the following command:

This will tell APT to download and install Git and all its dependencies on the container’s file system. When it’s finished, you can test the installation by running the git program:

Package tools like apt-get make installing and uninstalling software easier than if you had to do everything by hand. But on their own they provide no isolation for that software, and dependency conflicts often occur. Because you installed Git inside a container, you can be sure that other software you install outside this container won’t impact the version of Git you have installed.

Now that Git has been installed on your Ubuntu container, you can simply exit the container:

The container should be stopped but still present on your computer. Git has been installed in a new layer on top of the ubuntu:latest image. If you were to walk away from this example right now and return a few days later, how would you know exactly what changes were made? When you’re packaging software, it’s often useful to review the list of files that have been modified in a container, and Docker has a command for that.

Docker has a command that shows you all the file-system changes that have been made inside a container. These changes include added, changed, or deleted files and directories. To review the changes that you made when you used APT to install Git, run the diff subcommand:

Lines that start with an A are files that were added. Those starting with a C were changed. Finally, those with a D were deleted. Installing Git with APT in this way made several changes. For that reason, it might be better to see this at work with a few specific examples:

Always remember to clean up your workspace, like this:

Now that you’ve seen the changes you’ve made to the file system, you’re ready to commit the changes to a new image. As with most other things, this involves a single command that does several things.

You use the docker commit command to create an image from a modified container. It’s a best practice to use the -a flag that signs the image with an author string. You should also always use the -m flag, which sets a commit message. Create and sign a new image that you’ll name ubuntu-git from the image-dev container where you installed Git:
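As a minimal sketch of how those flags fit together, the following Python helper assembles the argument list for docker commit without running it. The helper name commit_command, the author string, and the commit message are placeholders chosen for illustration; only the -a and -m flags and the image-dev and ubuntu-git names come from the text.

```python
import shlex


def commit_command(container, image, author, message):
    """Build the argument list for `docker commit` with an author and a message."""
    return ["docker", "commit", "-a", author, "-m", message, container, image]


# The author string and commit message below are placeholders, not values from the text.
argv = commit_command("image-dev", "ubuntu-git", "@example-author", "Added git")

# Print a shell-quoted form that could be pasted into a terminal.
print(shlex.join(argv))
```

Building the list separately from running it makes the command easy to review or log before anything is committed. If you wanted to execute it, passing argv to subprocess.run would be one option.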

Once you’ve committed the image, it should show up in the list of images installed on your computer. Running docker images should include a line like this:

Make sure it works by testing Git in a container created from that image:

Now you’ve created a new image based on an Ubuntu image and installed Git. That’s a great start, but what do you think will happen if you omit the command override? Try it to find out:

Nothing appears to happen when you run that command. That’s because the command you started the original container with was committed with the new image. The container that the image was created from was started with /bin/bash. When you create a container from this image using the default command, it will start a shell and immediately exit. That’s not a terribly useful default command.

I doubt that any users of an image named ubuntu-git would expect that they’d need to manually invoke Git each time. It would be better to set an entrypoint on the image to git. An entrypoint is the program that will be executed when the container starts. If the entrypoint isn’t set, the default command will be executed directly. If the entrypoint is set, the default command and its arguments will be passed to the entrypoint as arguments.
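The rule for combining an entrypoint with a default command can be sketched in a few lines of Python. This is only a model of the behavior described above, not Docker's implementation, and the function name resolve_argv is made up for the example.

```python
def resolve_argv(entrypoint, command):
    """Return the argument vector a container would start with.

    With no entrypoint, the command runs directly. With an entrypoint,
    the command and its arguments are appended to it as arguments.
    """
    if not entrypoint:
        return list(command)
    return list(entrypoint) + list(command)


# No entrypoint: the default command is executed as-is.
print(resolve_argv([], ["/bin/bash"]))      # ['/bin/bash']

# Entrypoint set to git: the command becomes its arguments.
print(resolve_argv(["git"], ["version"]))   # ['git', 'version']
```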

To set the entrypoint, you’ll need to create a new container with the --entrypoint flag set and create a new image from that container:

Now that the entrypoint has been set to git, users no longer need to type the command at the end. This might seem like a marginal savings with this example, but many tools that people use are not as succinct. Setting the entrypoint is just one thing you can do to make images easier for people to use and integrate into their projects.

When you use docker commit, you commit a new layer to an image. The file-system snapshot isn’t the only thing included with this commit. Each layer also includes metadata describing the execution context. Of the parameters that can be set when a container is created, all the following will carry forward with an image created from the container:

- All environment variables

- The working directory

- The set of exposed ports

- All volume definitions

- The container entrypoint

- Command and arguments

If these values weren’t specifically set for the container, the values will be inherited from the original image. Part 1 of this book covers each of these, so I won’t reintroduce them here. But it may be valuable to examine two detailed examples. First, consider a container that introduces two environment variable specializations:

Next, consider a container that introduces an entrypoint and command specialization as a new layer on top of the previous example:

This example builds two additional layers on top of BusyBox. In neither case are files changed, but the behavior changes because the context metadata has been altered. These changes include two new environment variables in the first new layer. Those environment variables are clearly inherited by the second new layer, which sets the entrypoint and default command to display their values. The last command uses the final image without specifying any alternative behavior, but it’s clear that the previously defined behavior has been inherited.
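One way to picture this inheritance is as a chain of context records in which each new layer overrides only what it sets. The Python sketch below is a simplified model under that assumption. The Context class, the commit function, and the variable names EXAMPLE_A and EXAMPLE_B are hypothetical and are not Docker's data structures.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Context:
    """Execution context stored with a layer (simplified)."""
    env: dict = field(default_factory=dict)
    entrypoint: tuple = ()
    cmd: tuple = ()


def commit(parent, env=None, entrypoint=None, cmd=None):
    """Create a child context; anything not specified is inherited from the parent."""
    return Context(
        env={**parent.env, **(env or {})},
        entrypoint=parent.entrypoint if entrypoint is None else tuple(entrypoint),
        cmd=parent.cmd if cmd is None else tuple(cmd),
    )


base = Context(cmd=("sh",))

# First new layer: only two environment variables are introduced.
layer1 = commit(base, env={"EXAMPLE_A": "first", "EXAMPLE_B": "second"})

# Second new layer: entrypoint and command change, the environment is inherited.
layer2 = commit(layer1, entrypoint=["/bin/sh", "-c"], cmd=["echo $EXAMPLE_A $EXAMPLE_B"])

print(layer1.cmd)   # ('sh',) inherited from the base
print(layer2.env)   # both variables are still present
```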

Now that you understand how to modify an image, take the time to dive deeper into the mechanics of images and layers. Doing so will help you produce high-quality images in real-world situations.

By this point in the chapter, you’ve built a few images. In those examples you started by creating a container from an image like ubuntu:latest or busybox:latest. Then you made changes to the file system or context within that container. Finally, everything seemed to just work when you used the docker commit command to create a new image. Understanding how the container’s file system works and what the docker commit command actually does will help you become a better image author. This section dives into that subject and demonstrates the impact to authors.

Understanding the details of union file systems (UFS) is important for image authors for two reasons:

- Authors need to know the impact that adding, changing, and deleting files have on resulting images.

- Authors need to have a solid understanding of the relationship between layers and how layers relate to images, repositories, and tags.

Start by considering a simple example. Suppose you want to make a single change to an existing image. In this case the image is ubuntu:latest, and you want to add a file named mychange to the root directory. You should use the following command to do this:

The resulting container (named mod_ubuntu) will be stopped but will have written that single change to its file system. As discussed in chapters 3 and 4, the root file system is provided by the image that the container was started from. That file system is implemented with something called a union file system.

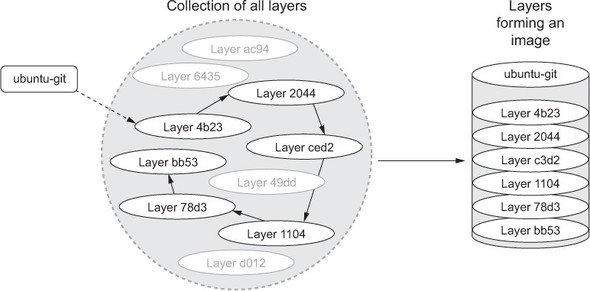

A union file system is made up of layers. Each time a change is made to a union file system, that change is recorded on a new layer on top of all of the others. The “union” of all of those layers, or top-down view, is what the container (and user) sees when accessing the file system. Figure 7.2 illustrates the two perspectives for this example.

When you read a file from a union file system, that file will be read from the top-most layer where it exists. If a file was not created or changed on the top layer, the read will fall through the layers until it reaches a layer where that file does exist. This is illustrated in figure 7.3.
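The fall-through read can be modeled with a list of dictionaries, one per layer. This is a toy sketch that covers only added and overridden files, not deletions, and the file names other than mychange are invented for the example.

```python
def read_file(layers, path):
    """Read `path` from the top-most layer that contains it.

    `layers` is ordered from bottom to top, so the search runs in reverse.
    """
    for layer in reversed(layers):
        if path in layer:
            return layer[path]
    raise FileNotFoundError(path)


image_layer = {"/etc/os-release": "ubuntu", "/bin/bash": "shell"}
container_layer = {"/mychange": ""}          # the single change made in the container
layers = [image_layer, container_layer]

print(repr(read_file(layers, "/mychange")))   # found in the top layer
print(read_file(layers, "/etc/os-release"))   # falls through to the image layer
```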

All this layer functionality is hidden by the union file system. No special actions need to be taken by the software running in a container to take advantage of these features. Understanding layers where files were added covers one of three types of file system writes. The other two are deletions and file changes.

Like additions, both file changes and deletions work by modifying the top layer. When a file is deleted, a delete record is written to the top layer, which overshadows any versions of that file on lower layers. When a file is changed, that change is written to the top layer, which again shadows any versions of that file on lower layers. The changes made to the file system of a container are listed with the docker diff command you used earlier in the chapter:

This command will produce the output:

The A in this case indicates that the file was added. Run the next two commands to see how a file deletion is recorded:

This time the output will have two rows:

The D indicates a deletion, but this time the parent folder of the file was also included. The C indicates that it was changed. The next two commands demonstrate a file change:

The diff subcommand will show two changes:

Again, the C indicates a change, and the two items are the file and the folder where it’s located. If a file nested five levels deep were changed, there would be a line for each level of the tree. File-change mechanics are the most important thing to understand about union file systems.
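The way parent folders show up in the listing can be approximated with a short Python function. This is a simplified model of the pattern described here, not a reimplementation of docker diff, and real output may differ in details such as how newly created directories are reported. The function name diff_lines and the nested path are hypothetical.

```python
from pathlib import PurePosixPath


def diff_lines(changes):
    """Render changes as 'A', 'C', or 'D' lines, marking parent folders as changed.

    `changes` maps absolute paths to a change kind. The root directory
    itself is never listed.
    """
    lines = dict(changes)
    for path in changes:
        for parent in PurePosixPath(path).parents:
            if str(parent) != "/":
                lines.setdefault(str(parent), "C")
    return [f"{kind} {path}" for path, kind in sorted(lines.items())]


print(diff_lines({"/mychange": "A"}))     # ['A /mychange']
print(diff_lines({"/bin/vi": "D"}))       # ['C /bin', 'D /bin/vi']
print(len(diff_lines({"/a/b/c/d/e": "C"})))  # 5, one line per level
```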

Most union file systems use something called copy-on-write, which is easier to understand if you think of it as copy-on-change. When a file in a read-only layer (not the top layer) is modified, the whole file is first copied from the read-only layer into the writable layer before the change is made. This has a negative impact on runtime performance and image size. Section 7.2.3 covers the way this should influence your image design.
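To see why copy-on-write affects size, consider this small Python model in which a one-byte change to a large file causes the whole file to appear in the writable layer. The write function and the log file path are assumptions made for the sketch. Real storage drivers differ in how they perform the copy.

```python
def write(lower, upper, path, patch):
    """Apply `patch` (bytes -> bytes) to `path` using copy-on-write.

    The read-only `lower` layer is never modified. The whole file is
    first copied into the writable `upper` layer, then changed there.
    """
    if path not in upper:
        upper[path] = lower.get(path, b"")   # copy the complete file up
    upper[path] = patch(upper[path])


lower = {"/var/log/app.log": b"x" * 1_000_000}   # read-only layer
upper = {}                                        # writable layer

# Append a single byte to a file that lives in the read-only layer.
write(lower, upper, "/var/log/app.log", lambda data: data + b"!")

print(len(lower["/var/log/app.log"]))  # 1000000, unchanged
print(len(upper["/var/log/app.log"]))  # 1000001, a full second copy
```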

Take a moment to solidify your understanding of the system by examining the more comprehensive set of scenarios illustrated in figure 7.4. In this illustration, files are added, changed, deleted, and added again over a range of three layers.

Knowing how file system changes are recorded, you can begin to understand what happens when you use the docker commit command to create a new image.

You’ve created an image using the docker commit command, and you understand that it commits the top-layer changes to an image. But we’ve yet to define commit.

Remember, a union file system is made up of a stack of layers where new layers are added to the top of the stack. Those layers are stored separately as collections of the changes made in that layer and metadata for that layer. When you commit a container’s changes to its file system, you’re saving a copy of that top layer in an identifiable way.

When you commit the layer, a new ID is generated for it, and copies of all the file changes are saved. Exactly how this happens depends on the storage engine that’s being used on your system. It’s less important for you to understand the details than it is for you to understand the general approach. The metadata for a layer includes that generated identifier, the identifier of the layer below it (parent), and the execution context of the container that the layer was created from. Layer identities and metadata form the graph that Docker and the UFS use to construct images.

An image is the stack of layers that you get by starting with a given top layer and then following all the links defined by the parent ID in each layer’s metadata, as shown in figure 7.5.

Figure 7.5. An image is the collection of layers produced by traversing the parent graph from a top layer.
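The traversal in figure 7.5 amounts to walking a linked list of parent IDs. The following Python sketch shows the idea with made-up layer IDs. The image_layers function and the metadata layout are illustrative only.

```python
def image_layers(layers, top_id):
    """Follow parent links from `top_id` and return layer IDs from top to bottom."""
    stack = []
    current = top_id
    while current is not None:
        stack.append(current)
        current = layers[current]["parent"]
    return stack


# Hypothetical layer metadata: each layer records only its parent's ID.
layers = {
    "aaa111": {"parent": None},
    "bbb222": {"parent": "aaa111"},
    "ccc333": {"parent": "bbb222"},
    "ddd444": {"parent": "bbb222"},
}

# Two different top layers produce two different images that share lower layers.
print(image_layers(layers, "ccc333"))  # ['ccc333', 'bbb222', 'aaa111']
print(image_layers(layers, "ddd444"))  # ['ddd444', 'bbb222', 'aaa111']
```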

Images are stacks of layers constructed by traversing the layer dependency graph from some starting layer. The layer that the traversal starts from is the top of the stack. This means that a layer’s ID is also the ID of the image that it and its dependencies form. Take a moment to see this in action by committing the mod_ubuntu container you created earlier:

That commit subcommand will generate output that includes a new image ID like this:

You can create a new container from this image using the image ID as it’s presented to you. Like container IDs, layer IDs are large hexadecimal numbers that can be difficult for a person to work with directly. For that reason, Docker provides repositories.

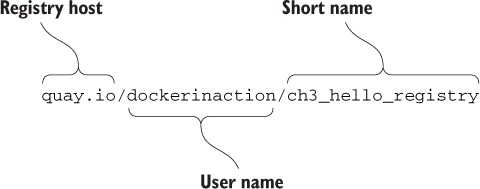

In chapter 3, a repository is roughly defined as a named bucket of images. More specifically, repositories are location/name pairs that point to a set of specific layer identifiers. Each repository contains at least one tag that points to a specific layer identifier and thus the image definition. Let’s revisit the example used in chapter 3, the repository quay.io/dockerinaction/ch3_hello_registry.

This repository is located in the registry hosted at quay.io. It’s named for the user (dockerinaction) and a unique short name (ch3_hello_registry). Pulling this repository would pull all the images defined for each tag in the repository. In this example, there’s only one tag, latest. That tag points to a layer with the short form ID 07c0f84777ef, as illustrated in figure 7.6.
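The parts of that repository name, and the tag lookup, can be sketched in Python as follows. This parser handles only the three-part registry/user/name form used in this example and is far simpler than the rules Docker applies. The function name parse_repository and the tags table are assumptions for illustration.

```python
def parse_repository(reference):
    """Split 'registry/user/name[:tag]' into its parts (simplified)."""
    registry, user, rest = reference.split("/", 2)
    name, _, tag = rest.partition(":")
    return {"registry": registry, "user": user, "name": name, "tag": tag or "latest"}


# A tag is a pointer from (repository, tag) to a layer ID.
tags = {
    ("quay.io/dockerinaction/ch3_hello_registry", "latest"): "07c0f84777ef",
}

parts = parse_repository("quay.io/dockerinaction/ch3_hello_registry")
repository = f"{parts['registry']}/{parts['user']}/{parts['name']}"

print(parts["tag"])                       # latest
print(tags[(repository, parts["tag"])])   # 07c0f84777ef
```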

Repositories and tags are created with the docker tag, docker commit, or docker build commands. Revisit the mod_ubuntu container again and put it into a repository with a tag:

The generated ID that’s displayed will be different because another copy of the layer was created. With this new friendly name, creating containers from your images requires little effort. If you want to copy an image, you only need to create a new tag or repository from the existing one. You can do that with the docker tag command. Docker assumes the latest tag whenever the tag is omitted, as in the previous command:

By this point you should have a strong understanding of basic UFS fundamentals as well as how Docker creates and manages layers, images, and repositories. With these in mind, let’s consider how they might impact image design.

All layers below the writable layer created for a container are immutable, meaning they can never be modified. This property makes it possible to share access to images instead of creating independent copies for every container. It also makes individual layers highly reusable. The other side of this property is that anytime you make changes to an image, you need to add a new layer, and old layers are never removed. Knowing that images will inevitably need to change, you need to be aware of any image limitations and keep in mind how changes impact image size.

If images evolved in the same way that most people manage their file systems, Docker images would quickly become unusable. For example, suppose you wanted to make a different version of the ubuntu-git image you created earlier in this chapter. It may seem natural to modify that ubuntu-git image. Before you do, create a new tag for your ubuntu-git image. You’ll be reassigning the latest tag:

The first thing you’ll do in building your new image is remove the version of Git you installed:

The image list and sizes reported will look something like the following:

Notice that even though you removed Git, the image actually increased in size. Although you could examine the specific changes with docker diff, you should be quick to realize that the reason for the increase has to do with the union file system.

Remember, UFS will mark a file as deleted by actually adding a file to the top layer. The original file and any copies that existed in other layers will still be present in the image. It’s important to minimize image size for the sake of the people and systems that will be consuming your images. If you can avoid causing long download times and significant disk usage with smart image creation, then your consumers will benefit. There’s also another risk with this approach that you should be aware of.
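A toy model makes the size effect concrete. In this Python sketch a deletion is recorded as a marker in the top layer, so the files a container sees shrink while the stored image does not. The file names, sizes, and the WHITEOUT marker are invented for the example, and the extra log file stands in for whatever a package manager might write while uninstalling.

```python
WHITEOUT = None  # marker meaning "this path was deleted in this layer"


def image_size(layers):
    """Sum the bytes stored in every layer, whether or not they are still visible."""
    return sum(len(data) for layer in layers for data in layer.values() if data is not WHITEOUT)


def visible_files(layers):
    """Compute the top-down view that a container would see."""
    view = {}
    for layer in layers:  # bottom to top
        for path, data in layer.items():
            if data is WHITEOUT:
                view.pop(path, None)
            else:
                view[path] = data
    return view


base = {"/bin/sh": b"s" * 100}
git_layer = {"/usr/bin/git": b"g" * 5000}
removal_layer = {"/usr/bin/git": WHITEOUT, "/var/log/apt.log": b"removed git\n"}

print(image_size([base, git_layer]))                           # 5100
print(image_size([base, git_layer, removal_layer]))            # 5112, larger after removal
print(sorted(visible_files([base, git_layer, removal_layer])))  # git is no longer visible
```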

The union file system on your computer may have a layer count limit. These limits vary, but a limit of 42 layers is common on computers that use the AUFS system. This number may seem high, but it’s not unreachable. You can examine all the layers in an image using the docker history command. It will display the following:

- Abbreviated layer ID

- Age of the layer

- Initial command of the creating container

- Total file size of that layer

By examining the history of the ubuntu-git:removed image, you can see that three layers have already been added on the top of the original ubuntu:latest image:

The output will look something like this:

You can flatten images if you export them and then reimport them with Docker. But that’s a bad idea because you lose the change history as well as any savings consumers might get when they download images that share the same lower layers. Flattening images defeats the purpose. The smarter thing to do in this case is to create a branch.

Instead of fighting the layer system, you can solve both the size and layer growth problems by using the layer system to create branches. The layer system makes it trivial to go back in the history of an image and make a new branch. You are potentially creating a new branch every time you create a container from the same image.

In reconsidering your strategy for your new ubuntu-git image, you should simply start from ubuntu:latest again. With a fresh container from ubuntu:latest, you could install whatever version of Git you want. The result would be that both the original ubuntu-git image you created and the new one would share the same parent, and the new image wouldn’t have any of the baggage of unrelated changes.
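The difference between stacking and branching can be compared with a small Python sketch. For simplicity it treats each step as exactly one layer, and every label in the parents table is hypothetical.

```python
def lineage(parents, top):
    """Return the chain of layers from `top` down to the base."""
    chain = []
    while top is not None:
        chain.append(top)
        top = parents[top]
    return chain


# Hypothetical history: one new Git image stacked on the removal, one branched from the base.
parents = {
    "base": None,
    "git-old": "base",
    "git-removed": "git-old",
    "git-new-stacked": "git-removed",
    "git-new-branch": "base",
}

stacked = lineage(parents, "git-new-stacked")
branch = lineage(parents, "git-new-branch")

print(len(stacked))  # 4 layers, including the old install and its removal
print(len(branch))   # 2 layers
print(set(branch) & set(lineage(parents, "git-old")))  # {'base'}, the shared parent
```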

Branching increases the likelihood that you’ll need to repeat steps that were accomplished in peer branches. Doing that work by hand is prone to error. Automating image builds with Dockerfiles is a better idea.

Occasionally the need arises to build a full image from scratch. This practice can be beneficial if your goal is to keep images small and if you’re working with technologies that have few dependencies. Other times you may want to flatten an image to trim an image’s history. In either case, you need a way to import and export full file systems.

On some occasions it’s advantageous to build images by working with the files destined for an image outside the context of the union file system or a container. To fill this need, Docker provides two commands for exporting and importing archives of files.

The docker export command will stream the full contents of the flattened union file system to stdout or an output file as a tarball. The result is a tarball that contains all the files from the container perspective. This can be useful if you need to use the file system that was shipped with an image outside the context of a container. You can use the docker cp command for this purpose, but if you need several files, exporting the full file system may be more direct.
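To illustrate what a flattened tarball looks like, the Python sketch below writes a top-down view of a file system into an in-memory tar archive using the standard tarfile module. It models the idea only and does not call Docker. The export_tar function and the two example files are assumptions.

```python
import io
import tarfile


def export_tar(flattened):
    """Write a {path: bytes} view of a file system to an in-memory tarball."""
    buffer = io.BytesIO()
    with tarfile.open(fileobj=buffer, mode="w") as archive:
        for path, data in sorted(flattened.items()):
            info = tarfile.TarInfo(name=path.lstrip("/"))
            info.size = len(data)
            archive.addfile(info, io.BytesIO(data))
    return buffer.getvalue()


# The flattened view carries no layer boundaries, only the final files.
tarball = export_tar({"/bin/hello": b"binary", "/etc/motd": b"hi\n"})

with tarfile.open(fileobj=io.BytesIO(tarball)) as archive:
    print(archive.getnames())  # ['bin/hello', 'etc/motd']
```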

Create a new container and use the export subcommand to get a flattened copy of its file system:

This will produce a file in the current directory named contents.tar. That file should contain two files. At this point you could extract, examine, or change those files to whatever end. If you had omitted the --output flag (or -o for short), then the contents of the file system would be streamed in tarball format to stdout. Streaming the contents to stdout makes the export command useful for chaining with other shell programs that work with tarballs.

The docker import command will stream the content of a tarball into a new image. The import command recognizes several compressed and uncompressed forms of tarballs. An optional Dockerfile instruction can also be applied during file-system import. Importing file systems is a simple way to get a complete minimum set of files into an image.

To see how useful this is, consider a statically linked Go version of Hello World. Create an empty folder and copy the following code into a new file named helloworld.go:

You may not have Go installed on your computer, but that’s no problem for a Docker user. By running the next command, Docker will pull an image containing the Go compiler, compile and statically link the code (which means it can run all by itself), and place that program back into your folder:

If everything works correctly, you should have an executable program (binary file) in the same folder, named hello. Statically linked programs have no external file dependencies at runtime. That means this statically linked version of Hello World can run in a container with no other files. The next step is to put that program in a tarball:

Now that the program has been packaged in a tarball, you can import it using the docker import command:

In this command you use the -c flag to specify a Dockerfile command. The command you use sets the entrypoint for the new image. The exact syntax of the Dockerfile command is covered in chapter 8. The more interesting argument on this command is the hyphen (-) at the end of the first line. This hyphen indicates that the contents of the tarball will be streamed through stdin. You can specify a URL at this position if you’re fetching the file from a remote web server instead of from your local file system.

You tagged the resulting image as the dockerinaction/ch7_static repository. Take a moment to explore the results:

You’ll notice that the history for this image has only a single entry (and layer):

In this case, the image we produced was small for two reasons. First, the program we produced was only just over 1.8 MB, and we included no operating system files or support programs. This is a minimalistic image. Second, there’s only one layer. There are no deleted or unused files carried with the image in lower layers. The downside to using single-layer (or flat) images is that your system won’t benefit from layer reuse. That might not be a problem if all your images are small enough. But the overhead may be significant if you use larger stacks or languages that don’t offer static linking.

There are trade-offs to every image design decision, including whether or not to use flat images. Regardless of the mechanism you use to build images, your users need a consistent and predictable way to identify different versions.

Pragmatic versioning practices help users make the best use of images. The goal of an effective versioning scheme is to communicate clearly and provide adoption flexibility.

It’s generally insufficient to build or maintain only a single version of your software unless it’s your first. If you’re releasing the first version of your software, you should be mindful of your users’ adoption experience immediately. The reason why versions are important is that they identify contracts that your adopters depend on. Unexpected software changes cause problems.

With Docker, the key to maintaining multiple versions of the same software is proper repository tagging. The understanding that a repository can contain multiple tags and that multiple tags can reference the same image is at the core of a pragmatic tagging scheme.

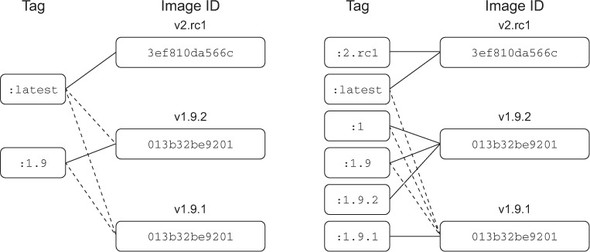

The docker tag command is unlike the other two commands that can be used to create tags. It’s the only one that’s applied to existing images. To understand how to use tags and how they impact the user adoption experience, consider the two tagging schemes for a repository shown in figure 7.7.

Figure 7.7. Two different tagging schemes (left and right) for the same repository with three images. Dotted lines represent old relationships between a tag and an image.

There are two problems with the tagging scheme on the left side of figure 7.7. First, it provides poor adoption flexibility. A user can choose to declare a dependency on 1.9 or latest. When a user adopts version 1.9 and that implementation is actually 1.9.1, they may develop dependencies on behavior defined by that build version. Without a way to explicitly depend on that build version, they will experience pain when 1.9 is updated to point to 1.9.2.

The best way to eliminate this problem is to define and tag versions at a level where users can depend on consistent contracts. This is not advocating a three-tiered versioning system. It means only that the smallest unit of the versioning system you use captures the smallest unit of contract iteration. By providing multiple tags at this level, you can let users decide how much version drift they want to accept.

Consider the right side of figure 7.7. A user who adopts version 1 will always use the highest minor and build version under that major version. Adopting 1.9 will always use the highest build version for that minor version. Adopters who need to carefully migrate between versions of their dependencies can do so with control and at times of their choosing.
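A scheme like the one on the right can be generated mechanically. The Python sketch below assumes a three-part major.minor.build version purely for illustration, since the advice here does not require three tiers. The version numbers and the function name floating_tags are made up.

```python
def version_key(version):
    """Turn '1.9.2' into (1, 9, 2) so versions compare numerically."""
    return tuple(int(part) for part in version.split("."))


def floating_tags(versions):
    """Map each tag (major, major.minor, full version) to the newest matching version."""
    tags = {}
    for version in sorted(versions, key=version_key):
        major, minor, _build = version.split(".")
        for tag in (major, f"{major}.{minor}", version):
            tags[tag] = version   # higher versions overwrite lower ones
    return tags


tags = floating_tags(["1.9.1", "1.8.4", "1.9.2"])

print(tags["1"])      # 1.9.2, highest build under major version 1
print(tags["1.9"])    # 1.9.2, highest build under 1.9
print(tags["1.9.1"])  # 1.9.1, an exact build that never drifts
```

Adopters who pin 1.9.1 get a fixed contract, while those who pin 1 or 1.9 accept drift within that scope.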

The second problem is related to the latest tag. On the left, latest currently points to an image that’s not otherwise tagged, and so an adopter has no way of knowing what version of the software that is. In this case, it’s referring to a release candidate for the next major version of the software. An unsuspecting user may adopt the latest tag with the impression that it’s referring to the latest build of an otherwise tagged version.

There are other problems with the latest tag. It’s adopted more frequently than it should be. This happens because it’s the default tag, and Docker has a young community. The impact is that responsible repository maintainers should always make sure that their repository’s latest tag refers to the latest stable build of their software instead of the true latest.
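Choosing which build latest should point to can be expressed as a small filter. This Python sketch assumes that unstable builds carry a hyphenated suffix such as -rc1, which is a naming convention picked for the example rather than anything Docker enforces. The version list is hypothetical.

```python
def latest_stable(versions):
    """Pick the highest version that has no pre-release suffix such as '-rc1'."""
    stable = [v for v in versions if "-" not in v]
    return max(stable, key=lambda v: tuple(int(part) for part in v.split(".")))


versions = ["1.9.1", "1.9.2", "2.0.0-rc1"]

# The release candidate is the true latest, but it is not what latest should reference.
print(latest_stable(versions))  # 1.9.2
```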

The last thing to keep in mind is that in the context of containers, you’re versioning not only your software but also a snapshot of all of your software’s packaged dependencies. For example, if you package software with a particular distribution of Linux, like Debian, then those additional packages become part of your image’s interface contract. Your users will build tooling around your images and in some cases may come to depend on the presence of a particular shell or script in your image. If you suddenly rebase your software on something like CentOS but leave your software otherwise unchanged, your users will experience pain.

In situations where the software dependencies change, or the software needs to be distributed on top of multiple bases, those dependencies should be included in your tagging scheme.

The Docker official repositories are ideal examples to follow. Consider this tag list for the official golang repository, where each row represents a distinct image:

The columns are neatly organized by their scope of version creep with build-level tags on the left and major versions to the right. Each build in this case has an additional base image component, which is annotated in the tag.

Users know that the latest version is actually version 1.4.2. If an adopter needs the latest image built on the debian:wheezy platform, they can use the wheezy tag. Those who need a 1.4 image with ONBUILD triggers can adopt 1.4-onbuild. This scheme puts the control and responsibility for upgrades in the hands of your adopters.

This is the first chapter to cover the creation of Docker images, tag management, and other distribution concerns such as image size. Learning this material will help you build images and become a better consumer of images. The following are the key points in the chapter:

- New images are created when changes to a container are committed using the docker commit command.

- When a container is committed, the configuration it was started with will be encoded into the configuration for the resulting image.

- An image is a stack of layers that’s identified by its top layer.

- An image’s size on disk is the sum of the sizes of its component layers.

- Images can be exported to and imported from a flat tarball representation using the docker export and docker import commands.

- The docker tag command can be used to assign several tags to a single repository.

- Repository maintainers should keep pragmatic tags to ease user adoption and migration control.

- Tag your latest stable build with the latest tag.

- Provide fine-grained and overlapping tags so that adopters have control of the scope of their dependency version creep.