14 Different database types and EF Core services

This chapter covers

- Looking at different database server types

- Using the CQRS architecture with EF Core

- Understanding how the

SaveChangesmethod sets keys - Using EF Core’s internal services

- Accessing EF Core’s command-line services

This chapter starts with the differences you might encounter in the range of relational databases that EF Core supports. To bring this to life, you’ll convert our book app from using SQL Server to the MySQL database to see what changes. I make that application available in the Git repo branch Chapter14MySql.

You’ll then look at the Command Query Responsibility Segregation (CQRS) architecture discussed at the end of the preceding chapter (see section 13.6). We’ll spend quite a bit of time on this, as it’s a useful architecture and its implementation highlights advanced features inside EF Core. You’ll also add a NoSQL database to the mix to end up with a high-performance version of our original book-selling site application. It might not challenge Amazon, but it’s still pretty fast for a single-instance ASP.NET Core application. This application is available on the book’s Git repo branch Chapter14 as well as on a live site at http://cqrsravendb.efcoreinaction.com/.

The end of this chapter goes deeper into EF Core and looks at its internal services and what you can do with them. The EF Core team has designed these services to allow you to alter the way EF Core works inside. This is advanced stuff, but it’s worth knowing about in case your project could benefit from it.

14.1 What differences do other database server types bring?

In most of this book, you’ve used an SQL Server database, but what happens if you want to use a different type of database server? EF Core has multiple database providers that access a range of database servers, and that list will grow over time. So, the question is, does anything change with different database types and providers?

You’ll tackle this wide-ranging question with a worked example: you’ll convert our book app from using an SQL Server database to a MySQL database. Typically, you don’t change databases of an application; you just have to get your application working with the database of your choice. But it’s instructive to see what changes when you swap database types, because it gives you an idea of the sorts of issues you may encounter when using different database types.

To make the example a bit harder, you’ll convert the performance-tuned version of the book app (see section 13.2), which has an SQL UDF in it. That brings in the complication of raw SQL code that you add to the database outside EF Core, and this does create some issues.

I’ve chosen a MySQL database because it’s a well-known database with a community version that’s available under the GPL license on many platforms. You can run the application locally by downloading a MySQL server; I give full details on how to do that next. Following that, this section covers the following topics:

- First steps: creating an instance of our application’s DbContext for a MySQL database

- What you have to do to convert the book app from SQL Server to MySQL

- A general look at other database server types and the differences they may bring

Note: I recommend this video showing the steps on how to download and install the community version of the MySQL database: www.youtube.com/watch?v=fwQyZz6cNGU.

I chose the custom installation, and selected the server and the MySQL Workbench. The Workbench is like Microsoft’s SQL Server Management Studio and allows you to inspect the databases and then delete them when you’ve finished.

After this, you can run the Chapter14MySql application via Visual Studio 2017 (press F5), or VS Code (Debug > Net Core launch (web)), or type dotnet run on a console terminal in the EfCoreInAction project directory.

I use the MySQL EF Core database provider Pomelo.EntityFrameworkCore.MySql in my application. There’s another MySQL database provider, MySql.Data.EntityFrameworkCore, but when I was building my application, that database provider didn’t support EF Core 2.0; it’s worth checking out, though.

14.1.1 Creating an instance of the application’s DbContext for MySQL

The first thing you need is to be able to create an instance of the application’s DbContext that accesses a MySQL database rather than an SQL Server database. Section 2.2.2 showed how to create an instance of the application’s DbContext with SQL Server, and this listing shows the same code, but with the changes needed to use a MySQL database shown in bold. In this case I show the connection string as a constant, but in the ASP.NET Core-based book app you would need to update the connection string in the appsetting.json/appsettings.Development.json file.

Listing 14.1 Creating an instance of the DbContext to access the database

const string connection =

"Server=localhost;"+ #1

"Database=EfCoreInActionDev;"+ #1

"Uid=<username>;Pwd=<password>;"; #1

var optionsBuilder = #2

new DbContextOptionsBuilder #2

<EfCoreContext>(); #2

optionsBuilder.UseMySql(connection); #3

var options = optionsBuilder.Options;

using (var context = new EfCoreContext(options))#4

{

var bookCount = context.Books.Count(); #5

//... etc.

As you can see, there aren’t a lot of changes—just the connection string and changing the UseSqlServer method to the UseMySql method. You have to install the EF Core MySQL database provider NuGet package Pomelo.EntityFrameworkCore.MySql to get access to the UseMySql method, and a MySQL database.

14.1.2 What you have to do to convert the SQL Server application to MySQL

Although the changes to create an instance of the application’s DbContext are small, other changes are necessary to make the application work. This section lists all the changes required to make the Chapter13-Part1 branch version of the book app, which used SQL Server, now work with MySQL. I’ve split these into significant changes and housekeeping changes.

Significant changes needed to convert from Chapter13-Part1 version to use MySQL

The significant changes required in the Chapter13-Part1 application are related to migrations and the raw SQL in the application, as detailed here:

- Rerun the

Add-Migrationcommand for the MySQL database provider.In chapters 2 and 4, you added database migrations to the book app application (see section 11.2). These migrations are built for an SQL Server database, not a MySQL database, so you must change them.

First, you update the class called

ContextFactoryNeededForMigrationsin the DataLayer to MySQL. You change the connection string to point to your local MySQL database and replace theUseSqlServermethod with theUseMySqlmethod. That requires you to add the MySQL database provider package to the DataLayer project. You need to do that because the command-line migration tools use this class to obtain an instance of the application’s DbContext.After deleting the old migration files, you run the

Add-Migration/dotnet ef migrations addcommand to build a new set of migration files using the MySQL database provider. - Change the raw SQL in places where the MySQL format is different from SQL Server.

In section 13.2, you added a UDF called

AuthorsStringUdfto your database to improve the performance of building the comma-delimited list of authors of a book. That UDF is written for an SQL Server database, and, although MySQL supports UDFs, the syntax of a UDF is different. You converted the AuthorsStringUdf UDF to the MySQL format successfully, but unfortunately, theCOALESCEstring-combining trick that you used doesn’t work on MySQL. You therefore have to remove the UDF and go back to the LINQ-based approach to combine the author names.This is a typical problem when you change database server types and have raw SQL commands. The EF Core’s database provider translates LINQ or EF Core commands into the correct format for the database type, but any raw SQL commands you write need checking to ensure they work on the new database type. But even with the EF Core–produced SQL, problems can arise, as the next point shows.

- Fix any type mapping between .NET and the database that has changed.

When you converted from an SQL server database to a MySQL database, the LINQ query that calculates the average review votes (see section 13.1.2) threw an exception. It turns out that the returned type of the SQL

AVGcommand on MySQL is a nullabledecimal, rather than the nullabledoublein SQL Server. To overcomes this, you need to change theBookListDto’sAverageReviewVotesproperty .NET type todecimal?to match the way MySQL works.Other, more subtle type differences exist between database servers. For instance, MySQL stores all strings in Unicode (16-bits), MySQL’s

DATETIMEdefault precision is slightly lower than SQL Server’sDATETIME2precision, and so on. One of my unit tests broke because of the difference in theDateTimeprecision, but everything else worked fine. In bigger applications, other problems could arise from these small changes.

The small, housekeeping changes needed to swap to MySQL database provider

You need to make minor changes to make the Chapter13-Part1 application work with the MySQL database provider. They’re trivial, but the application isn’t going to work without them.

The first change is to the DefaultConnection string in ASP.NET Core’s appsetting.json file. When running the application locally for development, the connection string must be in the correct format to access the local MySQL database (see listing 14.1). If you deploy the application to a web host, you need to provide the correct connection string during the publish process to access the hosted MySQL database (see section 5.4.1).

You also need to alter ASP.NET Core’s ConfigureServices method in the Startup class, where the application’s DbContext is registered as a service. You replace the UseSqlServer method with the UseMySql method. That requires you to add the MySQL database provider package to your ASP.NET Core project.

14.1.3 Looking at other database server types and differences

The two key issues when looking at a database to use with EF Core are as follows:

- Does the database have the features you need?

- Does EF Core have a database provider that properly supports that database?

Looking at the database features first, mostly minor differences exist in SQL syntax or features. The SQLite database has the biggest number of feature limitations (it does have the suffix lite), but most other database servers provide good coverage of all SQL features that EF Core uses.

Note

If you’re interested in SQLite, you can learn about the limitations of the SQLite database in chapter 15 (see table 15.2), which covers using SQLite in-memory databases for quicker unit testing.

Typical of a minor database difference is MySQL’s requirement that the EF Core’s concurrency timestamp (see section 8.7.2) must be of the .NET type DateTime, rather than the byte[] in SQL Server, whereas a PostgreSQL database uses a column called xmin (see http://mng.bz/5zB9). I’m sure that lots of subtle EF Core issues exist in various databases, because each database server works in a slightly different way.

Tip

Most of the Microsoft documentation, and mine, uses SQL Server as the primary example. Most other database providers publish documentation highlighting any differences from the standard EF Core setup. You can find links to this documentation via the EF Core’s database providers’ list (see https://docs.microsoft.com/en-us/ef/core/providers/).

The quality of the EF Core database provider and the level of support it provides is also another part of the equation. Writing a database provider for EF Core is a nontrivial task, and the SQL Server database provider written by the EF Core team is the gold standard. You should test any database provider to ensure that it works for you. When I started using the Pomelo.EntityFrameworkCore.MySql database provider, I found a problem, and when I raised an issue on the Pomelo Foundation EF Core GitHub issue page, I got a workaround in 24 hours—which I thought was a good result.

Note

Although talking about various databases is important for EF Core, I don’t cover running an EF Core application on different platforms, such as Linux, macOS, and so on. That topic is a .NET Core issue, and I recommend Dustin Metzgar’s .NET Core in Action (Manning, 2018), which covers this in detail.

14.1.4 Summarizing EF Core’s ability to work with multiple database types

Doing this database swap, plus a bit of work with PostgreSQL, shows me that EF Core and its database providers do an excellent job of handling various database types. The only problems I had during the conversion from SQL Server to MySQL were the differences in how each database server worked. EF Core can also produce database migrations specifically for each database type (see section 11.2.1, subsection “Migrations are database-provider specific”), which is another help for developers who don’t know the SQL language well.

14.2 Developing a CQRS architecture application with EF Core

Having talked about various databases, I now want to talk about a solution that combines a relational database handled by EF Core with a NoSQL database. This comes about from my suggestion in section 13.6 that a CQRS architecture using a polyglot database structure would provide better scalability performance.

Definition

A CQRS architecture segregates operations that read data from operations that update data, by using separate interfaces. This can maximize performance, scalability, and security, and supports the evolution of the system over time through higher flexibility. See http://mng.bz/Ix8D.

Definition

A polyglot database structureuses a combination of storage types; for instance, relational databases, NoSQL databases, and flat files. The idea is that each database type has its strengths and weaknesses, and by using two or more, you can obtain a better overall system. See http://mng.bz/6r1W.

The CQRS architecture acknowledges that the read side of an application is different from the write side. Reads are often complicated, drawing in data from multiple places, whereas the write side is often much simpler. You can see in the example application that listing the books is complex, but adding a review is fairly trivial. Separating the code for each part can help you focus on the specific features of each part; this is another application of the SoC software principle.

In chapter 13, you produced the performance version, in which you cached values (see section 13.4). It struck me then that the final query didn’t access any relationships and could be stored in a simpler database, such as a NoSQL database. In this example, you’ll use a polyglot database structure, with a mixture of SQL and NoSQL databases, for the following reasons:

- Using an SQL write-side database makes sense because business applications often use relational data. Think about a real book-selling site: it would have a lot of complex, linked data to handle business aspects such as suppliers, inventory, pricing, orders, payment, delivery, tracking, audits, and so on. I think a relational/SQL database with its superior level of data integrity is the right choice for many business problems.

- But those relationships and some aspects of an SQL database, such as the need to dynamically calculate some values, can make it slow at retrieving data. So, a NoSQL database with precalculated values such as the “average review votes” can improve performance considerably over an SQL database. This is what Mateusz Stasch calls “a legitimate cache” in his article at http://mng.bz/A7eC.

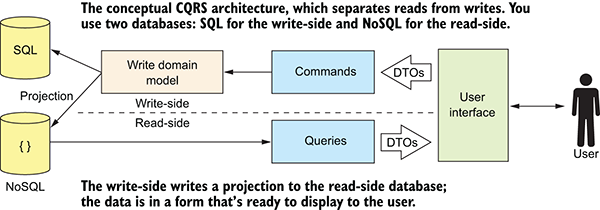

The result of these design inputs means you’ll develop what I refer to as a two-database CQRS architecture, as shown in figure 14.1.

Figure 14.1 A conceptual view of a CQRS architecture with an SQL database for the write side, and a NoSQL database for the read side. A write takes a bit more work because it writes to two databases—the normal SQL database and the new NoSQL read-side database. In this arrangement, the read-side database is writing in the exact format needed by the user, so reads are fast.

Using two databases is a logical step with the CQRS architecture. It brings potential performance gains for reads, but a performance cost on writes. This makes the two-database CQRS architecture appropriate when your business application has more reads of the data than writes. Many business applications have more reads than writes (e-commerce applications are a good example), so this architecture fits our book app well.

14.2.1 Implementation of a two-database CQRS architecture application

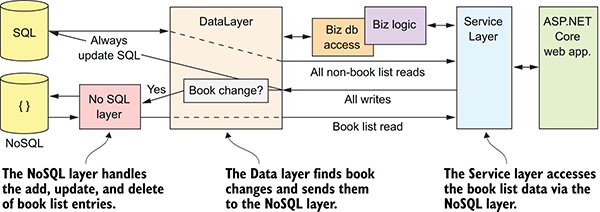

You want to move only the book list view data to the read-side database, and not do this for the order-processing part, because only the book list view has a performance issue. It turns out that although adding CQRS does require a fair amount of work, it’s simple to apply the CQRS architecture to only part of our application. Figure 14.2 shows the design of our changed book application, with the book list implemented as a two-database CQRS part.

Figure 14.2 shows different lines between the ServiceLayer and the DataLayer to illustrate the different routes that data takes through the system, but the lines are notional. The ServiceLayer and BusinessLayer continue to work in the same way, and it’s the DataLayer’s job to split out any writes that will change the book list view. You do this by overriding the SaveChanges method(s) inside the application’s DbContext and adding code to work out whether the book list view has changed. If this new code detects a book list view change, it sends a request to the new NoSQL layer to update the NoSQL database.

Figure 14.2 To implement the CQRS architecture for the book list, you inspect every write to see whether it’ll change the book list data. That’s best done by the DataLayer, where you can use the EF Core change tracker to see what’s being added, updated, or deleted. If it’ll change the book list data, you ask the NoSQL layer to update the database.

The other part to change is the BookListService class in the ServiceLayer. This class handles the book list, and you change it to access the NoSQL database instead of the SQL database. I selected the RavenDB NoSQL database, which has a community version of its database server that you can run locally. There’s also a .NET package that supports LINQ commands, so the LINQ built for EF Core works directly with RavenDB.

I don’t cover the RavenDB database access code because it’s outside the scope of this book. Visit https://ravendb.net/ for documentation, or the GitHub documentation site at https://github.com/ravendb/docs/, which includes sample code.

Note

Thanks to Oren Eini (Twitter @ayende) for his help with using the RavenDB database. Oren is the main force behind the RavenDB NoSQL database and contacted me after one of my articles. He provided support and guidance that were helpful.

Note: If you aren’t developing on a Windows platform, you can use a hosted RavenDB database instead. I went to www.ravenhq.com and found a package called Experimental, which was free. You can create a database on there and use that in the CQRS application. You need to copy the connection string into the EfCoreInAction appsetting.json file. There’s also a Docker container version of RavenDB; see http://mng.bz/CaE4.

Note

You can see a live version of the two-database CQRS book app at http://cqrsravendb.efcoreinaction.com/. This site has 250,000 books in its database. This site uses a hosted RavenDB database courtesy of www.ravenhq.com (thanks to Jonathan Matheus at RavenHQ for organizing that). The RavenDB hosting I’m using is the simplest/cheapest, so the performance of the live site won’t match the performance figures given in this chapter.

In addition to being a high-performance combination, the implementation of this architecture reveals advanced aspects of the way EF Core works. The following are the points covered in the next few subsections:

- How the parts of this CQRS solution interact with each other.

- Finding the book view changes—part 1, finding the correct State and primary key.

- Finding the book view changes—part 2, building the correct

State. - Why the CQRS solution is less likely to have out-of-date cached values.

14.2.2 How the parts of the CQRS solution interact with each other

When updating an existing application for performance reasons, you need to be careful not to break the application in the process. The book-selling site isn’t that complicated, but you still need to be careful when you modify the application over to a CQRS architecture. You therefore want a design that minimizes the changes and isolates the new parts.

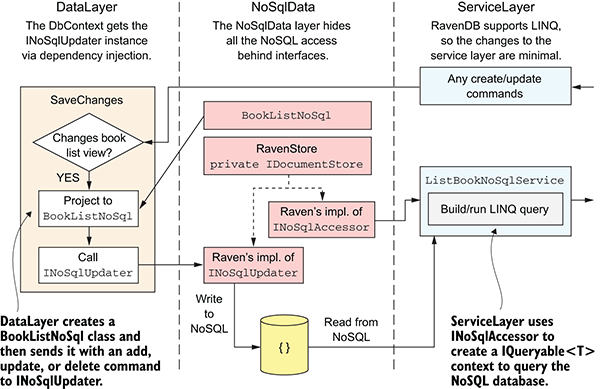

I came up with a design that keeps all the NoSQL/RavenDB parts separate. In this final design, the EF Core doesn’t know, or care, what database is being used for the read-side part of the CQRS system. This makes the update simpler, plus offers the possibility of changing the NoSQL database used. I like RavenDB, with its support of LINQ, but EF Core version 2.1 previews Azure’s NoSQL database, Cosmos, which might be an interesting alternative.

Keeping as much of the new database code in the NoSqlDataLayer, and using interfaces, keeps the impact of the changes to a minimum. Figure 14.3 shows how to hide the NoSQL code behind interfaces to keep that code isolated. You use dependency injection to provide both the DataLayer and the ServiceLayer with methods that allow access to the database.

Figure 14.3 Internals of the NoSqlDataLayer are hidden from the DataLayer and ServiceLayer. The DataLayer and ServiceLayer work with the BookListNoSql class, which maps to the book list view, and several interfaces. The aim is to make it easy to add the CQRS read-side database with minimal impact on the existing application. It also allows you to change the read-side database server with minimal refactoring to the code outside the NoSqlDataLayer.

The changes from the existing, SQL-based book app are as follows:

- New code is added to the DataLayer by overriding the

SaveChangesmethods. This detects when a change to the database means a certain book list view needs to be updated. - The whole of the NoSqlData project is new. It contains all the RavenDB code.

- Minor changes are made to

ListBookServiceto use RavenDB.

The core of this NoSQL implementation is a class I call RavenStore (see listing 14.2). RavenDB requires a single instance of the RavenDB’s IDocumentStore, which is set up on the application’s start. This RavenStore class provides two methods: one for the DataLayer to get a class for writing to the read-side database, and one for the ServiceLayer to get a class to allow reading of the read-side database.

Listing 14.2 RavenStore, with methods to create, read, and write accessors

public class RavenStore : #1

INoSqlCreators #2

{

public const string RavenEventIdStart #3

= "EfCoreInAction.NoSql.RavenDb"; #3

private readonly DocumentStore _store;

private readonly ILogger _logger;

public RavenStore(string connectionString, #4

ILogger logger) #4

{

if (string.IsNullOrEmpty(connectionString))#5

return; #5

_logger = logger;

var store = new DocumentStore(); #6

store.ParseConnectionString(connectionString);#6

store.Initialize(); #6

//Add indexes if not already present

new BookById().Execute(store); #7

new BookByActualPrice().Execute(store);#7

new BookByVotes().Execute(store); #7

_store = store; #8

}

public INoSqlUpdater CreateNoSqlUpdater() #9

{ #9

return new RavenUpdater(_store, _logger);#9

} #9

public INoSqlAccessor CreateNoSqlAccessor() #10

{ #10

return new RavenBookAccesser(_store, _logger);#10

} #10

}

The INoSqlCreators interface is used by the DataLayer to get the method to update the read-side database, and by the ServiceLayer to gain access to the read-side for querying. You need to register a single RavenStore instance with ASP.NET Core’s dependency injection service as the service to be accessed via the INoSqlCreators interface. The following listing shows the section of code in the ConfigureServices method in the Startup class that registers the RavenStore as a singleton, which provides the service INoSqlCreators.

Listing 14.3 Registering the two interfaces to the RavenDB implementation

var ravenDbConnection = #1

Configuration.GetConnectionString #1

("RavenDbConnection"); #1

services.AddSingleton<INoSqlCreators>(ctr => #2

{

var logger = ctr.GetService<ILogger<RavenStore>>();#3

return new RavenStore(ravenDbConnection, logger); #3

});

The listing shows part of the Raven implementation of the INoSqlUpdater interface, which the DataLayer would use to update the read-side database. This gives you some idea of how this works.

Listing 14.4 The RavenUpdater class that handles the read-side database updates

public class RavenUpdater : INoSqlUpdater #1

{

private readonly DocumentStore _store; #2

private readonly ILogger _logger; #3

public RavenUpdater(DocumentStore store, #4

ILogger logger) #4

{ #4

_store = store; #4

_logger = logger; #4

} #4

public void DeleteBook(int bookId) #5

{

using(new LogRavenCommand #6

($"Delete: bookId {bookId}", _logger)) #6

using (var session = _store.OpenSession()) #7

{

session.Delete( #8

BookListNoSql #9

.ConvertIdToNoSqlId(bookId)); #9

}

}

public void CreateNewBook(BookListNoSql book) #10

{

using (new LogRavenCommand #6

($"Create: bookId {book.GetIdAsInt()}", #6

_logger)) #6

using (var bulkInsert = _store.BulkInsert())#11

{ #11

bulkInsert.Store(book); #11

} #11

}

//The UpdateBook and BulkLoad methods are left out to save space

Now that you’ve seen the code that’ll update the read-side database, the other major part of the CQRS implementation is in how the DataLayer detects changes to the SQL database, which will alter the NoSQL book list view. I describe this next.

14.2.3 Finding book view changes—Part 1, finding the correct state and key

As explained in section 14.2.2, you override the SaveChange methods (sync and async) in the application’s DbContext and add code to find changes that will affect the book list view. This turns out to be quite complex; I solved it only by understanding how EF Core works underneath. I think this learning is useful outside the CQRS situation, so in this section I explain how EF Core handles the setting of the foreign keys by looking at the navigational properties.

For this example, you’ll add a new Book entity instance, with one new Review entity instance attached to it via the Book’s Reviews navigational property. This is the simplest example that shows all the stages that EF Core goes through. Table 14.1 shows the value of the State of the Book entity in the Book’s State column after the code in column 1 has run. The other two columns, Book’s BookId and Review’s BookId, show the value of BookId property of the Book entity, and BookId of the Review entity, respectively, after the code in column 1 has run.

Now, you might be wondering about the large negative value that appears after stage 2, the Add stage in table 14.1. What has happened here is that the Add method has looked at the Book entity’s navigational properties to see whether there are any changes in its relationships. EF Core finds that a new Review entity is assigned to the Book entity, so it wants to set the foreign key. In this case, the Book entity hasn’t yet been written to the database, so it uses a negative key to represent that relationship. The negative key is unique within the current tracked entities and tells the SaveChanges method which new entities are linked.

In stage 3, in which the SaveChanges method is called, these negative keys link the Book entity and the Review entity. This causes EF Core to output SQL code that first INSERTs the Book entity into the Books table, returning its primary key as normal, followed by an INSERT of the Review entity, including a BookId value taken from the Book entity.

Table 14.1 How EF Core tracks relationships when adding new entities to the database. EF Core’s Add method uses negative key values to define the relationships. These negative keys are replaced with the real key value after the entities have been written to the database.

Table 14.1 How EF Core tracks relationships when adding new entities to the database. EF Core’s Add method uses negative key values to define the relationships. These negative keys are replaced with the real key value after the entities have been written to the database.

| The three stages in the code | Book’s State | Book’s BookId | Review’s BookId |

1. Create instances |

Detached |

0 | 0 |

2. Add stagecontext.Add(book); |

Added |

–2147482643 | –2147482643 |

3. SaveChanges stagecontext.SaveChanges(); |

Unchanged |

1 | 1 |

The problem is, if you wait until after the call to the SaveChanges method to get the correct key values, the State of the entities will have been cleared. You need a two-stage process, as shown in this listing. In the first part of the process, you capture the State and the relationships; and in the second part, you capture the primary key of any Book entities.

Listing 14.5 The code inside one of the overridden SaveChanges

public override int SaveChanges() #1

{

var detectedChanges = BookChangeInfo #2

.FindBookChanges(ChangeTracker.Entries());#2

var result = base.SaveChanges(); #3

var booksChanged = BookChange #4

.FindChangedBooks(detectedChanges); #4

var updater = new ApplyChangeToNoSql #5

(this, _updater); #5

updater.UpdateNoSql(booksChanged); #6

return result;

}

Now, let’s look inside the BookChangeInfo class and the FindChangedBooks method, as it’s interesting to see the steps required to get the State and the BookId in the correct form.

14.2.4 Finding the book view changes—Part 2, building the correct State

The preceding section showed you how the State property was correct before the call to the SaveChanges method, but the BookId wouldn’t be correct for a new Book until after that method call. Obviously, you need to do something before and after the SaveChanges method call. This section shows those steps.

Listing 14.5 showed the overridden SaveChanges method, with the extra code before and after the call to the base SaveChanges method. Now you’ll look at what’s happening before and after the base SaveChanges method call.

Before the base SaveChanges method call—get the State and relationships

Any change to a relationship in the Book entity class could affect the book list view. You therefore mark the Book entity and all its relationship entities with an interface, as shown in this code snippet:

public interface IBookId

{

int BookId { get; }

}

You apply the IBookId interface Book entity, any entity class that has a foreign-key relationship with the Book entity (the Review, PriceOffer, and BookAuthor entities). This allows you to detect when a command changes any of these entities, which in turn will affect the book list view. After you find any change, you decode that change into a series of BookChangeInfo instances. The BookChangeInfo class holds the State before the SaveChanges method is called, and the BookId that refers to the Book entity it changes. This may be a negative value, as shown in table 14.1, or the real BookId for an update, but either way you can use it to find all the entities that are linked to a single Book entity.

Listing 14.6 shows how the BookChangeInfo class works out the correct State for the book list view. Working out the right State from the Book entity’s perspective is complex—for instance, a new Book entity should set the State to Added—but a new Review should only set the State to Modified, because the new Review only modifies the book list view.

Listing 14.6 The BookChangeInfo class and how it decides on the correct State

internal class BookChangeInfo #1

{

private readonly Book _book; #2

public int BookId { get; } #3

public EntityState State { get; } #4

public int FinalBookId => _book?.BookId ?? BookId; #5

private BookChangeInfo(int bookId, #6

EntityEntry entity) #6

{

BookId = bookId;

_book = entity.Entity as Book; #7

if (_book != null) #8

{

var softDeletedProp = entity.Property( #9

nameof(_book.SoftDeleted)); #9

if (softDeletedProp.IsModified) #10

{ #10

State = _book.SoftDeleted #10

? EntityState.Deleted #10

: EntityState.Added; #10

}

else if (entity.State == #11

EntityState.Deleted) #11

{ #11

State = _book.SoftDeleted #11

? EntityState.Unchanged #11

: EntityState.Deleted; #11

}

else

{

State = _book.SoftDeleted #12

? EntityState.Unchanged #12

: entity.State; #12

}

}

else

{

State = EntityState.Modified; #13

}

}

That might seem like a lot of work to decide on the final State, but because you’re using the SoftDeleted property to hide a Book entity (see section 3.5.1), you need to honor that in the NoSQL database. If a Book entity’s SoftDeleted property is set to true, you must delete it from book list NoSQL database. Listing 14.6 must correctly handle all the combinations to ensure that it doesn’t try to delete an already soft-deleted book from the NoSQL database.

After the base SaveChanges method call—build a list of books that need updating

Now, let’s look at how to use this information after the SaveChanges method has been called. You take the BookChangeInfo information, which may include multiple updates to the same Book entity, and coalesce them down to a one-change-per-book list. The trick is to make sure the type of change is correct for the read-side database. This listing shows the BookChange class, with its static method that produces the final update information.

Listing 14.7 The BookChange class with its static FindChangedBooks method

internal class BookChange #1

{

public int BookId { get; } #2

public EntityState State { get; } #3

private BookChange(int bookId, #4

EntityState state) #4

{ #4

BookId = bookId; #4

State = state; #4

} #4

public static IImmutableList<BookChange> #5

FindChangedBooks(IImmutableList<BookChangeInfo> #5

changes) #5

{

var booksDict = new #6

Dictionary<int, BookChangeInfo>(); #6

foreach (var bookChange in #7

changes.Where( #7

x => x.State != EntityState.Unchanged)) #7

{

if (booksDict.ContainsKey(bookChange.BookId) #8

&& booksDict[bookChange.BookId].State #8

!= EntityState.Modified) #8

continue; #8

booksDict[bookChange.BookId] = bookChange; #9

}

return booksDict.Select(x => new #10

BookChange(x.Value.FinalBookId, x.Value.State)) #10

.ToImmutableList(); #10

}

}

The result of this is a list of BookChange classes, which conveys the BookId of the book list view to change, and the State it should be changed to. You make this class as small as possible, because in a real system, you might want to save the information in the database, or send it to a background job to process. That would allow you to improve on the performance of the write, but more important, to provide retry and error-checking facilities in case the NoSQL database access fails.

14.2.5 Why the CQRS solution is less likely to have out-of-date cached values

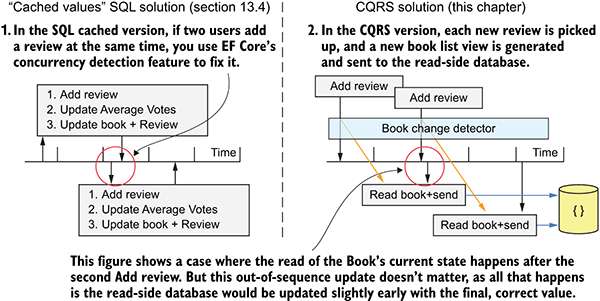

When you create any system in which you cache values, the key issue is to make sure that the cached values stay in step with the calculated values. Applications that handle lots of simultaneous updates can produce situations in which a cached value gets out of step. This is one form of a concurrency issue (see section 8.7).

In section 13.4, you built a version of our application that stored various values, such as the average book’s review votes (what I refer to as cached-values SQL from now on). In that version, you use EF Core’s concurrency detection to find and fix a possible concurrency issue around simultaneous Reviews being added to a Book. That works, but you need to correctly identify that this is a potential problem and then write code to handle it. But it’s better if the design avoids potential concurrency issues, as you did with the ActualPrice in the cached-values SQL solution (section 13.4.1). The CQRS solution does that, by removing any concurrency issues right from the start.

Figure 14.4 On the left, the cached-values SQL performance-tuning implementation developed in section 13.4 fixes the problem of two simultaneous reviews being added, by using EF Core’s concurrent detection and handling feature. On the right, the CQRS architecture handles the same problem by design; it doesn’t need any special handling to cope with this problem.

Figure 14.4 shows the difference in how the cached-values SQL solution (section 13.4) and the CQRS solution handle the “two simultaneous reviews” problem. Each makes sure that the calculated values are up-to-date, but I believe the CQRS approach is much better because it designs around the problem instead of having special code to handle the problem.

Unlike the cached-values SQL solution, in which you had to consider each cached value separately and devise a different solution for each, the CQRS design handles all potential problems in one go; it effectively designs them out. In addition, the CQRS architecture helps with the overall design of the system, which is why I think CQRS architecture is worthy of consideration for systems that have more reads of data than writes.

14.2.6 Is the two-database CQRS architecture worth the effort?

Implementing this two-database CQRS architecture isn’t simple and took me over a week to develop, which is long for me. Admittedly, the main part is learning a new database approach, but there are also some complex EF Core parts to write to. So, is it worth the effort? I’ll answer that question in terms of three distinct aspects:

- Is the improvement in read-side performance worth the extra effort to convert the application?

- Is the drop in the performance of any book-related database write acceptable to gain the extra read-side performance?

- Does the extra effort, complexity, and robustness warrant the read-side performance that the CQRS architecture brings?

The differences in read-side performance between the non-CQRS and CQRS solutions

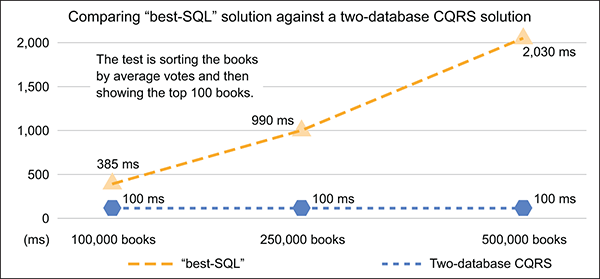

First, let’s compare the performance of the CQRS solution against the “best-SQL” solution—the part 2 version (see section 13.3) in which SQL had to calculate the average vote every time the book list was displayed. Figure 14.5 shows the performance of the CQRS solution against the part 2 version for the following database content:

- 100,000 books, which have ½ million book reviews

- 250,000 books, which have 1.4 million book reviews

- 500,000 books, which have 2.7 million book reviews

Figure 14.5 The time it takes to sort all the books by the average review votes and then show the 100 top books. The graph compares the “best-SQL” solution (see section 13.3) of the book app against the two-database CQRS solution.

Clearly, the performance of this two-database CQRS solution is much better than the “best-SQL” solution from section 13.3. No user wants to wait two seconds for the books to be sorted. The SQL version is slow because it must dynamically calculate the average votes every time. The CQRS solution, in which the book list view contains a precalculated average votes value with an index, is obviously much faster.

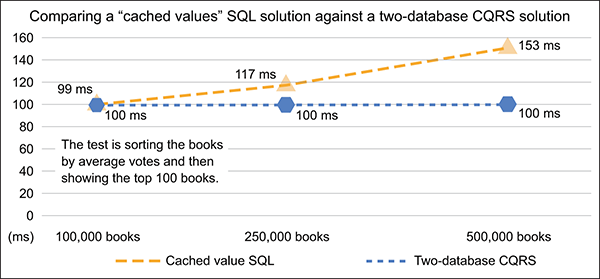

But to provide a fair comparison, you need to compare the CQRS solution against the part 3 solution (see section 13.4), in which you add cached values to your SQL database (the cached-values SQL solution). In this case, the difference is much smaller; see figure 14.6.

Figure 14.6 shows that the cached value with its index is the main reason that the CQRS solution is quicker. When using a CQRS solution to improve performance, the primary goal must be to store the exact data that the user wants to see or sort and/or filter on what I call a precalculated view. If you’re not going to build a precalculated view, but just access the same database as before, you won’t gain much in terms of performance. The precalculated view is the main performance multiplier.

Figure 14.6 The time it takes to sort all the books by the average review votes and then show the 100 top books. This graph compares the “cached values SQL” version (see section 13.4) of the book app against the two-database CQRS solution.

Looking beyond the precalculated view issue, figure 14.6 also shows that the RavenDB database, with its simpler database structure, has better performance as the number of books in the database increases. This brings us to another side of performance: scalability.

Scalability determines how many simultaneous users an application can handle while still providing good performance (see chapter 12, especially section 12.7). Because NoSQL databases such as RavenDB are dealing with a single entry containing all the information, a read or write is simpler than in the SQL case. In general, this makes NoSQL databases easier to duplicate (to have multiple databases all containing the same data).

The effect of having multiple databases on scalability can be significant. Not only can you spread database access across multiple databases, but you can locate databases geographically around the world to provide shorter access times.

To summarize on performance:

- The CQRS architecture solution provides better performance than a noncached SQL version, when its read-side database holds a precalculated view of the book list. This precalculated view makes the handling of sorting, filtering, and viewing a book much faster.

- Using a NoSQL database, which has a simpler, single-entry view, for the read-side database does have performance benefits, especially around scalability, over an SQL database.

Is the drop in the performance of any book-related database write acceptable?

I said previously that a two-database CQRS architecture is going to be slower on writes, because it must write to two databases. I’ve measured this in my solution and there’s an effect, but it’s pretty small. Table 14.2 shows one common case, which is a user adding a review to a book, and the difference in write time.

Table 14.2 The difference in the time taken for the ASP.NET action to return after adding a new review to a book

| Solution type | Total time | Notes |

| Cached-values SQL | 13 ms | Simple addition of Review entity to the database and a recalculation of the cached values. |

| Two-database CQRS | 35 ms | The extra time is mainly around writing to the NoSQL database. I measured the RavenDB update as taking 25 ms, which is quite long compared to an SQL write. |

In my mind, a function that takes less than 50 ms to return to the user isn’t worth performance tuning. But in applications with more-complex updates, this time might get too long. But there are plenty of ways to handle this; for instance, you could pass the update a background task to be executed so that the application returns immediately to the user. The only downside of that approach is the user may be shown out-of-date data, which could be confusing. These are the design trade-offs you must think through.

The differences in software implementation between non-CQRS and CQRS solutions

This section compares the cached-values SQL solution in section 13.4 and the two-database CQRS solution in this chapter. Both solutions take extra work to build, and make the software structure more complex. Table 14.3 compares the development effort, complexity, and robustness of the two designs.

Table 14.3 Comparing the cached-values SQL solution in section 13.4 and the two-database CQRS solution against three software criteria

| Solution type | Effort | Complexity | Robustness |

| Cached-values SQL | ~ 3 days | Same | Good |

| Two-database CQRS | ~ 8 days | Same | Very good |

In terms of development effort, the CQRS implementation takes longer to create than the cached-values SQL solution. Part of that is learning about RavenDB’s philosophy and software package, and part of it is coming up with a clever design to separate the NoSQL code from the rest of the application. But I think this is still within a sensible development cost, considering you’re performance-tuning an existing, SQL-only, EF Core application (but some managers may not agree with me!).

The CQRS design is more complex than the original EF Core design in chapter 5, but I accept some additional complexity whenever I apply performance tuning. But the cached-values SQL solution also added complexity, just in other places. Their complexity is different: the CQRS design has complex interfaces to hide things, the cached-values SQL solution has complex concurrency handling code. Overall, I think the CQRS design and the cached-values SQL solution are comparable in the extra complexity they add to the book app.

The big, positive difference is in the robustness of the CQRS design. I rate it above the cached-values SQL solution because it designs out the concurrency issues that the cached-values SQL solution has. You don’t need any explicit code to handle concurrency in my CQRS solution. That’s a big plus.

To summarize the differences in implementation:

- The CQRS is superior to the cached-values SQL solution (see section 13.4) because the design doesn’t need special handling of concurrency issues.

- Changing the book app over to a CQRS architecture adds complexity to the code, but no more than the cached-values SQL solution does.

- The CQRS implementation takes longer (about eight days) to develop than the cached-values SQL solution (about three days). But, in my opinion, it’s worth the extra effort.

14.3 Accessing and changing EF Core services

Time-saver

This section discusses advanced features within EF Core that aren’t useful in everyday use of EF Core. If you’re new to EF Core, you might want to skip this section for now.

Now let’s look at a completely different area of EF Core: its internal services. EF Core is built in a modular way, with most of the key parts of its code linked by dependency injection (see section 5.3). The EF Core team has made these services available for developers to use for their own uses; you can override some of the services if you want to change the way EF Core works.

Using or overriding the EF Core services is an advanced feature, but it can be useful when you need to customize EF Core behavior, or to save you from writing code that EF Core has already implemented. This section covers the following:

- How and why you might use an EF Core’s service in your own code

- How and why you might override one of EF Core’s internal services

14.3.1 Accessing an EF Core service to help in your own application

EF Core has more than 50 services that you could gain access to. Most aren’t that useful, but a few might help with a project you’re working on. One part of EF Core I’m interested in is its mapping of entity classes to SQL tables. In previous applications I wrote with EF6.x, I had to have a table of how EF mapped .NET types to database types. With EF Core, you can tap into its relational mapping service and obtain that information directly from the EF Core code.

To do this, you need to access the database provider mapping service via the IRelationalTypeMapper interface. This service provides methods that can map a .NET type, with any EF Core configurations or attributes, to an SQL type, and from an SQL type to a .NET type. Listing 14.8 shows how to obtain an instance of the SQL-type-to-.NET-type mapper that EF Core uses for an SQL Server database. In this case, you give it the SQL type, and it tells you the information about the .NET type, including information you’d need to configure EF Core to match that SQL Server type.

Listing 14.8 Determining how EF Core would map an SQL type to a .NET type

//… other setup code left out

optionsBuilder.UseSqlServer(connection); #1

using (var context = new EfCoreContext(optionsBuilder.Options)) #2

{

var service = context.GetService<IRelationalTypeMapper>(); #3

var netTypeInfo = service.FindMapping("varchar(20)"); #4

netTypeInfo.ClrType.ShouldEqual(typeof(string));#5

netTypeInfo.IsUnicode.ShouldBeFalse(); #6

netTypeInfo.Size.ShouldEqual(20); #6

}

There are other services, but many are even more specific to EF Core and therefore not that useful outside EF Core itself. But the next section shows how you can replace an EF Core service with your own custom variant, which opens interesting possibilities.

Tip

If you want to see all the services that EF Core makes available, there isn’t a simple method to call. But if you write the code var service = context.GetService<IServiceScopeFactory>(); and use the debugger to look at the nonpublic members, you can see the list of all services.

14.3.2 Replacing an EF Core service with your own modified service

Wouldn’t it be great if you could change how the internals of EF Core work? For instance, you could modify the IModelValidator service to check that the database table names adhere to your specific project rules. Or you could apply a new property-naming convention to set the correct SQL varchar/nvarchar type by overriding the IRelationalTypeMapper service.

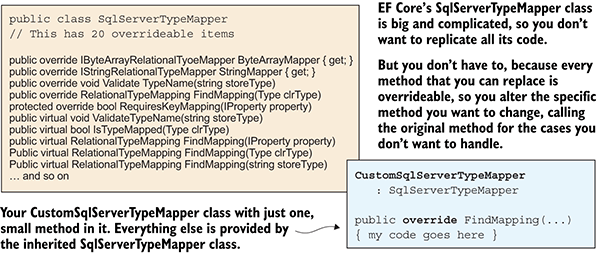

Even if you could replace them, some of these services are complicated; for instance, the RelationalModelValidator class has 11 methods. So it would be a nightmare if you had to re-create all that code, and you might have to change your code when a new EF Core version comes out. Thankfully the EF Core team has thought about developers wanting to alter or extend EF Core internal services.

The EF Core development team has built all the EF Core internal services with overridable methods. You can inherit the appropriate class and then just override the specific method you want to change, with the option of calling the base method if you need to. This makes it much easier to build a customer service, although you still need to understand what you’re doing.

For this example, you’re going to override part of the EF Core SqlServerTypeMapper class, which has 20 parts that can be overridden. Writing all those parts would be an impossible job, but you can override just the one you want to change and leave the rest alone, as shown in figure 14.7.

You’re going to override the FindMapping(IProperty property) method to add your own By Convention rule to EF Core’s configuration stage. The new rule will allow you to configure the SQL storage of certain string properties as a non-Unicode (8-bit) string to save space (normally, string properties are held in 16-bit Unicode characters in SQL Server). The new rule is as follows: if a string property name ends with Ascii, it should be stored using SQL Server’s varchar type (8-bit chars) rather than the normal string mapping to SQL Server’s nvarchar type (16-bit chars).

Figure 14.7 A tiny change to one of EF Core’s key services can be achieved by inheriting the service you want to change and then overriding just the method that you want to change. You can even call the original method for the cases you don’t want to change.

The first step is to create a custom type mapper, which is shown in the following listing. You override the .NET-type-to-SQL-type mapping method, in which you add the new code.

Listing 14.9 The custom SQL Server type-mapping class

public class CustomSqlServerTypeMapper #1

: SqlServerTypeMapper #1

{

public CustomSqlServerTypeMapper( #2

RelationalTypeMapperDependencies dependencies)#2

: base(dependencies) {} #2

public override RelationalTypeMapping

FindMapping(IProperty property) #3

{

var currentMapping = base.FindMapping(property); #4

if (property.ClrType == typeof(string) #5

&& property.Name.EndsWith("Ascii")) #5

{

var size = currentMapping.Size == null #6

? "max" #6

: currentMapping.Size.ToString(); #6

return new StringTypeMapping(#7

$"varchar({size})", #7

DbType.AnsiString, true, #7

currentMapping.Size); #7

}

return currentMapping; #8

}

}

Note

The type mapper is different for every database provider, so you have to inherit from the correct one to match the database server you’re using. Inheriting from the wrong service base will cause serious problems.

The second step is to alter the configuration options sent to the application’s DbContext when you create a new instance. Listing 14.10 shows the alteration of the ASP.NET Core’s ConfigureServices method in the Startup class, which registers the application’s DbContext, plus its options, with ASP.NET Core’s dependency injection module. The new line of code is shown in bold.

Listing 14.10 Registering the custom type mapper to replace the normal mapper

var connection = Configuration

.GetConnectionString("DefaultConnection");

services.AddDbContext<EfCoreContext>( #1

options => options.UseSqlServer(connection, #1

b => b.MigrationsAssembly("DataLayer")) #1

.ReplaceService<IRelationalTypeMapper,#2

CustomSqlServerTypeMapper>() #2

);

Note

You must specify the interface for service as the first part of the generic ReplaceService<IService, TImplementation> method.

14.4 Accessing command-line tools from software

EF Core provides a series of command-line tools to allow you to migrate your database or reverse-engineer a database (see chapter 11 for more details). These are known as design-time services, because these services are normally run by typing a command into the PMC in Visual Studio or the command prompt on your system. But you can access them via software, which can be useful if you want to automate something or exploit a tool for your own use.

Warning

This code accesses internal parts of the EF Core system, which may change with no warning when a new release of EF Core comes out.

As an example, you’ll tap into the EF Core’s reverse-engineering tool design-time service and use it to get data that allows you to list the schema of a database referred to by a connection string.

14.4.1 How to access EF Core design-time services

To access EF Core design-time services, you need to re-create the setup that EF Core uses when you call commands such as Add-Migration or dotnet ef dbcontext scaffold. This is a bit complicated, and thanks to Erik Ejlskov Jensen (http://erikej.blogspot.co.uk/) for helping me with this.

Listing 14.11 shows the code to create the scaffolding (also known as reverse-engineering) service that’s used to produce the entity classes and application’s DbContext from an existing database (see section 11.3). For this to compile, you need to include the NuGet packages for the database providers that you want to access the design-time services; for instance, Microsoft.EntityFrameworkCore.SqlServer to access the SQL Server services.

Listing 14.11 Building and returning the scaffolder design-time service

public enum DatabaseProviders { SqlServer, MySql } #1

public class DesignTimeProvider

{

private readonly List<string> _errors #2

= new List<string>(); #2

private readonly List<string> _warnings #2

= new List<string>(); #2

public ImmutableList<string> Errors => #3

_errors.ToImmutableList(); #3

public ImmutableList<string> Warnings => #3

_warnings.ToImmutableList(); #3

public ServiceProvider GetScaffolderService #4

(DatabaseProviders databaseProvider, #4

bool addPrualizer = true) #4

{

var reporter = new OperationReporter( #5

new OperationReportHandler( #5

m => _errors.Add(m), #5

m => _warnings.Add(m))); #5

#5

// Add base services for scaffolding #5

var serviceCollection = #5

new ServiceCollection() #5

.AddScaffolding(reporter) #5

.AddSingleton<IOperationReporter, #5

OperationReporter>() #5

.AddSingleton<IOperationReportHandler, #5

OperationReportHandler>(); #5

if (addPrualizer) #6

serviceCollection.AddSingleton #6

<IPluralizer, ScaffoldPuralizer>();#6

switch (databaseProvider) #7

{

case DatabaseProviders.SqlServer:

{

var designProvider = #8

new SqlServerDesignTimeServices();#8

designProvider. #9

ConfigureDesignTimeServices( #9

serviceCollection); #9

return serviceCollection #9

.BuildServiceProvider(); #9

}

case DatabaseProviders.MySql:

{

var designProvider = #10

new MySqlDesignTimeServices(); #10

designProvider. #9

ConfigureDesignTimeServices( #9

serviceCollection); #9

return serviceCollection #9

.BuildServiceProvider(); #9

}

default:

throw new ArgumentOutOfRangeException(

nameof(databaseProvider),

databaseProvider, null);

}

}

}

You can use other design-time services, such as the migration tools, but those services will need a different setup. The best way to find out what’s required is to look at the EF Core source at https://github.com/aspnet/EntityFrameworkCore.

14.4.2 How to use design-time services to build the EfSchemaCompare tool

Section 11.4.2 introduced the EfSchemaCompare tool I created to help with database migrations. This uses the design-time scaffolding service to read in the schema of the database you want to inspect. Using the scaffolding service replaces a large amount of ADO.NET code I had to write when I built the EF6.x version of the EfSchemaCompare tool. And because the scaffolding service is provided by the database provider, my new EfSchemaCompare tool can work with any relational database that EF Core supports.

This listing shows how to use one of the available scaffolding services to get information on the schema of the database.

Listing 14.12 Using the design-time service in your code to read a database’s schema

var connectionString = "Server=... shorten to fit"; #1

var builder = new DesignTimeBuilder(); #2

var serviceProvider = builder #3

.GetScaffolderService( #3

DatabaseProviders.SqlServer);#4

var service = serviceProvider #5

.GetService<IDatabaseModelFactory>(); #5

var model = service.Create(connectionString, #6

new string[] { }, new string[] { }); #7

var tables = model.Tables; #8

From this code, EfSchemaCompare can use the data to compare with EF Core’s Model property, which contains a model of what the database should look like based on the entity classes and EF Core configuration.

Using this design-time service provides three things to help build the EfSchemaCompare tool:

- It removes the need to write a lot of complicated ADO.NET code to read in a database’s schema and convert it to a useful form.

- It provides a solution that would work with any relational database supported by EF Core.

- If new features appear in EF Core, it’s more likely that the design-time service will upgrade too, thus reducing the amount of refactoring required to support that new feature.

Summary

- The main differences between each database type are the EF Core database provider, the

UseXXXmethod (for instanceUseMySql), and the connection string. - Features and syntax differ slightly among the various database types. You need to read the documentation relevant to the database type and its EF Core provider.

- The CQRS architecture with different read-side and write-side databases can improve performance, especially if you use a NoSQL database as the read-side database.

- When tracking changes to an entity, the

Stateof an entity is correct before the call to theSaveChangesmethod, but the primary and foreign keys of a new entity will be correct only after the call to theSaveChangesmethod. - You can access EF Core internal services via the

context.GetService<T>method. - You can replace an EF Core internal service by using the

ReplaceService<IService, TImplemenation>method at the time that you configure the application’s DbContext. - You can access the EF Core design-line services, such as

Add-MigrationorScaffoldcommands, via software. This could save you time when developing a tool to work with EF Core.