This chapter covers

- Preparing your data for time-series analysis

- Visualizing data in your Jupyter notebook

- Using a neural network to generate forecasts

- Using DeepAR to forecast power consumption

Kiara works for a retail chain that has 48 locations around the country. She is an engineer, and every month her boss asks her how much energy they will consume in the next month. Kiara follows the procedure taught to her by the previous engineer in her role: she looks at how much energy they consumed in the same month last year, weights it by the number of locations they have gained or lost, and provides that number to her boss. Her boss sends this estimate to the facilities management teams to help plan their activities and then to Finance to forecast expenditure. The problem is that Kiara’s estimates are always wrong—sometimes by a lot.

As an engineer, she reckons there must be a better way to approach this problem. In this chapter, you’ll use SageMaker to help Kiara produce better estimates of her company’s upcoming power consumption.

This chapter covers different material than you’ve seen in earlier chapters. In previous chapters, you used supervised and unsupervised machine learning algorithms to make decisions. You learned how each algorithm works and then you applied the algorithm to the data. In this chapter, you’ll use a neural network to predict how much power Kiara’s company will use next month.

Neural networks are much more difficult to intuitively understand than the machine learning algorithms we've covered so far. Rather than attempt to give you a deep understanding of neural networks in this chapter, we'll focus on how to explain the output from a neural network. Instead of a theoretical discussion of neural networks, you'll come out of this chapter knowing how to use a neural network to forecast time-series events and how to explain the results of the forecast. Rather than learning in detail the why of neural networks, you'll learn the how.

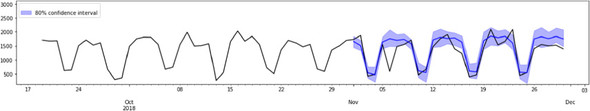

Figure 6.1 shows the predicted versus actual power consumption for one of Kiara's sites for a six-week period from mid-October 2018 to the end of November 2018. The site follows a weekly pattern, with higher usage on weekdays and very low usage on Sundays.

The shaded area shows the range Kiara predicted with 80% accuracy. When Kiara calculates the average error for her prediction, she discovers it is 5.7%, which means that for any predicted amount, it is more likely to be within 5.7% of the predicted figure than not. Using SageMaker, you can do all of this without an in-depth understanding of how neural networks actually function. And, in our view, that's OK.

To understand how neural networks can be used for time-series forecasting, you first need to understand why time-series forecasting is a thorny issue. Once you understand this, you'll see what a neural network is and how a neural network can be applied to time-series forecasting. Then you'll roll up your sleeves, fire up SageMaker, and see it in action on real data.

Note

The power consumption data you'll use in this chapter is provided by BidEnergy (http://www.bidenergy.com), a company that specializes in power-usage forecasting and in minimizing power expenditure. The algorithms used by BidEnergy are more sophisticated than you'll see in this chapter, but you'll get a feel for how machine learning in general, and neural networks in particular, can be applied to forecasting problems.

Time-series data consists of a number of observations at particular intervals. For example, if you created a time series of your weight, you could record your weight on the first of every month for a year. Your time series would have 12 observations with a numerical value for each observation. Table 6.1 shows what this might look like.

Table 6.1. Time-series data showing my (Doug's) weight in kilograms over the past year

| Date | Weight (kg) |
|---|---|
| 2018-01-01 | 75 |
| 2018-02-01 | 73 |
| 2018-03-01 | 72 |
| 2018-04-01 | 71 |
| 2018-05-01 | 72 |
| 2018-06-01 | 71 |
| 2018-07-01 | 70 |
| 2018-08-01 | 73 |
| 2018-09-01 | 70 |
| 2018-10-01 | 69 |
| 2018-11-01 | 72 |
| 2018-12-01 | 74 |

It's pretty boring to look at a table of data. It's hard to get a real understanding of the data when it is presented in a table format. Line charts are the best way to view data. Figure 6.2 shows the same data presented as a chart.

Figure 6.2. A line chart displays the same time-series data showing my weight in kilograms over the past year.

You can see from this time series that the date is on the left and my weight is on the right. If you wanted to record the time series of body weight for your entire family, for example, you would add a column for each of your family members. In table 6.2, you can see my weight and the weight of each of my family members over the course of a year.

Table 6.2. Time-series data showing the weight in kilograms of family members over a year

| Date | Me | Child 1 | Child 2 | Spouse |
|---|---|---|---|---|
| 2018-01-01 | 75 | 52 | 38 | 67 |
| 2018-02-01 | 73 | 52 | 39 | 68 |
| 2018-03-01 | 72 | 53 | 40 | 65 |
| 2018-04-01 | 71 | 53 | 41 | 63 |
| 2018-05-01 | 72 | 54 | 42 | 64 |
| 2018-06-01 | 71 | 54 | 42 | 65 |
| 2018-07-01 | 70 | 55 | 42 | 65 |
| 2018-08-01 | 73 | 55 | 43 | 66 |
| 2018-09-01 | 70 | 56 | 44 | 65 |
| 2018-10-01 | 69 | 57 | 45 | 66 |
| 2018-11-01 | 72 | 57 | 46 | 66 |
| 2018-12-01 | 74 | 57 | 46 | 66 |

And, once you have that, you can visualize the data as four separate charts, as shown in figure 6.3.

Figure 6.3. Line charts display the same time-series data showing the weight in kilograms of members of a family over the past year.

You'll see the chart formatted in this way throughout this chapter and the next. It is a common format used to concisely display time-series data.

Power consumption data is displayed in a manner similar to our weight data. Kiara's company has 48 different business sites (retail outlets and warehouses), so each site gets its own column when you compile the data. Each observation is a cell in that column. Table 6.3 shows a sample of the electricity data used in this chapter.

Table 6.3. Power usage data sample for Kiara’s company in 30-minute intervals

| Time | Site_1 | Site_2 | Site_3 | Site_4 | Site_5 | Site_6 |
|---|---|---|---|---|---|---|
| 2017-11-01 00:00:00 | 13.30 | 13.3 | 11.68 | 13.02 | 0.0 | 102.9 |
| 2017-11-01 00:30:00 | 11.75 | 11.9 | 12.63 | 13.36 | 0.0 | 122.1 |
| 2017-11-01 01:00:00 | 12.58 | 11.4 | 11.86 | 13.04 | 0.0 | 110.3 |

This data looks similar to the data in table 6.2, which shows the weight of each family member each month. The difference is that instead of each column representing a family member, in Kiara's data, each column represents a site (office or warehouse location) for her company. And instead of each row representing a person's weight on the first day of the month, each row of Kiara's data shows how much power each site used during that interval.

Now that you see how time-series data can be represented and visualized, you are ready to see how to use a Jupyter notebook to visualize this data.

To help you understand how to display time-series data in SageMaker, for the first time in this book, you'll work with a Jupyter notebook that does not contain a SageMaker machine learning model. Fortunately, because the SageMaker environment is simply a standard Jupyter Notebook server with access to SageMaker models, you can use SageMaker to run ordinary Jupyter notebooks as well. You'll start by downloading and saving the Jupyter notebook at

You'll upload it to the same SageMaker environment you have used for previous chapters. As you did for previous chapters, you'll set up a notebook on SageMaker. If you skipped the earlier chapters, follow the instructions in appendix C on how to set up SageMaker.

When you go to SageMaker, you'll see your notebook instances. The notebook instances you created for the previous chapters (or the one that you've just created by following the instructions in appendix C) will either say Open or Start. If it says Start, click the Start link and wait a couple of minutes for SageMaker to start. Once it displays Open Jupyter, select that link to open your notebook list (figure 6.4).

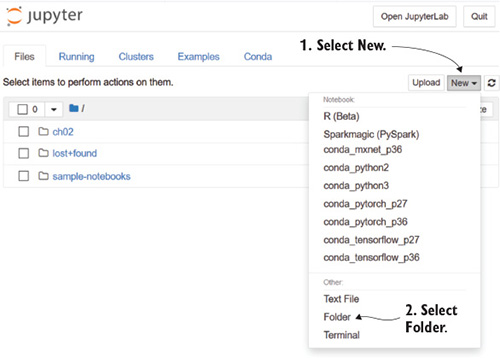

Create a new folder for chapter 6 by clicking New and selecting Folder at the bottom of the dropdown list (figure 6.5). This creates a new folder called Untitled Folder.

To rename the folder, tick the checkbox next to Untitled Folder, and you will see the Rename button appear. Click it and change the name to ch06. Click the ch06 folder and you will see an empty notebook list. Click Upload to upload the time_series_practice.ipynb notebook to the folder.

After uploading the file, you'll see the notebook in your list. Click it to open it. You are now ready to work with the time_series_practice notebook. But before we set up the time-series data for this notebook, let's look at some of the theory and practices surrounding time-series analysis.

Jupyter notebooks and pandas are excellent tools for working with time-series data. In the SageMaker neural network notebook you'll create later in this chapter, you'll use pandas and a data visualization library called Matplotlib to prepare the data for the neural network and to analyze the results. To help you understand how it works, you'll get your hands dirty with a time-series notebook that visualizes the weight of four different people over the course of a year.

To use a Jupyter notebook to visualize data, you'll need to set up the notebook to do so. As shown in listing 6.1, you first need to tell Jupyter that you intend to display some charts in this notebook. You do this with the line %matplotlib inline, as shown in line 1.

Listing 6.1. Displaying charts

%matplotlib inline #1
import pandas as pd #2
import matplotlib.pyplot as plt #3

Matplotlib is a Python charting library, but there are lots of Python charting libraries that you could use. We have selected Matplotlib because it is widely available (although it is a third-party package rather than part of the Python standard library) and, for simple things, is easy to use.

The reason line 1 starts with a % symbol is that the line is an instruction to Jupyter, rather than a line in your code. It tells the Jupyter notebook that you'll be displaying charts, so it should load the software to do this into the notebook. This is called a magic command.

The magic command: Is it really magic?

Actually, it is. When you see a command in a Jupyter notebook that starts with % or with %%, the command is known as a magic command. Magic commands provide additional features to the Jupyter notebook, such as the ability to display charts or run external scripts. You can read more about magic commands at https://ipython.readthedocs.io/en/stable/interactive/magics.html.

As you can see in listing 6.1, after loading the Matplotlib functionality into your Jupyter notebook, you then imported the libraries: pandas and Matplotlib. (Remember that in line 1 of the listing, where you referenced %matplotlib inline, you did not import the Matplotlib library; line 3 is where you imported that library.)

After importing the relevant libraries, you then need to get some data. When working with SageMaker in the previous chapters, you loaded that data from S3. For this notebook, because you are just learning about visualization with pandas and Jupyter Notebook, you'll just create some data and send it to a pandas DataFrame, as shown in the next listing.

Listing 6.2. Inputting the time-series data

my_weight = [ #1
    {'month': '2018-01-01', 'Me': 75},
    {'month': '2018-02-01', 'Me': 73},
    {'month': '2018-03-01', 'Me': 72},
    {'month': '2018-04-01', 'Me': 71},
    {'month': '2018-05-01', 'Me': 72},
    {'month': '2018-06-01', 'Me': 71},
    {'month': '2018-07-01', 'Me': 70},
    {'month': '2018-08-01', 'Me': 73},
    {'month': '2018-09-01', 'Me': 70},
    {'month': '2018-10-01', 'Me': 69},
    {'month': '2018-11-01', 'Me': 72},
    {'month': '2018-12-01', 'Me': 74}
]
df = pd.DataFrame(my_weight).set_index('month') #2
df.index = pd.to_datetime(df.index) #3
df.head() #4

Now, here's where the real magic lies. To display a chart, all you need to do is type the following line into the Jupyter notebook cell:

df.plot()

The Matplotlib library recognizes that the data is time-series data from the index type you set in line 3 of listing 6.2, so it just works. Magic! The output of the df.plot() command is shown in figure 6.6.
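If a chart does not come out with dates along the x-axis, one thing worth checking is the type of the index. The following is a minimal sketch (the names `weights` and `df_demo` are made up for illustration) showing that `pd.to_datetime` turns an index of date strings into a pandas `DatetimeIndex`, which is the index type the plotting code relies on.

```python
import pandas as pd

# Two rows in the same shape as listing 6.2 (illustrative data only).
weights = [
    {'month': '2018-01-01', 'Me': 75},
    {'month': '2018-02-01', 'Me': 73},
]

df_demo = pd.DataFrame(weights).set_index('month')
print(type(df_demo.index[0]))  # the index still holds plain strings here

# Convert the string index into real timestamps.
df_demo.index = pd.to_datetime(df_demo.index)
print(type(df_demo.index))     # now a DatetimeIndex
```

Once the index holds timestamps, you can also select rows by date range, which later listings in this chapter do with `.loc`.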

To expand the data to include the weight of the entire family, you first need to set up the data. The following listing shows the data set expanded to include data from every family member.

Listing 6.3. Inputting time-series data for the whole family

family_weight = [ #1
    {'month': '2018-01-01', 'Me': 75, 'spouse': 67,
     'ch_1': 52, 'ch_2': 38},
    {'month': '2018-02-01', 'Me': 73, 'spouse': 68,
     'ch_1': 52, 'ch_2': 39},
    {'month': '2018-03-01', 'Me': 72, 'spouse': 65,
     'ch_1': 53, 'ch_2': 40},
    {'month': '2018-04-01', 'Me': 71, 'spouse': 63,
     'ch_1': 53, 'ch_2': 41},
    {'month': '2018-05-01', 'Me': 72, 'spouse': 64,
     'ch_1': 54, 'ch_2': 42},
    {'month': '2018-06-01', 'Me': 71, 'spouse': 65,
     'ch_1': 54, 'ch_2': 42},
    {'month': '2018-07-01', 'Me': 70, 'spouse': 65,
     'ch_1': 55, 'ch_2': 42},
    {'month': '2018-08-01', 'Me': 73, 'spouse': 66,
     'ch_1': 55, 'ch_2': 43},
    {'month': '2018-09-01', 'Me': 70, 'spouse': 65,
     'ch_1': 56, 'ch_2': 44},
    {'month': '2018-10-01', 'Me': 69, 'spouse': 66,
     'ch_1': 57, 'ch_2': 45},
    {'month': '2018-11-01', 'Me': 72, 'spouse': 66,
     'ch_1': 57, 'ch_2': 46},
    {'month': '2018-12-01', 'Me': 74, 'spouse': 66,
     'ch_1': 57, 'ch_2': 46}
]
df2 = pd.DataFrame(
    family_weight).set_index('month') #2
df2.index = pd.to_datetime(df2.index) #3
df2.head() #4

Displaying four charts in Matplotlib is a little more complex than displaying one chart. You need to first create an area to display the charts, and then you need to loop across the columns of data to display the data in each column. Because this is the first loop you've used in this book, we'll go into some detail about it.

The loop is called a for loop, which means you give it a list (usually) and step through each item in the list. This is the most common type of loop you'll use in data analysis and machine learning because most of the things you'll loop through are lists of items or rows of data.

The standard way to loop is shown in the next listing. Line 1 of this listing defines a list of three items: A, B, and C. Line 2 sets up the loop, and line 3 prints each item.

Listing 6.4. Standard way to loop through a list

my_list = ['A', 'B', 'C'] #1
for item in my_list: #2
    print(item) #3

Running this code prints A, B, and C, each on its own line.

When creating charts with Matplotlib, in addition to looping, you need to keep track of how many times you have looped. Python has a nice way of doing this: it's called enumerate.

To enumerate through a list, you provide two variables to store the information from the loop, and you wrap the list you are looping through in enumerate. The enumerate function returns two such variables. The first variable is the count of how many times you've looped (starting with zero), and the second variable is the item retrieved from the list. Listing 6.6 shows listing 6.4 converted to an enumerated for loop.

Listing 6.6. Standard way to enumerate through a loop

my_list = ['A', 'B', 'C'] #1
for i, item in enumerate(my_list): #2
    print(f'{i}. {item}') #3

Running this code creates the same output as that shown in listing 6.5 but also allows you to display how many times you have looped through the list. To run the code, click the cell and run it. The next listing shows the output from using the enumerate function in your loop.
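As a quick illustration of what enumerate hands back on each pass, the sketch below collects the (count, item) pairs into a list instead of printing them one at a time. The `start=1` variant is an extra shown for illustration; it is not used elsewhere in this chapter.

```python
my_list = ['A', 'B', 'C']

# enumerate yields (count, item) pairs; the count starts at zero by default.
pairs = list(enumerate(my_list))
print(pairs)

# Passing start=1 makes the count begin at one instead.
numbered = [f'{i}. {item}' for i, item in enumerate(my_list, start=1)]
print(numbered)
```

The zero-based count is the one you want when the number is used as a position in another list, which is how the charting code in this chapter uses it.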

With that as background, you are ready to create multiple charts in Matplotlib.

In Matplotlib, you use the subplots functionality to create multiple charts. To fill the subplots with data, you loop through each of the columns in the table showing the weight of each family member (listing 6.3) and display the data from each column.

Listing 6.8. Charting time-series data for the whole family

start_date = "2018-01-01" #1
end_date = "2018-12-31" #2
fig, axs = plt.subplots(
    2,
    2,
    figsize=(12, 5),
    sharex=True) #3
axx = axs.ravel() #4
for i, column in enumerate(df2.columns): #5
    df2[df2.columns[i]].loc[start_date:end_date].plot(
        ax=axx[i]) #6
    axx[i].set_xlabel("month") #7
    axx[i].set_ylabel(column) #8

In line 3 of listing 6.8, you state that you want to display a grid of 2 charts by 2 charts with a width of 12 inches and height of 5 inches. This creates four Matplotlib objects in a 2-by-2 grid that you can fill with data. You use the code in line 4 to turn the 2-by-2 grid into a list that you can loop through. The variable axx stores the list of Matplotlib subplots that you will fill with data.

When you run the code in the cell by clicking into the cell and running it, you can see the chart, as shown in figure 6.7.

Figure 6.7. Time-series data generated with code, showing the weight of family members over the past year.
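To see what the ravel step in listing 6.8 does without drawing anything, here is a small sketch that uses a NumPy array of labels as a stand-in for the 2-by-2 array of subplot objects (with two or more rows and columns, `plt.subplots` returns its subplots in a NumPy array). The label strings are made up for illustration.

```python
import numpy as np

# Stand-in for the 2-by-2 array of subplots returned by plt.subplots(2, 2).
grid = np.array([['top-left', 'top-right'],
                 ['bottom-left', 'bottom-right']])

# ravel flattens the grid row by row into a one-dimensional array,
# so each chart can be reached with a single position number.
flat = grid.ravel()

for i, name in enumerate(flat):
    print(i, name)
```

This is why the loop in listing 6.8 can use the single counter `i` from enumerate to pick both the column of data and the subplot to draw it in.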

So far in this chapter, you've looked at time-series data, learned how to loop through it, and learned how to visualize it. Now you'll learn why neural networks are a good way to forecast time-series events.

Neural networks (sometimes referred to as deep learning) approach machine learning in a different way than traditional machine learning models such as XGBoost. Although both XGBoost and neural networks are examples of supervised machine learning, each uses different tools to tackle the problem. XGBoost uses an ensemble of approaches to attempt to predict the target result, whereas a neural network uses just one approach.

A neural network attempts to solve the problem by using layers of interconnected neurons. The neurons take inputs in one end and push outputs to the other side. The connections between the neurons have weights assigned to them. If a neuron receives enough weighted input, then it fires and pushes the signal to the next layer of neurons it's connected to.

Definition

Imagine you're a neuron in a neural network designed to filter gossip based on how salacious it is or how true it is. You have ten people (the interconnected neurons) who tell you gossip, and if it is salacious enough, true enough, or a combination of the two, you'll pass it on to the ten people who you send gossip to. Otherwise, you'll keep it to yourself.

Also imagine that some of the people who send you gossip are not very trustworthy, whereas others are completely honest. (The trustworthiness of your sources changes when you get feedback on whether the gossip was true or not and how salacious it was perceived to be.) You might not pass on a piece of gossip from several of your least trustworthy sources, but you might pass on gossip that the most trusted people tell you, even if only one person tells you this gossip.
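The gossip analogy can be sketched as a single threshold neuron. This is a deliberately simplified illustration, not how DeepAR or any production network is implemented: real networks use smoother activation functions and learn their weights during training. The function name, the trust values, and the threshold are all made up for the example.

```python
def neuron_fires(inputs, weights, threshold):
    """Return True if the weighted sum of the inputs reaches the threshold."""
    weighted_sum = sum(x * w for x, w in zip(inputs, weights))
    return weighted_sum >= threshold

# How much you trust each of three sources (the connection weights).
trust = [0.2, 0.3, 0.9]
threshold = 0.8

# Two untrustworthy sources tell you the gossip (1 = told you, 0 = silent).
two_weak_sources = neuron_fires([1, 1, 0], trust, threshold)

# Only your most trusted source tells you the gossip.
one_strong_source = neuron_fires([0, 0, 1], trust, threshold)

print(two_weak_sources, one_strong_source)
```

With these numbers, gossip from the two weak sources adds up to 0.5 and is kept to yourself, whereas gossip from the single trusted source (0.9) is passed on.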

Now, let's look at a specific time-series neural network algorithm that you'll use to forecast power consumption.

What is DeepAR?

DeepAR is Amazon's time-series neural network algorithm that takes as its input related types of time-series data and automatically combines the data into a global model of all time series in a data set. It then uses this global model to predict future events. In this way, DeepAR is able to incorporate different types of time-series data (such as power consumption, temperature, wind speed, and so on) into a single model that is used in our example to predict power consumption.

In this chapter, you are introduced to DeepAR, and you'll build a model for Kiara that uses historical data from her 48 sites. In chapter 7, you will incorporate other features of the DeepAR algorithm (such as weather patterns) to enhance your prediction.[a]

[a] You can read more about DeepAR on this AWS site: https://docs.aws.amazon.com/sagemaker/latest/dg/deepar.html.

Now that you have a deeper understanding of neural networks and of how DeepAR works, you can set up another notebook in SageMaker and make some decisions. As you did in the previous chapters, you are going to do the following:

- Upload a data set to S3

- Set up a notebook on SageMaker

- Upload the starting notebook

- Run it against the data

Tip

If you're jumping into the book at this chapter, you might want to visit the appendixes, which show you how to do the following:

- Appendix A: sign up to AWS, Amazon's web service

- Appendix B: set up S3, AWS's file storage service

- Appendix C: set up SageMaker

To set up the data set for this chapter, you'll follow the same steps as you did in appendix B. You don't need to set up another bucket though. You can just go to the same bucket you created earlier. In our example, we called the bucket mlforbusiness, but your bucket will be called something different.

When you go to your S3 account, you will see a list of your buckets. Clicking the bucket you created for this book, you might see the ch02, ch03, ch04, and ch05 folders if you created these in the previous chapters. For this chapter, you'll create a new folder called ch06. You do this by clicking Create Folder and following the prompts to create a new folder.

Once you've created the folder, you are returned to the folder list inside your bucket. There you will see you now have a folder called ch06.

Now that you have the ch06 folder set up in your bucket, you can upload your data file and start setting up the decision-making model in SageMaker. To do so, click the folder and download the data file at this link:

Then upload the CSV file into the ch06 folder by clicking Upload. Now you're ready to set up the notebook instance.

Just as we prepared the CSV data you uploaded to S3 for this scenario, we've already prepared the Jupyter notebook you'll use now. You can download it to your computer by navigating to this URL:

Once you have downloaded the notebook to your computer, you can upload it to the same SageMaker folder you used to work with the time_series_practice.ipynb notebook. (Click Upload to upload the notebook to the folder.)

As in the previous chapters, you will go through the code in six parts:

- Load and examine the data.

- Get the data into the right shape.

- Create training and validation datasets.

- Train the machine learning model.

- Host the machine learning model.

- Test the model and use it to make decisions.

As in the previous chapters, the first step is to say where you are storing the data. To do that, you need to change 'mlforbusiness' to the name of the bucket you created when you uploaded the data, and rename its subfolder to the name of the subfolder on S3 where you want to store the data (listing 6.9).

If you named the S3 folder ch06, then you don't need to change the name of the folder. If you kept the name of the CSV file that you uploaded earlier in the chapter, then you don't need to change the meter_data.csv line of code. If you changed the name of the CSV file, then update meter_data.csv to the name you changed it to. To run the code in the notebook cell, click the cell and run it.

Listing 6.9. Say where you are storing the data

data_bucket = 'mlforbusiness' #1
subfolder = 'ch06' #2
dataset = 'meter_data.csv' #3

Many of the Python modules and libraries imported in listing 6.10 are the same as the imports you used in previous chapters, but you'll also use Matplotlib in this chapter.

Listing 6.10. Importing the modules

%matplotlib inline #1
import datetime #2
import json #3
import random #4
from random import shuffle #5
import boto3 #6
import ipywidgets as widgets #7
import matplotlib.pyplot as plt #8
import numpy as np #9
import pandas as pd #10
from dateutil.parser import parse #11
import s3fs #12
import sagemaker #13
role = sagemaker.get_execution_role() #14
s3 = s3fs.S3FileSystem(anon=False) #15

Next, you'll load and view the data. In listing 6.11, you read in the CSV data and display the top 5 rows in a pandas DataFrame. Each row of the data set holds the energy usage for one 30-minute interval, and the data covers a 13-month period from November 1, 2017, to mid-December 2018. Each column represents one of the 48 retail sites owned by Kiara's company.

Listing 6.11. Loading and viewing the data

s3_data_path = \
    f"s3://{data_bucket}/{subfolder}/data" #1
s3_output_path = \
    f"s3://{data_bucket}/{subfolder}/output" #2
df = pd.read_csv(
    f's3://{data_bucket}/{subfolder}/{dataset}',
    index_col=0) #3
df.head() #4

Table 6.4 shows the output of running display(df[5:8]). The table only shows the first 6 sites, but the data set in the Jupyter notebook has all 48 sites.

Table 6.4. Power usage data in half-hour intervals

| Index | Site_1 | Site_2 | Site_3 | Site_4 | Site_5 | Site_6 |
|---|---|---|---|---|---|---|
| 2017-11-01 00:00:00 | 13.30 | 13.3 | 11.68 | 13.02 | 0.0 | 102.9 |
| 2017-11-01 00:30:00 | 11.75 | 11.9 | 12.63 | 13.36 | 0.0 | 122.1 |
| 2017-11-01 01:00:00 | 12.58 | 11.4 | 11.86 | 13.04 | 0.0 | 110.3 |

You can see that column 5 in table 6.4 shows zero consumption for the first hour and a half in November. We don't know if this is an error in the data or if the stores didn't consume any power during that period. We'll discuss the implications of this as you work through the analysis.
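One way to find out how widespread such zero readings are is to count them per site. The sketch below does this on a tiny hand-built DataFrame that copies three columns from table 6.4; the names `sample` and `zero_counts` are illustrative, and in the notebook you would run the same expression against the full `df` instead.

```python
import pandas as pd

# Three rows and three sites copied from table 6.4 (illustrative subset).
sample = pd.DataFrame(
    {'Site_4': [13.02, 13.36, 13.04],
     'Site_5': [0.0, 0.0, 0.0],
     'Site_6': [102.9, 122.1, 110.3]},
    index=pd.to_datetime(['2017-11-01 00:00:00',
                          '2017-11-01 00:30:00',
                          '2017-11-01 01:00:00']))

# Comparing with 0 gives True/False per cell; summing counts the Trues per column.
zero_counts = (sample == 0).sum()

# Show only the sites that have at least one zero reading.
print(zero_counts[zero_counts > 0])
```

A count like this does not tell you whether the zeros are errors or genuine closures, but it does show which sites deserve a closer look.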

Let's take a look at the size of your data set. When you run the code in listing 6.12, you can see that the data set has 48 columns (one column per site) and 19,632 rows of 30-minute usage data.

Listing 6.12. Viewing the number of rows and columns

print(f'Number of rows in dataset: {df.shape[0]}')

print(f'Number of columns in dataset: {df.shape[1]}')

Now that you've loaded the data, you need to get the data into the right shape so you can start working with it. This involves the following:

- Converting the data to the right interval

- Determining if missing values are going to create any issues

- Fixing any missing values if you need to

- Saving the data to S3

First, you will convert the data to the right interval. Time-series data from the power meters at each site is recorded in 30-minute intervals. The fine-grained nature of this data is useful for certain work, such as quickly identifying power spikes or drops, but it is not the right interval for our analysis.

For this chapter, because you are not combining this data set with any other datasets, you could use the data with the 30-minute interval to run your model. However, in the next chapter, you will combine the historical consumption data with daily weather forecast predictions to better predict the consumption over the coming month. The weather data that you'll use in chapter 7 reflects weather conditions set at daily intervals. Because you'll be combining the power consumption data with daily weather data, it is best to work with the power consumption data using the same interval.

Listing 6.13 shows how to convert 30-minute data to daily data. The pandas library contains many helpful features for working with time-series data. Among the most helpful is the resample function, which easily converts time-series data in a particular interval (such as 30 minutes) into another interval (such as daily).

In order to use the resample function, you need to ensure that your data set uses the date column as the index and that the index is in date-time format. As you might expect from its name, the index is used to reference a row in the data set. So, for example, a row with the index of 1:30 AM 1 November 2017 can be referenced by 1:30 AM 1 November 2017. The pandas library can take rows referenced by such indexes and convert them into other periods such as days, months, quarters, or years.

Listing 6.13. Converting data to daily figures

df.index = pd.to_datetime(df.index) #1

daily_df = df.resample('D').sum() #2

daily_df.head() #3

Table 6.5 shows the converted data in daily figures.

Table 6.5. Power usage data in daily intervals

| Index | Site_1 | Site_2 | Site_3 | Site_4 | Site_5 | Site_6 |
|---|---|---|---|---|---|---|
| 2017-11-01 | 1184.23 | 1039.1 | 985.95 | 1205.07 | None | 6684.4 |
| 2017-11-02 | 1210.9 | 1084.7 | 1013.91 | 1252.44 | None | 6894.3 |
| 2017-11-03 | 1247.6 | 1004.2 | 963.95 | 1222.4 | None | 6841 |
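To convince yourself of what the resample step does, you can try it on synthetic data where the answer is easy to check by hand. The sketch below is self-contained and uses made-up values (a constant 1.0 and 2.0 per half hour for two hypothetical sites over two days); the names `half_hourly` and `daily` are illustrative.

```python
import pandas as pd

# Two full days of 30-minute timestamps: 48 readings per day, 96 in total.
index = pd.date_range('2017-11-01', periods=96, freq='30min')

# Constant usage per half hour makes the daily totals easy to verify.
half_hourly = pd.DataFrame({'Site_1': 1.0, 'Site_2': 2.0}, index=index)

# Group the readings into calendar days and add them up.
daily = half_hourly.resample('D').sum()
print(daily)
```

Each day contains 48 half-hour readings, so the daily totals come to 48.0 for the first site and 96.0 for the second, and the 96 input rows collapse to 2 output rows.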

The following listing shows the number of rows and columns in the data set as well as the earliest and latest dates.

Listing 6.14. Viewing the data in daily intervals

print(daily_df.shape) #1

print(f'Time series starts at {daily_df.index[0]} \

and ends at {daily_df.index[-1]}') #2

In the output, you can now see that your data set has changed from more than 19,000 rows of 30-minute data to 409 rows of daily data, and the number of columns remains the same:

(409, 48)
Time series starts at 2017-11-01 00:00:00 and ends at 2018-12-14 00:00:00

As you work with the pandas library, you'll come across certain gems that allow you to handle a thorny problem in an elegant manner. The line of code shown in listing 6.15 is one such instance.

Basically, the data you are using in this chapter, with the exception of the first 30 days, is in good shape. It is missing a few observations, however, representing a handful of missing data points. This doesn't impact the training of the model (DeepAR handles missing values well), but you can't make predictions using data with missing values. To use this data set to make predictions, you need to ensure there are no missing values. This section shows you how to do that.

Note

You need to make sure that the data you are using for your predictions is complete and has no missing values.

The pandas fillna function has the ability to forward fill missing data. This means that you can tell fillna to fill any missing value with the preceding value. But Kiara knows that most of their locations follow a weekly cycle. If one of the warehouse sites (which are closed on weekends) is missing data for one Saturday, and you forward fill the day from Friday, your data will not be very accurate. Instead, the one-liner in the next listing replaces a missing value with the value from 7 days prior.

With that single line of code, you have replaced missing values with values from 7 days earlier.
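The one-liner itself is not reproduced in this section, so the following is only a sketch of one way to get the behavior described, assuming daily data with a date-time index. It combines the pandas `fillna` and `shift` methods on a small made-up DataFrame; the names `demo` and `filled` are illustrative, and the notebook's own line may differ.

```python
import numpy as np
import pandas as pd

# Fourteen days of made-up daily readings: 0.0, 1.0, ..., 13.0.
index = pd.date_range('2018-01-01', periods=14, freq='D')
demo = pd.DataFrame({'Site_1': np.arange(14, dtype=float)}, index=index)

# Knock out the reading for 10 January to simulate a missing value.
missing_day = pd.Timestamp('2018-01-10')
demo.loc[missing_day, 'Site_1'] = np.nan

# shift(7) moves every value down by 7 rows (7 days for daily data),
# and fillna uses that shifted copy only where the original is missing.
filled = demo.fillna(demo.shift(7))
print(filled.loc[missing_day, 'Site_1'])
```

Here the gap on 10 January is filled with the value from 3 January. Note that a gap in the first 7 days of the data has no earlier week to borrow from, so it would stay missing under this approach.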

Time-series data is best understood visually. To help you understand the data better, you can create charts showing the power consumption at each of the sites. The code in listing 6.16 is similar to the code you worked with in the practice notebook earlier in the chapter. The primary difference is that, instead of looping through every site, you set up a list of sites in a variable called indices and loop through that.

If you remember, in listing 6.10, you imported matplotlib.pyplot as plt. Now you can use all of the functions in plt. In line 2 of listing 6.16, you create a Matplotlib figure that contains a grid of charts with 5 rows and 2 columns. Line 3 of the listing flattens that grid into a one-dimensional array so that you can refer to each chart by a single position number.

In line 4, the indices are the column numbers of the sites in the data set. Remember that Python is zero-based, so 0 would be site 1. These 10 sites display in the grid you set up in line 2. To view other sites, just change the numbers and run the cell again.

Line 5 is a loop that goes through each of the indices you defined in line 4. For each index in the list, the loop adds data to the Matplotlib figure you created in line 2. Your figure contains a grid 2 charts wide by 5 charts long, so it has room for 10 charts, which is the same as the number of indices.

Line 6 works out which position in the grid the current site's chart occupies. Line 7 is where you put the data into the chart. daily_df is the data set that holds your daily power consumption data for each of the sites. The first part of the line selects the data that you'll display in the chart, and the final part inserts the data into the plot. Lines 8 and 9 set the labels on the charts.

Listing 6.16. Creating charts to show each site over a period of months

print('Number of time series:', daily_df.shape[1]) #1
fig, axs = plt.subplots(
    5,
    2,
    figsize=(20, 20),
    sharex=True) #2
axx = axs.ravel() #3
indices = [0, 1, 2, 3, 4, 5, 40, 41, 42, 43] #4
for i in indices: #5
    plot_num = indices.index(i) #6
    daily_df[daily_df.columns[i]].loc[
        "2017-11-01":"2018-01-31"].plot(
            ax=axx[plot_num]) #7
    axx[plot_num].set_xlabel("date") #8
    axx[plot_num].set_ylabel("kW consumption") #9
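The relationship between a site's column number and its position in the flattened grid can be sketched without drawing anything. The snippet below is only an illustration: `placements`, `grid_rows`, and `grid_cols` are hypothetical names, and it assumes the row-by-row ordering that `ravel()` uses by default. It also uses `enumerate`, which avoids searching the list with `indices.index(i)` on every pass.

```python
# Column numbers of the sites to chart (site column 0 is site 1).
indices = [0, 1, 2, 3, 4, 5, 40, 41, 42, 43]

# The figure in the listing has 5 rows and 2 columns of charts.
grid_rows, grid_cols = 5, 2

# enumerate yields the position in the list alongside the site column,
# and divmod converts that flat position into a (row, column) pair.
placements = {}
for plot_num, site_col in enumerate(indices):
    row, col = divmod(plot_num, grid_cols)
    placements[site_col] = (plot_num, row, col)

for site_col, (plot_num, row, col) in placements.items():
    print(f"site column {site_col} -> chart {plot_num} (row {row}, col {col})")
```

With duplicate entries in `indices`, `indices.index(i)` would always return the first match, so `enumerate` is the safer choice if you edit the list.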

Now that you can see what your data looks like, you can create your training and test datasets.

DeepAR requires the data to be in JSON format. JSON is a very common data format that can be read by people and by machines.

A hierarchical structure that you commonly use is the folder system on your computer. When you store documents relating to different projects you are working on, you might create a folder for each project and put the documents relating to each project in that folder. That is a hierarchical structure.

In this chapter, you will create a JSON file with a simple structure. Instead of project folders holding project documents (like the previous folder example), each element in your JSON file will hold daily power consumption data for one site. Also, each element will hold two additional elements, as shown in listing 6.17. The first element is start, which contains the date, and the second is target, which contains each day's power consumption data for the site. Because your data set covers 409 days, there are 409 elements in the target element.

Listing 6.17. Sample JSON file

{
    "start": "2017-11-01 00:00:00",
    "target": [
        1184.23,
        1210.9000000000003,
        1042.9000000000003,
        ...
        1144.2500000000002,
        1225.1299999999999
    ]
}

To create the JSON file, you need to take the data through a few transformations:

- Convert the data from a DataFrame to a list of series

- Withhold 30 days of data from the training data set so you don't train the model on data you are testing against

- Create the JSON files

The first transformation is converting the data from a DataFrame to a list of data series, with each series containing the power consumption data for a single site. The following listing shows how to do this.

Listing 6.18. Converting a DataFrame to a list of series

daily_power_consumption_per_site = [] #1
for column in daily_df.columns: #2
    # Select this site's column, then replace any remaining gaps with 0
    site_consumption = daily_df[column].fillna(0) #3
    daily_power_consumption_per_site.append(
        site_consumption) #4
print(f'Time series covers '
      f'{len(daily_power_consumption_per_site[0])} days.') #5
print(f'Time series starts at '
      f'{daily_power_consumption_per_site[0].index[0]}') #6
print(f'Time series ends at '
      f'{daily_power_consumption_per_site[0].index[-1]}') #7
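The same conversion can be tried on a tiny, made-up frame to see what the resulting list looks like. This is a sketch under stated assumptions: `sample_df`, its two columns, and its values are hypothetical stand-ins for `daily_df`, and `series_per_site` plays the role of `daily_power_consumption_per_site`.

```python
import numpy as np
import pandas as pd

# Hypothetical five days of readings for two sites (values are made up).
dates = pd.date_range("2017-11-01", periods=5, freq="D")
sample_df = pd.DataFrame(
    {
        "site_1": [10.0, 11.0, np.nan, 13.0, 14.0],
        "site_2": [20.0, 21.0, 22.0, 23.0, 24.0],
    },
    index=dates,
)

# One pandas Series per column; any value still missing becomes 0.
series_per_site = [sample_df[column].fillna(0) for column in sample_df.columns]

print(f"Number of series: {len(series_per_site)}")
print(f"Each series covers {len(series_per_site[0])} days")
print(f"First series starts at {series_per_site[0].index[0]}")
print(f"First series ends at {series_per_site[0].index[-1]}")
```

Each element of the list keeps the date index of the original frame, which is what later makes it possible to slice a series by date.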

In line 1 in the listing, you create a list to hold each of your sites. Each element of this list holds one column of the data set. Line 2 creates a loop to iterate through the columns. Line 3 replaces any remaining missing values in the column with 0, and line 4 appends the column of data to the daily_power_consumption_per_site list you created in line 1. Lines 5 through 7 print the results so you can confirm that the conversion still has the same number of days and covers the same period as the data in the DataFrame.

Next, you set a couple of variables that will help you keep the time periods and intervals consistent throughout the notebook. The first variable is freq, which you set to D. D stands for day, and it means that you are working with daily data. If you were working with hourly data, you'd use H, and monthly data is M.

You also set your prediction period. This is the number of days out that you want to predict. For example, in this notebook, the training data set goes from November 1, 2017, to October 31, 2018, and you are predicting power consumption for November 2018. November has 30 days, so you set the prediction_length to 30.

Once you have set the variables in lines 1 and 2 of listing 6.19, you then define the start and end dates in a timestamp format. Timestamp is a data format that stores dates, times, and frequencies as a single object. This allows for easy transformation from one frequency to another (such as daily to monthly) and easy addition and subtraction of dates and times.

In line 3, you set the start_date of the data set to November 1, 2017. In line 4, you set the end date of the training data set to 364 days later, which is the end of October 2018. In line 5, you set the end date of the test data set to 30 days after that. Notice that you can simply add days to the original timestamp, and the dates are automatically calculated.

Listing 6.19. Setting the length of the prediction period

freq = 'D' #1
prediction_length = 30 #2
start_date = pd.Timestamp(
    "2017-11-01 00:00:00",
    freq=freq) #3
end_training = start_date + 364 #4
end_testing = end_training + prediction_length #5
print(f'End training: {end_training}, End testing: {end_testing}')
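The date arithmetic can be checked independently of the rest of the notebook. The sketch below is an alternative spelling, not the listing itself: it assumes a newer pandas release, where adding a plain integer to a Timestamp and passing `freq` to `pd.Timestamp` are no longer supported, and so it uses `pd.Timedelta` to add days explicitly.

```python
import pandas as pd

prediction_length = 30  # days in November

# Start of the data set.
start_date = pd.Timestamp("2017-11-01 00:00:00")

# 364 days after the start is the last day of the training period.
end_training = start_date + pd.Timedelta(days=364)

# The test period extends the training period by the prediction length.
end_testing = end_training + pd.Timedelta(days=prediction_length)

print(f"End training: {end_training}, End testing: {end_testing}")
```

If you adopt this style, later expressions such as `start_date+30` would need the same treatment (for example, `start_date + pd.Timedelta(days=30)`), because they rely on the same integer arithmetic.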

The DeepAR JSON input format represents each time series as a JSON object. In the simplest case (which you will use in this chapter), each time series consists of a start timestamp (start) and a list of values (target). The JSON input format is a JSON file that shows the daily power consumption for each of the 48 sites that Kiara is reporting on. The DeepAR model requires two JSON files: the first is the training data and the second is the test data.

Creating JSON files is a two-step process. First, you create a Python dictionary with a structure identical to the JSON file, and then you convert the Python dictionary to JSON and save the file.

To create the Python dictionary format, you loop through each of the series in the daily_power_consumption_per_site list you created in listing 6.18 and set the start variable and target list. Listing 6.20 uses a type of Python loop called a list comprehension. The code between the open and close curly brackets (lines 2 and 5 of listing 6.20) marks the start and end of each element in the JSON file shown in listing 6.17. The code in lines 3 and 4 inserts the start date and a list of daily values from the training data set.

Lines 1 and 7 mark the beginning and end of the list comprehension. The loop is described in line 6. The code states that the variable ts will be used to hold each site's series in turn as it loops through the daily_power_consumption_per_site list. That is why, in line 4, you see the expression ts[start_date:end_training]. The code ts[start_date:end_training] is a slice that contains, for one site, all of the days in the range start_date to end_training that you set in listing 6.19.

Listing 6.20. Creating a Python dictionary in same structure as a JSON file

training_data = [ #1
    { #2
        "start": str(start_date), #3
        "target": ts[
            start_date:end_training].tolist() #4
    } #5
    for ts in daily_power_consumption_per_site #6
] #7
test_data = [
    {
        "start": str(start_date),
        "target": ts[
            start_date:end_testing].tolist() #8
    }
    for ts in daily_power_consumption_per_site
]
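To see what the list comprehension produces, you can run the same pattern on two short, made-up series. Everything here is illustrative: `series_per_site`, `sample_training`, the three-day training window, and the values are assumptions chosen to keep the output small, and `.loc` is used to make the date-based slicing explicit.

```python
import pandas as pd

# Hypothetical dates: a five-day data set with a three-day training window.
start_date = pd.Timestamp("2017-11-01")
end_training = start_date + pd.Timedelta(days=2)
dates = pd.date_range(start_date, periods=5, freq="D")

# Two made-up sites, each a Series indexed by date.
series_per_site = [
    pd.Series([10.0, 11.0, 12.0, 13.0, 14.0], index=dates),
    pd.Series([20.0, 21.0, 22.0, 23.0, 24.0], index=dates),
]

# One dictionary per site; date slices with .loc include both end points.
sample_training = [
    {
        "start": str(start_date),
        "target": ts.loc[start_date:end_training].tolist(),
    }
    for ts in series_per_site
]

for record in sample_training:
    print(record)
```

Because label-based slices include the end date, the three-day window yields three values per site; the last two days of each series are held back, which mirrors how the training set withholds the prediction period.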

Now that you have created two Python dictionaries called test_data and training_data, you need to save these as JSON files on S3 for DeepAR to work with. To do this, create a helper function that converts a Python dictionary to JSON and then apply that function to the test_data and training_data dictionaries, as shown in the following listing.

Listing 6.21. Saving the JSON files to S3

def write_dicts_to_s3(path, data): #1
    with s3.open(path, 'wb') as f: #2
        for d in data: #3
            f.write(json.dumps(d).encode("utf-8")) #4
            f.write("\n".encode('utf-8')) #5
write_dicts_to_s3(
    f'{s3_data_path}/train/train.json',
    training_data) #6
write_dicts_to_s3(
    f'{s3_data_path}/test/test.json',
    test_data) #7
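The file layout the helper produces (one JSON object per line) can be inspected locally before anything is uploaded. The sketch below is a stand-in rather than the S3 helper itself: `write_dicts`, `sample_records`, and the in-memory `buffer` are hypothetical, and an `io.BytesIO` object takes the place of the file opened on S3.

```python
import io
import json

def write_dicts(file_obj, records):
    """Write each dictionary as one UTF-8 encoded JSON object per line."""
    for record in records:
        file_obj.write(json.dumps(record).encode("utf-8"))
        file_obj.write(b"\n")

# Two made-up time series in the start/target structure.
sample_records = [
    {"start": "2017-11-01 00:00:00", "target": [10.0, 11.0]},
    {"start": "2017-11-01 00:00:00", "target": [20.0, 21.0]},
]

# An in-memory buffer stands in for the file on S3.
buffer = io.BytesIO()
write_dicts(buffer, sample_records)

# Read the lines back to confirm each one is a complete JSON object.
lines = buffer.getvalue().decode("utf-8").splitlines()
round_tripped = [json.loads(line) for line in lines]
print(lines[0])
```

Writing one object per line, instead of a single JSON array, means each line can be parsed on its own, which is why the helper writes a newline after every dictionary.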

Your training and test data are now stored on S3 in JSON format. With that, the data is in a SageMaker session and you are ready to start training the model.

Now that you have saved the data on S3 in JSON format, you can start training the model. As shown in the following listing, the first step is to set up some variables that you will hand to the estimator function that will build the model.

Listing 6.22. Setting up a server to train the model

s3_output_path = \
    f's3://{data_bucket}/{subfolder}/output' #1
sess = sagemaker.Session() #2
image_name = sagemaker.amazon.amazon_estimator.get_image_uri(
    sess.boto_region_name,
    "forecasting-deepar",
    "latest") #3

Next, you hand the variables to the estimator (listing 6.23). This sets up the type of machine that SageMaker will fire up to create the model. You will use a single instance of a c5.2xlarge machine. SageMaker creates this machine, starts it, builds the model, and shuts it down automatically. The cost of this machine is about US$0.47 per hour. It will take about 3 minutes to create the model, which means it will cost only a few cents.

Listing 6.23. Setting up an estimator to hold training parameters

estimator = sagemaker.estimator.Estimator( #1
    sagemaker_session=sess, #2
    image_name=image_name, #3
    role=role, #4
    train_instance_count=1, #5
    train_instance_type='ml.c5.2xlarge', #6
    base_job_name='ch6-energy-usage', #7
    output_path=s3_output_path #8
)

Richie’s note on SageMaker instance types

Throughout this book, you will notice that we have chosen to use the instance type ml.m4.xlarge for all training and inference instances. The reason behind this decision was simply that usage of these instance types was included in Amazon's free tier at the time of writing. (For details on Amazon's current inclusions in the free tier, see https://aws.amazon.com/free.)

For all the examples provided in this book, this instance is more than adequate. But what should you be using in your workplace if your problem is more complex and/or your data set is much larger than the ones we have presented? There are no hard and fast rules, but here are a few guidelines:

- See the SageMaker examples on the Amazon web site for the algorithm you are using. Start with the Amazon example as your default.

- Make sure you calculate how much your chosen instance type is actually costing you for training and inference (https://aws.amazon.com/sagemaker/pricing).

- If you have a problem with training or inference cost or time, don't be afraid to experiment with different instance sizes.

- Be aware that quite often a very large and expensive instance can actually cost less to train a model than a smaller one, as well as run in much less time.

- XGBoost runs in parallel when training on a compute instance but does not benefit at all from a GPU instance, so don't waste time on a GPU-based instance (p3 or accelerated computing) for training or inference. However, feel free to try an m5.24xlarge or m4.16xlarge in training. It might actually be cheaper!

- Neural-net-based models will benefit from GPU instances in training, but these usually should not be required for inference as these are exceedingly expensive.

- Your notebook instance is most likely to be memory constrained if you use it a lot, so consider an instance with more memory if this becomes a problem for you. Just be aware that you are paying for every hour the instance is running even if you are not using it!

Once you set up the estimator, you then need to set its parameters. SageMaker exposes several parameters for you. The only two that you need to change are the last two parameters shown in lines 7 and 8 of listing 6.24: context_length and prediction_length.

The context length is the minimum period of time that will be used to make a prediction. By setting this to 90, you are saying that you want DeepAR to use 90 days of data as a minimum to make its predictions. In business settings, this is typically a good value as it allows for the capture of quarterly trends. The prediction length is the period of time you are predicting. For this notebook, you are predicting November data, so you use the prediction_length of 30 days.

Listing 6.24. Inserting parameters for the estimator

estimator.set_hyperparameters( #1
    time_freq=freq, #2
    epochs="400", #3
    early_stopping_patience="40", #4
    mini_batch_size="64", #5
    learning_rate="5E-4", #6
    context_length="90", #7
    prediction_length=str(prediction_length) #8
)

Now you train the model. This can take 5 to 10 minutes.

Listing 6.25. Training the model

%%time
data_channels = { #1
    "train": "{}/train/".format(s3_data_path), #2
    "test": "{}/test/".format(s3_data_path) #3
}
estimator.fit(inputs=data_channels, wait=True) #4

After this code runs, the model is trained, so now you can host it on SageMaker so it is ready to make decisions.

Hosting the model involves several steps. First, in listing 6.26, you delete any existing endpoints you have so you don't end up paying for a bunch of endpoints you aren't using.

Listing 6.26. Deleting existing endpoints

endpoint_name = 'energy-usage'
try:
    sess.delete_endpoint(
        sagemaker.predictor.RealTimePredictor(
            endpoint=endpoint_name).endpoint,
        delete_endpoint_config=True)
    print(
        'Warning: Existing endpoint deleted to make way for new endpoint.')
    from time import sleep
    sleep(10)
except:
    # No existing endpoint with this name, so there is nothing to delete
    pass

Next is a code cell that you don't really need to know anything about. This is a helper class prepared by Amazon to allow you to review the results of the DeepAR model as a pandas DataFrame rather than as JSON objects. It is a good bet that in the future they will make this code part of the M library. For now, just click into the cell and run it.

You are now at the stage where you set up your endpoint to make predictions (listing 6.27). You will use an m5.large machine as it represents a good balance of power and price. As of March 2019, AWS charges 13.4 US cents per hour for the machine. So if you keep the endpoint up for a day, the total cost will be US$3.22.

Listing 6.27. Setting up the predictor class

%%time
predictor = estimator.deploy( #1
    initial_instance_count=1, #2
    instance_type='ml.m5.large', #3
    predictor_cls=DeepARPredictor, #4
    endpoint_name=endpoint_name) #5

You are ready to start making predictions.

In the remainder of the notebook, you will do three things:

- Run a prediction for a month that shows the 50th percentile (most likely) prediction and also displays the range of prediction between two other percentiles. For example, if you want to show an 80% confidence range, the prediction will also show you the lower and upper range that falls within an 80% confidence level.

- Graph the results so that you can easily describe the results.

- Run a prediction across all the results for the data in November 2018. This data was not used to train the DeepAR model, so it will demonstrate how accurate the model is.

To predict power consumption for a single site, you just pass the site details to the predictor function. In the following listing, you are running the predictor against data from site 1.

Listing 6.28. Setting up the predictor class

predictor.predict(
    ts=daily_power_consumption_per_site[0][
        start_date+30:end_training],
    quantiles=[0.1, 0.5, 0.9]).head() #1

Table 6.6 shows the result of running the prediction against site 1. The first column shows the day, and the second column shows the prediction from the 10th percentile of results. The third column shows the 50th percentile prediction, and the last column shows the 90th percentile prediction.

Table 6.6. Predicting power usage data for site 1 of Kiara’s companies

| Day | 0.1 | 0.5 | 0.9 |
|---|---|---|---|
| 2018-11-01 | 1158.509766 | 1226.118042 | 1292.315430 |
| 2018-11-02 | 1154.938232 | 1225.540405 | 1280.479126 |
| 2018-11-03 | 1119.561646 | 1186.360962 | 1278.330200 |

Now that you have a way to generate predictions, you can chart the predictions.

The code in listing 6.29 shows a function that allows you to set up charting. It is similar to the code you worked with in the practice notebook but has some additional complexities that allow you to display graphically the range of results.

Listing 6.29. Setting up a function that allows charting

def plot( #1
    predictor,
    target_ts,
    end_training=end_training,
    plot_weeks=12,
    confidence=80
):
    print(f"Calling served model to generate predictions starting from "
          f"{end_training} to {end_training+prediction_length}")
    low_quantile = 0.5 - confidence * 0.005 #2
    up_quantile = confidence * 0.005 + 0.5 #3
    plot_history = plot_weeks * 7 #4
    fig = plt.figure(figsize=(20, 3)) #5
    ax = plt.subplot(1,1,1) #6
    prediction = predictor.predict(
        ts=target_ts[:end_training],
        quantiles=[
            low_quantile, 0.5, up_quantile]) #7
    target_section = target_ts[
        end_training-plot_history:
        end_training+prediction_length] #8
    target_section.plot(
        color="black",
        label='target') #9
    ax.fill_between( #10
        prediction[str(low_quantile)].index,
        prediction[str(low_quantile)].values,
        prediction[str(up_quantile)].values,
        color="b",
        alpha=0.3,
        label=f'{confidence}% confidence interval'
    )
    prediction["0.5"].plot(
        color="b",
        label='P50') #11
    ax.legend(loc=2) #12
    ax.set_ylim(
        target_section.min() * 0.5,
        target_section.max() * 1.5) #13
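The arithmetic that turns a confidence level into a pair of quantiles is worth checking by hand. The sketch below is an illustration only: `quantile_bounds` is a hypothetical helper, not part of the notebook. An 80% band centered on the median leaves 10% in each tail, so it runs from the 10th to the 90th percentile.

```python
def quantile_bounds(confidence):
    """Return the lower and upper quantiles of a band centered on the median.

    confidence is a percentage, for example 80 for an 80% band.
    """
    half_width = confidence / 200  # half the band, as a fraction
    return 0.5 - half_width, 0.5 + half_width

low_quantile, up_quantile = quantile_bounds(80)

# Floating-point subtraction can leave a tiny rounding error, so round
# before using a quantile as a text label.
print(round(low_quantile, 2), round(up_quantile, 2))
print(repr(low_quantile))
```

Because of floating-point rounding, the lower bound may not be stored as exactly 0.1. That is harmless as long as the same unrounded value is used both to request the quantile and to look it up afterward, as the plotting function does with `str(low_quantile)`.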

Line 1 of the code creates a function called plot that lets you create a chart of the data for each site. The plot function takes three arguments:

- predictor: The predictor that you ran in listing 6.28, which generates predictions for the site

- plot_weeks: The number of weeks you want to display in your chart

- confidence: The confidence level for the range that is displayed in the chart

In lines 2 and 3 in listing 6.29, you calculate the confidence range you want to display from the confidence value you entered as an argument in line 1. Line 4 calculates the number of days based on the plot_weeks argument. Lines 5 and 6 set the size of the plot and the subplot. (You are only displaying a single plot.) Line 7 runs the prediction function on the site. Lines 8 and 9 set the date range for the chart and the color of the actual line. In line 10, you set the prediction range that will display in the chart, and in line 11 you define the prediction line. Finally, lines 12 and 13 set the legend and the scale of the chart.

Note

In this function to set up charting, we use global variables that were set earlier in the notebook. This is not ideal but keeps the function a little simpler for the purposes of this book.

Listing 6.30 runs the function. The chart shows the actual and prediction data for a single site. This listing uses site 34 as the charted site, shows a period of 8 weeks before the 30-day prediction period, and defines a confidence level of 80%.

Listing 6.30. Running the function that creates the chart

site_id = 34 #1
plot_weeks = 8 #2
confidence = 80 #3
plot( #4
    predictor,
    target_ts=daily_power_consumption_per_site[
        site_id][start_date+30:],
    plot_weeks=plot_weeks,
    confidence=confidence
)

Figure 6.8 shows the chart you have produced. You can use this chart to show the predicted usage patterns for each of Kiara’s sites.

Figure 6.8. Chart showing predicted versus actual power consumption for November 2018, for one of Kiara’s sites

Now that you can see one of the sites, it’s time to calculate the error across all the sites. To express this, you use a Mean Absolute Percentage Error (MAPE). This function takes the difference between the actual value and the predicted value and divides it by the actual value. For example, if an actual value is 50 and the predicted value is 45, you’d subtract 45 from 50 to get 5, and then divide by 50 to get 0.1. Typically, this is expressed as a percentage, so you multiply that number by 100 to get 10%. So the MAPE of an actual value of 50 and a predicted value of 45 is 10%.

The first step in calculating the MAPE is to run the predictor across all the data for November 2018 and get the actual values (usages) for that month. Listing 6.31 shows how to do this.

In line 4, you see a function that we haven’t used yet in the book: the zip function. This is a very useful piece of code that allows you to loop through two lists concurrently and do interesting things with the paired items from each list. In this listing, the interesting thing you’ll do is to print the actual value compared to the prediction.

Listing 6.31. Running the predictor

predictions = []
for i, ts in enumerate(
        daily_power_consumption_per_site): #1
    print(i, ts[0])
    predictions.append(
        predictor.predict(
            ts=ts[start_date+30:end_training]
        )['0.5'].sum()) #2
usages = [ts[end_training+1:end_training+30].sum()
          for ts in daily_power_consumption_per_site] #3
for p, u in zip(predictions, usages): #4
    print(f'Predicted {p} kWh but usage was {u} kWh.')

The next listing shows the code that calculates the MAPE. Once the function is defined, you will use it to calculate the MAPE.

Listing 6.32. Calculating the Mean Absolute Percentage Error (MAPE)

def mape(y_true, y_pred):
    y_true, y_pred = np.array(y_true), np.array(y_pred)
    return np.mean(np.abs((y_true - y_pred) / y_true)) * 100
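The function can be exercised on small numbers before it is applied to the real predictions. In the sketch below, the function body is the one from the listing; `sample_usages` and `sample_predictions` are made-up values, not results from the model. The first call reproduces the 50-versus-45 example, and the loop uses `zip` to pair each prediction with its actual value.

```python
import numpy as np

def mape(y_true, y_pred):
    """Mean Absolute Percentage Error, expressed as a percentage."""
    y_true, y_pred = np.array(y_true), np.array(y_pred)
    return np.mean(np.abs((y_true - y_pred) / y_true)) * 100

# The worked example: actual 50, predicted 45.
single = mape([50], [45])
print(f"MAPE: {round(single, 1)}%")

# Made-up monthly totals for three sites.
sample_usages = [50.0, 200.0, 80.0]
sample_predictions = [45.0, 210.0, 80.0]

# Per-site percentage errors, pairing predictions and actuals with zip.
per_site = [abs(u - p) / u * 100
            for p, u in zip(sample_predictions, sample_usages)]

# The overall MAPE is the mean of the per-site percentage errors.
overall = mape(sample_usages, sample_predictions)
print(f"Per-site errors: {[round(e, 1) for e in per_site]}")
print(f"MAPE: {round(overall, 1)}%")
```

Note that the division by the actual value means MAPE is undefined when an actual value is 0, so it suits totals such as monthly consumption better than series that can legitimately drop to zero.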

The following listing runs the MAPE function across all the usages and predictions for the 30 days in November 2018 and returns the mean MAPE across all the days.

The MAPE across all the days is 5.7%, which is pretty good, given that you have not yet added weather data. You’ll do that in chapter 7. Also in chapter 7, you’ll get to work with a longer period of data so the DeepAR algorithm can begin to detect annual trends.

It is important that you shut down your notebook instance and delete your endpoint. We don’t want you to get charged for SageMaker services that you’re not using.

Appendix D describes how to shut down your notebook instance and delete your endpoint using the SageMaker console, or you can do that with the following code.

Listing 6.34. Deleting the notebook

# Remove the endpoint (optional)
# Comment out this cell if you want the endpoint to persist after Run All
# sagemaker.Session().delete_endpoint(predictor.endpoint)

To delete the endpoint, uncomment the code in the listing, then run the code in the cell.

To shut down the notebook, go back to your browser tab where you have SageMaker open. Click the Notebook Instances menu item to view all of your notebook instances. Select the radio button next to the notebook instance name as shown in figure 6.9, then select Stop from the Actions menu. It takes a couple of minutes to shut down.

If you didn’t delete the endpoint using the notebook (or if you just want to make sure it is deleted), you can do this from the SageMaker console. To delete the endpoint, click the radio button to the left of the endpoint name, then click the Actions menu item and click Delete in the menu that appears.

When you have successfully deleted the endpoint, you will no longer incur AWS charges for it. You can confirm that all of your endpoints have been deleted when you see the text “There are currently no resources” displayed on the Endpoints page (figure 6.10).

Kiara can now predict power consumption for each site with a 5.7% MAPE and, as importantly, she can take her boss through the charts to show what she is predicting to occur in each site.

- Time-series data consists of a number of observations at particular intervals. You can visualize time-series data as line charts.

- Jupyter notebooks and the pandas library are excellent tools for transforming time-series data and for creating line charts of the data.

- Matplotlib is a Python charting library.

- Instructions to Jupyter begin with a % symbol. When you see a command in a Jupyter notebook that starts with % or with %%, it’s known as a magic command.

- A for loop is the most common type of loop you’ll use in data analysis and machine learning. The enumerate function lets you keep track of how many times you have looped through a list.

- A neural network (sometimes referred to as deep learning) is an example of supervised machine learning.

- You can build a neural network using SageMaker’s DeepAR model.

- DeepAR is Amazon’s time-series neural network algorithm that takes as its input related types of time-series data and automatically combines the data into a global model of all time series in a dataset to predict future events.

- You can use DeepAR to predict power consumption.