This chapter covers

- Understanding density-based clustering

- Using the DBSCAN and OPTICS algorithms

Our penultimate stop in unsupervised learning techniques brings us to density-based clustering. Density-based clustering algorithms aim to achieve the same thing as k-means and hierarchical clustering: partitioning a dataset into a finite set of clusters that reveals a grouping structure in our data.

In the last two chapters, we saw how k-means and hierarchical clustering identify clusters using distance: the distance between cases, and the distance between cases and their centroids. Density-based clustering comprises a set of algorithms that, as the name suggests, use the density of cases to assign cluster membership. There are multiple ways of measuring density, but we can define it as the number of cases per unit volume of our feature space. Areas of the feature space containing many cases packed closely together can be said to have high density, whereas areas that contain few or no cases can be said to have low density. Our intuition here is that distinct clusters in a dataset will be represented by regions of high density, separated by regions of low density. Density-based clustering algorithms attempt to learn these distinct regions of high density and partition them into clusters. They also have several nice properties that circumvent some of the limitations of k-means and hierarchical clustering.

By the end of this chapter, I hope you'll have a firm understanding of how two of the most commonly used density-based clustering algorithms work: DBSCAN and OPTICS. We'll also apply some of the skills you learned in the previous chapters to help us evaluate and compare the performance of different cluster models.

In this section, I'll show you how two of the most commonly used density-based clustering algorithms work:

- Density-based spatial clustering of applications with noise (DBSCAN)

- Ordering points to identify the clustering structure (OPTICS)

Aside from having names that seem to have been contrived to form interesting acronyms, DBSCAN and OPTICS both learn regions of high density, separated by regions of low density, in a dataset. They achieve this in similar but slightly different ways, and both have a few advantages over k-means and hierarchical clustering:

- They are not biased toward finding spherical clusters and can in fact find clusters of varying and complex shapes.

- They are not biased toward finding clusters of equal diameter and can identify both very wide and very tight clusters in the same dataset.

- They are seemingly unique among clustering algorithms in that cases that do not fall within regions of high enough density are put into a separate "noise" cluster. This is often a desirable property, because it helps to prevent overfitting the data and allows us to focus on cases for which the evidence of cluster membership is stronger.

Tip

If the separation of cases into a noise cluster isn't desirable for your application (but using DBSCAN or OPTICS is), you can use a heuristic method such as classifying noise points based on their nearest cluster centroid, or adding them to the cluster of their k-nearest neighbors.
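The centroid heuristic from this tip can be sketched in a few lines of base R. The helper name `assign_noise_to_centroid()` is hypothetical (it is not part of dbscan or mlr); it assumes that cluster 0 marks noise, as in DBSCAN's output, and that distances are Euclidean.

```r
# Hypothetical helper (not from any package): move noise cases (cluster 0)
# into the cluster whose centroid is closest in Euclidean distance.
assign_noise_to_centroid <- function(x, cl) {
  x <- as.matrix(x)
  ids <- sort(unique(cl[cl != 0]))            # the real (non-noise) clusters
  # one row per cluster: the mean of each feature over that cluster's members
  centroids <- do.call(rbind, lapply(ids, function(k) {
    colMeans(x[cl == k, , drop = FALSE])
  }))
  for (i in which(cl == 0)) {
    # distance from noise case i to every centroid
    dists <- sqrt(colSums((t(centroids) - x[i, ])^2))
    cl[i] <- ids[which.min(dists)]
  }
  cl
}

x <- rbind(c(0, 0), c(0, 1), c(10, 10), c(10, 11), c(1, 1), c(9, 9))
cl <- c(1, 1, 2, 2, 0, 0)
assign_noise_to_centroid(x, cl)   # 1 1 2 2 1 2
```

The k-nearest-neighbor variant mentioned in the tip would replace the centroid distance with a majority vote among each noise case's nearest non-noise neighbors.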

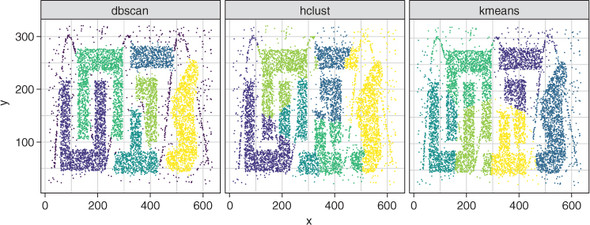

All three of these advantages can be seen in figure 18.1. The three subplots each show the same data, clustered using either DBSCAN, k-means (Hartigan-Wong algorithm), or hierarchical clustering (complete linkage). This dataset is certainly strange, and you might think you're unlikely to encounter real-world data like it, but it illustrates the advantages of density-based clustering over k-means and hierarchical clustering. The clusters in the data have very different shapes (which certainly aren't spherical) and diameters. While k-means and hierarchical clustering learn clusters that bisect and merge these real clusters, DBSCAN is able to faithfully find each shape as a distinct cluster. Additionally, notice that k-means and hierarchical clustering place every single case into a cluster. DBSCAN creates the cluster "0", into which it places any cases it considers to be noise. In this case, all cases outside of those geometrically shaped clusters are placed into the noise cluster. If you look carefully, though, you may notice a sine wave in the data that all three algorithms fail to identify as a cluster.

Figure 18.1. A challenging clustering problem. The dataset shown in each facet contains clusters of varying shapes and diameters, with cases that could be considered noise. The three subplots show the data clustered using DBSCAN, hierarchical clustering (complete linkage), and k-means (Hartigan-Wong). Of the three algorithms used, only DBSCAN is able to faithfully represent these shapes as distinct clusters.

So how do density-based clustering algorithms work? Well, the DBSCAN algorithm is a little easier to understand, so we'll start with it and build on it to understand OPTICS.

In this section, I'll show you how the DBSCAN algorithm learns regions of high density in the data to identify clusters. In order to understand the DBSCAN algorithm, you first need to understand its two hyperparameters:

- epsilon (ϵ)

- minPts

The algorithm starts by selecting a case in the data and searching for other cases within a search radius. This radius is the epsilon hyperparameter. So epsilon is simply how far away from each case (in an n-dimensional sphere) the algorithm will search for other cases. Epsilon is expressed in units of the feature space and is the Euclidean distance by default. Larger values mean the algorithm will search farther away from each case.

The minPts hyperparameter specifies the minimum number of points (cases) that a cluster must have in order for it to be a cluster. The minPts hyperparameter is therefore an integer. If a particular case has at least minPts cases inside its epsilon radius (including itself), that case is considered a core point.
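The core-point rule can be checked directly from a distance matrix. This base-R sketch (the function name `core_points()` is only illustrative) counts the cases within `eps` of each case, including the case itself, and compares the count with `minPts`.

```r
# Sketch: which cases are core points for a given eps and minPts?
# The count includes the case itself, because its distance to itself is 0.
core_points <- function(x, eps, minPts) {
  d <- unname(as.matrix(dist(x)))   # pairwise Euclidean distances
  n_within <- rowSums(d <= eps)     # number of cases inside each eps radius
  n_within >= minPts
}

x <- rbind(c(0, 0), c(0, 0.5), c(0.5, 0), c(5, 5))
core_points(x, eps = 1, minPts = 3)   # TRUE TRUE TRUE FALSE
```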

Let's walk through the DBSCAN algorithm together by taking a look at figure 18.2. The first step of the algorithm is to select a case at random from the dataset. The algorithm searches for other cases in an n-dimensional sphere (where n is the number of features in the dataset) with radius equal to epsilon. If this sphere contains at least minPts cases, the case is marked as a core point. If it does not contain minPts cases, the case is not a core point, and the algorithm moves on to another case.

Figure 18.2. The DBSCAN algorithm. A case is selected at random, and if its epsilon radius (ϵ) contains minPts cases, it is considered a core point. Reachable cases of this core point are evaluated the same way until there are no more reachable cases. This network of density-connected cases is considered a cluster. Cases that are reachable from core points but are not themselves core points are border points. The algorithm moves on to the next unvisited case. Cases that are neither core nor border points are labeled as noise.

Let's assume the algorithm picks a case and finds that it is a core point. The algorithm then visits each of the cases within epsilon of the core point and repeats the same task: it checks whether that case has minPts cases inside its own search radius. Two cases within each other's search radius are said to be directly density connected and reachable from each other. The search continues recursively, following all direct density connections from core points. If the algorithm finds a case that is reachable from a core point but does not itself have minPts reachable cases, this case is considered a border point. The algorithm only searches outward from core points, not from border points.

Two cases are said to be density connected if they are not necessarily directly density connected but are connected to each other via a chain, or series, of directly density-connected cases. Once the search has been exhausted, and none of the visited cases have any more direct density connections left to explore, all cases that are density connected to each other are placed into the same cluster (including border points).

The algorithm now selects a different case in the dataset (one that it hasn't visited before), and the same process begins again. Once every case in the dataset has been visited, any lonesome cases that were neither core points nor border points are added to the noise cluster; they are considered too far from regions of high density to be confidently clustered with the rest of the cases. So DBSCAN finds clusters by finding chains of cases in high-density regions of the feature space and throws out the cases occupying sparse regions of the feature space.
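The steps above can be sketched as a small base-R function. This is an illustration, not the dbscan package's implementation: it visits cases in row order rather than at random, stores the full distance matrix (so it only suits small data), and lets a border point shared by two clusters join whichever cluster reaches it first.

```r
# Minimal from-scratch DBSCAN sketch in base R (O(n^2) memory, for illustration).
# Returns one label per case; 0 marks noise, matching the convention in the text.
dbscan_sketch <- function(x, eps, minPts) {
  d <- unname(as.matrix(dist(x)))
  n <- nrow(d)
  # every case's eps-neighborhood (includes the case itself)
  neighbours <- lapply(seq_len(n), function(i) which(d[i, ] <= eps))
  is_core <- lengths(neighbours) >= minPts
  cluster <- integer(n)        # 0 = noise unless a cluster claims the case
  visited <- logical(n)
  current <- 0L
  for (i in seq_len(n)) {
    # new clusters are only started from unvisited core points
    if (visited[i] || !is_core[i]) next
    current <- current + 1L
    visited[i] <- TRUE
    queue <- i
    while (length(queue) > 0) {
      p <- queue[1]
      queue <- queue[-1]
      cluster[p] <- current
      # border points join the cluster, but we do not search outward from them
      if (!is_core[p]) next
      for (q in neighbours[[p]]) {
        if (!visited[q]) {
          visited[q] <- TRUE
          queue <- c(queue, q)
        }
      }
    }
  }
  cluster
}

grid <- as.matrix(expand.grid(c(0, 0.3, 0.6), c(0, 0.3, 0.6)))
x <- rbind(grid, grid + 5, c(2.5, 2.5))   # two dense blobs plus one isolated case
dbscan_sketch(x, eps = 0.5, minPts = 4)
# 1 1 1 1 1 1 1 1 1 2 2 2 2 2 2 2 2 2 0
```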

Note

Not searching outward from border points helps prevent noise events from being included in clusters.

That was quite a lot of new terminology I just introduced! Let's have a quick recap to make these terms stick in your mind, because they're also important for the OPTICS algorithm:

- Epsilon: the radius of an n-dimensional sphere around a case, within which the algorithm searches for other cases

- minPts: the minimum number of cases allowed in a cluster, and the number of cases that must be within epsilon of a case for it to be a core point

- Core point: a case that has at least minPts reachable cases

- Reachable/directly density connected: when two cases are within epsilon of each other

- Density connected: when two cases are connected by a chain of directly density-connected cases but may not be directly density connected themselves

- Border point: a case that is reachable from a core point but is not itself a core point

- Noise point: a case that is neither a core point nor reachable from one
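The three point types in this recap can be computed directly from their definitions. In this base-R sketch, `point_type()` is an illustrative name, distances are Euclidean, and neighbor counts include the case itself, as in the core-point definition.

```r
# Sketch: label each case as "core", "border" or "noise" using the
# definitions above (Euclidean distance; counts include the case itself).
point_type <- function(x, eps, minPts) {
  within <- unname(as.matrix(dist(x))) <= eps   # TRUE where two cases are reachable
  is_core <- rowSums(within) >= minPts
  # a border point is not core but lies within eps of at least one core point
  near_core <- rowSums(within[, is_core, drop = FALSE]) > 0
  ifelse(is_core, "core", ifelse(near_core, "border", "noise"))
}

x <- matrix(c(0, 0.4, 0.8, 1.6, 5))   # five cases on a line
point_type(x, eps = 0.5, minPts = 3)
# "border" "core" "border" "noise" "noise"
```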

In this section, I'll show you how the OPTICS algorithm learns regions of high density in a dataset, how it's similar to DBSCAN, and how it differs. Technically speaking, OPTICS isn't actually a clustering algorithm. Instead, it creates an ordering of the cases in the data in such a way that we can extract clusters from it. That sounds a little abstract, so let's work through how OPTICS works.

The DBSCAN algorithm has one important drawback: it struggles to identify clusters that have different densities from each other. The OPTICS algorithm is an attempt to alleviate that drawback and identify clusters with varying densities. It does this by allowing the search radius around each case to expand dynamically instead of being fixed at a predetermined value.

To understand how OPTICS does this, you need two new terms:

- Core distance

- Reachability distance

In OPTICS, the search radius around a case isn't fixed but expands until there are at least minPts cases within it. This means cases in dense regions of the feature space will have a small search radius, and cases in sparse regions will have a large search radius. The smallest distance from a case that includes minPts cases (including the case itself) is called the core distance, sometimes abbreviated to ϵ′. In fact, the OPTICS algorithm has only one mandatory hyperparameter: minPts.
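The core distance is the minPts-th smallest entry in each row of the distance matrix, where the zero self-distance counts as the first entry. That convention matches the "including the case in question" wording used for figure 18.3. In this base-R sketch, `core_distance()` is an illustrative name, and other implementations may count the case itself differently.

```r
# Sketch: core distance = radius of the smallest sphere around a case that
# contains minPts cases, counting the case itself (so element 1 of each
# sorted row, the zero self-distance, counts toward minPts).
core_distance <- function(x, minPts) {
  d <- unname(as.matrix(dist(x)))
  apply(d, 1, function(row) sort(row)[minPts])
}

x <- matrix(c(0, 1, 3, 10))       # three fairly close cases and one isolated case
core_distance(x, minPts = 2)      # 1 1 2 7
```

The isolated case at 10 gets a large core distance, which is exactly the "sparse regions get a large search radius" behavior described above.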

Note

We can still supply epsilon, but it is mostly used to speed up the algorithm by acting as a maximum core distance. In other words, if the core distance reaches epsilon, we just take epsilon as the core distance, which prevents every case in the dataset from being considered.

The reachability distance is the distance between a core point and another case within its epsilon, but it cannot be less than the core distance. Put another way, if a case lies inside a core point's core distance, the reachability distance between them is the core distance. If the case lies outside the core distance (but still within epsilon), the reachability distance is simply the Euclidean distance between them.
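Written as a formula, using the standard notation from the OPTICS literature (added here for reference), with $o$ a core point, $\epsilon'_o$ its core distance, $p$ another case, and $d(o, p)$ their Euclidean distance:

$$
\text{reach-dist}(p, o) = \max\bigl(\epsilon'_o,\; d(o, p)\bigr), \qquad \text{defined only when } o \text{ is a core point and } d(o, p) \le \epsilon
$$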

Note

In OPTICS, a case is a core point if there are minPts cases inside its epsilon. If we don't specify epsilon, then all cases will be core points. The reachability distance between a case and a non-core point is undefined.

Take a look at the example in figure 18.3. You can see two circles centered on the darkly shaded case. The circle with the larger radius is epsilon, and the one with the smaller radius is the core distance (ϵ′). This example shows the core distance for a minPts value of 4, because the core distance has expanded to include four cases (including the case in question). The arrows indicate the reachability distance between the core point and the other cases within its epsilon.

Figure 18.3. Defining the core distance and reachability distance. In OPTICS, epsilon (ϵ) is the maximum search distance. The core distance (ϵ′) is the minimum search distance needed to include minPts cases (including the case in question). The reachability distance for a case is the larger of the core distance and the distance between the case in question and another case inside its epsilon (maximum search distance).

Because the reachability distance is only defined from a core point to another case within its epsilon, OPTICS needs to know which cases are core points. So the algorithm starts by visiting every case in the data and determining whether its core distance is within epsilon. If a case's core distance is less than or equal to epsilon, the case is a core point. If a case's core distance is greater than epsilon (we need to expand out further than epsilon to find minPts cases), the case is not a core point. Examples of both are shown in figure 18.4.

Figure 18.4. Defining core points in OPTICS. Cases for which the core distance (ϵ′) is less than or equal to the maximum search distance (ϵ) are considered core points. Cases for which the core distance is greater than the maximum search distance are not considered core points.
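Putting the core-point test and the reachability distance together, this base-R sketch builds a matrix `r` where `r[o, p]` is the reachability distance of case `p` from case `o`. The function name is illustrative. Entries are `NA` wherever the definition says the value is undefined: `o` is not a core point, or `p` lies outside `o`'s epsilon.

```r
# Sketch: matrix of reachability distances r[o, p] = max(core distance of o, d(o, p)).
# Rows for non-core points (core distance > eps) and pairs farther apart
# than eps are NA, because the reachability distance is undefined there.
reachability_matrix <- function(x, minPts, eps = Inf) {
  d <- unname(as.matrix(dist(x)))
  n <- nrow(d)
  core <- apply(d, 1, function(row) sort(row)[minPts])
  core[core > eps] <- NA                    # not a core point
  r <- pmax(matrix(core, n, n), d)          # row o holds the core distance of o
  r[d > eps] <- NA                          # only cases inside o's epsilon
  r
}

x <- matrix(c(0, 1, 3, 10))
r <- reachability_matrix(x, minPts = 2, eps = 5)
r[1, 3]   # 3: max(core distance 1, distance 3)
r[3, 2]   # 2: max(core distance 2, distance 2)
r[4, 3]   # NA: case 4 has core distance 7 > eps, so it is not a core point
```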

Now that you understand the concepts of core distance and reachability distance, let's see how the OPTICS algorithm works. The first step is to visit each case in the data and mark it as a core point or not. The rest of the algorithm is illustrated in figure 18.5, so let's assume this has already been done. OPTICS selects a case and calculates its core distance and its reachability distance to all cases inside its epsilon (the maximum search distance).

The algorithm does two things before moving on to the next case:

- Records the reachability score of the case

- Updates the processing order of cases

The reachability score of a case is different from a reachability distance (the terminology is unfortunately confusing). A case's reachability score is defined as the larger of its core distance and its smallest reachability distance. Let's rephrase: if a case doesn't have minPts cases inside epsilon (it isn't a core point), then its reachability score will be the reachability distance to its closest core point. If a case does have minPts cases inside epsilon, then its smallest reachability distance will be less than or equal to its core distance, so we just take the core distance as the case's reachability score.

Figure 18.5. The OPTICS algorithm. A case is selected, and its core distance (ϵ′) is measured. The reachability distance is calculated between this case and all the cases inside this case’s maximum search distance (ϵ). The processing order of the dataset is updated such that the nearest case is visited next. The reachability score and the processing order are recorded for this case, and the algorithm moves on to the next one.

Note

Therefore, the reachability score of a case will never be less than its core distance, unless the core distance is greater than the maximum, epsilon, in which case epsilon will be the reachability score.

Once the reachability score has been recorded for a particular case, the algorithm updates the sequence of cases it's going to visit next (the processing order). It updates the processing order such that it will next visit the core point with the smallest reachability distance to the current case, then the one that is next-farthest away, and so on. This is illustrated in step 2 of figure 18.5.

The algorithm then visits the next case in the updated processing order and repeats the same process, likely changing the processing order once again. When there are no more reachable cases in the current chain, the algorithm moves on to the next unvisited core point in the dataset and repeats the process.

Once all cases have been visited, the algorithm returns both the processing order (the order in which each case was visited) and the reachability score of each case. If we plot processing order against reachability score, we get something like the top plot in figure 18.6. To generate this plot, I applied the OPTICS algorithm to a simulated dataset with four clusters (you can find the code to reproduce this at www.manning.com/books/machine-learning-with-r-the-tidyverse-and-mlr). Notice that when we plot the processing order against the reachability score, we get four shallow troughs, each separated by spikes of high reachability. Each trough in the plot corresponds to a region of high density, while each spike indicates that these regions are separated by a region of low density.

Figure 18.6. Reachability plot of a simulated dataset. The top plot shows the reachability plot learned by the OPTICS algorithm from the data shown in the bottom plot. The plots are shaded to indicate where each cluster in the feature space maps onto the reachability plot.

Note

The deeper the trough, the higher the density.
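The ordering step can be sketched in base R. This sketch follows the standard formulation of OPTICS, in which the reachability recorded for a case is the smallest reachability distance from any core point processed before it. That differs slightly from the informal "reachability score" described above. The name `optics_sketch()` is illustrative. The first case of each chain keeps an infinite (undefined) reachability, which shows up as a spike.

```r
# Minimal OPTICS sketch in base R (standard formulation, O(n^2) memory).
# Returns the processing order plus each case's core and reachability
# distances; Inf marks "undefined" (non-core point / first case of a chain).
optics_sketch <- function(x, minPts, eps = Inf) {
  d <- unname(as.matrix(dist(x)))
  n <- nrow(d)
  core <- apply(d, 1, function(row) sort(row)[minPts])
  core[core > eps] <- Inf
  reach <- rep(Inf, n)
  processed <- logical(n)
  ord <- integer(0)

  # lower the reachability of unprocessed cases inside eps of case o
  update <- function(o) {
    if (is.infinite(core[o])) return(invisible(NULL))   # not a core point
    nb <- which(!processed & d[o, ] <= eps)
    reach[nb] <<- pmin(reach[nb], pmax(core[o], d[o, nb]))
  }

  for (p in seq_len(n)) {
    if (processed[p]) next
    current <- p
    repeat {
      processed[current] <- TRUE
      ord <- c(ord, current)
      update(current)
      # seeds: unprocessed cases that have become reachable in this chain
      seeds <- which(!processed & is.finite(reach))
      if (length(seeds) == 0) break
      current <- seeds[which.min(reach[seeds])]   # visit the closest next
    }
  }
  list(order = ord, reachdist = reach, coredist = core)
}

x <- matrix(c(0, 0.1, 0.2, 5, 5.1, 5.2))   # two tight groups far apart
res <- optics_sketch(x, minPts = 2)
res$order                    # 1 2 3 4 5 6
res$reachdist[res$order]     # Inf, then about 0.1, 0.1, 4.8 (the spike), 0.1, 0.1
```

Plotting `res$reachdist[res$order]` against processing order gives a small reachability plot with two troughs separated by one spike.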

The OPTICS algorithm actually goes no further than this. Once it produces this plot, its work is done, and now it's our job to use the information contained in the plot to extract the cluster memberships. This is why I said that OPTICS isn't technically a clustering algorithm but creates an ordering of the data that allows us to find clusters in the data.

So how do we extract clusters? We have a couple of options. One method is to simply draw a horizontal line across the reachability plot, at some reachability score, and define the start and end of clusters as the points where the plot dips below and rises above the line. Any cases above the line can be classified as noise, as shown in the top plot of figure 18.7. This approach results in clustering very similar to what the DBSCAN algorithm would produce, except that some border points are more likely to be put into the noise cluster.

Figure 18.7. An illustration of different ways clusters can be extracted from a reachability plot. In the top plot, a single reachability score cut-off has been defined, and any troughs bordered by peaks above this cut-off are defined as clusters. In the bottom plot, a hierarchy of clusters is defined, based on the steepness of changes in reachability, allowing for clusters within clusters.
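The horizontal-cut method can be sketched as a walk along the reachability plot. One assumption here goes beyond the text: it borrows the usual DBSCAN-style extraction rule. The first case of each trough has a high reachability score because it starts a new chain, so its own core distance decides whether it opens a new cluster or becomes noise. The function name is illustrative.

```r
# Sketch of the horizontal-cut extraction: walk the reachability plot in
# processing order and start a new cluster each time the plot comes back
# down from above the threshold. Cases above the line whose own core
# distance is also above it become noise (label 0).
cut_reachability <- function(reach_ordered, core_ordered, threshold) {
  cluster <- integer(length(reach_ordered))
  current <- 0L
  for (i in seq_along(reach_ordered)) {
    if (reach_ordered[i] > threshold) {
      if (core_ordered[i] <= threshold) {
        current <- current + 1L           # first case of a new trough
        cluster[i] <- current
      } else {
        cluster[i] <- 0L                  # noise
      }
    } else {
      cluster[i] <- current               # still inside the current trough
    }
  }
  cluster
}

reach <- c(Inf, 0.1, 0.1, 4.8, 0.1, 0.1, 9)   # reachability in processing order
core  <- c(0.1, 0.1, 0.1, 0.1, 0.1, 0.1, 3)   # core distances in the same order
cut_reachability(reach, core, threshold = 1)  # 1 1 1 2 2 2 0
```

Raising the threshold to 5 merges the first two troughs, and the last case then starts its own cluster instead of being noise. This shows how a single cut-off controls the result.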

Another (usually more useful) method is to define a particular steepness in the reachability plot as indicative of the start and end of a cluster. We can define the start of a cluster as the point where we have a downward slope of at least this steepness, and its end as the point where we have an upward slope of at least this steepness. The method we'll use later defines the steepness as 1 – ξ (xi, pronounced "zy," "sigh," or "ksee," depending on your preference and your mathematics teacher), where the reachability of two successive cases must change by a factor of 1 – ξ. When we have a downward slope that meets this steepness criterion, the start of a cluster is defined; and when we have an upward slope that meets it, the end of the cluster is defined.

Note

Because ξ cannot be estimated from the data, it is a hyperparameter we must select or tune ourselves.

Using this method has two major benefits. First, it allows us to overcome DBSCAN's limitation of only finding clusters of equal density. Second, it allows us to find clusters within clusters, to form a hierarchy. Imagine that we have a downward slope that starts a cluster, and then another downward slope before the cluster ends: we have a cluster within a cluster. This hierarchical extraction of clusters from a reachability plot is shown in the bottom plot in figure 18.7.
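The steepness criterion itself is a one-line comparison between each case and the next one in processing order. This base-R sketch flags ξ-steep downward and upward points only. The full ξ extraction also merges runs of steep points into steep areas and applies further conditions, which this sketch omits. Padding the end of the plot with `Inf` (treating it as an infinitely high spike) is an assumption of the sketch.

```r
# Sketch of the xi steepness test only (not the full xi cluster extraction).
# Case i is steep downward if the next case's reachability is at least a
# factor (1 - xi) lower, and steep upward if the next case is higher by the
# same factor. The end of the plot is treated as an infinitely high spike.
steep_points <- function(reach_ordered, xi) {
  r <- reach_ordered
  nxt <- c(r[-1], Inf)                 # reachability of the following case
  data.frame(down = r * (1 - xi) >= nxt,
             up   = r <= nxt * (1 - xi))
}

steep_points(c(5, 1, 1.05, 1, 6), xi = 0.1)
# down: TRUE FALSE FALSE FALSE FALSE  (a cluster starts after case 1)
# up:   FALSE FALSE FALSE TRUE TRUE   (it ends where case 4 rises to case 5)
```

The 5% rise from 1 to 1.05 is not flagged because it is smaller than the 10% change that ξ = 0.1 requires.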

Note

Notice that neither of these methods works with the original data. They extract all the information needed to assign cluster memberships from the order and reachability scores generated by the OPTICS algorithm.

In this section, I'm going to show you how to use the DBSCAN algorithm to cluster a dataset. We'll then use some of the techniques you learned in chapter 17 to validate its performance and select the best-performing hyperparameter combination.

Note

The mlr package does have a learner for the DBSCAN algorithm (cluster.dbscan), but we're not going to use it. There's nothing wrong with it; but as you'll see later, the presence of the noise cluster causes problems for our internal cluster metrics, so we're going to do our own performance validation outside of mlr.

Let's start by loading the tidyverse and loading in the data, which is part of the mclust package. We're going to work with the Swiss banknote dataset, to which we applied PCA, t-SNE, and UMAP in chapters 13 and 14. Because we're going to imagine that we have no ground truth, we remove the Status variable, which indicates which banknotes are genuine and which are counterfeit. Once we've loaded the data, we convert it into a tibble and create a scaled copy of it with scale(), because DBSCAN and OPTICS are sensitive to variable scales (note that scale() returns a matrix rather than a tibble). Recall that the tibble contains 200 Swiss banknotes, with 6 measurements of their dimensions.

Listing 18.1. Loading the tidyverse packages and dataset

```r
library(tidyverse)

data(banknote, package = "mclust")

# Drop the ground-truth Status column and convert to a tibble
swissTib <- select(banknote, -Status) %>%
  as_tibble()

swissTib
#> # A tibble: 200 x 6
#>    Length  Left Right Bottom   Top Diagonal
#>     <dbl> <dbl> <dbl>  <dbl> <dbl>    <dbl>
#>  1   215.  131   131.    9     9.7     141
#>  2   215.  130.  130.    8.1   9.5     142.
#>  3   215.  130.  130.    8.7   9.6     142.
#>  4   215.  130.  130.    7.5  10.4     142
#>  5   215   130.  130.   10.4   7.7     142.
#>  6   216.  131.  130.    9    10.1     141.
#>  7   216.  130.  130.    7.9   9.6     142.
#>  8   214.  130.  129.    7.2  10.7     142.
#>  9   215.  129.  130.    8.2  11       142.
#> 10   215.  130.  130.    9.2  10       141.
#> # ... with 190 more rows

# Center and scale each variable (scale() returns a matrix)
swissScaled <- swissTib %>% scale()
```

Listing 18.2. Plotting the data

```r
library(GGally)

ggpairs(swissTib, upper = list(continuous = "density")) +
  theme_bw()
```

The resulting plot is shown in figure 18.8. It looks as though there are at least two regions of high density in the data, with a few cases scattered in lower-density regions.

In this section, I'll show you how to select sensible ranges of epsilon and minPts for DBSCAN, and how we can tune them manually to find the best-performing combination. The right value for the epsilon hyperparameter is not obvious. How far away from each case should we search? Luckily, there is a heuristic method we can use to at least get in the right ballpark. It consists of calculating the distance from each point to its kth-nearest neighbor and then ordering the points in a plot based on this distance. In data with regions of high and low density, this tends to produce a plot containing a "knee" or "elbow" (depending on your preference). The optimal value of epsilon is in or near that knee/elbow. Because a core point in DBSCAN has minPts cases inside its epsilon, choosing a value of epsilon at the knee of this plot means choosing a search distance that will result in cases in high-density regions being considered core points. We can create this plot using the kNNdistplot() function from the dbscan package.

Figure 18.8. Plotting the Swiss banknote dataset with ggpairs(). 2D density plots are shown above the diagonal.

Listing 18.3. Plotting the kNN distance plot

```r
library(dbscan)

# Sorted 5-nearest-neighbor distances, with lines marking the knee
kNNdistplot(swissScaled, k = 5)
abline(h = c(1.2, 2.0))
```

We use the k argument to specify the number of nearest neighbors whose distances we want to calculate. But we don't yet know what our minPts argument should be, so how can we set k? I usually pick a sensible value that I believe is approximately correct (remember that minPts defines the minimum cluster size): here, I've selected 5. The position of the knee in the plot is relatively robust to changes in k.

The kNNdistplot() function calculates the distances between each case in the dataset (200 of them) and each of its 5 nearest neighbors: a matrix with 200 rows and 5 columns. Each of these 200 × 5 = 1,000 distances is drawn on the plot (depending on your version of dbscan, the plot may instead show only each case's distance to its kth nearest neighbor, 200 points in all).

We then use the abline() function to draw horizontal lines at the start and end of the knee, to help us identify the range of epsilon values we're going to tune over. The resulting plot is shown in figure 18.9. Notice that, reading the plot from left to right, after an initial sharp increase, the 5-nearest-neighbor distance increases only gradually, until it rapidly increases again. This region where the curve inflects upward is the knee/elbow, and the optimal value of epsilon is the nearest-neighbor distance within this inflection. Using this method, we select 1.2 and 2.0 as the lower and upper limits over which to tune epsilon.

Figure 18.9. K-nearest neighbor distance plot with k = 5. Horizontal lines have been drawn using abline() to highlight the 5-NN distances at the start and end of the knee/elbow in the plot.
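The values behind a kNN distance plot are easy to compute by hand, which makes the knee heuristic less of a black box. This base-R sketch (the name `knn_distance()` is illustrative) returns one value per case: the distance to its kth nearest other case, sorted in ascending order.

```r
# Sketch of the values behind a k-nearest-neighbor distance plot: each
# case's distance to its k-th nearest *other* case, sorted ascending.
knn_distance <- function(x, k) {
  d <- unname(as.matrix(dist(x)))
  # position 1 of every sorted row is the case itself (distance 0),
  # so the k-th nearest other case is at position k + 1
  sort(apply(d, 1, function(row) sort(row)[k + 1]))
}

x <- matrix(c(0, 1, 3, 10))
knn_distance(x, k = 1)        # 1 1 2 7
# plot(knn_distance(swissScaled, k = 5), type = "l")  # look for the knee
```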

Let's manually define our hyperparameter search space for epsilon and minPts. We use the expand.grid() function to create a data frame containing every combination of the values of epsilon (eps) and minPts we want to search over. We're going to search across values of epsilon between 1.2 and 2.0, in steps of 0.1, and across values of minPts between 1 and 9, in steps of 1.

Listing 18.4. Defining our hyperparameter search space

```r
# Every combination of 9 eps values and 9 minPts values (81 rows)
dbsParamSpace <- expand.grid(eps = seq(1.2, 2.0, 0.1),
                             minPts = seq(1, 9, 1))
```

Exercise 1

Print the dbsParamSpace object to give yourself a better intuition of what expand.grid() is doing.

Now that we've defined our hyperparameter search space, let's run the DBSCAN algorithm on each distinct combination of epsilon and minPts. To do this, we use the pmap() function from the purrr package to apply the dbscan() function to each row of the dbsParamSpace object.
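A tiny version of the same grid shows the pattern expand.grid() follows. The first argument varies fastest, and each row is one combination.

```r
# A tiny grid: expand.grid() varies the first argument fastest,
# giving one row per combination.
small <- expand.grid(eps = c(1.2, 1.3), minPts = 1:3)
small
#>   eps minPts
#> 1 1.2      1
#> 2 1.3      1
#> 3 1.2      2
#> 4 1.3      2
#> 5 1.2      3
#> 6 1.3      3

# The full search space from listing 18.4 has 9 x 9 = 81 rows
nrow(expand.grid(eps = seq(1.2, 2.0, 0.1), minPts = seq(1, 9, 1)))
```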

Listing 18.5. Running DBSCAN on each combination of hyperparameters

```r
# Run dbscan() once per row of dbsParamSpace; eps and minPts come from the
# columns, and the scaled data is passed as the x argument
swissDbs <- pmap(dbsParamSpace, dbscan, x = swissScaled)

swissDbs[[5]]
#> DBSCAN clustering for 200 objects.
#> Parameters: eps = 1.6, minPts = 1
#> The clustering contains 10 cluster(s) and 0 noise points.
#>
#>   1   2   3   4   5   6   7   8   9  10
#>   1 189   1   1   1   3   1   1   1   1
#>
#> Available fields: cluster, eps, minPts
```

We supply our scaled dataset as dbscan()'s x argument. The output from pmap() is a list where each element is the result of running DBSCAN on one particular combination of epsilon and minPts. To view the output for a particular permutation, we simply subset the list.

When we print the result of a dbscan() call, the output tells us the number of objects in the data, the values of epsilon and minPts, and the number of identified clusters and noise points. Perhaps the most important information is the number of cases within each cluster. In this example, we can see there are 189 cases in cluster 2, and just a single case in most of the other clusters. This is because this permutation was run with minPts equal to 1, which allows clusters to contain just a single case. This is rarely what we want, and it results in a clustering model where no cases are identified as noise.

Now that we have our clustering results, we should visually inspect the clusterings to see which (if any) of the permutations give a sensible result. To do this, we want to extract the vector of cluster memberships from each permutation as a column and then add these columns to our original data.

The first step is to extract the cluster memberships as separate columns in a tibble. To do this, we use the map_dfc() function. We've encountered the map_df() function before: it applies a function to each element of a vector and returns the output as a tibble, where each output forms a different row of the tibble. This is actually the same as using map_dfr(), where the r means row-binding. If, instead, we want each output to form a different column of the tibble, we use map_dfc().

Note

I’ve truncated the output here for the sake of space.

Listing 18.6. Cluster memberships from DBSCAN permutations

```r
# One column of cluster memberships per DBSCAN permutation
clusterResults <- map_dfc(swissDbs, ~.$cluster)

clusterResults
#> # A tibble: 200 x 81
#>       V1    V2    V3    V4    V5    V6    V7    V8    V9   V10   V11
#>    <int> <int> <int> <int> <int> <int> <int> <int> <int> <int> <int>
#>  1     1     1     1     1     1     1     1     1     1     0     0
#>  2     2     2     2     2     2     2     2     2     2     1     1
#>  3     2     2     2     2     2     2     2     2     2     1     1
#>  4     2     2     2     2     2     2     2     2     2     1     1
#>  5     3     3     3     3     3     3     3     3     2     0     0
#>  6     4     4     4     4     4     2     2     2     2     0     0
#>  7     5     2     2     2     2     2     2     2     2     0     1
#>  8     2     2     2     2     2     2     2     2     2     1     1
#>  9     2     2     2     2     2     2     2     2     2     1     1
#> 10     6     2     2     2     2     2     2     2     2     2     1
#> # ... with 190 more rows, and 70 more variables
```
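To make the row-binding versus column-binding distinction concrete without purrr, here is a base-R analogue. It is not the purrr implementation. Each list element mimics a dbscan() result that has a `$cluster` vector.

```r
# Base-R analogue (not purrr) of column- versus row-binding results.
# Each list element mimics a dbscan() result with a $cluster vector.
fits <- list(list(cluster = c(1L, 1L, 0L)),
             list(cluster = c(1L, 2L, 2L)))
memberships <- lapply(fits, function(fit) fit$cluster)
names(memberships) <- paste0("V", seq_along(memberships))

by_col <- as.data.frame(memberships)   # like map_dfc(): one column per fit
by_row <- do.call(rbind, memberships)  # like map_dfr(): one row per fit (a matrix here)
dim(by_col)   # 3 2
dim(by_row)   # 2 3
```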

Now that we have our tibble of cluster memberships, let's use the bind_cols() function to, well, bind the columns of our swissTib tibble and our tibble of cluster memberships. We call this new tibble swissClusters, which sounds like a breakfast cereal. Notice that we have our original variables, with additional columns containing the cluster membership output from each permutation.

Note

Again, I’ve truncated the output slightly to save space.

Listing 18.7. Binding cluster memberships to the original data

```r
# Original measurements followed by the 81 membership columns
swissClusters <- bind_cols(swissTib, clusterResults)

swissClusters
#> # A tibble: 200 x 87
#>    Length  Left Right Bottom   Top Diagonal    V1    V2    V3    V4
#>     <dbl> <dbl> <dbl>  <dbl> <dbl>    <dbl> <int> <int> <int> <int>
#>  1   215.  131   131.    9     9.7     141      1     1     1     1
#>  2   215.  130.  130.    8.1   9.5     142.     2     2     2     2
#>  3   215.  130.  130.    8.7   9.6     142.     2     2     2     2
#>  4   215.  130.  130.    7.5  10.4     142      2     2     2     2
#>  5   215   130.  130.   10.4   7.7     142.     3     3     3     3
#>  6   216.  131.  130.    9    10.1     141.     4     4     4     4
#>  7   216.  130.  130.    7.9   9.6     142.     5     2     2     2
#>  8   214.  130.  129.    7.2  10.7     142.     2     2     2     2
#>  9   215.  129.  130.    8.2  11       142.     2     2     2     2
#> 10   215.  130.  130.    9.2  10       141.     6     2     2     2
#> # ... with 190 more rows, and 77 more variables
```

In order to plot the results, we would like to facet by permutation so we can draw a separate subplot for each combination of our hyperparameters. To do this, we need to gather() the data to create a new column indicating the permutation number and another column indicating the cluster number.

Listing 18.8. Gathering the data, ready for plotting

```r
# Stack the 81 membership columns into a Permutation/Cluster pair of
# columns, keeping the six measurement columns alongside
swissClustersGathered <- gather(swissClusters,
                                key = "Permutation", value = "Cluster",
                                -Length, -Left, -Right,
                                -Bottom, -Top, -Diagonal)

swissClustersGathered
#> # A tibble: 16,200 x 8
#>    Length  Left Right Bottom   Top Diagonal Permutation Cluster
#>     <dbl> <dbl> <dbl>  <dbl> <dbl>    <dbl> <chr>         <int>
#>  1   215.  131   131.    9     9.7     141  V1                1
#>  2   215.  130.  130.    8.1   9.5     142. V1                2
#>  3   215.  130.  130.    8.7   9.6     142. V1                2
#>  4   215.  130.  130.    7.5  10.4     142  V1                2
#>  5   215   130.  130.   10.4   7.7     142. V1                3
#>  6   216.  131.  130.    9    10.1     141. V1                4
#>  7   216.  130.  130.    7.9   9.6     142. V1                5
#>  8   214.  130.  129.    7.2  10.7     142. V1                2
#>  9   215.  129.  130.    8.2  11       142. V1                2
#> 10   215.  130.  130.    9.2  10       141. V1                6
#> # ... with 16,190 more rows
```
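The reshaping that gather() performs here can be mimicked in base R on a toy table. This sketch uses stack() and not tidyr. Every permutation column is stacked into one long Cluster column, and the feature columns are repeated once per permutation. That is why 200 cases × 81 permutations give the 16,200 rows shown above.

```r
# Base-R sketch of what gather() does here (tidyr is not used): every
# permutation column is stacked into one long Cluster column, and the
# feature columns are repeated once per permutation.
wide <- data.frame(Right = c(130.1, 129.7),
                   V1 = c(1L, 2L), V2 = c(1L, 1L))
perm_cols <- c("V1", "V2")
long <- data.frame(Right = rep(wide$Right, times = length(perm_cols)),
                   stack(wide[perm_cols]))
names(long)[2:3] <- c("Cluster", "Permutation")
nrow(long)   # 4: 2 cases x 2 permutations
```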

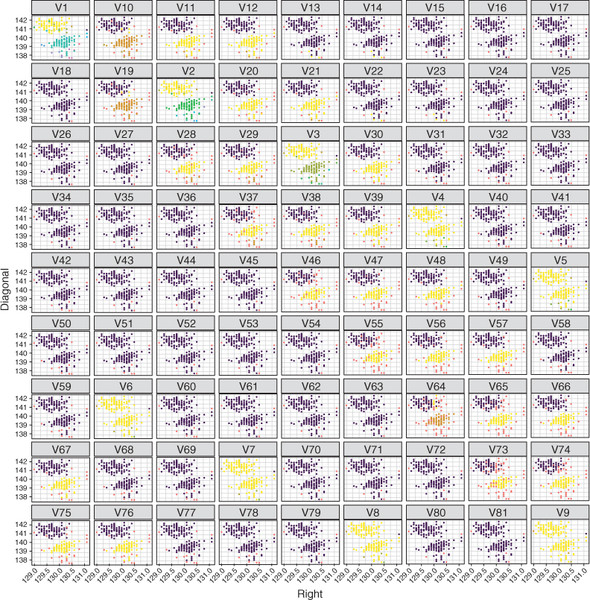

Great: now our tibble is in a format ready for plotting. Looking back at figure 18.8, we can see that the variables that most obviously separate clusters in the data are Right and Diagonal. As such, we'll plot these variables against each other by mapping them to the x and y aesthetics, respectively. We map the Cluster variable to the color aesthetic (wrapping it inside as.factor() so the colors aren't drawn as a single gradient). We then facet by Permutation, add a geom_point() layer, and add a theme. Because some of the cluster models have a large number of clusters, we suppress what would be a very large legend by adding the line theme(legend.position = "none").

Listing 18.9. Plotting cluster memberships of permutations

```r
# One subplot per permutation, cases colored by cluster membership
ggplot(swissClustersGathered, aes(Right, Diagonal,
                                  col = as.factor(Cluster))) +
  facet_wrap(~ Permutation) +
  geom_point() +
  theme_bw() +
  theme(legend.position = "none")
```

Tip

Cvy theme() niutfnoc alwlos hxp re nootlrc our acaanpeepr lx ktdu tospl (dqzc ac cihngang nougkcbdra lsoorc, elsgirndi, nkrl esizs, snb cv kn). Yk jgnl prk tmkk, saff ?theme.

The resulting plot is shown in figure 18.10. We can see that different combinations of epsilon and minPts have resulted in substantially different clustering models. Many of these models capture the two obvious clusters in the data set, but most do not.

Exercise 2

Let's also visualize the number and size of the clusters returned by each permutation. Pass our swissClustersGathered object to ggplot() with the following aesthetic mappings:

- x = reorder(Permutation, Cluster)

- fill = as.factor(Cluster)

Also add a geom_bar() layer. Now draw the same plot again, but this time add a coord_polar() layer. Change the x aesthetic mapping to just Permutation. Can you see what the reorder() function was doing?

Figure 18.10. Visualizing the result of our tuning experiment. Each subplot shows the Right and Diagonal variables plotted against each other for a different permutation of epsilon and minPts. Cases are shaded by their cluster membership.

How are we going to choose the best-performing combination of epsilon and minPts? Well, as we saw in chapter 17, visually checking that the clusters are sensible is important, but we can also calculate internal cluster metrics to help guide our choice.

In chapter 17, we defined our own function that would take the data and the cluster membership from a clustering model and calculate the Davies-Bouldin and Dunn indices and the pseudo F statistic. Let's redefine this function to refresh your memory.

Listing 18.10. Defining the cluster_metrics() function

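```r
# Returns the Davies-Bouldin index, pseudo F statistic (G1),
# Dunn index, and number of clusters for one clustering result
cluster_metrics <- function(data, clusters, dist_matrix) {
  list(db   = clusterSim::index.DB(data, clusters)$DB,
       G1   = clusterSim::index.G1(data, clusters),
       dunn = clValid::dunn(dist_matrix, clusters),
       clusters = length(unique(clusters))
  )
}
```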
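To make one of these metrics concrete, here is a minimal base R sketch of the Dunn index. It is the smallest distance between two cases in different clusters divided by the largest distance between two cases in the same cluster. Larger values mean compact, well-separated clusters. The function dunn_sketch is a hypothetical illustration, not clValid's implementation, although it follows the same basic definition. The sketch also shows why a model with a single cluster gets an infinite Dunn index: there are no between-cluster distances to take a minimum over.

```r
dunn_sketch <- function(dist_matrix, clusters) {
  d <- as.matrix(dist_matrix)
  # TRUE where a pair of cases shares a cluster label
  same <- outer(clusters, clusters, "==")
  between <- d[!same]                # distances across clusters
  within  <- d[same & upper.tri(d)]  # distances inside clusters, each pair once
  # A single cluster has no between-cluster distances: return Inf
  if (length(between) == 0) return(Inf)
  min(between) / max(within)
}

# Hypothetical toy data: two tight pairs of points, 5 units apart
x <- matrix(c(0, 0,
              0, 1,
              5, 0,
              5, 1), ncol = 2, byrow = TRUE)
d <- dist(x)

dunn_sketch(d, c(1, 1, 2, 2))  # 5: closest cross pair is 5 apart, widest cluster is 1
dunn_sketch(d, c(1, 1, 1, 1))  # Inf: only one cluster
```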

To help us select which of our clustering models best captures the structure in the data, we're going to take bootstrap samples from our data set and run DBSCAN using all 81 combinations of epsilon and minPts on each bootstrap sample. We can then calculate the mean of each of our performance metrics and see how stable they are.

Note

Recall from chapter 17 that a bootstrap sample is created by sampling cases from the original data, with replacement, to create a new sample that's the same size as the original.

Let's start by generating 10 bootstrap samples from our swissScaled data set. We do this just as we did in chapter 17, using the sample_n() function and setting the replace argument equal to TRUE.

Listing 18.11. Creating bootstrap samples

```r
# Ten bootstrap samples, each the same size as swissScaled
swissBoot <- map(1:10, ~ {
  swissScaled %>%
    as_tibble() %>%
    sample_n(size = nrow(.), replace = TRUE)
})
```
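The resampling itself is easy to sketch in base R: draw n row indices from 1 to n with replacement. Each sample has as many rows as the original, but some cases appear several times and others not at all. For large n, each sample contains on average about 63% (1 - 1/e) of the distinct original cases. The toy data and the seed below are arbitrary.

```r
set.seed(1)  # arbitrary seed, for reproducibility only
toy <- data.frame(a = rnorm(200), b = rnorm(200))

# Ten sets of row indices, each drawn with replacement
boot_idx <- lapply(1:10, function(i) {
  sample.int(nrow(toy), size = nrow(toy), replace = TRUE)
})
boots <- lapply(boot_idx, function(idx) toy[idx, , drop = FALSE])

sapply(boots, nrow)  # every sample has 200 rows

# Fraction of distinct original cases in each sample (roughly 0.63)
sapply(boot_idx, function(idx) length(unique(idx)) / nrow(toy))
```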

Before we run our tuning experiment, note that DBSCAN presents a potential problem when calculating internal cluster metrics. As we saw when we discussed them in chapter 16, these metrics work by comparing the separation between clusters and the spread within clusters (however they define these concepts). Think for a second about the noise cluster, and how it will affect these metrics. The noise cluster isn't a distinct cluster occupying one region of the feature space. Instead, it is typically spread out across that space. Its effect on internal cluster metrics can therefore make the metrics uninterpretable and difficult to compare. As such, once we have our clustering results, we're going to remove the noise cluster so we can calculate our internal cluster metrics using only non-noise clusters.

Note

This doesn't mean it's unimportant to consider the noise cluster when evaluating the performance of a DBSCAN model. Two cluster models could theoretically give equally good cluster metrics, but one model may place cases in the noise cluster that you consider important. You should therefore always visually evaluate your clustering result (including noise cases), especially when you have domain knowledge of your task.

In the following listing, we run the tuning experiment on our bootstrap samples. The code is quite long, so we'll walk through it step by step.

Listing 18.12. Performing the tuning experiment

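```r
metricsTib <- map_df(swissBoot, function(boot) {
  # Fit DBSCAN with every eps/minPts combination to this bootstrap sample
  clusterResult <- pmap(dbsParamSpace, dbscan, x = boot)

  map_df(clusterResult, function(permutation) {
    # One-column tibble of cluster labels (0 = noise); tibble() avoids
    # the discouraged as_tibble() call on a bare vector
    clust <- tibble(value = permutation$cluster)

    # Attach the labels to the bootstrap sample and drop the noise cluster
    filteredData <- bind_cols(boot, clust) %>%
      filter(value != 0)

    # The Dunn index needs a distance matrix of the remaining cases
    d <- dist(select(filteredData, -value))

    cluster_metrics(select(filteredData, -value),
                    clusters = filteredData$value,
                    dist_matrix = d)
  })
})
```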

First, we use the map_df() function, because we want to apply an anonymous function to each bootstrap sample and row-bind the results into a tibble. We run the DBSCAN algorithm using every combination of epsilon and minPts in our dbsParamSpace using pmap(), just as we did in listing 18.5.

Now that the cluster results have been generated, the next part of the code applies our cluster_metrics() function to each permutation of epsilon and minPts. Again, we want this to be returned as a tibble, so we use map_df() to iterate an anonymous function over each element in clusterResult.

We start by extracting the cluster membership from each permutation, converting it into a tibble (of a single column), and using the bind_cols() function to stick this column of cluster memberships onto the bootstrap sample. We then pipe this into the filter() function to remove cases that belong to the noise cluster (cluster 0). Because the Dunn index requires a distance matrix, we next define the distance matrix, d, using the filtered data.

At this point, for a particular permutation of epsilon and minPts for a particular bootstrap sample, we have a tibble containing the scaled variables and a column of cluster membership for cases not in the noise cluster. This tibble is then passed to our very own cluster_metrics() function (removing the value variable for the first argument and extracting it for the second argument). We pass the distance matrix as the dist_matrix argument.
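Without the nested map_df() calls, the per-model step boils down to three operations: attach the labels, drop the rows labeled 0 (DBSCAN's noise cluster), and compute distances on what remains. Here is a minimal base R sketch on hypothetical toy data and labels.

```r
# Hypothetical scaled data and DBSCAN-style labels (0 = noise)
toy <- data.frame(Right    = c(-1.2, -1.0, -0.9, 1.1, 1.3, 3.5),
                  Diagonal = c(0.8, 1.0, 0.9, -1.0, -1.2, 2.8))
labels <- c(1L, 1L, 1L, 2L, 2L, 0L)

keep <- labels != 0                     # drop the noise cluster
filtered <- toy[keep, , drop = FALSE]
filtered_labels <- labels[keep]

d <- dist(filtered)                     # distance matrix for the Dunn index

nrow(filtered)                          # 5 cases remain
length(unique(filtered_labels))         # 2 non-noise clusters
```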

Phew! That took quite a bit of concentration. I strongly suggest that you read back through the code and make sure each line makes sense to you. Print the metricsTib tibble. We end up with a tibble of four columns: one for each of our three internal cluster metrics, and one containing the number of clusters. Each row contains the result of a single DBSCAN model, 810 in total (81 permutations of epsilon and minPts, for each of 10 bootstrap samples).

Now that we've performed our tuning experiment, the easiest way to evaluate the results is to plot them.

Listing 18.13. Preparing the tuning result for plotting

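```r
metricsTibSummary <- metricsTib %>%
  # Label each row with its bootstrap sample and hyperparameter values
  mutate(bootstrap = factor(rep(1:10, each = 81)),
         eps = factor(rep(dbsParamSpace$eps, times = 10)),
         minPts = factor(rep(dbsParamSpace$minPts, times = 10))) %>%

  # One row per model per metric
  gather(key = "metric", value = "value",
         -bootstrap, -eps, -minPts) %>%

  # Treat Inf (Dunn index of single-cluster models) as missing, then drop NAs
  mutate_if(is.numeric, ~ na_if(., Inf)) %>%
  drop_na() %>%
  # Mean and number of bootstrap samples per metric and combination
  group_by(metric, eps, minPts) %>%
  summarize(meanValue = mean(value),
            num = n()) %>%
  # Standardize within each metric; as.numeric() drops scale()'s matrix shape
  group_by(metric) %>%
  mutate(meanValue = as.numeric(scale(meanValue))) %>%
  ungroup()
```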

We first need to mutate() columns indicating which bootstrap sample each row (model) used, which epsilon value it used, and which minPts value it used. Read as far as the first line break in listing 18.13 to see this.
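These rep() calls only line up because map_df() returns rows one bootstrap sample at a time, with all 81 parameter combinations inside each sample. A scaled-down sketch with 2 bootstrap samples and a hypothetical 3-row parameter grid shows the pattern. The `each` argument repeats every bootstrap number once per combination. The `times` argument recycles the whole parameter grid once per bootstrap sample.

```r
n_boot <- 2
params <- data.frame(eps = c(1.2, 1.3, 1.4), minPts = c(9, 9, 9))  # hypothetical grid
n_params <- nrow(params)

labels <- data.frame(
  bootstrap = rep(seq_len(n_boot), each = n_params),  # 1 1 1 2 2 2
  eps       = rep(params$eps, times = n_boot),        # grid repeated per sample
  minPts    = rep(params$minPts, times = n_boot)
)

labels
```

With the real values, the same calls produce 10 × 81 = 810 labels, one for each row of metricsTib.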

Next, we need to gather the data so that we have a column indicating which of our four metrics each row refers to, which lets us facet by metric. We do this using the gather() function before the second line break in listing 18.13.

At this point, we have a problem. Some of the cluster models contain only a single cluster, but each of our three internal cluster metrics requires a minimum of two clusters to return a sensible value. When we apply our cluster_metrics() function to a clustering model containing only a single cluster, it returns NA for the Davies-Bouldin index and pseudo F statistic, and Inf for the Dunn index.

Tip

Run map_int(metricsTib, ~sum(is.na(.))) and map_int(metricsTib, ~sum(is.infinite(.))) to confirm this for yourself.

So let's remove Inf and NA values from our tibble. We do this by first turning Inf values into NA. We use the mutate_if() function to consider only the numeric variables (we could also have used mutate_at(.vars = "value", ...)), and we use the na_if() function to convert values to NA if they are currently Inf. We then pipe this into drop_na() to remove all the NA values at once.
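Here are the same two steps in base R, applied to a hypothetical vector of metric values: first recode Inf as NA, then drop every NA at once.

```r
value <- c(0.42, Inf, NA, 1.7, Inf, 0.95)  # hypothetical metric values

value[is.infinite(value)] <- NA            # what na_if(., Inf) does here
value <- value[!is.na(value)]              # what drop_na() does for this column

value                                      # 0.42 1.70 0.95
```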

Finally, to generate mean values for each metric and each combination of epsilon and minPts, we first group_by() metric, eps, and minPts, and then summarize() both the mean and the number of values of the value variable. Because the metrics are on different scales, we then group_by() metric, scale() the meanValue variable, and ungroup().
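The grouping logic can be sketched in base R with aggregate() and ave(), using a small hypothetical long-format table. The important detail is the second grouping: scale() is applied separately within each metric. Without it, a metric measured in large units (G1 here) would dominate any shared color scale.

```r
long <- data.frame(
  metric = rep(c("db", "G1"), each = 4),
  eps    = rep(c(1.2, 1.2, 1.3, 1.3), times = 2),
  value  = c(0.8, 1.0, 1.4, 1.6, 150, 170, 90, 110)  # hypothetical values
)

# Mean and count per metric/eps combination (group_by() + summarize())
means  <- aggregate(value ~ metric + eps, data = long, FUN = mean)
counts <- aggregate(value ~ metric + eps, data = long, FUN = length)
summ <- data.frame(means[c("metric", "eps")],
                   meanValue = means$value,
                   num = counts$value)

# Standardize meanValue within each metric (group_by(metric) + scale())
summ$meanValue <- ave(summ$meanValue, summ$metric,
                      FUN = function(v) as.numeric(scale(v)))

summ
```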

That was some serious dplyr-ing! Again, don't just gloss over this code. Start again from the top and read all of listing 18.13 to be sure you understand it. Also, take comfort in knowing that I didn't just write this all out the first time. I knew what I wanted to achieve, and I worked through the problem line by line. At each step, I looked at the output to make sure what I had done was correct and to work out what I needed to do next. Print metricsTibSummary so you understand what we end up with.

Fantastic. Now that our tuning data is in the correct format, let's plot it. We're going to create a heatmap where epsilon and minPts are mapped to the x and y aesthetics, and the value of the metric is mapped to the fill of each tile in the heatmap. There will be a separate subplot for each metric. Also, because we removed rows containing NA and Inf values, some combinations of epsilon and minPts have fewer than 10 bootstrap samples. We may have less confidence in a combination of hyperparameters that has fewer bootstrap samples. To help guide our choice, we're going to map the number of samples for each combination to the alpha (transparency) aesthetic. We do all of this in the following listing.

Listing 18.14. Plotting the results of the tuning experiment

```r
ggplot(metricsTibSummary, aes(eps, minPts,
                              fill = meanValue, alpha = num)) +
  facet_wrap(~ metric) +
  geom_tile(col = "black") +
  theme_bw() +
  theme(panel.grid.major = element_blank())
```

Aside from mapping the num variable to the alpha aesthetic, the only new thing here is geom_tile(), which creates a rectangular tile for each combination of the x and y variables. Setting col = "black" simply draws a black border around each individual tile. To prevent major gridlines from being drawn, we add the layer theme(panel.grid.major = element_blank()).

The resulting plot is shown in figure 18.11. We have four subplots: one for each of our three internal cluster metrics, and one for the number of clusters. Each internal metric's subplot has a hole at the top right. This is the area of the hyperparameter tuning space where every model produced only a single cluster, so we removed those values. The tiles surrounding the hole are semitransparent. For these combinations of epsilon and minPts, some of the bootstrap samples produced only a single cluster and so were removed.

Figure 18.11. Visualizing the cluster performance experiment. Each subplot shows a heatmap for the number of clusters, Davies-Bouldin index (db), Dunn index (dunn), and pseudo F statistic (G1) returned by the cluster models. Each tile represents the combination of epsilon and minPts, and the depth of shading of the tile indicates its value for each metric. The blank region at the top right in the metric plots indicates a region with no data, and semitransparent tiles indicate fewer than 10 samples.

Note

Let's use this plot to guide our final choice of epsilon and minPts. It isn't necessarily straightforward, because there is no single, obvious combination that all three internal metrics agree on. First, let's avoid combinations in or around the hole in the plot; I think that's a pretty clear starting point. Next, let's remind ourselves that, in theory, the best clustering model will be the one with the lowest Davies-Bouldin index and the largest Dunn index and pseudo F statistic. So we're looking for a combination that best satisfies those criteria. With this in mind, before reading on, look at the plots and try to decide which combination you would choose.

I think I would choose an epsilon of 1.2 and a minPts of 9. Can you see that with this combination of values (the top left in each subplot), the Dunn index and pseudo F statistic are near their highest, and the Davies-Bouldin index is at its lowest? Let's find out which row of our dbsParamSpace data frame corresponds to this combination of values:

```r
which(dbsParamSpace$eps == 1.2 & dbsParamSpace$minPts == 9)

[1] 73
```
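Row 73 follows from how expand.grid() lays out its rows: the first vector varies fastest. The sketch below assumes that dbsParamSpace holds nine eps values (1.2 to 2.0 in steps of 0.1) and nine minPts values (1 to 9). That assumption is consistent with the 81 combinations and the row index above, but check it against the grid you actually defined. Because eps is stored as a double, an exact == comparison can miss values that differ by floating-point rounding, so a small tolerance is the safer habit.

```r
# Assumed grid; the chapter's dbsParamSpace is defined earlier
paramSpace <- expand.grid(eps = seq(1.2, 2.0, by = 0.1), minPts = 1:9)

nrow(paramSpace)  # 9 eps values x 9 minPts values = 81

# Tolerance-based match on eps, exact match on the integer minPts
idx <- which(abs(paramSpace$eps - 1.2) < 1e-8 & paramSpace$minPts == 9)

idx               # 73 = 8 full cycles of 9 eps values, plus 1
paste0("V", idx)  # the matching column name in the wide cluster results
```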

Next, let's use ggpairs() to plot the final clustering. Because we calculated the internal cluster metrics without considering the noise cluster, we'll plot the result with and without noise cases. This will allow us to visually confirm whether the assignment of cases as noise is sensible.

Listing 18.15. Plotting the final clustering with outliers

```r
filter(swissClustersGathered, Permutation == "V73") %>%
  select(-Permutation) %>%
  mutate(Cluster = as.factor(Cluster)) %>%
  ggpairs(mapping = aes(col = Cluster),
          upper = list(continuous = "density")) +
  theme_bw()
```

We first filter our swissClustersGathered tibble to include only rows belonging to permutation 73 (these are the cases clustered using our chosen combination of epsilon and minPts). Next, we remove the column indicating the permutation number and convert the column of cluster membership into a factor. We then use the ggpairs() function to create the plot, mapping cluster membership to the color aesthetic.

Figure 18.12. Plotting our final DBSCAN cluster model with ggpairs(). This plot includes the noise cluster.

The resulting plot is shown in figure 18.12. The model appears to have done a pretty good job of capturing the two obvious clusters in the data set, although quite a lot of cases have been classified as noise. Whether this is reasonable will depend on your goal and how stringent you want to be. If it's important to you that fewer cases are placed in the noise cluster, you may want to choose a different combination of epsilon and minPts. This is why relying on metrics alone isn't good enough: expert or domain knowledge should always be considered where it is available.

Now let's do the same thing, but without plotting outliers. All we change here is to add Cluster != 0 to the filter() call.

Listing 18.16. Plotting the final clustering without outliers

```r
filter(swissClustersGathered, Permutation == "V73", Cluster != 0) %>%
  select(-Permutation) %>%
  mutate(Cluster = as.factor(Cluster)) %>%
  ggpairs(mapping = aes(col = Cluster),
          upper = list(continuous = "density")) +
  theme_bw()
```

The resulting plot is shown in figure 18.13. Looking at this plot, we can see that the two clusters our DBSCAN model identified are quite neat and well separated.

Figure 18.13. Plotting our final DBSCAN cluster model with ggpairs(). This plot excludes the noise cluster.

Warning

Make sure you always look at your outliers. Once outliers are removed, DBSCAN's clusters can look cleaner and more important than they really are.

Our clustering model seems pretty reasonable, but how stable is it? The final thing we're going to do to evaluate the performance of our DBSCAN model is calculate the Jaccard index across multiple bootstrap samples. Recall from chapter 17 that the Jaccard index quantifies the agreement of cluster membership between clustering models trained on different bootstrap samples.

To do this, we first need to load the fpc package. Then we use the clusterboot() function the same way we did in chapter 17. The first argument is the data we're going to cluster (our scaled tibble). B is the number of bootstrap samples; more is better, within your computational budget. clustermethod = dbscanCBI tells the function to use the DBSCAN algorithm. We then set the desired values of eps and MinPts (careful: note the capital M this time), and set showplots = FALSE to avoid drawing 500 plots.

Note

I’ve truncated the output to show the most important information.

Listing 18.17. Calculating the Jaccard index across bootstrap samples

```r
library(fpc)

clustBoot <- clusterboot(swissScaled, B = 500,
                         clustermethod = dbscanCBI,
                         eps = 1.2, MinPts = 9,
                         showplots = FALSE)

clustBoot

Number of resampling runs:  500

Number of clusters found in data:  3

 Clusterwise Jaccard bootstrap (omitting multiple points) mean:
[1] 0.6893 0.8074 0.6804
```

We can see the Jaccard indices for the three clusters (where cluster 3 is, confusingly, the noise cluster). Cluster 2 is quite stable, with a mean Jaccard index of 0.807 across the bootstrap samples. Roughly speaking, the original cluster 2 and its best-matching cluster in each resample overlap by about 80%. Clusters 1 and 3 are less stable, with mean Jaccard indices of about 0.68.
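For a single pair of clusters, the Jaccard index is the number of cases in both versions of the cluster divided by the number of cases in either version. The minimal sketch below uses hypothetical case IDs. As far as fpc's documentation describes it, clusterboot() goes further. For each original cluster and each resample, it takes the best-matching bootstrap cluster, using only the cases present in that resample, and then averages the resulting values. Treat this sketch as the core calculation only.

```r
jaccard <- function(a, b) {
  length(intersect(a, b)) / length(union(a, b))
}

original_cluster  <- c(1, 2, 3, 4, 5)  # hypothetical case IDs
bootstrap_cluster <- c(3, 4, 5, 6)

jaccard(original_cluster, bootstrap_cluster)  # 3 shared / 6 in either = 0.5
```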

We've now appraised the performance of our DBSCAN model in three ways: using internal cluster metrics, examining the clusters visually, and using the Jaccard index to evaluate their stability. For any particular clustering problem, you will need to weigh all of this evidence together to decide whether your cluster model is appropriate for the task at hand.

Exercise 3

Use dbscan() to cluster our swissScaled data set, keeping epsilon as 1.2 but setting minPts to 1. How many cases are in the noise cluster? Why? The fpc package also has a dbscan() function, so use dbscan::dbscan() to call the function from the dbscan package.

In this section, I'm going to show you how we can use the OPTICS algorithm to create an ordering of the cases in a data set and how we can extract clusters from this ordering. We will directly compare the results we get using OPTICS to those we generated using DBSCAN.

To do this, we're going to use the optics() function from the dbscan package. The first argument is the data set. Just like DBSCAN, OPTICS is sensitive to the scale of the variables, so we're using our scaled tibble.

Listing 18.18. Ordering cases with OPTICS and extracting clusters

```r
swissOptics <- optics(swissScaled, minPts = 9)

plot(swissOptics)
```

Just like the dbscan() function, optics() has the eps and minPts arguments. Because epsilon is an optional argument for the OPTICS algorithm and only serves to speed up computation, we'll leave it at its default of NULL, which means there is no maximum epsilon. We set minPts equal to 9 to match what we used in our final DBSCAN model.

Once we've created our ordering, we can inspect the reachability plot by simply calling plot() on the output of the optics() function; see figure 18.14. Notice that we have two obvious troughs separated by high peaks. Remember that this indicates regions of high density separated by regions of low density in the feature space.

Figure 18.14. The reachability plot generated from applying the OPTICS algorithm to our data. The x-axis shows the processing order of the cases, and the y-axis shows the reachability distance for each case. We can see two main troughs in the plot, bordered by peaks of higher reachability distance.
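The heights in a reachability plot come from two quantities, sketched below in base R. The core distance of a case p is the distance to its minPts-th closest case. The reachability distance of a case o from p is the larger of p's core distance and the distance from p to o. Cases inside a dense region therefore get small, similar values (a trough), and a case far from every dense region gets a large value (a peak). The sketch assumes that minPts counts the case itself (conventions differ between implementations), and the one-dimensional values are hypothetical.

```r
x <- c(0, 1, 2, 10)  # hypothetical 1-D cases
d <- as.matrix(dist(x))
minPts <- 3

# Distance to the minPts-th closest case, counting the case itself (distance 0)
core_dist <- function(p) sort(d[p, ])[minPts]

# Reachability distance of case o when reached from case p
reach_dist <- function(o, p) max(core_dist(p), d[p, o])

core_dist(1)      # 2: cases at distances 0, 1, and 2 fall within it
reach_dist(2, 1)  # 2: case 2 is close, so p's core distance wins
reach_dist(4, 1)  # 10: case 4 is far away, so the raw distance wins
```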

Now let's extract clusters from this ordering using the steepness method. To do so, we use the extractXi() function, passing the output of the optics() function as the first argument and specifying the xi argument:

```r
swissOpticsXi <- extractXi(swissOptics, xi = 0.05)
```

Recall that xi (ξ) is a hyperparameter that determines the minimum steepness (1 - ξ) needed to start and end clusters in the reachability plot. How do we choose the value of ξ? Well, in this example, I've simply chosen a value of ξ that gives a reasonable clustering result (as you'll see in a moment). As we know, this isn't a very scientific or objective approach. For your own work, you should tune ξ as a hyperparameter, just as we tuned epsilon and minPts for DBSCAN.

Note

The ξ hyperparameter is bounded between 0 and 1, which gives you a fixed space to search within.
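At its core, the steepness rule compares neighboring reachability values. Below is a simplified sketch of the ξ-steep point conditions from the original OPTICS paper. A point is steep downward if its reachability multiplied by (1 - ξ) is still at least the next point's reachability. A point is steep upward if its reachability is at most (1 - ξ) times the next point's reachability. extractXi() builds clusters from runs of such points and does extra bookkeeping that this sketch omits. The reachability values are hypothetical.

```r
reach <- c(5, 1, 1.02, 1, 4)  # hypothetical reachability distances, in OPTICS order
xi <- 0.05

n <- length(reach)
current   <- reach[-n]  # r(p)
following <- reach[-1]  # r(p + 1)

# Steep downward: the next value is at most (1 - xi) times the current one
steep_down <- current * (1 - xi) >= following
# Steep upward: the next value is at least 1 / (1 - xi) times the current one
steep_up <- current <= following * (1 - xi)

steep_down  # TRUE FALSE FALSE FALSE: entering the trough
steep_up    # FALSE FALSE FALSE TRUE: leaving the trough
```

The small wobble from 1 to 1.02 inside the trough is not flagged. A larger ξ demands an even steeper change before a point counts as steep.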

Let's plot the clustering result so we can compare it with our DBSCAN model. We mutate a new column onto our data set containing the clusters we extracted using the steepness method. We then pipe this data into the ggpairs() function.

Listing 18.19. Plotting the OPTICS clusters

```r
swissTib %>%
  mutate(cluster = factor(swissOpticsXi$cluster)) %>%
  ggpairs(mapping = aes(col = cluster),
          upper = list(continuous = "points")) +
  theme_bw()
```

Note

Because we have only a single noise case, the computation of the density plots fails. Therefore, we set the upper panels to display "points" instead of densities.

The resulting plot is shown in figure 18.15. Our OPTICS clustering has mostly identified the same two clusters as DBSCAN, but it has also identified an additional cluster that seems to be distributed across the feature space. This additional cluster doesn't look convincing to me (we could calculate internal cluster metrics and cluster stability to reinforce this conclusion). To improve the clustering, we should tune the minPts and ξ hyperparameters, though we won't do that here.

You've learned how to use the DBSCAN and OPTICS algorithms to cluster your data. In the next chapter, I'll introduce you to mixture model clustering, a clustering technique that fits a set of models to the data and assigns cases to the most probable model. I suggest that you save your .R file, because we're going to continue using the same data set in the next chapter. This way, we can compare the performance of our DBSCAN and OPTICS models to the output of our mixture model.

While it often isn't easy to tell which algorithms will perform well for a given task, here are some strengths and weaknesses that will help you decide whether density-based clustering will perform well for you.

The strengths of density-based clustering are as follows:

- It can identify non-spherical clusters of different diameters.

- It is able to natively identify outlying cases.

- It can identify clusters of complex, non-spherical shapes.

- OPTICS is able to learn a hierarchical clustering structure in the data and doesn't require the tuning of epsilon.

- OPTICS is able to find clusters of differing density.

- OPTICS can be sped up by setting a sensible epsilon value.

The weaknesses of density-based clustering are these:

- It cannot natively handle categorical variables.

- The algorithms cannot select the optimal number of clusters automatically.

- It is sensitive to data on different scales.

- DBSCAN is biased toward finding clusters of equal density.
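To see why scale matters, here is a small base R sketch with hypothetical values. One variable spans roughly 0 to 100, and the other spans 0 to 1. On the raw scale, distances are driven almost entirely by the first variable. After scale(), both variables contribute, and the nearest neighbor of case 1 changes. DBSCAN and OPTICS build their epsilon neighborhoods from these same distances, so the clusters they find change too.

```r
pts <- data.frame(v1 = c(0, 5, 20, 100), v2 = c(0, 1, 0, 1))

nearest_to_first <- function(m) {
  d <- as.matrix(dist(m))
  diag(d) <- Inf  # ignore each case's zero distance to itself
  which.min(d[1, ])
}

nearest_to_first(pts)         # case 2: the small v1 gap dominates
nearest_to_first(scale(pts))  # case 3: after scaling, the v2 gap to case 2 outweighs the v1 gap to case 3
```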

Exercise 4

Use dbscan() to cluster our unscaled swissTib data set, keeping epsilon at 1.2 and minPts at 9. Are the clusters the same? Why?

Exercise 5

Again, extract clusters from our swissOptics object, using the xi values 0.035, 0.05, and 0.065. Use plot() to see how these different values change the clusters extracted from the reachability plot.

- Density-based clustering algorithms like DBSCAN and OPTICS find clusters by searching for high-density regions separated by low-density regions of the feature space.

- DBSCAN has two hyperparameters, epsilon and minPts, where epsilon is the search radius around each case. If a case has at least minPts cases inside its epsilon radius, that case is a core point.

- DBSCAN recursively scans the epsilon radius of every case that is density-connected to the starting case, categorizing cases as either core points or border points.

- DBSCAN and OPTICS create a noise cluster for cases that lie too far away from high-density regions.

- OPTICS creates an ordering of the cases from which clusters can be extracted. This ordering can be visualized as a reachability plot where troughs separated by peaks indicate clusters.
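The DBSCAN description in this summary can be condensed into a short, unoptimized base R sketch. The function dbscan_sketch is a hypothetical name and is not the dbscan package's function. It makes three assumptions. First, a case's epsilon neighborhood includes every case at a distance of at most eps. Second, minPts counts the case itself. Third, a border point reachable from two clusters keeps the first label it receives. As in the chapter's output, label 0 marks noise.

```r
dbscan_sketch <- function(x, eps, minPts) {
  d <- as.matrix(dist(x))
  n <- nrow(d)
  # Epsilon neighborhood of every case (includes the case itself)
  neighbours <- lapply(seq_len(n), function(i) which(d[i, ] <= eps))
  # Core points have at least minPts cases in their neighborhood
  is_core <- lengths(neighbours) >= minPts
  cluster <- integer(n)  # 0 = unassigned; noise if never reached
  k <- 0L
  for (i in seq_len(n)) {
    # Start a new cluster only from an unassigned core point
    if (cluster[i] != 0L || !is_core[i]) next
    k <- k + 1L
    cluster[i] <- k
    queue <- neighbours[[i]]
    # Label every density-reachable case; only core points extend the search
    while (length(queue) > 0) {
      j <- queue[1]
      queue <- queue[-1]
      if (cluster[j] == 0L) {
        cluster[j] <- k  # core or border point
        if (is_core[j]) queue <- c(queue, neighbours[[j]])
      }
    }
  }
  list(cluster = cluster, is_core = is_core)
}

x <- matrix(c(0, 0,   0.1, 0,   0, 0.1,   0.1, 0.1,  # dense group 1
              5, 5,   5.1, 5,   5, 5.1,   5.1, 5.1,  # dense group 2
              10, 0),                                # isolated case
            ncol = 2, byrow = TRUE)

res <- dbscan_sketch(x, eps = 0.5, minPts = 3)
res$cluster  # 1 1 1 1 2 2 2 2 0
```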

- Print the result of using expand.grid(), and inspect the result to understand what the function does:

```r
dbsParamSpace

# The function creates a data frame whose rows make up
# every combination of the input vectors.
```

- Plot the tuning experiment to visualize the number and size of the clusters from each permutation:

```r
ggplot(swissClustersGathered, aes(reorder(Permutation, Cluster),
                                  fill = as.factor(Cluster))) +
  geom_bar(position = "fill", col = "black") +
  theme_bw() +
  theme(legend.position = "none")

ggplot(swissClustersGathered, aes(reorder(Permutation, Cluster),
                                  fill = as.factor(Cluster))) +
  geom_bar(position = "fill", col = "black") +
  coord_polar() +
  theme_bw() +
  theme(legend.position = "none")

ggplot(swissClustersGathered, aes(Permutation,
                                  fill = as.factor(Cluster))) +
  geom_bar(position = "fill", col = "black") +
  coord_polar() +
  theme_bw() +
  theme(legend.position = "none")

# The reorder function orders the levels of the first argument
# according to the values of the second argument.
```
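Here is a tiny base R example of this behavior, using hypothetical values. By default, reorder() computes the mean of the second argument for each level of the first, then sorts the levels by that mean in ascending order.

```r
perm    <- c("V1", "V1", "V2", "V2", "V3")
cluster <- c(5, 3, 1, 1, 2)

# Mean cluster number per permutation: V1 = 4, V2 = 1, V3 = 2
levels(reorder(factor(perm), cluster))  # "V2" "V3" "V1"
```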

- Use dbscan() with an epsilon of 1.2 and a minPts of 1:

```r
swissDbsNoOutlier <- dbscan::dbscan(swissScaled, eps = 1.2, minPts = 1)

swissDbsNoOutlier

# There are no cases in the noise cluster because the minimum cluster
# size is now 1, meaning all cases are core points.
```

- Use dbscan() to cluster our unscaled data:

```r
swissDbsUnscaled <- dbscan::dbscan(swissTib, eps = 1.2, minPts = 9)

swissDbsUnscaled

# The clusters are not the same as those learned for the scaled data.
# This is because DBSCAN and OPTICS are sensitive to scale differences.
```

- Extract different clusters from swissOptics using different values of xi:

```r
swissOpticsXi035 <- extractXi(swissOptics, xi = 0.035)
plot(swissOpticsXi035)

swissOpticsXi05 <- extractXi(swissOptics, xi = 0.05)
plot(swissOpticsXi05)

swissOpticsXi065 <- extractXi(swissOptics, xi = 0.065)
plot(swissOpticsXi065)
```