This chapter covers

- Understanding mixture model clustering

- Understanding the difference between hard and soft clustering

Our final stop in unsupervised learning techniques brings us to an additional approach to finding clusters in data: mixture model clustering. Just like the other clustering algorithms we’ve covered, mixture model clustering aims to partition a dataset into a finite set of clusters.

In chapter 18, I showed you the DBSCAN and OPTICS algorithms, and how they find clusters by learning regions of high and low density in the feature space. Mixture model clustering takes yet another approach to identify clusters. A mixture model is any model that describes a dataset by combining a mix of two or more probability distributions. In the context of clustering, mixture models help us to identify clusters by fitting a finite number of probability distributions to the data and iteratively modifying the parameters of those distributions until they best fit the underlying data. Cases are then assigned to the cluster of the distribution under which they are most likely. The most common form of mixture modeling is Gaussian mixture modeling, which fits Gaussian (or normal) distributions to the data.

By the end of this chapter, I hope you’ll have a firm understanding of how mixture model clustering works and of its differences from and similarities to some of the algorithms we’ve already covered. We’ll apply this method to our Swiss banknote data from chapter 18 to help you understand how mixture model clustering differs from density-based clustering. If you no longer have the swissTib object defined in your global environment, just rerun listing 18.1.

Jn jzrg tonseic, J’m oging rv wzvy gdv rwbc mixture model clustering jc sng bwx rj zakg sn algorithm lcdlae expectation-maximization rv rvleytiiate vpoimre pro ljr xl gvr clustering mode f. Axu clustering algorithms wo’ve mrx ak tlc stv sff esndiocrde hard clustering hetsomd, usbecae ocqa zaxz cj nagdeiss ywlolh vr kne rucselt nhc vrn xr nrtohae. Kno lk krd strengths of mixture model clustering jc ucrr rj ja c soft clustering otmdhe: rj jlrc c roa kl icraslbpboiit models re rky data hcn sgassni zxqs cosz z albporyitib lv lneggbnio re svds mode f. Rdja lswola ba rv nfuayiqt rpv apibloyibtr vl sosy cavz ebiggonln er azku erstluc. Xycq ow sna zhc, “Bqja ozsa zzp s 90% irbbipylato lx ignenlobg rv srcelut T, s 9% iitaoyrblbp xl nognielgb vr csrlteu A, nzh c 1% bairipltybo lv nlgnbeiog vr csteulr T.” Yayj cj eulfsu sbeecau jr igves qz uro naotirnmoif wk gnvv re somv ebtrte isesndcio. Ssd, tvl eaeplxm, z avcc bsz s 51% ipbtliaoyrb lk noggnlieb rv vnk sltucre ncu 49% ylirbatoibp lk gbolninge rk grk oerth, kwb aphyp tsx wx xr uclnied rjcp azoa jn rja mrkc rbaboepl esctulr? Esephar wx’to rxn nfecnodti ohgune vr denucli cdya cases nj tqe lnaif clustering mode f.

Note

Mixture model clustering doesn’t, in and of itself, identify outlying cases like DBSCAN and OPTICS do, but we can manually set a probability cut-off if we like. For example, we could say that any case with less than a 60% probability of belonging to its most probable cluster should be considered an outlier.

So mixture model clustering fits a set of probabilistic models to the data. These models can be a variety of probability distributions but are most commonly Gaussian distributions. This clustering approach is called mixture modeling because we fit multiple (a mixture of) probability distributions to the data. Therefore, a Gaussian mixture model is simply a model that fits multiple Gaussian distributions to a set of data.

Each Gaussian in the mixture represents a potential cluster. Once our mixture of Gaussians fits the data as well as possible, we can calculate the probability of each case belonging to each cluster and assign cases to the most probable cluster. But how do we find a mixture of Gaussians that fits the underlying data well? We can use an algorithm called expectation-maximization (EM).

In this section, I’ll take you through some prerequisite knowledge you’ll need in order to understand the EM algorithm. This focuses on how the algorithm calculates the probability that each case comes from each Gaussian.

Imagine that we have a one-dimensional dataset: a number line with cases distributed across it (see the top panel of figure 19.1). First, we must predefine the number of clusters to look for in the data. This sets the number of Gaussians we will be fitting. In this example, let’s say we believe two clusters exist in the dataset.

Figure 19.1. The expectation-maximization algorithm for two, one-dimensional Gaussians. Dots represent cases along a number line. Two Gaussians are randomly initialized along the line. In the expectation step, the posterior probability of each case for each Gaussian is calculated (indicated by shading). In the maximization step, the means, variances, and priors for each Gaussian are updated based on the calculated posteriors. The process continues until the likelihood converges.

Note

This is one of the ways in which mixture model clustering is similar to k-means. I’ll show you the other way in which they are similar later in the chapter.

A one-dimensional Gaussian distribution needs two parameters to define it: the mean and the variance. So we randomly initialize two Gaussians along the number line by selecting random values for their means and variances. Let’s call these Gaussians j and k. Then, given these two Gaussians, we calculate the probability that each case belongs to one cluster versus the other. To do this, we can use our good friend, Bayes’ rule.

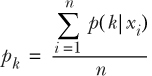

Recall from chapter 6 that we can use Bayes’ rule to calculate the posterior probability of an event (p(k|x)) given the likelihood (p(x|k)), prior (p(k)), and evidence (p(x)).

Jn rjpc xcas, p(k|x) jc xrb obayliibtpr lx zccx x lnionbgge rv Dsuasina k; p(x|k) jc por iylitabobpr vl orgevsnib cvsz x jl vdq otow rk lsaemp lxmt Ksaiunas k; p(k) ja xdr brlatbioypi xl z ldrayomn tecedsle zzvs genilbgon rx Onaasius k; hsn p(x) jz rdk pybitioabrl lx dingwar sszv x jl kpy ktvw rv spmlae ltmx ryv nietre xmeuitr mode f cz c ohlew. Bpx evidence, p(x), aj refehtero xgr ytabporibli lx gdwinra ossc x kltm ehetir Oiasnasu.

When computing the probability of one event or the other occurring, we simply add together the probabilities of each event occurring independently. Therefore, the probability of drawing case x from Gaussian j or k is the probability of drawing it from Gaussian j plus the probability of drawing it from Gaussian k. The probability of drawing case x from one of the Gaussians is the likelihood multiplied by the prior for that Gaussian. With this in mind, we can write out Bayes’ rule more fully, as shown in equation 19.2.

$$p(k \mid x_i) = \frac{p(x_i \mid k)\, p(k)}{p(x_i \mid j)\, p(j) + p(x_i \mid k)\, p(k)} \tag{19.2}$$

Notice that the evidence has been expanded to show more correctly how the probability of drawing case $x_i$ from either Gaussian is the sum of the probabilities of drawing it from each Gaussian independently. Equation 19.2 allows us to calculate the posterior probability of case $x_i$ belonging to Gaussian k. Equation 19.3 shows the same calculation, but for the posterior probability of case $x_i$ belonging to Gaussian j.

$$p(j \mid x_i) = \frac{p(x_i \mid j)\, p(j)}{p(x_i \mid j)\, p(j) + p(x_i \mid k)\, p(k)} \tag{19.3}$$

So far, so good. But how do we calculate the likelihood and the priors? The likelihood is the probability density function of the Gaussian distribution. It tells us the relative probability of drawing a case with a particular value from a Gaussian distribution with a particular combination of mean and variance. The probability density function for Gaussian distribution k is shown in equation 19.4, but it isn’t necessary for you to memorize it:

$$p(x_i \mid k) = \frac{1}{\sqrt{2\pi\sigma_k^2}} \exp\left(-\frac{(x_i - \mu_k)^2}{2\sigma_k^2}\right) \tag{19.4}$$

where $\mu_k$ and $\sigma_k^2$ are the mean and variance, respectively, for Gaussian k.

At the start of the algorithm, the prior probabilities are generated randomly, just like the means and variances of the Gaussians. These priors get updated at each iteration to be the sum of the posterior probabilities for each Gaussian, divided by the number of cases. You can think of this as the mean posterior probability for a particular Gaussian across all cases.
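The following small R sketch makes the Bayes’ rule calculation concrete. It runs one expectation step for two Gaussians, j and k, and then applies the prior update just described. The six case values and the starting means, variances, and priors are made up for illustration. In the real algorithm they would be randomly initialized.

```r
# Hypothetical one-dimensional cases and two hand-picked Gaussians, j and k
x <- c(1.0, 1.5, 2.0, 6.0, 6.5, 7.0)
mu_j <- 2; var_j <- 1; prior_j <- 0.5
mu_k <- 6; var_k <- 1; prior_k <- 0.5

# Likelihood p(x_i | k): equation 19.4 written out, and the same values from dnorm()
# (dnorm() expects a standard deviation, so we pass the square root of the variance)
lik_k_manual <- exp(-(x - mu_k)^2 / (2 * var_k)) / sqrt(2 * pi * var_k)
lik_k <- dnorm(x, mean = mu_k, sd = sqrt(var_k))
lik_j <- dnorm(x, mean = mu_j, sd = sqrt(var_j))

# Bayes' rule: likelihood times prior, divided by the evidence, where the
# evidence sums over both Gaussians (the denominators of equations 19.2 and 19.3)
evidence <- lik_j * prior_j + lik_k * prior_k
post_j <- lik_j * prior_j / evidence
post_k <- lik_k * prior_k / evidence
round(cbind(x, post_j, post_k), 3)

# Updated priors: the mean posterior probability for each Gaussian
new_prior_j <- mean(post_j)
new_prior_k <- mean(post_k)
```

Cases near 2 end up with posteriors close to 1 for Gaussian j, and cases near 6 end up with posteriors close to 1 for Gaussian k. The two updated priors always sum to 1, because each case’s posteriors do.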

Now that you have the necessary knowledge to understand how the posterior probabilities are calculated, let’s see how the EM algorithm iteratively fits the mixture model. The EM algorithm (as its name suggests) has two steps: expectation and maximization. The expectation step is where the posterior probabilities are calculated for each case, for each Gaussian. This is shown in the second panel from the top in figure 19.1.

At this stage, the algorithm uses Bayes’ rule, as we set out earlier, to calculate the posterior probabilities. The cases along the number line in figure 19.1 are shaded to indicate their posterior probabilities.

Next comes the maximization step. The job of the maximization step is to update the parameters of the mixture model so as to maximize the likelihood of the underlying data. This means updating the means, variances, and priors of the Gaussians.

To update the mean of a particular Gaussian, we add up the values of each case, weighted by their posterior probability for that Gaussian, and divide by the sum of all the posterior probabilities. This is shown in equation 19.5.

$$\mu_k = \frac{\sum_{i=1}^{n} p(k \mid x_i)\, x_i}{\sum_{i=1}^{n} p(k \mid x_i)} \tag{19.5}$$

Think about this for a second. Cases that are close to the mean of the distribution will have a high posterior probability for that distribution and so will contribute more to the updated mean. Cases far away from the distribution will have a small posterior probability and will contribute less to the updated mean. The result is that the Gaussian will move toward the mean of the cases that are most probable under this Gaussian. You can see this illustrated in the third panel of figure 19.1.

The variance of each Gaussian is updated in a similar way. We sum the squared difference between each case and the Gaussian’s mean, multiplied by the case’s posterior, and then divide by the sum of posteriors. This is shown in equation 19.6. The result is that the Gaussian will get wider or narrower, based on the spread of the cases that are most probable under this Gaussian. You can also see this illustrated in the third panel of figure 19.1.

$$\sigma_k^2 = \frac{\sum_{i=1}^{n} p(k \mid x_i)\,(x_i - \mu_k)^2}{\sum_{i=1}^{n} p(k \mid x_i)} \tag{19.6}$$

The last things to be updated are the prior probabilities for each Gaussian. As mentioned already, the new priors are calculated by summing the posterior probabilities for a particular Gaussian and dividing by the number of cases, as shown in equation 19.7. This means that a Gaussian for which many cases have a large posterior probability will have a large prior probability.

$$p(k) = \frac{1}{n}\sum_{i=1}^{n} p(k \mid x_i) \tag{19.7}$$

Conversely, a Gaussian for which few cases have a large posterior probability will have a small prior probability. You can think of this as a soft, or probabilistic, equivalent of setting the prior equal to the proportion of cases belonging to each Gaussian.
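The three maximization-step updates (equations 19.5 to 19.7) fit in a few lines of R. In this sketch, the posterior matrix is hypothetical. It is written by hand rather than produced by an expectation step. `m_step()` is an illustrative helper name, not a function from any package.

```r
# Hypothetical data and posterior probabilities (rows = cases, columns = Gaussians)
x <- c(1.0, 1.5, 2.0, 6.0, 6.5, 7.0)
post <- cbind(
  j = c(0.99, 0.95, 0.90, 0.05, 0.02, 0.01),
  k = c(0.01, 0.05, 0.10, 0.95, 0.98, 0.99)
)

m_step <- function(x, post) {
  w <- colSums(post)                                   # total posterior weight per Gaussian
  mu <- colSums(post * x) / w                          # equation 19.5: weighted mean
  sigma2 <- colSums(post * outer(x, mu, "-")^2) / w    # equation 19.6: weighted variance
  prior <- w / length(x)                               # equation 19.7: mean posterior
  list(mean = mu, variance = sigma2, prior = prior)
}

params <- m_step(x, post)
params
```

Multiplying the posterior matrix by `x` recycles `x` down each column, so every case value is weighted by its own posterior for that Gaussian.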

Once the maximization step is complete, we perform another iteration of the expectation step, this time computing the posterior probabilities for each case under the new Gaussians. Once this is done, we then rerun the maximization step, again updating the means, variances, and priors for each Gaussian based on the posteriors. This cycle of expectation-maximization continues iteratively until either a specified number of iterations is reached or the overall likelihood of the data under the model changes by less than a specified amount (called convergence).
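Putting the two steps in a loop gives a minimal one-dimensional EM algorithm. This is a teaching sketch built on several assumptions: simulated data, means initialized by sampling cases, random priors, and a log-likelihood tolerance of 1e-6 as the convergence rule. `em_1d()` is a hypothetical helper. mclust’s own implementation differs, for example in how it initializes the Gaussians.

```r
em_1d <- function(x, K = 2, max_iter = 200, tol = 1e-6) {
  n <- length(x)
  # Initialization: means drawn from the data, a common variance,
  # and random priors rescaled to sum to 1
  mu <- sample(x, K)
  sigma2 <- rep(var(x), K)
  prior <- runif(K)
  prior <- prior / sum(prior)
  loglik_old <- -Inf

  for (iter in seq_len(max_iter)) {
    # Expectation step: prior times likelihood for every case/Gaussian pair,
    # normalized by the evidence (Bayes' rule)
    weighted <- sapply(seq_len(K), function(k) prior[k] * dnorm(x, mu[k], sqrt(sigma2[k])))
    post <- weighted / rowSums(weighted)

    # Log-likelihood of the data under the parameters used in this E-step
    loglik <- sum(log(rowSums(weighted)))

    # Maximization step (equations 19.5 to 19.7)
    w <- colSums(post)
    mu <- colSums(post * x) / w
    sigma2 <- colSums(post * outer(x, mu, "-")^2) / w
    prior <- w / n

    # Convergence: stop once the log-likelihood barely changes
    if (abs(loglik - loglik_old) < tol) break
    loglik_old <- loglik
  }
  list(mean = mu, variance = sigma2, prior = prior, loglik = loglik, iterations = iter)
}

set.seed(1)
# Simulated data: 100 cases around 0 and 100 cases around 5 (illustration only)
x <- c(rnorm(100, mean = 0, sd = 1), rnorm(100, mean = 5, sd = 1.5))
fit <- em_1d(x)
fit$mean
fit$prior
fit$iterations
```

The fitted means should land near the two simulated centers. Because initialization is random, a different seed can change how many iterations the loop needs, and in harder problems it can also lead to a locally optimal model.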

In this section, we’ll extend what you learned about how the EM algorithm works in one dimension to clustering over multiple dimensions. It is rare to come across a univariate (one-dimensional) clustering problem. Usually, our datasets contain multiple variables that we wish to use to identify clusters. In the previous section, I limited my explanation of the EM algorithm for Gaussian mixture models to one dimension because a univariate Gaussian has only two parameters: its mean and variance. When we have a Gaussian distribution in more than one dimension (a multivariate Gaussian), we need to describe it using its centroid and its covariance matrix.

We’ve come across centroids in previous chapters: a centroid is simply a vector of means, one for each dimension (variable) in the dataset. A covariance matrix is a square matrix whose elements are the covariances between variables. For example, the value in the second row, third column of a covariance matrix indicates the covariance between variables 2 and 3 in the data. Covariance is an unstandardized measure of how much two variables change together. A positive covariance means that as one variable increases, so does the other. A negative covariance means that as one variable increases, the other decreases. A covariance of zero usually indicates no linear relationship between the variables. We can calculate the covariance between two variables using equation 19.8.

$$\operatorname{cov}(a, b) = \frac{\sum_{i=1}^{n} (a_i - \bar{a})(b_i - \bar{b})}{n - 1} \tag{19.8}$$

Note

While covariance is an unstandardized measure of the relationship between two variables, correlation is a standardized measure of that relationship. We can convert covariance into correlation by dividing it by the product of the variables’ standard deviations.

The covariance between a variable and itself is simply that variable’s variance. Therefore, the diagonal elements of a covariance matrix are the variances of each of the variables.

Tip

Covariance matrices are often called variance-covariance matrices for this reason.
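You can check these ideas with R’s `cov()` and `cov2cor()` on a tiny, made-up data frame. The off-diagonal element matches equation 19.8 computed by hand. The diagonal holds the variances. Dividing by the product of the standard deviations gives the correlation matrix.

```r
# A tiny, made-up dataset with three variables
dat <- data.frame(
  a = c(2, 4, 6, 8, 10),
  b = c(1, 3, 2, 5, 4),
  c = c(10, 8, 6, 4, 2)
)

S <- cov(dat)  # 3 x 3 covariance matrix

# Equation 19.8 by hand for variables a and b
n <- nrow(dat)
cov_ab <- sum((dat$a - mean(dat$a)) * (dat$b - mean(dat$b))) / (n - 1)
cov_ab         # 4
S["a", "b"]    # same value, in row a, column b (and in row b, column a)

# The diagonal holds each variable's variance
diag(S)
sapply(dat, var)

# Dividing each covariance by the product of the two standard deviations
# gives the correlation matrix; cov2cor() does the same thing
sds <- sqrt(diag(S))
R_manual <- S / outer(sds, sds)
all.equal(R_manual, cov2cor(S))
R_manual["a", "c"]  # -1: c decreases exactly as a increases
```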

If the EM algorithm only estimated a variance for each Gaussian in each dimension, the axes of the Gaussians would be aligned with the axes of the feature space. Put another way, it would force the model to assume there were no relationships between the variables in the data. It’s usually more sensible to assume there will be some degree of relationship between the variables. Estimating the covariance matrix allows the Gaussians to lie diagonally across the feature space.

Note

Because we estimate the covariance matrix, Gaussian mixture model clustering is insensitive to variables on different scales. Therefore, we don’t need to scale our variables before training the model.

When we’re clustering over more than one dimension, the EM algorithm randomly initializes the centroid, covariance matrix, and prior for each Gaussian. In the expectation step, it then calculates the posterior probability of each case for each Gaussian. In the maximization step, the centroid, covariance matrix, and prior probability are updated for each Gaussian. The EM algorithm continues to iterate until either the maximum number of iterations is reached or the algorithm reaches convergence. The EM algorithm for a bivariate case is illustrated in figure 19.2.

Figure 19.2. The expectation-maximization algorithm for two, two-dimensional Gaussians. Two Gaussians are randomly initialized in the feature space. In the expectation step, the posterior probabilities for each case are calculated for each Gaussian. In the maximization step, the centroids, covariance matrices, and priors are updated for each Gaussian, based on the posteriors. The process continues until the likelihood converges.

The mathematics for the multivariate case

The equations for updating the means and (co)variances are a little more complicated than those we encountered in the univariate case. If you’re interested, here they are.

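The mean of Gaussian k for variable a is

$$\mu_{ka} = \frac{\sum_{i=1}^{n} p(k \mid x_i)\, x_{ia}}{\sum_{i=1}^{n} p(k \mid x_i)}$$

where $x_{ia}$ is the value of variable a for case i.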

The centroid of the Gaussian is therefore just a vector in which each element is the mean of a different variable.

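The covariance between variables a and b for Gaussian k is

$$\Sigma_{k,ab} = \frac{\sum_{i=1}^{n} p(k \mid x_i)\,(x_{ia} - \mu_{ka})(x_{ib} - \mu_{kb})}{\sum_{i=1}^{n} p(k \mid x_i)}$$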

where $\Sigma_k$ is the covariance matrix for Gaussian k, and $\Sigma_{k,ab}$ is its element in row a, column b.

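Finally, in the multivariate case, the likelihood (p(x_i|k)) now needs to take into account the covariance, and so it becomes

$$p(x_i \mid k) = \frac{1}{\sqrt{(2\pi)^d \lvert \Sigma_k \rvert}} \exp\left(-\frac{1}{2}(x_i - \mu_k)^\top \Sigma_k^{-1} (x_i - \mu_k)\right)$$

where $x_i$ is the vector of values for case i, $\mu_k$ is the centroid of Gaussian k, $\lvert \Sigma_k \rvert$ is the determinant of its covariance matrix, and d is the number of variables.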
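This multivariate likelihood can be written out in base R, using `solve()` for the matrix inverse and `det()` for the determinant. In this sketch, `dmvn()` is a hypothetical helper, and the centroid, covariance matrices, and case are invented. With a diagonal covariance matrix, the density equals the product of the one-dimensional densities. Adding a positive covariance lowers the density of a case that deviates in opposite directions on the two variables.

```r
# Multivariate Gaussian density for one case (hypothetical helper)
dmvn <- function(x, mu, Sigma) {
  d <- length(mu)                                 # number of variables
  dev <- x - mu                                   # deviation from the centroid
  quad <- drop(t(dev) %*% solve(Sigma) %*% dev)   # (x - mu)^T Sigma^-1 (x - mu)
  exp(-0.5 * quad) / sqrt((2 * pi)^d * det(Sigma))
}

mu <- c(1, 2)    # made-up centroid
x  <- c(2, 0)    # made-up case: +1 on variable 1, -2 on variable 2

Sigma_diag <- diag(c(4, 9))                     # variances 4 and 9, no covariance
Sigma_cov  <- matrix(c(4, 3, 3, 9), nrow = 2)   # same variances, covariance 3

dmvn(x, mu, Sigma_diag)
dnorm(2, mean = 1, sd = 2) * dnorm(0, mean = 2, sd = 3)  # same value
dmvn(x, mu, Sigma_cov)  # lower: opposite-direction deviations are less likely
```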

Does this process of iteratively updating the position of the clusters, based on how far cases in the data are from them, seem familiar to you? We saw a similar procedure for the k-means algorithms in chapter 16. Gaussian mixture model clustering therefore extends k-means clustering to allow non-spherical clusters of different diameters (due to the covariance matrix) and soft clustering. In fact, if you were to constrain a Gaussian mixture model such that all clusters had the same variance, no covariance, and equal priors, you would get a result very similar to that provided by Lloyd’s algorithm!

In this section, I’ll show you how to build a Gaussian mixture model for clustering. We’ll continue using our Swiss banknote dataset so we can compare the results to the DBSCAN and OPTICS clustering results. An immediate advantage of mixture model clustering over DBSCAN and OPTICS is that it is invariant to variables on different scales, so there’s no need to scale our data first.

Note

For correctness, I should say that there’s no need to scale our data as long as we make no prior specifications of the covariances of the model components. It’s possible to specify our prior beliefs about the means and covariances of the components, though we won’t do that here. If we were to do this, it would be important for the covariances to take into account the scale of the data.

The mlr package doesn’t have an implementation of the mixture modeling algorithm we’re going to use, so instead we’ll use functions from the mclust package. Let’s start by loading the package:

library(mclust)

There are a few things I particularly like about using the mclust package for clustering. The first is that it’s the only R package I know of that prints a cool logo to the console when you load it. The second is that it displays a progress bar to indicate how much longer your clustering will take (very important for judging whether there’s time for a cup of tea). And third, its function for fitting the model will automatically try a range of cluster numbers and try to select the best-fitting number. We can also manually specify the number of clusters if we think we know better.

Let’s use the Mclust() function to perform the clustering and then call plot() on the results.

Listing 19.1. Performing and plotting mixture model clustering

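# Fit mixture models over a range of cluster numbers and covariance structures
swissMclust <- Mclust(swissTib)
# Interactive menu of diagnostic plots (enter 0 to exit)
plot(swissMclust)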

Plotting the Mclust() output does something a little odd (and irritating, as far as I’m concerned). It prompts us to enter a number from 1 to 4, corresponding to one of the following options:

- BIC

- Classification

- Uncertainty

- Density

Entering a number draws the corresponding plot, each of which contains useful information. Let’s look at each of these plots in turn.

The first plot available to us shows the Bayesian information criterion (BIC) for the range of cluster numbers and model types the Mclust() function tried. This plot is shown in figure 19.3. The BIC is a metric for comparing the fit of different models, and it penalizes us for having too many parameters in the model. The BIC is usually defined as in equation 19.9.

$$\text{BIC} = p \ln(n) - 2 \ln(L) \tag{19.9}$$

where n is the number of cases, p is the number of parameters in the model, and L is the overall likelihood of the model.

Therefore, for a fixed likelihood, as the number of parameters increases, the BIC increases. Conversely, for a fixed number of parameters, as the model likelihood increases, the BIC decreases. Therefore, the smaller the BIC, the better and/or more parsimonious our model is. Imagine that we had two models, each of which fit the dataset equally well, but one had 3 parameters and the other had 10. The model with 3 parameters would have the lower BIC.
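Equation 19.9 makes this comparison easy to check numerically. The log-likelihood of -500 and the 200 cases below are hypothetical values. They are chosen only to show that, at equal fit, the 10-parameter model receives the larger penalty. `bic_19_9()` is an illustrative helper name.

```r
# Equation 19.9: BIC = p * ln(n) - 2 * ln(L), where smaller is better
bic_19_9 <- function(loglik, p, n) p * log(n) - 2 * loglik

n <- 200        # hypothetical number of cases
loglik <- -500  # hypothetical log-likelihood, identical for both models

bic_3  <- bic_19_9(loglik, p = 3,  n = n)
bic_10 <- bic_19_9(loglik, p = 10, n = n)
c(bic_3 = bic_3, bic_10 = bic_10)
bic_10 - bic_3  # 7 * log(200), about 37.1
```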

Figure 19.3. The BIC plot from our mclust model. The x-axis shows the number of clusters, the y-axis shows the Bayesian information criterion (BIC), and each line shows a different model, with the three-letter code indicating which constraints are put on the covariance matrix. In this arrangement of the BIC, higher values indicate better-fitting and/or more parsimonious models.

The form of the BIC shown in the plot is actually the other way around and takes the form shown in equation 19.10. After being rearranged this way, better-fitting and/or more parsimonious models will have a higher BIC value.

$$\text{BIC} = 2 \ln(L) - p \ln(n) \tag{19.10}$$
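As a check on the sign convention, this sketch fits a model to R’s built-in `faithful` data, a stand-in for `swissTib` so the snippet runs on its own. It then recomputes equation 19.10 from the fitted object. I am assuming the `loglik`, `df` (number of estimated parameters), `n`, and `bic` components documented for `Mclust` objects. Check `?Mclust` for your installed version.

```r
library(mclust)

fit <- Mclust(faithful, verbose = FALSE)  # verbose = FALSE hides the progress bar

# Equation 19.10: BIC = 2 ln(L) - p ln(n), so larger values are better
manual_bic <- 2 * fit$loglik - fit$df * log(fit$n)
c(reported = fit$bic, manual = manual_bic)
```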

Now we know what the BIC is and how to interpret it, but what are all the lines in figure 19.3? Well, the Mclust() function tries a range of cluster numbers for us, for a range of different model types. For each combination of model type and cluster number, the function evaluates the BIC. This information is conveyed in our BIC plot. But what do I mean by model types? I didn’t mention anything about this when I showed you how Gaussian mixture models work. When we train a mixture model, it’s possible to put constraints on the covariance matrix to reduce the number of parameters needed to describe the model. This can help to prevent overfitting the data.

Each of the model types is represented by a different line in figure 19.3, and each has a strange three-letter code identifying it. The first letter of each code refers to the volume of each Gaussian, the second letter refers to the shape, and the third letter refers to the orientation. Each of these components can take one of the following values:

- E for equal

- V for variable

The shape and orientation components can also take a value of I for identity. The effects of these values on the models are as follows:

- Volume component:

  - E: Gaussians with equal volume

  - V: Gaussians with different volumes

- Shape component:

  - E: Gaussians with equal aspect ratios

  - V: Gaussians with different aspect ratios

  - I: Clusters that are perfectly spherical

- Orientation component:

  - E: Gaussians with the same orientation through the feature space

  - V: Gaussians with different orientations

  - I: Clusters that are orthogonal to the axes of the feature space
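To see how these constraints reduce the number of parameters, mclust’s `nMclustParams()` function reports the number of estimated parameters for a given model name, number of dimensions, and number of Gaussians. The choice of six variables and three Gaussians here is only illustrative.

```r
library(mclust)

models <- c("EII", "VII", "EEE", "VVE", "VVV")
# Estimated parameters (means, covariance terms, mixing proportions)
# for three Gaussians in six dimensions
n_params <- sapply(models, function(m) nMclustParams(m, d = 6, G = 3))
n_params
```

The fully unconstrained VVV model estimates a separate covariance matrix for every Gaussian, so it needs the most parameters. That is exactly what the BIC penalizes.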

So really, the Mclust() function is performing a tuning experiment for us and will automatically select the model with the highest BIC value. In this case, the best model is the one that uses the VVE covariance matrix with three Gaussians (use swissMclust$modelName and swissMclust$G to extract this information).
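You don’t have to let `Mclust()` search every combination. This sketch again uses `faithful` as a stand-in. It restricts the tuning to one to four Gaussians and two covariance structures via the `G` and `modelNames` arguments, and it turns off the progress bar with `verbose = FALSE`.

```r
library(mclust)

fit <- Mclust(faithful, G = 1:4, modelNames = c("EII", "VVV"), verbose = FALSE)

summary(fit$BIC)  # best few model/cluster-number combinations by BIC
fit$modelName     # selected covariance structure
fit$G             # selected number of Gaussians
```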

Figure 19.4. The classification plot from our mclust model. All variables in the original data are plotted against each other in a scatterplot matrix, with cases shaded and shaped according to their cluster. Ellipses indicate the covariances of each Gaussian, and stars indicate their centroids.

That’s the first plot, which is certainly useful. Perhaps the most useful plot, however, is the one obtained from option 2. It shows us our final clustering result from the selected model (see figure 19.4). The ellipses indicate the covariances of each cluster, and the star at the center of each indicates its centroid. The model appears to fit the data well and seems to have identified three reasonably convincing clusters, compared to the two identified by our DBSCAN model (though we should use internal cluster metrics and Jaccard indices to compare the models more objectively).

The third plot is similar to the second, but it sets the size of each case based on its uncertainty (see figure 19.5). A case whose posterior probabilities aren’t dominated by a single Gaussian will have a high uncertainty, and this plot helps us identify cases that could be considered outliers.

Figure 19.5. The uncertainty plot from our mclust model. This plot is similar to the classification plot, except that the size of each case corresponds to its uncertainty under the final model.
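The posterior probabilities behind the classification and uncertainty plots are stored in the fitted object, and they are what make this a soft clustering method. The sketch below uses `faithful` so it runs on its own. It pulls out the `z` matrix of posteriors and compares `classification` with the most probable cluster. It then recomputes the uncertainty as one minus the largest posterior and applies the hypothetical 60% cut-off from the earlier note to flag candidate outliers. I am assuming mclust defines uncertainty this way. The `all.equal()` line lets you confirm it on your installation.

```r
library(mclust)

fit <- Mclust(faithful, verbose = FALSE)

post <- fit$z               # n x G matrix: posterior probability per case and cluster
head(round(post, 3))
rowSums(head(post))         # each row sums to 1

hard <- fit$classification  # most probable cluster for each case
max_post <- apply(post, 1, max)
# Assumed definition: uncertainty = 1 - largest posterior probability
all.equal(unname(fit$uncertainty), unname(1 - max_post))

# Hypothetical rule from the earlier note: flag cases whose most probable
# cluster has less than a 60% posterior probability
possible_outliers <- which(max_post < 0.6)
length(possible_outliers)
```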

The fourth and final plot shows the density of the final mixture model (see figure 19.6). I find this plot less useful, but it looks quite cool. To exit Mclust()’s plot() method, you need to enter 0 (which is why I find this irritating).
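If the menu gets in the way, the `plot()` method for `Mclust` objects also takes a `what` argument that draws a single plot without prompting. The sketch uses `faithful` so it is self-contained. With the chapter’s model, you would pass `swissMclust` instead.

```r
library(mclust)

fit <- Mclust(faithful, verbose = FALSE)

# Draw each diagnostic plot directly instead of choosing from the menu
plot(fit, what = "BIC")
plot(fit, what = "classification")
plot(fit, what = "uncertainty")
plot(fit, what = "density")
```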

While it often isn’t easy to tell which algorithms will perform well for a given task, here are some strengths and weaknesses that will help you decide whether mixture model clustering will perform well for you.

The strengths of mixture model clustering are as follows:

- It can identify non-spherical clusters of different diameters.

- It estimates the probability that a case belongs to each cluster.

- It is insensitive to variables on different scales.

Figure 19.6. The density plot from our mclust model. This matrix of plots shows the 2D density of the final model for each combination of variables in the feature space.

The weaknesses of mixture model clustering are these:

- While the clusters need not be spherical, they do need to be elliptical.

- It cannot natively handle categorical variables.

- It cannot, by itself, select the optimal number of clusters. The number of Gaussians has to be tuned, for example by comparing BIC values as Mclust() does.

- Due to the randomness of the initial Gaussians, it has the potential to converge to a locally optimal model.

- It is sensitive to outliers.

- If the clusters cannot be approximated by a multivariate Gaussian, it’s unlikely the final model will fit well.

Exercise 1

Use the Mclust() function to train a model, setting the G argument to 2 and the modelNames argument to "VVE" to force a VVE model with two clusters. Plot the results, and examine the clusters.

Exercise 2

Using the clusterboot() function, calculate the stability of the clusters generated from a two-cluster and a three-cluster VVE model. Hint: Use noisemclustCBI as the clustermethod argument to use mixture modeling. Is it easy to compare the Jaccard indices of models with different numbers of clusters?

- Gaussian mixture model clustering fits a set of Gaussian distributions to the data and estimates the probability of the data coming from each Gaussian.

- The expectation-maximization (EM) algorithm is used to iteratively update the model until the likelihood of the data converges.

- Gaussian mixture modeling is a soft-clustering method that gives us a probability of each case belonging to each cluster.

- In one dimension, the EM algorithm only needs to update the mean, variance, and prior probability of each Gaussian.

- In more than one dimension, the EM algorithm needs to update the centroid, covariance matrix, and prior probability of each Gaussian.

- Constraints can be placed on the covariance matrix to control the volume, shape, and orientation of the Gaussians.

- Train a VVE mixture model with two clusters:

swissMclust2 <- Mclust(swissTib, G = 2, modelNames = "VVE")
plot(swissMclust2)

- Compare the cluster stability of a two- and three-cluster mixture model:

library(fpc)

# Bootstrap the stability of a two-cluster VVE mixture model
mclustBoot2 <- clusterboot(swissTib, B = 10,
                           clustermethod = noisemclustCBI,
                           G = 2, modelNames = "VVE",
                           showplots = FALSE)

# Bootstrap the stability of a three-cluster VVE mixture model
mclustBoot3 <- clusterboot(swissTib, B = 10,
                           clustermethod = noisemclustCBI,
                           G = 3, modelNames = "VVE",
                           showplots = FALSE)

mclustBoot2
mclustBoot3

# It can be challenging to compare the Jaccard indices between models with
# different numbers of clusters. The model with three clusters may better
# represent nature, but as one of the clusters is small, the membership is
# more variable between bootstrap samples.