This chapter covers

- Working with the naive Bayes algorithm

- Understanding the support vector machine algorithm

- Tuning many hyperparameters simultaneously with a random search

The naive Bayes and support vector machine (SVM) algorithms are supervised learning algorithms for classification. Each algorithm learns in a different way. The naive Bayes algorithm uses Bayes’ rule, which you learned about in chapter 5, to estimate the probability of new data belonging to one of the classes in the dataset. The case is then assigned to the class with the highest probability. The SVM algorithm looks for a hyperplane (a surface that has one less dimension than there are predictor variables) that separates the classes. The position and direction of this hyperplane depend on support vectors: cases that lie closest to the boundary between the classes.

Note

The naive Bayes and SVM algorithms have different properties that make each suitable in different circumstances. For example, naive Bayes can mix both continuous and categorical predictors natively, while for SVMs, categorical variables must first be recoded into a numerical format. On the other hand, SVMs are excellent at finding decision boundaries between classes that are not linearly separable, by adding a new dimension to the data that reveals a linear boundary. The naive Bayes algorithm will rarely outperform an SVM trained on the same problem, but naive Bayes tends to perform well for problems like spam detection and text classification.

Models trained using naive Bayes also have a probabilistic interpretation. For each case on which the model makes predictions, the model outputs the probability of that case belonging to one class over another, giving us a measure of certainty in our prediction. This is useful for situations in which we may want to further scrutinize cases with probabilities close to 50%. Conversely, models trained using the SVM algorithm typically don’t output easily interpretable probabilities, but have a geometric interpretation. In other words, they partition the feature space and classify cases based on which partition they fall within. SVMs are more computationally expensive to train than naive Bayes models, so if a naive Bayes model performs well for your problem, there may be no reason to choose a model that is more computationally expensive to train.

By the end of this chapter, you’ll know how the naive Bayes and SVM algorithms work and how to apply them to your data. You will also have learned how to tune several hyperparameters simultaneously, because the SVM algorithm has many of them. And you will understand how to apply the more pragmatic approach of using a random search, instead of the grid search we applied in chapter 3, to find the combination of hyperparameters that performs best.

In the last chapter, I introduced you to Bayes’ rule (named after the mathematician Thomas Bayes). I showed how discriminant analysis algorithms use Bayes’ rule to predict the probability of a case belonging to each of the classes, based on its discriminant function values. The naive Bayes algorithm works in exactly the same way, except that it doesn’t perform dimension reduction as discriminant analysis does, and it can handle categorical, as well as continuous, predictors. In this section, I hope to convey a deeper understanding of how Bayes’ rule works with a few examples.

Imagine that 0.2% of the population have unicorn disease (symptoms include obsession with glitter and compulsive rainbow drawing). The test for unicorn disease has a true positive rate of 90% (if you have the disease, the test will detect it 90% of the time). When tested, 5% of the whole population get a positive result from the test. Based on this information, if you get a positive result from the test, what is the probability you have unicorn disease?

Many people’s instinct is to say 90%, but this doesn’t account for how prevalent the disease is and the proportion of tests that are positive (which also includes false positives). So how do we estimate the probability of having the disease, given a positive test result? Well, we use Bayes’ rule. Let’s remind ourselves of what Bayes’ rule is:

- p(k|x) = (p(x|k) × p(k)) / p(x)

Where

- p(k|x) is the probability of having the disease (k) given a positive test result (x). This is called the posterior probability.

- p(x|k) is the probability of getting a positive test result if you do have the disease. This is called the likelihood.

- p(k) is the probability of having the disease regardless of any test. This is the proportion of people in the population with the disease and is called the prior probability.

- p(x) is the probability of getting a positive test result and includes the true positives and false positives. This is called the evidence.

We can rewrite this in plain English:

- posterior = (likelihood × prior) / evidence

So our likelihood (the probability of getting a positive test result if we do have unicorn disease) is 90%, or 0.9 expressed as a decimal. Our prior probability (the proportion of people with unicorn disease) is 0.2%, or 0.002 as a decimal. Finally, the evidence (the probability of getting a positive test result) is 5%, or 0.05 as a decimal. You can see all these values illustrated in figure 6.1. Now we simply substitute these values into Bayes’ rule:

- posterior = (0.9 × 0.002) / 0.05 = 0.036

Phew! After taking into account the prevalence of the disease and the proportion of tests that are positive (including false positives), a positive test means we have only a 3.6% chance of actually having the disease, which is much better than 90%! This is the power of Bayes’ rule: it allows you to incorporate prior information to get a more accurate estimation of conditional probabilities (the probability of something, given the data).
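The unicorn disease calculation can be checked with a few lines of base R. This is only a minimal sketch: the three input values come from the example, while the variable names and the implied false positive rate at the end are illustrative additions, not something taken from the chapter.

```r
# Values taken from the unicorn disease example
prior      <- 0.002  # p(k): proportion of the population with the disease
likelihood <- 0.9    # p(x|k): true positive rate of the test
evidence   <- 0.05   # p(x): proportion of all tests that come back positive

# Bayes' rule: posterior = likelihood * prior / evidence
posterior <- likelihood * prior / evidence
posterior

# The evidence is made up of true positives plus false positives, so we can
# back out the false positive rate these numbers imply (a derived quantity)
truePositives     <- likelihood * prior
falsePositives    <- evidence - truePositives
falsePositiveRate <- falsePositives / (1 - prior)
falsePositiveRate
```

Almost all of the 5% of positive tests are false positives, which is why the posterior probability is so much lower than the test's 90% true positive rate.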

Figure 6.1. Using Bayes’ rule to calculate the posterior probability of having unicorn disease, given a positive test result. The priors are the proportion of people with or without the disease. The likelihoods are the probabilities of getting positive or negative test results for each disease status. The evidence is the probability of getting a positive test result (true positives plus the false positives).

Let’s take another, more machine learning-focused, example. Imagine that you have a database of tweets from the social media platform Twitter, and you want to build a model that automatically classifies each tweet into a topic. The topics are

- Politics

- Sports

- Movies

- Other

You create four categorical predictor variables:

- Whether the word opinion is present

- Whether the word score is present

- Whether the word game is present

- Whether the word cinema is present

Note

I’m keeping things simple for this example. If we were really trying to build a model to predict tweet topics, we would need to include many more words than this!

For each of our four topics, we can express the probability of a case belonging to that topic as

- p(topic|words) = (p(words|topic) × p(topic)) / p(words)

Now that we have more than one predictor variable, p(words|topic) is the likelihood of a tweet having that exact combination of words present, given the tweet is in that topic. We estimate this by finding the likelihood of having this combination of values of each predictor variable individually, given that the tweet belongs to that topic, and multiply them together. This looks like this:

- p(words|topic) = p(opinion|topic) × p(score|topic) × p(game|topic) × p(cinema|topic)

For example, if a tweet contains the words opinion, score, and game, but not cinema, then the likelihood would be as follows for any particular topic:

- p(words|topic) = p(opinion = yes|topic) × p(score = yes|topic) × p(game = yes|topic) × p(cinema = no|topic)

Now, the likelihood of a tweet containing a certain word if it’s in a particular topic is simply the proportion of tweets from that topic that contain that word. Multiplying the likelihoods together from each predictor variable gives us the likelihood of observing this combination of predictor variable values (this combination of words), given a particular class.

This is what makes naive Bayes “naive.” By estimating the likelihood for each predictor variable individually and then multiplying them, we are making the very strong assumption that the predictor variables are independent. In other words, we are assuming that the value of one variable has no relationship to the value of another one. In the majority of cases, this assumption is not true. For example, if a tweet contains the word score, it may be more likely to also include the word game.

In spite of this naive assumption being wrong quite often, naive Bayes tends to perform well even in the presence of non-independent predictors. Having said this, strongly dependent predictor variables will impact performance.

So the likelihood and prior probabilities are fairly simple to compute and are the parameters learned by the algorithm; but what about the evidence (p(words))? In practice, because the values of the predictor variables are usually reasonably unique to each case in the data, calculating the evidence (the probability of observing that combination of values) is very difficult. As the evidence is really just a normalizing constant that makes all the posterior probabilities sum to 1, we can discard it and simply multiply the likelihood and prior probability:

- posterior ∝ likelihood × prior

Note that instead of an = sign, I use ∝ to mean “proportional to,” because without the evidence to normalize the equation, the posterior is no longer equal to the likelihood times the prior. This is okay, though, because proportionality is good enough to find the most likely class. Now, for each tweet, we calculate the relative posterior probability for each of the topics:

- p(politics|words) ∝ p(words|politics) × p(politics)

- p(sports|words) ∝ p(words|sports) × p(sports)

- p(movies|words) ∝ p(words|movies) × p(movies)

- p(other|words) ∝ p(words|other) × p(other)

Then we assign the tweet to the topic with the highest relative posterior probability.
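To make the tweet example concrete, here is a rough base R sketch of the scoring step. All of the priors and word proportions below are hypothetical numbers invented for illustration (the chapter gives none), and the object names are assumptions too.

```r
topics <- c("politics", "sports", "movies", "other")

# HYPOTHETICAL priors: proportion of training tweets in each topic
prior <- c(politics = 0.3, sports = 0.3, movies = 0.2, other = 0.2)

# HYPOTHETICAL p(word present | topic); rows are words, columns are topics
pPresent <- rbind(
  opinion = c(0.60, 0.20, 0.30, 0.10),
  score   = c(0.05, 0.70, 0.20, 0.05),
  game    = c(0.05, 0.80, 0.10, 0.05),
  cinema  = c(0.01, 0.01, 0.60, 0.02)
)
colnames(pPresent) <- topics

# A tweet containing opinion, score, and game, but not cinema
tweet <- c(opinion = TRUE, score = TRUE, game = TRUE, cinema = FALSE)

# Per-word likelihood: p(present) if the word is present, 1 - p(present) if not
perWord <- pPresent
perWord[!tweet, ] <- 1 - pPresent[!tweet, ]

# The "naive" step: multiply the per-word likelihoods within each topic
likelihood <- apply(perWord, 2, prod)

# Relative posterior is proportional to likelihood times prior
relPosterior <- likelihood * prior[topics]

# Dividing by the sum restores probabilities that sum to 1
posterior <- relPosterior / sum(relPosterior)
round(posterior, 3)

# Assign the tweet to the topic with the highest relative posterior
predictedTopic <- names(which.max(relPosterior))
predictedTopic
```

Normalizing by the sum is optional for classification: the ranking of topics is the same before and after, which is why the evidence can be discarded.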

When we have a categorical predictor (such as whether a word is present or not), naive Bayes uses the proportion of training cases in that particular class with that value of the predictor. When we have a continuous variable, naive Bayes (typically) assumes that the data within each group is normally distributed. The probability density of each case based on this fitted normal distribution is then used to estimate the likelihood of observing this value of the predictor in that class. In this way, cases near the mean of the normal distribution for a particular class will have high probability density for that class, and cases far away from the mean will have a low probability density. This is the same way you saw discriminant analysis calculate the likelihood in figure 5.7 in chapter 5.

When your data has a mixture of categorical and continuous predictors, because naive Bayes assumes independence between data values, it simply uses the appropriate method for estimating the likelihood, depending on whether each predictor is categorical or continuous.
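For a continuous predictor, the likelihood described above can be sketched with base R's dnorm(). The predictor (tweet length in characters) and its values are hypothetical and exist only to illustrate the idea.

```r
# HYPOTHETICAL continuous predictor: tweet length for two topics
sportsLen <- c(40, 55, 60, 48, 52)
moviesLen <- c(90, 110, 100, 95, 105)

# "Fit" a normal distribution per class by estimating its mean and sd
fitNormal <- function(x) list(mean = mean(x), sd = sd(x))
sportsFit <- fitNormal(sportsLen)
moviesFit <- fitNormal(moviesLen)

# Likelihood of a new value = its probability density under each class
newLen    <- 58
likSports <- dnorm(newLen, mean = sportsFit$mean, sd = sportsFit$sd)
likMovies <- dnorm(newLen, mean = moviesFit$mean, sd = moviesFit$sd)
c(sports = likSports, movies = likMovies)
```

The new value sits close to the mean of the sports group and far from the mean of the movies group, so its density (and therefore its likelihood) is much higher for sports. With mixed predictors, densities like these are simply multiplied together with the categorical proportions.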

In this section, I’ll teach you how to build and evaluate the performance of a naive Bayes model to predict political party affiliation. Imagine that you’re a political scientist. You’re looking for common voting patterns in the mid-1980s that would predict whether a US congressperson was a Democrat or Republican. You have the voting record of each member of the House of Representatives in 1984, and you identify 16 key votes that you believe most strongly split the two political parties. Your job is to train a naive Bayes model to predict whether a congressperson was a Democrat or a Republican, based on how they voted throughout the year. Let’s start by loading the mlr and tidyverse packages:

library(mlr)

library(tidyverse)

Now let’s load the data, which is built into the mlbench package, convert it into a tibble (with as_tibble()), and explore it.

Note

Remember that a tibble is just a tidyverse version of a data frame that helps make our lives a little easier.

We have a tibble containing 435 cases and 17 variables of members of the House of Representatives in 1984. The Class variable is a factor indicating political party membership, and the other 16 variables are factors indicating how the individuals voted on each of the 16 votes. A value of y means they voted in favor, a value of n means they voted against, and a missing value (NA) means the individual either abstained or did not vote. Our goal is to train a model that can use the information in these variables to predict whether a congressperson was a Democrat or Republican, based on how they voted.

Listing 6.1. Loading and exploring the HouseVotes84 dataset

data(HouseVotes84, package = "mlbench")

votesTib <- as_tibble(HouseVotes84)

votesTib

# A tibble: 435 x 17
   Class   V1    V2    V3    V4    V5    V6    V7    V8    V9    V10
   <fct>   <fct> <fct> <fct> <fct> <fct> <fct> <fct> <fct> <fct> <fct>
 1 repu... n     y     n     y     y     y     n     n     n     y
 2 repu... n     y     n     y     y     y     n     n     n     n
 3 demo... NA    y     y     NA    y     y     n     n     n     n
 4 demo... n     y     y     n     NA    y     n     n     n     n
 5 demo... y     y     y     n     y     y     n     n     n     n
 6 demo... n     y     y     n     y     y     n     n     n     n
 7 demo... n     y     n     y     y     y     n     n     n     n
 8 repu... n     y     n     y     y     y     n     n     n     n
 9 repu... n     y     n     y     y     y     n     n     n     n
10 demo... y     y     y     n     n     n     y     y     y     n
# ... with 425 more rows, and 6 more variables: V11 <fct>, V12 <fct>,
#   V13 <fct>, V14 <fct>, V15 <fct>, V16 <fct>

Note

Ordinarily I would manually give names to unnamed columns to make it clearer what I’m working with. In this example, the variable names are the names of votes and are a little cumbersome, so we’ll stick with V1, V2, and so on. If you want to see what issue each vote was for, run ?mlbench::HouseVotes84.

It looks like we have a few missing values (NAs) in our tibble. Let’s summarize the number of missing values in each variable using the map_dbl() function. Recall from chapter 2 that map_dbl() iterates a function over every element of a vector/list (or, in this case, every column of a tibble), applies a function to that element, and returns a vector containing the function output.

The first argument to the map_dbl() function is the name of the data we’re going to apply the function to, and the second argument is the function we want to apply. I’ve chosen to use an anonymous function (using the ~ symbol as shorthand for function(.)).

Note

Recall from chapter 2 that an anonymous function is a function that we define on the fly instead of pre-defining a function and assigning it to an object.

Our function passes each vector to sum(is.na(.)) to count the number of missing values in that vector. This function is applied to each column of the tibble and returns the number of missing values for each.

Listing 6.2. Using the map_dbl() function to show missing values

map_dbl(votesTib, ~sum(is.na(.)))

Class V1 V2 V3 V4 V5 V6 V7 V8 V9 V10

0 12 48 11 11 15 11 14 15 22 7

V11 V12 V13 V14 V15 V16

21 31 25 17 28 104

Every column in our tibble has missing values except the Class variable! Luckily, the naive Bayes algorithm can handle missing data in two ways:

- By omitting the variables with missing values for a particular case, but still using that case to train the model

- By omitting that case entirely from the training set

By default, the naive Bayes implementation that mlr uses is to keep cases and drop variables. This usually works fine if the ratio of missing to complete values for the majority of cases is quite small. However, if you have a small number of variables and a large proportion of missing values, you may wish to omit the cases instead (and, more broadly, consider whether your dataset is sufficient for training).

Exercise 1

Use the map_dbl() function as we did in listing 6.2 to count the number of y values in each column of votesTib. Hint: Use which(. == "y") to return the rows in each column that equal y.

Let’s plot our data to get a better understanding of the relationships between political party and votes. Once again, we’ll use our trick to gather the data into an untidy format so we can facet across the predictors. Because we’re plotting categorical variables against each other, we set the position argument of the geom_bar() function to "fill", which creates stacked bars for y, n, and NA responses that sum to 1.

Listing 6.3. Plotting the HouseVotes84 dataset

votesUntidy <- gather(votesTib, "Variable", "Value", -Class)

ggplot(votesUntidy, aes(Class, fill = Value)) +
  facet_wrap(~ Variable, scales = "free_y") +
  geom_bar(position = "fill") +
  theme_bw()

The resulting plot is shown in figure 6.2. We can see there are some very clear differences in opinion between Democrats and Republicans!

Figure 6.2. Filled bar charts showing the proportion of Democrats and Republicans that voted for (y) or against (n) or abstained (NA) on 16 different votes.

Now let’s create our task and learner, and build our model. We set the Class variable as the classification target of the makeClassifTask() function, and the algorithm we supply to the makeLearner() function is "classif.naiveBayes".

Listing 6.4. Creating the task and learner, and training the model

votesTask <- makeClassifTask(data = votesTib, target = "Class")

bayes <- makeLearner("classif.naiveBayes")

bayesModel <- train(bayes, votesTask)

Next, we’ll use 10-fold cross-validation repeated 50 times to evaluate the performance of our model-building procedure. Again, because this is a two-class classification problem, we have access to the false positive rate and false negative rate, and so we ask for these as well in the measures argument to the resample() function.

Listing 6.5. Cross-validating the naive Bayes model

kFold <- makeResampleDesc(method = "RepCV", folds = 10, reps = 50,
                          stratify = TRUE)

bayesCV <- resample(learner = bayes, task = votesTask,
                    resampling = kFold,
                    measures = list(mmce, acc, fpr, fnr))

bayesCV$aggr

mmce.test.mean acc.test.mean fpr.test.mean fnr.test.mean

0.09820658 0.90179342 0.08223529 0.10819658

Our model correctly predicts 90% of test set cases in our cross-validation. That’s not bad! Now let’s use our model to predict the political party of a new politician, based on their votes.

Listing 6.6. Using the model to make predictions

politician <- tibble(V1 = "n", V2 = "n", V3 = "y", V4 = "n", V5 = "n",
                     V6 = "y", V7 = "y", V8 = "y", V9 = "y", V10 = "y",
                     V11 = "n", V12 = "y", V13 = "n", V14 = "n",
                     V15 = "y", V16 = "n")

politicianPred <- predict(bayesModel, newdata = politician)

getPredictionResponse(politicianPred)

[1] democrat

Levels: democrat republican


Our model predicts that the new politician is a Democrat.

Exercise 2

While it often isn’t easy to tell which algorithms will perform well for a given task, here are some strengths and weaknesses that will help you decide whether naive Bayes will perform well for your task.

The strengths of naive Bayes are as follows:

- It can handle both continuous and categorical predictor variables.

- It’s computationally inexpensive to train.

- It commonly performs well on topic classification problems where we want to classify documents based on the words they contain.

- It has no hyperparameters to tune.

- It is probabilistic and outputs the probabilities of new data belonging to each class.

- It can handle cases with missing data.

The weaknesses of naive Bayes are these:

- It assumes that continuous predictor variables are normally distributed (typically), and performance will suffer if they’re not.

- It assumes that predictor variables are independent of each other, which usually isn’t true. Performance will suffer if this assumption is severely violated.

In this section, you’ll learn how the SVM algorithm works and how it can add an extra dimension to data to make the classes linearly separable. Imagine that you would like to predict whether your boss will be in a good mood or not (a very important machine learning application). Over a couple of weeks, you record the number of hours you spend playing games at your desk and how much money you make the company each day. You also record your boss’s mood the next day as good or bad (they’re very binary). You decide to use the SVM algorithm to build a classifier that will help you decide whether you need to avoid your boss on a particular day. The SVM algorithm will learn a linear hyperplane that separates the days your boss is in a good mood from the days they are in a bad mood. The SVM algorithm is also able to add an extra dimension to the data to find the best hyperplane.

Take a look at the data shown in figure 6.3. The plots show the data you recorded on the mood of your boss, based on how hard you’re working and how much money you’re making the company.

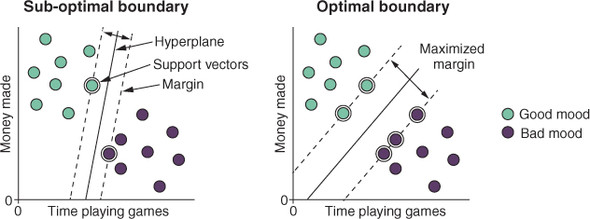

The SVM algorithm finds an optimal linear hyperplane that separates the classes. A hyperplane is a surface that has one less dimension than there are predictor variables in the dataset. For a two-dimensional feature space, such as in the example in figure 6.3, a hyperplane is simply a straight line. For a three-dimensional feature space, a hyperplane is a surface. It’s hard to picture hyperplanes in a feature space with four or more dimensions, but the principle is the same: they are surfaces that cut through the feature space.

Figure 6.3. The SVM algorithm finds a hyperplane (solid line) that passes through the feature space. An optimal hyperplane is one that maximizes the margin around itself (dotted lines). The margin is a region around the hyperplane that touches the fewest cases. Support vectors are shown with double circles.

For problems where the classes are fully linearly separable, there may be many different hyperplanes that do just as good a job at separating the classes in the training data. To find an optimal hyperplane (which will, hopefully, generalize better to unseen data), the algorithm finds the hyperplane that maximizes the margin around itself. The margin is a distance around the hyperplane that touches the fewest training cases. The cases in the data that touch the margin are called support vectors because they support the position of the hyperplane (hence, the name of the algorithm).

The support vectors are the most important cases in the training set because they define the boundary between the classes. Not only this, but the hyperplane that the algorithm learns is entirely dependent on the position of the support vectors and none of the other cases in the training set. Take a look at figure 6.4. If we move the position of one of the support vectors, then we move the position of the hyperplane. If, however, we move a non-support vector case, there is no influence on the hyperplane at all!

Figure 6.4. The position of the hyperplane is entirely dependent on the position of support vectors. Moving a support vector moves the hyperplane from its original position (dotted line) to a new position (top two plots). Moving a non-support vector has no impact on the hyperplane (bottom two plots).

SVMs are extremely popular right now. That’s mainly for three reasons:

- They are good at finding ways of separating non-linearly separable classes.

- They tend to perform well for a wide variety of tasks.

- We now have the computational power to apply them to larger, more complex datasets.

This last point is important because it highlights a potential downside of SVMs: they tend to be more computationally expensive to train than many other classification algorithms. For this reason, if you have a very large dataset, and computational power is limited, it may be economical for you to try cheaper algorithms first and see how they perform.

Tip

Usually, we favor predictive performance over speed. But a computationally cheap algorithm that performs well enough for your problem may be preferable to you than one that is very expensive. Therefore, I may try cheaper algorithms before trying the expensive ones.

How does the SVM algorithm find the optimal hyperplane?

The math underpinning how SVMs work is complex, but if you’re interested, here are some of the basics of how the hyperplane is learned. Recall from chapter 4 that the equation for a straight line can be written as y = ax + b, where a and b are the slope and y-intercept of the line, respectively. We could rearrange this equation to be y – ax – b = 0 by shifting all the terms onto one side of the equals sign. Using this formulation, we can say that any point that falls on the line is one that satisfies this equation (the expression will equal zero).

You’ll often see the equation for a hyperplane given as wx + b = 0, where w is the vector (–b –a 1), x is the vector (1 x y), and b is still the intercept. In the same way that any point that lies on a straight line satisfies y – ax – b = 0, any point that falls on a hyperplane satisfies the equation wx + b = 0.

The vector w is orthogonal or normal to the hyperplane. Therefore, by changing the intercept, b, we can create new hyperplanes that are parallel to the original. By changing b (and rescaling w) we can arbitrarily define the hyperplanes that mark the margins as wx + b = –1 and wx + b = +1. The distance between these margins is given by 2/||w||, where ||w|| is the length (Euclidean norm) of w, that is, the square root of the sum of its squared elements. As we want to find the hyperplane that maximizes this distance, we need to minimize ||w|| while ensuring that each case is classified correctly. The algorithm does this by making sure all cases in one class lie below wx + b = –1 and all cases in the other class lie above wx + b = +1. A simple way of doing this is to multiply the predicted value of each case by its corresponding label (–1 or +1), making all outputs positive. This creates the constraint that the margins must satisfy yi(wxi + b) ≥ 1. The SVM algorithm therefore tries to solve the following minimization problem:

- minimize ||w|| subject to yi(wxi + b) ≥ 1 for i = 1 ... N.
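The constraint and the margin width can be checked numerically. The sketch below uses a tiny hypothetical two-class dataset and a hand-picked w and b (none of these values come from the chapter); it does not solve the optimization, it only verifies a candidate hyperplane.

```r
# HYPOTHETICAL training cases (rows) with two predictors, and their labels
X <- rbind(c(2, 2), c(3, 3), c(0, 0), c(-1, 0))
y <- c(1, 1, -1, -1)

# A candidate hyperplane w.x + b = 0 (hand-picked for illustration)
w <- c(0.5, 0.5)
b <- -1

# Value of w.x + b for every case
f <- as.vector(X %*% w + b)

# Multiplying by the label makes every correctly placed case >= 1
constraint <- y * f
allSatisfied <- all(constraint >= 1)
allSatisfied

# Distance between the two margins: 2 / ||w||
marginWidth <- 2 / sqrt(sum(w^2))
marginWidth

# Cases that sit exactly on a margin are the support vectors
supportVectors <- which(abs(constraint - 1) < 1e-8)
supportVectors
```

Here cases 1 and 3 lie exactly on the margins, so they are the support vectors; moving either of the other two cases further from the boundary would leave this hyperplane unchanged.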

In the illustrations I’ve shown you so far, the classes have been fully separable. This is so I could clearly show you how the positioning of the hyperplane is chosen to maximize the margin. But what about situations where the classes are not completely separable? How can the algorithm find a hyperplane when there is no margin that won’t have cases inside it?

The original formulation of SVMs uses what is often referred to as a hard margin. If an SVM uses a hard margin, then no cases are allowed to fall within the margin. This means that, if the classes are not fully separable, the algorithm will fail. This is, of course, a massive problem, because it relegates hard-margin SVM to handling only “easy” classification problems where the training set can be clearly partitioned into its component classes. As a result, an extension to the SVM algorithm called soft-margin SVM is much more commonly used. In soft-margin SVM, the algorithm still learns a hyperplane that best separates the classes, but it allows cases to fall inside its margin.

The soft-margin SVM algorithm still tries to find the hyperplane that best separates the classes, but it is penalized for having cases inside its margin. How severe the penalty is for having a case inside the margin is controlled by a hyperparameter that controls how “hard” or “soft” the margin is (we’ll discuss this hyperparameter and how it affects the position of the hyperplane later in the chapter). The harder the margin is, the fewer cases will be inside it; the hyperplane will depend on a smaller number of support vectors. The softer the margin is, the more cases will be inside it; the hyperplane will depend on a larger number of support vectors. This has consequences for the bias-variance trade-off: if our margin is too hard, we might overfit the noise near the decision boundary, whereas if our margin is too soft, we might underfit the data and learn a decision boundary that does a bad job of separating the classes.

Great! So far the SVM algorithm seems quite simple, and for linearly separable classes like in our boss-mood example, it is. But I mentioned that one of the strengths of the SVM algorithm is that it can learn decision boundaries between classes that are not linearly separable. I’ve told you that the algorithm learns linear hyperplanes, so this seems like a contradiction. Well, here’s what makes the SVM algorithm so powerful: it can add an extra dimension to your data to find a linear way to separate nonlinear data.
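For readers who want to see the penalty written down, the soft-margin problem is commonly stated as below. This is the standard textbook formulation rather than one given in this chapter: the slack variables ξᵢ (how far case i intrudes into the margin) and the use of ½||w||² in place of ||w|| are conventions assumed here, and C is the penalty weight.

```latex
\min_{\mathbf{w},\, b,\, \boldsymbol{\xi}} \;
  \tfrac{1}{2}\,\lVert \mathbf{w} \rVert^{2} \;+\; C \sum_{i=1}^{N} \xi_i
\quad \text{subject to} \quad
  y_i(\mathbf{w}\mathbf{x}_i + b) \;\ge\; 1 - \xi_i,
  \qquad \xi_i \ge 0,
  \qquad i = 1 \dots N
```

Setting every ξᵢ to zero recovers the hard-margin constraint yᵢ(wxᵢ + b) ≥ 1. A large C makes intrusions expensive (a harder margin), and a small C makes them cheap (a softer margin).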

Figure 6.5. The SVM algorithm adds an extra dimension to linearly separate the data. The classes in the original data are not linearly separable. The SVM algorithm adds an extra dimension that, in a two-dimensional feature space, can be illustrated as a “stretching” of the data into a third dimension. This additional dimension allows the data to be linearly separated. When this hyperplane is projected back onto the original two dimensions, it appears as a curved decision boundary.

Take a look at the example in figure 6.5. The classes are not linearly separable using the two predictor variables. The SVM algorithm adds an extra dimension to the data, such that a linear hyperplane can separate the classes in this new, higher-dimensional space. We can visualize this as a sort of deformation or stretching of the feature space. The extra dimension is called a kernel.

Note

Recall from chapter 5 that discriminant analysis condenses the information from the predictor variables into a smaller number of variables. Contrast this to the SVM algorithm, which expands the information from the predictor variables into an extra variable!
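The idea of adding a dimension can be sketched by hand in base R. The data below are hypothetical (an inner cluster surrounded by an outer ring), and the extra variable is computed explicitly, which is an assumption made for illustration: a real SVM works through a kernel function rather than building the new column like this.

```r
# HYPOTHETICAL data: an inner cluster surrounded by an outer ring
angle <- seq(0, 2 * pi, length.out = 9)[-9]
inner <- cbind(x1 = 0.5 * cos(angle), x2 = 0.5 * sin(angle))
outer <- cbind(x1 = 2.0 * cos(angle), x2 = 2.0 * sin(angle))

X      <- rbind(inner, outer)
groups <- rep(c("inner", "outer"), each = 8)

# No straight line in (x1, x2) separates a ring from the cluster inside it.
# Add an extra dimension: the squared distance from the origin
z <- X[, "x1"]^2 + X[, "x2"]^2

# In the new dimension, a flat cut (a linear boundary) separates the classes
threshold <- 1
predicted <- ifelse(z < threshold, "inner", "outer")
allCorrect <- all(predicted == groups)
allCorrect
```

Projected back onto x1 and x2, the flat cut z = 1 is a circle of radius 1, which is how a linear hyperplane in the expanded space appears as a curved decision boundary in the original one.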

Why is it called a kernel?

The word kernel may confuse you (it certainly confuses me). It has nothing to do with the kernel in computing (the bit of your operating system that directly interfaces with the computer hardware), or kernels in corn or fruit.

The truth is that the reason they are called kernels is murky. In 1904, a German mathematician named David Hilbert published Grundzüge einer allgemeinen Theorie der linearen Integralgleichungen (Principles of a general theory of linear integral equations). In this book, Hilbert uses the word kern to mean the core of an integral equation. In 1909, an American mathematician called Maxime Bôcher published An introduction to the study of integral equations in which he translates Hilbert’s use of the word kern to kernel.

The mathematics of kernel functions evolved from the work in these publications and took the name kernel with them. The extremely confusing thing is that multiple, seemingly unrelated concepts in mathematics include the word kernel!

How does the algorithm find this new kernel? It uses a mathematical transformation of the data called a kernel function. There are many kernel functions to choose from, each of which applies a different transformation to the data and is suitable for finding linear decision boundaries for different situations. Figure 6.6 shows examples of situations where some common kernel functions can separate non-linearly separable data:

- Linear kernel (equivalent to no kernel)

- Polynomial kernel

- Gaussian radial basis kernel

- Sigmoid kernel

The type of kernel function for a given problem isn’t learned from the data; we have to specify it. Because of this, the choice of kernel function is a categorical hyperparameter (a hyperparameter that takes discrete, not continuous values). Therefore, the best approach for choosing the best-performing kernel is with hyperparameter tuning.
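For reference, the four kernel functions listed above are usually written as follows. The notation is an assumption made here, not the chapter's: u and v stand for the predictor vectors of two cases, d is the polynomial degree, γ is the gamma hyperparameter, and c₀ is a constant offset. These are the forms commonly used by SVM software, so check your implementation's documentation for its exact parameterization.

```latex
\begin{aligned}
\text{Linear:}                \quad & K(\mathbf{u}, \mathbf{v}) = \mathbf{u}^{\top}\mathbf{v} \\
\text{Polynomial:}            \quad & K(\mathbf{u}, \mathbf{v}) = \left(\gamma\, \mathbf{u}^{\top}\mathbf{v} + c_0\right)^{d} \\
\text{Gaussian radial basis:} \quad & K(\mathbf{u}, \mathbf{v}) = \exp\!\left(-\gamma\, \lVert \mathbf{u} - \mathbf{v} \rVert^{2}\right) \\
\text{Sigmoid:}               \quad & K(\mathbf{u}, \mathbf{v}) = \tanh\!\left(\gamma\, \mathbf{u}^{\top}\mathbf{v} + c_0\right)
\end{aligned}
```

Each function returns a single number measuring how similar two cases are, and the choice of function determines what shape of decision boundary the linear hyperplane produces when projected back onto the original feature space.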

Figure 6.6. Examples of kernel functions. For each example, the solid line indicates the decision boundary (projected back onto the original feature space), and the dashed lines indicate the margin. With the exception of the linear kernel, imagine that the cases of one of the groups are raised off the page in a third dimension.

This is where SVMs become fun/difficult/painful, depending on your problem, computational budget, and sense of humor. We need to tune quite a lot of hyperparameters when building an SVM. This, coupled with the fact that training a single model can be moderately expensive, can make training an optimally performing SVM take quite a long time. You'll see this in the worked example in section 6.5.2.

So the SVM algorithm has quite a few hyperparameters to tune, but the most important ones to consider are as follows:

- The kernel hyperparameter (shown in figure 6.6)

- The degree hyperparameter, which controls how "bendy" the decision boundary will be for the polynomial kernel (shown in figure 6.6)

- The cost or C hyperparameter, which controls how "hard" or "soft" the margin is (shown in figure 6.7)

- The gamma hyperparameter, which controls how much influence individual cases have on the position of the decision boundary (shown in figure 6.7)

The effects of the kernel function and degree hyperparameter are shown in figure 6.6. Note the difference in the shape of the decision boundary between the second- and third-degree polynomials.

Note

The cost (also called C) hyperparameter in soft-margin SVMs assigns a cost or penalty to having cases inside the margin or, put another way, tells the algorithm how bad it is to have cases inside the margin. A low cost tells the algorithm that it's acceptable to have more cases inside the margin and will result in wider margins that are less influenced by local differences near the class boundary. A high cost imposes a harsher penalty on having cases inside the boundary and will result in narrower margins that are more influenced by local differences near the class boundary. The effect of cost is illustrated for a linear kernel in the top part of figure 6.7.

Note

Cases inside the margin are also support vectors, as moving them would change the position of the hyperplane.
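One way to see what cost does is to write out the quantity a soft-margin SVM is usually described as minimizing: half the squared length of the weight vector, plus cost times the total margin violation (the hinge loss). The sketch below illustrates that textbook formulation; the toy data, the candidate hyperplane, and the function name are all made up for the example.

```r
# Toy data: two predictors, classes coded as -1 and +1 (invented values).
x <- matrix(c( 2.0,  2.0,
               1.5,  0.2,
              -2.0, -1.0,
              -0.3, -0.1),
            ncol = 2, byrow = TRUE)
y <- c(1, 1, -1, -1)

# A candidate hyperplane w . x + b = 0 (hypothetical values).
w <- c(1, 1)
b <- 0

# Hypothetical helper: soft-margin objective for a given cost.
soft_margin_objective <- function(w, b, x, y, cost) {
  functional_margin <- y * (x %*% w + b)
  violations <- pmax(0, 1 - functional_margin)  # positive for cases inside the margin
  0.5 * sum(w^2) + cost * sum(violations)
}

low_cost  <- soft_margin_objective(w, b, x, y, cost = 0.1)
high_cost <- soft_margin_objective(w, b, x, y, cost = 10)
c(low_cost = low_cost, high_cost = high_cost)
```

Only the last case sits inside the margin here, yet with a high cost that single violation dominates the objective, which is why a high cost pushes the algorithm toward narrower margins.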

The gamma hyperparameter controls the influence that each case has on the position of the hyperplane and is used by all the kernel functions except the linear kernel. Think of each case in the training set jumping up and down shouting, "Me! Me! Classify me correctly!" The larger gamma is, the more attention-seeking each case is, and the more granular the decision boundary will be (potentially leading to overfitting). The smaller gamma is, the less attention-seeking each case will be, and the less granular the decision boundary will be (potentially leading to underfitting). The effect of gamma is illustrated for a Gaussian radial basis kernel in the bottom part of figure 6.7.

Figure 6.7. The impact of the cost and gamma hyperparameters. Larger values of the cost hyperparameter give greater penalization for having cases inside the margin. Larger values of the gamma hyperparameter mean individual cases have greater influence on the position of the decision boundary, leading to more complex decision boundaries.
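A rough intuition for gamma with the Gaussian radial basis kernel: the kernel value between two cases is commonly written as exp(-gamma * distance^2), so a larger gamma makes a case's influence fall away more quickly with distance. The sketch below simply tabulates that formula; the gamma values and distances are arbitrary choices for illustration.

```r
distances <- c(0, 0.5, 1, 2)
gammas <- c(0.1, 1, 10)

# Rows are distances between two cases; columns are gamma values.
influence <- sapply(gammas, function(g) exp(-g * distances^2))
dimnames(influence) <- list(paste0("d=", distances), paste0("gamma=", gammas))
round(influence, 3)
```

Reading across a row shows how the same distance counts for less as gamma grows, which is one way to picture why large gamma values give more granular decision boundaries.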

So the SVM algorithm has multiple hyperparameters to tune! I'll show you how we can tune these simultaneously using mlr in section 6.5.2.

So far, I've only shown you examples of two-class classification problems. This is because the SVM algorithm is inherently geared toward separating two classes. But can we use it for multiclass problems (where we're trying to predict more than two classes)? Absolutely! When there are more than two classes, instead of creating a single SVM, we make multiple models and let them fight it out to predict the most likely class for new data. There are two ways of doing this:

- One-versus-all

- One-versus-one

In the one-versus-all (also called one versus rest) approach, we create as many SVM models as there are classes. Each SVM model describes a hyperplane that best separates one class from all the other classes. Hence the name, one-versus-all. When we classify new, unseen cases, the models play a game of winner takes all. Put simply, the model that puts the new case on the "correct" side of its hyperplane (the side with the class it separates from all the others) wins. The case is then assigned to the class that the model was trying to separate from the others. This is illustrated in the plot on the left in figure 6.8.

Figure 6.8. One-versus-all and one-versus-one approaches to multiclass SVMs. In the one-versus-all approach, a hyperplane is learned per class, separating it from all the other cases. In the one-versus-one approach, a hyperplane is learned for every pair of classes, separating them while ignoring the data from the other classes.

In the one-versus-one approach, we create an SVM model for every pair of classes. Each SVM model describes a hyperplane that best separates one class from one other class, ignoring data from the other classes. Hence the name, one-versus-one. When we classify new, unseen cases, each model casts a vote. For example, if one model separates classes A and B, and the new data falls on the B side of the decision boundary, that model will vote for B. This continues for all the models, and the majority class vote wins. This is illustrated in the plot on the right in figure 6.8.

Which do we choose? Well, in practice, there is usually little difference in the performance of the two methods. Despite training more models (for more than three classes), one-versus-one is sometimes less computationally expensive than one-versus-all. This is because, although we're training more models, the training sets are smaller (because of the ignored cases). The implementation of the SVM algorithm called by mlr uses the one-versus-one approach.
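The bookkeeping behind the two strategies can be sketched in a few lines of base R. With k classes, one-versus-all needs k models, while one-versus-one needs one model per pair of classes; at prediction time, one-versus-one tallies the votes. The class labels and votes here are invented for illustration.

```r
classes <- c("A", "B", "C", "D")
k <- length(classes)

n_models_one_vs_all <- k
n_models_one_vs_one <- choose(k, 2)

# Every pair of classes that would get its own one-versus-one model.
class_pairs <- t(combn(classes, 2))

# Invented votes from the six pairwise models for a single new case,
# in the same order as the rows of class_pairs.
votes <- c("A", "C", "A", "C", "B", "C")
vote_counts <- table(votes)
predicted_class <- names(vote_counts)[which.max(vote_counts)]

c(one_vs_all = n_models_one_vs_all, one_vs_one = n_models_one_vs_one)
predicted_class
```

With three classes both strategies need three models; the counts only diverge from four classes upward, which matches the "more than three classes" remark above.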

There is, however, a problem with these approaches. There will often be regions of the feature space in which none of the models gives a clear winning class. Can you see the triangular space between the hyperplanes on the left in figure 6.8? If a new case appeared inside this triangle, none of the three models would clearly win outright. This is a sort of classification no-man's land. Though not as obvious in figure 6.8, this also occurs with the one-versus-one approach.

If there is no outright winner when predicting a new case, a technique called Platt scaling is used (named after computer scientist John Platt). Platt scaling takes the distances of the cases from each hyperplane and converts them into probabilities using the logistic function. Recall from chapter 4 that the logistic function maps a continuous variable to probabilities between 0 and 1. Using Platt scaling to make predictions proceeds like this:

- For every hyperplane (whether we use one-versus-all or one-versus-one):

- Measure the distance of each case from the hyperplane.

- Use the logistic function to convert these distances into probabilities.

- Classify new data as belonging to the class of the hyperplane that has the highest probability.

If this seems confusing, take a look at figure 6.9. We're using the one-versus-all approach in the figure, and we have generated three separate hyperplanes (one to separate each class from the rest). The dashed arrows in the figure indicate distance in either direction, away from the hyperplanes. Platt scaling converts these distances into probabilities using the logistic function (the class each hyperplane separates from the rest has positive distance).

When we classify new, unseen data, the distance of the new data is converted into a probability using each of the three S-shaped curves, and the case is classified as the one that gives the highest probability. Handily, all of this is taken care of for us in the implementation of SVM called by mlr. If we supply a three-class classification task, we will get a one-versus-one SVM model with Platt scaling without having to change our code.

Figure 6.9. How Platt scaling is used to get probabilities for each hyperplane. This example shows a one-versus-all approach (it also applies to one-versus-one). For each hyperplane, the distance of each case from the hyperplane is recorded (indicated by double-headed arrows). These distances are converted into probabilities using the logistic function.
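The steps above can be sketched with the logistic function from base R (plogis()). Note the simplification: Platt scaling as usually described fits the slope and intercept of the logistic curve to the training data, whereas this sketch assumes a slope of 1 and an intercept of 0, and the distances are made-up numbers.

```r
# Signed distances of one new case from three one-versus-all hyperplanes
# (positive = on the side of the class that hyperplane separates from the rest).
# These values are invented for illustration.
distances <- c(classA = -0.4, classB = 0.3, classC = -1.2)

# Convert distances to probabilities with the logistic function
# (assumed slope 1 and intercept 0, not fitted values).
probabilities <- plogis(distances)

# Assign the case to the class whose hyperplane gives the highest probability.
predicted_class <- names(which.max(probabilities))

round(probabilities, 3)
predicted_class
```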

In this section, I'll teach you how to build an SVM model and tune multiple hyperparameters simultaneously. Imagine that you're sick and tired of receiving so many spam emails (maybe you don't need to imagine!). It's difficult for you to be productive because you get so many emails requesting your bank details for a mysterious Ugandan inheritance, and trying to sell you Viagra.

You decide to perform a feature extraction on the emails you receive over a few months, which you manually class as spam or not spam. These features include things like the number of exclamation marks and the frequency of certain words. With this data, you want to make an SVM that you can use as a spam filter, which will classify new emails as spam or not spam.

In this section, you'll learn how to train an SVM model and tune multiple hyperparameters simultaneously. Let's start by loading the mlr and tidyverse packages:

library(mlr)

library(tidyverse)

Now let's load the data, which is built into the kernlab package, convert it into a tibble (with as_tibble()), and explore it.

Note

The kernlab package should have been installed along with mlr as a suggested package. If you get an error when trying to load the data, you may need to install it with install.packages("kernlab").

We have a tibble containing 4,601 emails and 58 variables extracted from emails. Our goal is to train a model that can use the information in these variables to predict whether a new email is spam or not.

Note

Except for the factor type, which denotes whether an email is spam, all of the variables are continuous, because the SVM algorithm cannot handle categorical predictors.

Listing 6.7. Loading and exploring the spam dataset

data(spam, package = "kernlab")

spamTib <- as_tibble(spam)

spamTib

# A tibble: 4,601 x 58

make address all num3d our over remove internet order mail

<dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

1 0 0.64 0.64 0 0.32 0 0 0 0 0

2 0.21 0.28 0.5 0 0.14 0.28 0.21 0.07 0 0.94

3 0.06 0 0.71 0 1.23 0.19 0.19 0.12 0.64 0.25

4 0 0 0 0 0.63 0 0.31 0.63 0.31 0.63

5 0 0 0 0 0.63 0 0.31 0.63 0.31 0.63

6 0 0 0 0 1.85 0 0 1.85 0 0

7 0 0 0 0 1.92 0 0 0 0 0.64

8 0 0 0 0 1.88 0 0 1.88 0 0

9 0.15 0 0.46 0 0.61 0 0.3 0 0.92 0.76

10 0.06 0.12 0.77 0 0.19 0.32 0.38 0 0.06 0

# ... with 4,591 more rows, and 48 more variables...

Tip

This dataset has a lot of features! I'm not going to discuss the meaning of each one, but you can see a description of what they mean by running ?kernlab::spam.

Let's define our task and learner. This time, we supply "classif.svm" as the argument to makeLearner() to specify that we're going to use SVM.

Listing 6.8. Creating the task and learner

spamTask <- makeClassifTask(data = spamTib, target = "type")

svm <- makeLearner("classif.svm")

Before we train our model, we need to tune our hyperparameters. To find out which hyperparameters are available for tuning for an algorithm, we simply pass the name of the algorithm in quotes to getParamSet(). For example, listing 6.9 shows how to print the hyperparameters for the SVM algorithm. I've removed some rows and columns of the output to make it fit, but the most important columns are there:

- The row name is the name of the hyperparameter.

- Type is whether the hyperparameter takes numeric, integer, discrete, or logical values.

- Def is the default value (the value that will be used if you don't tune the hyperparameter).

- Constr defines the constraints for the hyperparameter: either a set of specific values or a range of acceptable values.

- Req defines whether the hyperparameter is required by the learner.

- Tunable is logical and defines whether that hyperparameter can be tuned (some algorithms have options that cannot be tuned but can be set by the user).

Listing 6.9. Printing available SVM hyperparameters

getParamSet("classif.svm")

        Type           Def     Constr              Req  Tunable
cost    numeric        1       0 to Inf            Y    TRUE
kernel  discrete       radial  [lin,poly,rad,sig]  -    TRUE
degree  integer        3       1 to Inf            Y    TRUE
gamma   numeric        -       0 to Inf            Y    TRUE
scale   logicalvector  TRUE    -                   -    TRUE

The SVM algorithm is sensitive to variables being on different scales, so it's usually a good idea to scale the predictors first. Notice the scale hyperparameter: it tells us that the algorithm will scale the data for us by default.
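To see what scaling does, compare two predictors measured on very different scales before and after standardizing. This base R sketch uses scale(), which centers each column to mean 0 and rescales it to standard deviation 1; the data and column names are invented, and it illustrates the general idea rather than the exact internals of the SVM implementation.

```r
# Invented predictors: one on a large scale, one on a small scale.
predictors <- data.frame(
  capital_run_length = c(10, 250, 1200, 45),
  exclamation_freq   = c(0.1, 0.8, 0.3, 0.0)
)

scaled <- scale(predictors)

round(colMeans(scaled), 10)  # column means after scaling
apply(scaled, 2, sd)         # column standard deviations after scaling
```

After standardizing, neither predictor dominates distance calculations simply because of its units.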

Extracting the possible values for a hyperparameter

While the getParamSet() function is useful, I don't find it particularly simple to extract information from. If you call str(getParamSet("classif.svm")), you'll see that it has a reasonably complex structure.

To extract information about a particular hyperparameter, you need to call getParamSet("classif.svm")$pars$[HYPERPAR] (where [HYPERPAR] is replaced by the hyperparameter you're interested in). To extract the possible values for that hyperparameter, you append $values to the call. For example, getParamSet("classif.svm")$pars$kernel$values extracts the possible kernel functions.

These are the most important hyperparameters for us to tune:

- Kernel

- Cost

- Degree

- Gamma

Listing 6.10 defines the hyperparameters we want to tune. We're going to start by defining a vector of kernel functions we wish to tune.

Tip

Notice that I omit the linear kernel. This is because the linear kernel is the same as the polynomial kernel with degree = 1, so we'll just make sure we include 1 as a possible value for the degree hyperparameter. Including the linear kernel and the first-degree polynomial kernel is simply a waste of computing time.

Next, we use the makeParamSet() function to define the hyperparameter space we wish to tune over. To the makeParamSet() function, we supply the information needed to define each hyperparameter we wish to tune, separated by commas. Let's break this down line by line:

- The kernel hyperparameter takes discrete values (the name of the kernel function), so we use the makeDiscreteParam() function to define its values as the vector of kernels we created.

- The degree hyperparameter takes integer values (whole numbers), so we use the makeIntegerParam() function and define the lower and upper values we wish to tune over.

- The cost and gamma hyperparameters take numeric values (any number between zero and infinity), so we use the makeNumericParam() function to define the lower and upper values we wish to tune over.

For each of these functions, the first argument is the name of the hyperparameter given by getParamSet("classif.svm"), in quotes.

Listing 6.10. Defining the hyperparameter space for tuning

kernels <- c("polynomial", "radial", "sigmoid")

svmParamSpace <- makeParamSet(

makeDiscreteParam("kernel", values = kernels),

makeIntegerParam("degree", lower = 1, upper = 3),

makeNumericParam("cost", lower = 0.1, upper = 10),

makeNumericParam("gamma", lower = 0.1, upper = 10))

Cast your mind back to chapter 3, when we tuned k for the kNN algorithm. We used the grid search procedure during tuning to try every value of k that we defined. This is what the grid search method does: it tries every combination of the hyperparameter space you define and finds the best-performing combination.

Grid search is great because, provided you specify a sensible hyperparameter space to search over, it will always find the best-performing hyperparameters. But look at the hyperparameter space we defined for our SVM. Let's say we wanted to try values for the cost and gamma hyperparameters from 0.1 to 10, in steps of 0.1 (that's 100 values of each). We're trying three kernel functions and three values of the degree hyperparameter. To perform a grid search over this parameter space would require training a model 90,000 times! In such a situation, if you have the time, patience, and computational budget for such a grid search, then good for you. I, for one, have better things I could be doing with my computer!
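The 90,000 figure is just the product of the number of candidate values for each hyperparameter. A quick base R check, using expand.grid() to enumerate the grid described above:

```r
# Enumerate every combination in the grid: 3 kernels, 3 degrees,
# and 100 values each of cost and gamma between 0.1 and 10.
grid <- expand.grid(
  kernel = c("polynomial", "radial", "sigmoid"),
  degree = 1:3,
  cost   = seq(0.1, 10, length.out = 100),
  gamma  = seq(0.1, 10, length.out = 100)
)

nrow(grid)  # 3 x 3 x 100 x 100 combinations
```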

Instead, we can employ a technique called random search. Rather than trying every possible combination of parameters, random search proceeds as follows:

1. Randomly select a combination of hyperparameter values.

2. Use cross-validation to train and evaluate a model using those hyperparameter values.

3. Record the performance metric of the model (usually mean misclassification error for classification tasks).

4. Repeat (iterate) steps 1 to 3 as many times as your computational budget allows.

5. Select the combination of hyperparameter values that gave you the best-performing model.

Unlike grid search, random search isn't guaranteed to find the best set of hyperparameter values. However, with enough iterations, it can usually find a good combination that performs well. By using random search, we can run 500 combinations of hyperparameter values, instead of all 90,000 combinations.
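The random search procedure can be sketched in base R. To keep the example self-contained, the cross-validation step is replaced by a hypothetical stand-in function, fake_cv_error(), that returns a made-up error for a given combination; in practice this is where a model would be trained and evaluated.

```r
set.seed(123)  # make the random draws reproducible

# Hypothetical stand-in for "cross-validate a model with these values".
fake_cv_error <- function(cost, gamma) {
  0.05 + 0.01 * abs(log10(cost)) + 0.02 * abs(log10(gamma))
}

n_iterations <- 20
results <- data.frame(cost  = numeric(n_iterations),
                      gamma = numeric(n_iterations),
                      error = numeric(n_iterations))

for (i in seq_len(n_iterations)) {
  # Randomly select a combination of hyperparameter values.
  cost  <- runif(1, min = 0.1, max = 10)
  gamma <- runif(1, min = 0.1, max = 10)
  # Evaluate the combination and record its performance.
  results[i, ] <- c(cost, gamma, fake_cv_error(cost, gamma))
}

# Select the combination with the lowest recorded error.
best <- results[which.min(results$error), ]
best
```

Increasing n_iterations samples the space more densely, at the price of more model fits.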

Let's define our random search using the makeTuneControlRandom() function. We use the maxit argument to tell the function how many iterations of the random search procedure we want to use. You should try to set this as high as your computational budget allows, but in this example we'll stick to 20 to prevent the example from taking too long. Next, we describe our cross-validation procedure. Remember I said in chapter 3 that I prefer k-fold cross-validation unless the process is computationally expensive. Well, this is computationally expensive, so we're compromising by using holdout cross-validation instead.

Listing 6.11. Defining the random search

randSearch <- makeTuneControlRandom(maxit = 20)

cvForTuning <- makeResampleDesc("Holdout", split = 2/3)
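Holdout cross-validation with split = 2/3 means that roughly two-thirds of the cases are used for training and the remaining third for testing. Here is a base R sketch of such a split, using the 4,601 rows of the spam data as the case count; the exact rounding used internally is an assumption.

```r
set.seed(42)
n_cases <- 4601

# Randomly pick about two-thirds of the row indices for training.
train_idx <- sample(n_cases, size = round(2/3 * n_cases))
# The remaining rows form the test set.
test_idx  <- setdiff(seq_len(n_cases), train_idx)

c(train = length(train_idx), test = length(test_idx))
```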

There's something else we can do to speed up this process. R, as a language, doesn't make that much use of multithreading (using multiple CPUs simultaneously to accomplish a task). However, one of the benefits of the mlr package is that it allows multithreading to be used with its functions. This helps you use multiple cores/CPUs on your computer to accomplish tasks such as hyperparameter tuning and cross-validation much more quickly.

Tip

If you don't know how many cores your computer has, you can find out in R by running parallel::detectCores(). (If your computer only has one core, the 90s called; they want their computer back.)

To run an mlr process in parallel, we place its code between the parallelStartSocket() and parallelStop() functions from the parallelMap package. To start our hyperparameter tuning process, we call the tuneParams() function and supply the following as arguments:

- First argument = name of the learner

- task = name of our task

- resampling = cross-validation procedure (defined in listing 6.11)

- par.set = hyperparameter space (defined in listing 6.10)

- control = search procedure (random search, defined in listing 6.11)

This code between the parallelStartSocket() and parallelStop() functions is shown in listing 6.12. Notice that the downside of running cross-validation processes in parallel is that we no longer get a running update of how far we've got.

Warning

The computer I'm writing this on has four cores, and this code takes nearly a minute to run on it. It is of the utmost importance that you go make a cup of tea while it runs. Milk and no sugar, please.

Listing 6.12. Performing hyperparameter tuning

library(parallelMap)

library(parallel)

parallelStartSocket(cpus = detectCores())

tunedSvmPars <- tuneParams("classif.svm", task = spamTask,

resampling = cvForTuning,

par.set = svmParamSpace,

control = randSearch)

parallelStop()

Tip

The degree hyperparameter only applies to the polynomial kernel function, and the gamma hyperparameter doesn't apply to the linear kernel. Does this create errors when the random search selects combinations that don't make sense? Nope. If the random search selects the sigmoid kernel, for example, it simply ignores the value of the degree hyperparameter.

Welcome back after our interlude! You can print the best-performing hyperparameter values and the performance of the model built with them by calling tunedSvmPars, or extract just the named values (so you can train a new model using them) by calling tunedSvmPars$x. Looking at the following listing, we can see that the first-degree polynomial kernel function (equivalent to the linear kernel function) gave the model that performs the best, with a cost of 5.8 and gamma of 1.56.

Listing 6.13. Extracting the winning hyperparameter values from tuning

tunedSvmPars

Tune result:
Op. pars: kernel=polynomial; degree=1; cost=5.82; gamma=1.56
mmce.test.mean=0.0645372

tunedSvmPars$x

$kernel
[1] "polynomial"

$degree
[1] 1

$cost
[1] 5.816232

$gamma
[1] 1.561584

Your values are probably different than mine. This is the nature of the random search: it may find different winning combinations of hyperparameter values each time it's run. To reduce this variance, we should commit to increasing the number of iterations the search makes.

Now that we've tuned our hyperparameters, let's build our model using the best-performing combination. Recall from chapter 3 that we use the setHyperPars() function to combine a learner with a set of predefined hyperparameter values. The first argument is the learner we want to use, and the par.vals argument is the object containing our tuned hyperparameter values. We then train a model using our tunedSvm learner with the train() function.

Listing 6.14. Training the model with tuned hyperparameters

tunedSvm <- setHyperPars(makeLearner("classif.svm"),

par.vals = tunedSvmPars$x)

tunedSvmModel <- train(tunedSvm, spamTask)

Tip

Because we already defined our learner in listing 6.8, we could simply have run setHyperPars(svm, par.vals = tunedSvmPars$x) to achieve the same result.


We've built a model using tuned hyperparameters. In this section, we'll cross-validate the model to estimate how it will perform on new, unseen data.

Recall from chapter 3 that it's important to cross-validate the entire model-building process. This means any data-dependent steps in our model-building process (such as hyperparameter tuning) need to be included in our cross-validation. If we don't include them, the cross-validation is likely to give an overoptimistic estimate (a biased estimate) of how well the model will perform.

Tip

What counts as a data-independent step in model building? Things like removing nonsense variables by hand, changing variable names and types, and replacing a missing value code with NA. These steps are data-independent because they would be the same regardless of the values in the data.

Recall also that to include hyperparameter tuning in our cross-validation, we need to use a wrapper function that wraps together our learner and hyperparameter tuning process. The cross-validation process is shown in listing 6.15.

Because mlr will use nested cross-validation (where hyperparameter tuning is performed in the inner loop, and the winning combination of values is passed to the outer loop), we first define our outer cross-validation strategy using the makeResampleDesc() function. In this example, I've chosen 3-fold cross-validation for the outer loop. For the inner loop, we'll use the cvForTuning resampling description defined in listing 6.11 (holdout cross-validation with a 2/3 split).
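The shape of the nested procedure can be sketched with plain row indices: three outer folds, and inside each outer training set a 2/3 holdout split for tuning. This is only a sketch of the bookkeeping (no models are trained), and the variable names are illustrative.

```r
set.seed(1)
n_cases <- 4601

# Assign each case to one of three outer folds.
outer_fold <- sample(rep(1:3, length.out = n_cases))

split_sizes <- lapply(1:3, function(fold) {
  outer_train <- which(outer_fold != fold)  # available for tuning and the final fit
  outer_test  <- which(outer_fold == fold)  # never seen during tuning

  # Inner loop: holdout split of the outer training set for hyperparameter tuning.
  inner_train <- sample(outer_train, size = round(2/3 * length(outer_train)))
  inner_test  <- setdiff(outer_train, inner_train)

  c(outer_test  = length(outer_test),
    inner_train = length(inner_train),
    inner_test  = length(inner_test))
})

sizes <- do.call(rbind, split_sizes)
sizes
```

Each row accounts for every case exactly once: the outer test fold is held back, and only the remaining cases are split again for tuning.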

Next, we make our wrapped learner using the makeTuneWrapper() function. The arguments are as follows:

- First argument = name of the learner

- resampling = inner loop cross-validation strategy

- par.set = hyperparameter space (defined in listing 6.10)

- control = search procedure (defined in listing 6.11)

As the cross-validation will take a while, it's prudent to start parallelization with the parallelStartSocket() function. Now, to run our nested cross-validation, we call the resample() function, where the first argument is our wrapped learner, the second argument is our task, and the third argument is our outer cross-validation strategy.

Warning

This takes a little over a minute on my four-core computer. In the meantime, you know what to do. Milk and no sugar, please. Do you have any cake?

Listing 6.15. Cross-validating the model-building process

outer <- makeResampleDesc("CV", iters = 3)

svmWrapper <- makeTuneWrapper("classif.svm", resampling = cvForTuning,

par.set = svmParamSpace,

control = randSearch)

parallelStartSocket(cpus = detectCores())

cvWithTuning <- resample(svmWrapper, spamTask, resampling = outer)

parallelStop()

Now let's take a look at the result of our cross-validation procedure by printing the contents of the cvWithTuning object.

Listing 6.16. Extracting the cross-validation result

cvWithTuning

Resample Result
Task: spamTib
Learner: classif.svm.tuned
Aggr perf: mmce.test.mean=0.0988956
Runtime: 73.89

We're correctly classifying 1 - 0.099 = 0.901 = 90.1% of emails as spam or not spam. Not bad for a first attempt!

While it often isn't easy to tell which algorithms will perform well for a given task, here are some strengths and weaknesses that will help you decide whether the SVM algorithm will perform well for your case.

The strengths of the SVM algorithm are as follows:

- It's very good at learning complex nonlinear decision boundaries.

- It performs very well on a wide variety of tasks.

- It makes no assumptions about the distribution of the predictor variables.

The weaknesses of the SVM algorithm are these:

- It is one of the most computationally expensive algorithms to train.

- It has multiple hyperparameters that need to be tuned simultaneously.

- It can only handle continuous predictor variables (although recoding a categorical variable as numeric may help in some cases).

Exercise 3

The NA values in votesTib include when politicians abstained from voting, so recode these values into the value "a" and cross-validate a naive Bayes model including them. Does this improve performance?

Exercise 4

Perform another random search for the best SVM hyperparameters using nested cross-validation. This time, limit your search to the linear kernel (so no need to tune degree or gamma), search over the range 0.1 to 100 for the cost hyperparameter, and increase the number of iterations to 100. Warning: This took nearly 12 minutes to complete on my machine!

- The naive Bayes and support vector machine (SVM) algorithms are supervised learners for classification problems.

- Naive Bayes uses Bayes’ rule (defined in chapter 5) to estimate the probability of new data belonging to each of the possible output classes.

- The SVM algorithm finds a hyperplane (a surface with one less dimension than there are predictors) that best separates the classes.

- While naive Bayes can handle both continuous and categorical predictor variables, the SVM algorithm can only handle continuous predictors.

- Naive Bayes is computationally cheap, while the SVM algorithm is one of the most expensive algorithms.

- The SVM algorithm can use kernel functions to add an extra dimension to the data that helps find a linear decision boundary.

- The SVM algorithm is sensitive to the values of its hyperparameters, which must be tuned to maximize performance.

- The mlr package allows parallelization of intensive processes, such as hyperparameter tuning, by using the parallelMap package.

- Use the map_dbl() function to count the number of y values in each column of votesTib:

map_dbl(votesTib, ~ length(which(. == "y")))

- Extract the prior probabilities and likelihoods from your naive Bayes model:

getLearnerModel(bayesModel)

# The prior probabilities are 0.61 for democrat and
# 0.39 for republican (at the time these data were collected!).
# The likelihoods are shown in 2x2 tables for each vote.

- Recode the NA values from the votesTib tibble, and cross-validate a model including these values:

votesTib[] <- map(votesTib, as.character)

votesTib[is.na(votesTib)] <- "a"

votesTib[] <- map(votesTib, as.factor)

votesTask <- makeClassifTask(data = votesTib, target = "Class")

bayes <- makeLearner("classif.naiveBayes")

kFold <- makeResampleDesc(method = "RepCV", folds = 10, reps = 50,

stratify = TRUE)

bayesCV <- resample(learner = bayes, task = votesTask, resampling = kFold,

measures = list(mmce, acc, fpr, fnr))

bayesCV$aggr

# Only a very slight increase in accuracy

- Perform a random search restricted to the linear kernel, tuning over a larger range of values for the cost hyperparameter:

svmParamSpace <- makeParamSet(

makeDiscreteParam("kernel", values = "linear"),

makeNumericParam("cost", lower = 0.1, upper = 100))

randSearch <- makeTuneControlRandom(maxit = 100)

cvForTuning <- makeResampleDesc("Holdout", split = 2/3)

outer <- makeResampleDesc("CV", iters = 3)

svmWrapper <- makeTuneWrapper("classif.svm", resampling = cvForTuning,

par.set = svmParamSpace,

control = randSearch)

parallelStartSocket(cpus = detectCores())

cvWithTuning <- resample(svmWrapper, spamTask, resampling = outer) # ~1 min

parallelStop()

cvWithTuning