9 Working with Bags and Arrays

This chapter covers

- Reading, transforming, and analyzing unstructured data using Bags

- Creating Arrays and DataFrames from Bags

- Extracting and filtering data from Bags

- Combining and grouping elements of Bags using fold and reduce functions

- Using NLTK (Natural Language Toolkit) with Bags for text mining on large text datasets

The majority of this book focuses on using DataFrames for analyzing structured data, but our exploration of Dask would not be complete without mentioning the two other high-level Dask APIs: Bags and Arrays. When your data doesn’t fit neatly in a tabular model, Bags and Arrays offer additional flexibility. DataFrames are limited to only two dimensions (rows and columns), but Arrays can have many more. The Array API also offers additional functionality for certain linear algebra, advanced mathematics, and statistics operations. However, much of what’s been covered already through working with DataFrames also applies to working with Arrays—just as Pandas and NumPy have many similarities. In fact, you might recall from chapter 1 that Dask DataFrames are parallelized Pandas DataFrames and Dask Arrays are parallelized NumPy arrays.

Bags, on the other hand, are unlike any of the other Dask data structures. Bags are very powerful and flexible because they are parallelized general collections, most like Python's built-in List object. Unlike Arrays and DataFrames, which have predetermined shapes and datatypes, Bags can hold any Python objects, whether they are custom classes or built-in types. This makes it possible to contain very complicated data structures, like raw text or nested JSON data, and navigate them with ease.

Working with unstructured data is becoming more commonplace for data scientists, especially data scientists who are working independently or in small teams without a dedicated data engineer. Take the following, for example.

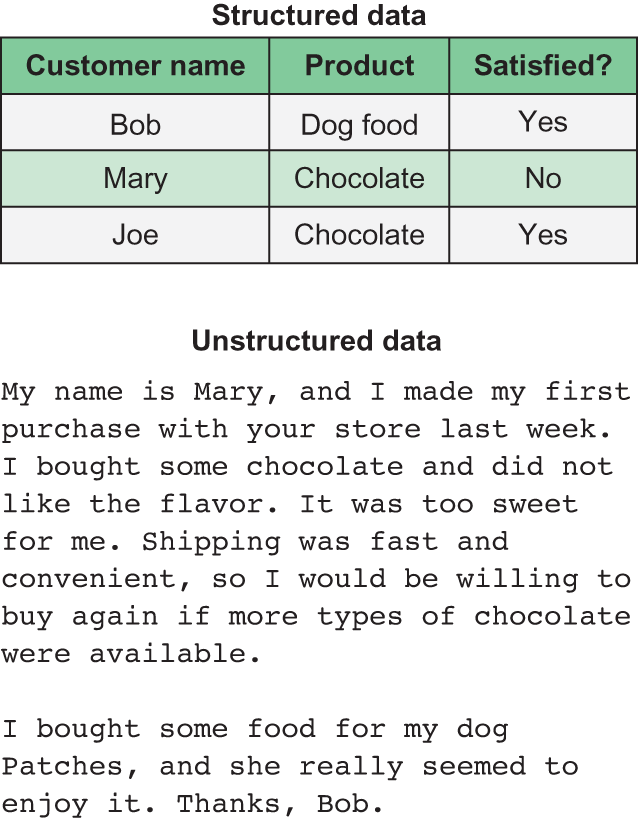

Figure 9.1 An example comparison of structured and unstructured data

In figure 9.1, the same data is presented two different ways: the upper half shows some examples of product reviews as structured data with rows and columns, and the lower half shows the raw review text as unstructured data. If the only information we care about is the customer's name, the product they purchased, and whether or not they were satisfied, the structured data gives us this information at a glance without any ambiguity. Every value in the Customer Name column is always the customer's name. Conversely, the varying length, writing style, and free-form nature of the raw text make it unclear what data is relevant for analysis, and extracting the relevant data requires some sort of parsing and interpretation. In the first review, the reviewer's name is the fourth word of the review. However, the second reviewer put his name at the very end of his review. These inconsistencies make it difficult to use a rigid data structure like a DataFrame or an Array to organize the information. Instead, the flexibility of Bags really shines here: whereas a DataFrame or Array always has a fixed number of columns, a Bag can contain strings, lists, or any other element of varying length.

In fact, the typical use case that involves working with unstructured data comes from analyzing text data scraped from web APIs, such as product reviews, tweets from Twitter, or ratings from services like Yelp and Google Reviews. Therefore, we'll walk through an example of using Bags to parse and prepare unstructured text data; then we'll look at how to map and derive structured data from Bags to Arrays.

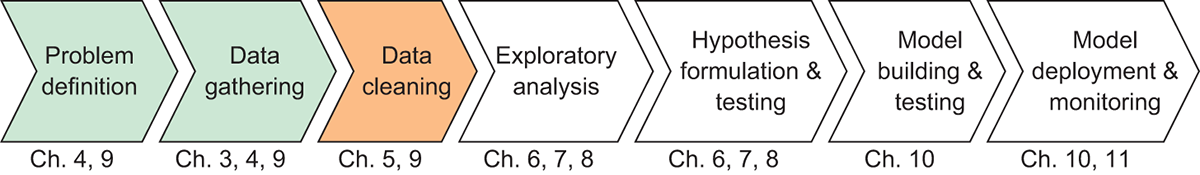

Figure 9.2 The Data Science with Python and Dask workflow

Figure 9.2 is our familiar workflow diagram, but it might be a bit surprising because we've stepped back to the first three tasks! Since we're starting with a new problem and data set, rather than progressing onward from chapter 8, we'll be revisiting the first three elements of our workflow, this time with the focus on unstructured data. Many of the concepts that were covered in chapters 4 and 5 are the same, but we will look at how to technically achieve the same results when the data doesn't come in a tabular format such as CSV.

As a motivating example for this chapter, we'll look at a set of product reviews from Amazon.com sourced by Stanford University's Network Analysis Project. You can download the data from here: https://goo.gl/yDQgfH. To learn more about how the data set was created, see McAuley and Leskovec's paper "From Amateurs to Connoisseurs: Modeling the Evolution of User Expertise through Online Reviews" (Stanford, 2013).

9.1 Reading and parsing unstructured data with Bags

After you've downloaded the data, the first thing you need to do is properly read and parse the data so you can easily manipulate it. The first scenario we'll walk through is:

Using the Amazon Fine Foods Reviews data set, determine its format and parse the data into a Bag of dictionaries.

This particular data set is a plain text file. You can open it with any text editor and start to make sense of the layout of the file. The Bag API offers a few convenience methods for reading text files. In addition to plain text, the Bag API is also equipped to read files in the Apache Avro format (using read_avro), which is a popular binary serialization format for JSON-like records and is usually denoted by a file ending in .avro. The function used to read plain text files is read_text, and it has only a few parameters. In its simplest form, all it needs is a filename. If you want to read multiple files into one Bag, you can alternatively pass a list of filenames or a string with a wildcard (such as *.txt). In this instance, all the files in the list of filenames should have the same kind of information; for example, log data collected over time where one file represents a single day of logged events. The read_text function also natively supports common compression formats (such as gzip and bzip2), so you can leave the data compressed on disk. Leaving your data compressed can, in some cases, offer significant performance gains by reducing the load on your machine's input/output subsystem, so it's generally a good idea. Let's take a look at what the read_text function will produce.

Listing 9.1 Reading text data into a Bag

```python
import os

import dask.bag as bag

os.chdir('/Users/jesse/Documents')  # the folder where foods.txt was downloaded

raw_data = bag.read_text('foods.txt')
raw_data

# Produces the following output:
# dask.bag<bag-fro..., npartitions=1>
```

As you've probably come to expect by now, the read_text operation produces a lazy object that won't get evaluated until we actually perform a compute-type operation on it. The Bag's metadata indicates that it will read the entire data in as one partition. Since the size of this file is rather small, that's probably OK. However, if we wanted to manually increase parallelism, read_text also takes an optional blocksize parameter that allows you to specify how large each partition should be in bytes. For instance, to split the roughly 400 MB file into four partitions, we could specify a blocksize of 100,000,000 bytes, which equates to 100 MB. This will cause Dask to divide the file into four partitions.
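The snippet below is a small, self-contained sketch of the read_text options described above. It runs against a few hypothetical log files written to a temporary folder, and the file names and contents are made up for the demo. It reads several files with a wildcard, reads a gzip file whose compression is inferred from its extension, and uses blocksize to split one file into several partitions. The synchronous scheduler is set only to keep the demo simple. As far as we know, blocksize can't be combined with compressed files, because a compressed stream can't be split at arbitrary byte offsets.

```python
import gzip
import os
import tempfile

import dask
import dask.bag as bag

dask.config.set(scheduler="synchronous")  # run tasks in-process; handy for a small demo

log_dir = tempfile.mkdtemp()

# Hypothetical daily log: 100 lines of exactly 10 bytes each (1,000 bytes in total)
with open(os.path.join(log_dir, "day1.txt"), "wb") as f:
    for i in range(100):
        f.write(f"line {i:04d}\n".encode("ascii"))

# A second plain-text log and a gzip-compressed one
with open(os.path.join(log_dir, "day2.txt"), "w", encoding="utf-8") as f:
    f.write("login\nlogout\n")
with gzip.open(os.path.join(log_dir, "day3.txt.gz"), "wt", encoding="utf-8") as f:
    f.write("error\n")

# A wildcard reads every matching file; without blocksize, each file is one partition
all_plain = bag.read_text(os.path.join(log_dir, "*.txt"))
print(all_plain.npartitions)        # 2
print(all_plain.count().compute())  # 102

# Compression is inferred from the .gz extension
archived = bag.read_text(os.path.join(log_dir, "day3.txt.gz"))
print(archived.compute())           # ['error\n']

# blocksize splits a single uncompressed file into roughly blocksize-byte partitions
day1 = bag.read_text(os.path.join(log_dir, "day1.txt"), blocksize=250)
print(day1.npartitions)             # 4
print(day1.count().compute())       # 100: no line is lost or duplicated
```

Dask moves each block boundary to the next line break, so lines are never cut in half. The exact partition count can depend on how Dask rounds uneven block sizes. This demo uses a file size that divides evenly by the blocksize to keep the result predictable.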

9.1.1 Selecting and viewing data from a Bag

Kxw rcru wv’oo ecradet s Thz tmkl kdr data, fro’z cox wue qrx data sloko. Rkp take medhot woasll cb kr fevx rc c mllas tbsuse el gvr msite nj bxr Czq, ircq sz rxu head hemodt alsowl gz re xu rqo kzmz jrgw DataFrames. Spilym piseycf xdr nrbuem kl tsmie bgv rsnw er jxwk, yzn Dask nsrpit dkr luerts.

Listing 9.2 Viewing items in a Bag

```python
raw_data.take(10)

# Produces the following output:
'''('product/productId: B001E4KFG0\n',
'review/userId: A3SGXH7AUHU8GW\n',
'review/profileName: delmartian\n',
'review/helpfulness: 1/1\n',
'review/score: 5.0\n',
'review/time: 1303862400\n',
'review/summary: Good Quality Dog Food\n',
'review/text: I have bought several of the Vitality canned dog food products and have found them all to be of good quality. The product looks more like a stew than a processed meat and it smells better. My Labrador is finicky and she appreciates this product better than most.\n',
'\n',
'product/productId: B00813GRG4\n')'''
```

As you can see from the result of listing 9.2, each element of the Bag currently represents a line in the file. However, this structure will prove to be problematic for our analysis. There is an obvious relationship between some of the elements. For example, the review/score element being displayed is the review score for the product ID preceding it (B001E4KFG0). But since there is nothing structurally relating these elements, it would be difficult to do something like calculate the mean review score for item B001E4KFG0. Therefore, we should add a bit of structure to this data by grouping the elements that are associated together into a single object.

9.1.2 Common parsing issues and how to overcome them

A common issue that arises when working with text data is making sure the data is being read using the same character encoding as it was written in. Character encoding is used to map raw data stored as binary into symbols that we humans can identify as letters. For example, the capital letter J is represented in binary as 01001010 using the UTF-8 encoding. If you open a text file in a text editor using UTF-8 to decode the file, everywhere 01001010 is encountered in the file, it will be translated to a J before it is displayed on the screen.
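To see this mapping for yourself, the short sketch below uses only Python built-ins. It encodes the letter J with UTF-8, prints its byte as binary digits, and then decodes that byte back into a letter.

```python
# The letter J is code point 74, which UTF-8 stores as the single byte 0x4A
letter = "J"
encoded = letter.encode("utf-8")
bits = format(encoded[0], "08b")
print(encoded)  # b'J'
print(bits)     # 01001010

# Decoding goes the other way: the byte 0b01001010 becomes "J" again
decoded = bytes([0b01001010]).decode("utf-8")
print(decoded)  # J
```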

Using the correct character encoding makes sure the data will be read correctly and you won't see any garbled text. By default, the read_text function assumes that the data is encoded using UTF-8. Since Bags are inherently lazy, the validity of this assumption isn't checked ahead of time. You'll find out there's a problem only when a computation actually reads the offending bytes, for example when you perform a function over the entire data set. For example, if we want to count the number of items in the Bag, we could use the count function.

Listing 9.3 Exposing an encoding error while counting the items in the Bag

```python
raw_data.count().compute()

# Raises the following exception:
# UnicodeDecodeError: 'utf-8' codec can't decode byte 0xce in position 2620: invalid continuation byte
```

The count function, which looks exactly like the count function from the DataFrame API, fails with a UnicodeDecodeError exception. This tells us that the file is probably not encoded in UTF-8, since it can't be parsed. These issues typically arise if the text uses any kind of characters that aren't used in the English alphabet (such as accent marks, Kanji, Hiragana, and Arabic). If you are able to ask the producer of the file which encoding was used, you can simply pass that encoding to the read_text function using the encoding parameter. If you're not able to find out which encoding the file was saved in, a bit of trial and error is necessary to determine which encoding to use. A good place to start is the cp1252 encoding, which is the default encoding on Windows systems set up for English and other Western European languages. In fact, this example data set was encoded using cp1252, so we can modify the read_text function to use cp1252 and try our count operation again.

Listing 9.4 Changing the encoding of the read_text function

```python
raw_data = bag.read_text('foods.txt', encoding='cp1252')
raw_data.count().compute()

# Produces the following output:
# 5116093
```

This time, the file is parsed completely, and the count shows that the file contains 5.11 million lines.
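The trial-and-error approach can be wrapped in a small helper. The sketch below uses a hypothetical function named count_lines, which is not part of Dask. It tries each candidate encoding with read_text and relies on the fact that a bad encoding only surfaces when the computation runs. It writes its own tiny cp1252 file so that it's self-contained. UTF-8 is tried first on purpose: cp1252 maps almost every byte to some character, so trying it first would often "succeed" on UTF-8 data and silently produce garbled text.

```python
import os
import tempfile

import dask
import dask.bag as bag

dask.config.set(scheduler="synchronous")

# Hypothetical file saved in cp1252: "é" is stored as the single byte 0xE9,
# which is not valid UTF-8 when followed by a space
path = os.path.join(tempfile.mkdtemp(), "menu.txt")
with open(path, "wb") as f:
    f.write("café au lait\ntea\n".encode("cp1252"))

def count_lines(path, candidates=("utf-8", "cp1252")):
    """Hypothetical helper: try each encoding until counting the lines succeeds."""
    for encoding in candidates:
        lines = bag.read_text(path, encoding=encoding)  # lazy: nothing decoded yet
        try:
            return encoding, lines.count().compute()     # decoding happens here
        except UnicodeDecodeError:
            continue
    raise ValueError("none of the candidate encodings could decode the file")

print(count_lines(path))  # ('cp1252', 2)
```

A successful decode only proves that the bytes are valid in that encoding. It's still worth eyeballing a few decoded lines with take to confirm the text looks right.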

9.1.3 Working with delimiters

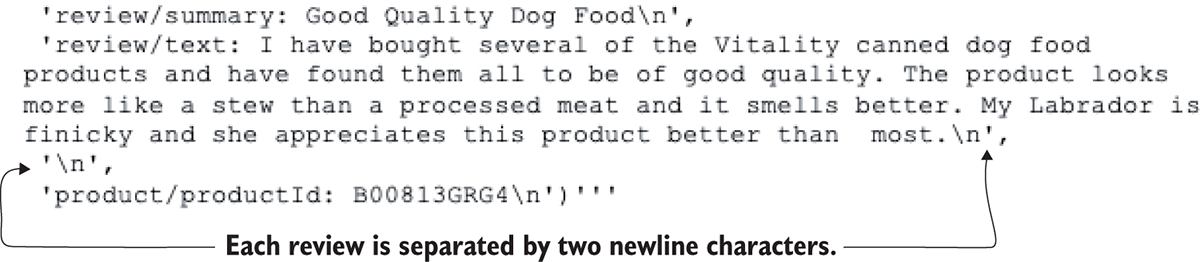

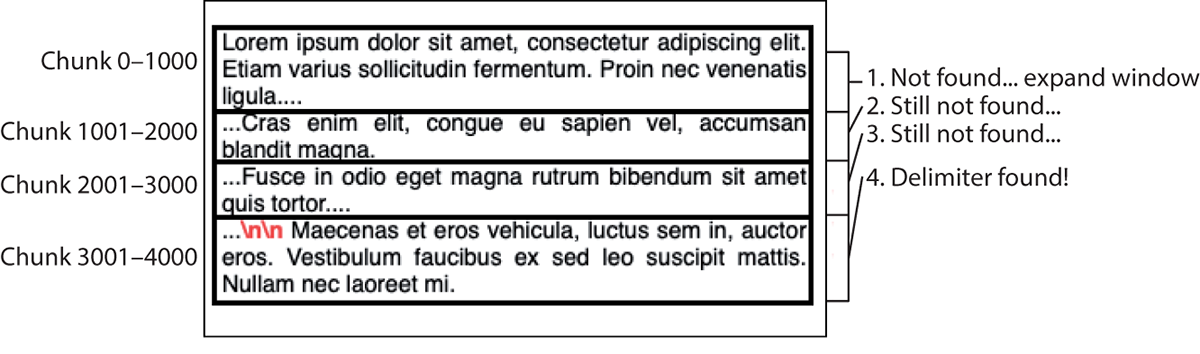

With the encoding problem solved, let's look at how we can add the structure we need to group the attributes of each review together. Since the file we're working with is just one long string of text data, we can look for patterns in the text that might be useful for dividing the text into logical chunks. Figure 9.3 shows a few hints as to where some useful patterns in the text are.

Figure 9.3 A pattern enables us to split the text into individual reviews.

In this particular example, the author of the data set has put two newline characters (which show up as \n) between each review. We can use this pattern as a delimiter to split the text into chunks, where each chunk of text contains all attributes of the review, such as the product ID, the rating, the review text, and so on. We will need to manually parse the text file using some functions from the Python standard library. What we want to avoid, however, is reading the entire file into memory in order to do this. Although this file could comfortably fit into memory, an approach that reads the entire file into memory would stop working once you start working with data sets that exceed the limits of your machine (and it would defeat the whole purpose of parallelism to boot!). Therefore, we will use Python's file-handling methods to stream the file a small chunk at a time. We'll search the text in the buffer for our desired delimiter, mark the position in the file where a review starts and ends, and then advance the buffer to find the position of the next review. We'll end up with a list of pointers to the start and end of each review, which can then be parsed into dictionaries of key-value pairs. The full process from start to finish is outlined in the flowchart in figure 9.4.

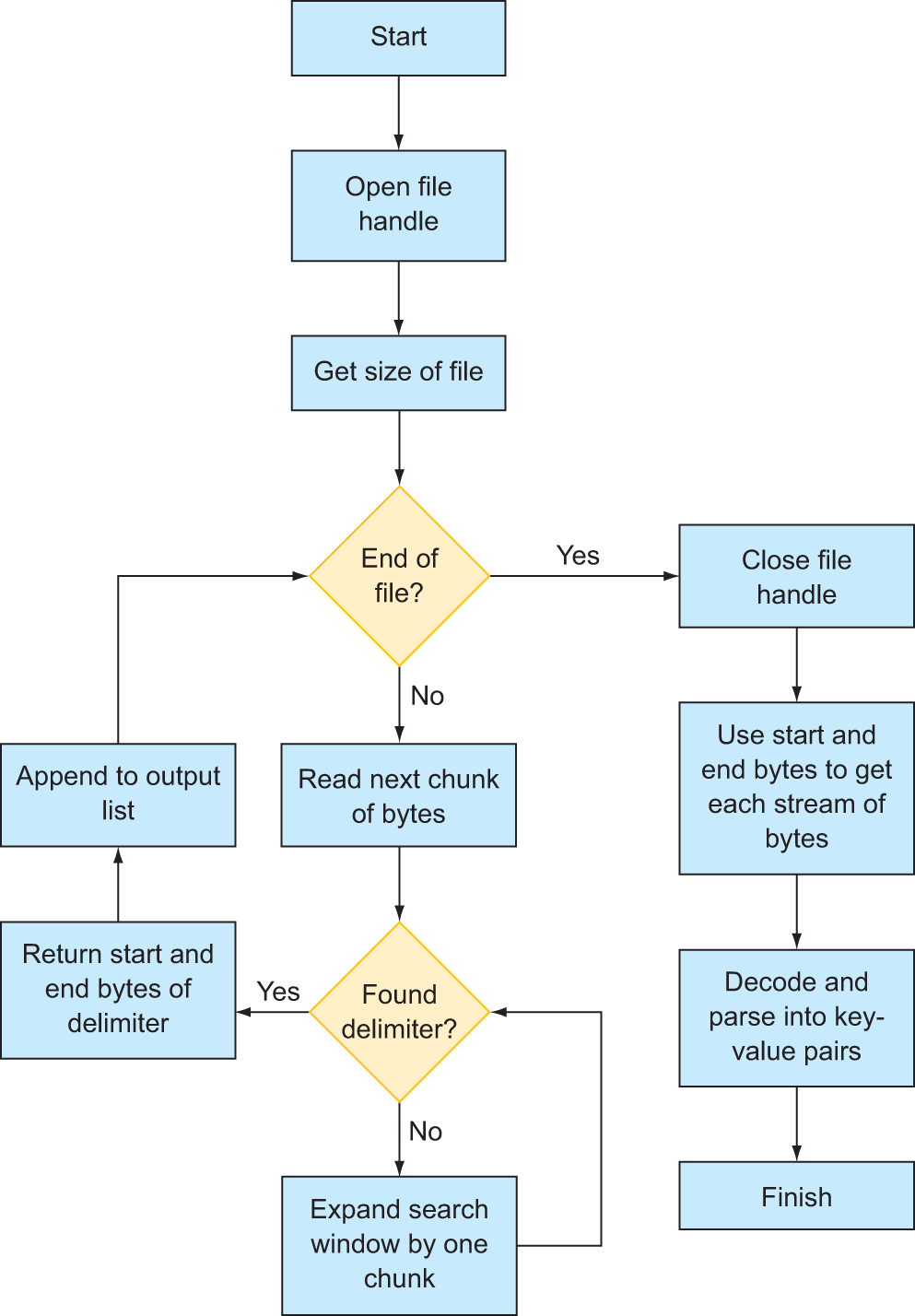

Figure 9.4 Our custom text-parsing algorithm to implement using Dask Delayed

First, we'll define a function that searches a part of a file for our specified delimiter. With Python's file handle system, it's possible to stream data in from a file starting at a specific byte number and stopping at a specific byte number. For instance, the beginning of the file is byte 0. The next character is byte 1, and so forth. Rather than load the whole file into memory, we can load chunks in at a time. For instance, we could load 1,000 bytes of data starting at byte 5,000. The space in memory we're loading the 1,000 bytes of data into is called a buffer. We can decode the buffer from raw bytes to a string object and then use all the string manipulation functions available to us in Python, such as find, strip, split, and so on. And since the buffer space is only 1,000 bytes in this example, that's approximately all the memory we will use.
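Here is a minimal illustration of seeking to a byte offset, reading a fixed-size buffer, decoding it, and searching it with find. It uses io.BytesIO as an in-memory stand-in for a file opened in binary mode. The contents are made up so that it runs without foods.txt.

```python
import io

# In-memory stand-in for a file opened with open(..., 'rb')
file_handle = io.BytesIO(b"first record\n\nsecond record\n\nthird")

file_handle.seek(14)             # jump straight to byte 14, the start of "second"
raw = file_handle.read(18)       # load an 18-byte buffer
buffer = raw.decode("cp1252")    # raw bytes -> str
position = buffer.find("\n\n")   # delimiter offset *within the buffer*, or -1

print(repr(buffer))   # 'second record\n\nthi'
print(position)       # 13
print(14 + position)  # 27: the delimiter's absolute position in the file
```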

We need a function that will

- Accept a file handle, a starting position (such as byte 5,000), and a buffer size.

- Then read the data into a buffer and search the buffer for the delimiter.

- If it's found, it should return the position of the delimiter relative to the starting position.

- However, we also need to deal with the possibility that a review is longer than our buffer size, which will result in the delimiter not being found.

- If this happens, the code should keep expanding the search space of the buffer by reading the next 1,000 bytes again and again until the delimiter is found.

Here’s a function that will do that.

Listing 9.5 A function to find the next occurrence of a delimiter in a file handle

```python
def get_next_part(file, start_index, span_index=0, blocksize=1000):
    file.seek(start_index)  #1
    buffer = file.read(blocksize + span_index).decode('cp1252')  #2
    delimiter_position = buffer.find('\n\n')
    if delimiter_position == -1:  #3
        if len(buffer) < blocksize + span_index:
            # End of file reached with no further delimiter:
            # treat the remaining bytes as the final record
            return start_index, len(buffer)
        return get_next_part(file, start_index, span_index + blocksize, blocksize)
    else:
        file.seek(start_index)
        return start_index, delimiter_position
```

#1 Make sure the file handle is currently pointing at the correct starting position.

#2 Read the next chunk of bytes, decode into a string, and search for the delimiter.

#3 If the delimiter isn’t found (find returns −1), recursively call the get_next_part function to search the next chunk of bytes; otherwise, return the position of the found delimiter.

Given a file handle and a starting position, this function will find the next occurrence of the delimiter. The recursive function call that occurs if the delimiter isn't found in the current buffer adds the current buffer size to the span_index parameter. This is how the window continues to expand if the delimiter search fails. The first time the function is called, the span_index will be 0. With a default blocksize of 1,000, this means the function will read the next 1,000 bytes after the starting position (1,000 blocksize + 0 span_index). If the find fails, the function is called again after incrementing the span_index by 1,000. Then it will try again by searching the next 2,000 bytes after the starting position (1,000 blocksize + 1,000 span_index). If the find continues to fail, the search window will keep expanding by 1,000 bytes until a delimiter is finally found or the end of the file is reached. A visual example of this process can be seen in figure 9.5.

Figure 9.5 A visual representation of the recursive delimiter search function
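If you'd rather avoid recursion, the same growing-window idea can be written as a loop. Python caps recursion depth (by default at about 1,000 calls), and each failed search adds one call. The function below, find_next_delimiter, is a hypothetical variant and not the listing's code. It searches the raw bytes rather than decoding first, which is equivalent for a single-byte encoding such as cp1252. When it reaches the end of the file without finding another delimiter, it returns the remaining length.

```python
import io

def find_next_delimiter(file, start_index, blocksize=1000, delimiter=b"\n\n"):
    """Hypothetical loop-based variant of get_next_part.

    Returns (start_index, length), where length counts the bytes from
    start_index up to (not including) the next delimiter, or up to the
    end of the file if no further delimiter exists.
    """
    span = blocksize
    while True:
        file.seek(start_index)
        buffer = file.read(span)
        position = buffer.find(delimiter)
        if position != -1:
            return start_index, position
        if len(buffer) < span:   # hit the end of the file without a delimiter
            return start_index, len(buffer)
        span += blocksize        # widen the window and search again

data = io.BytesIO(b"a long review here\n\nnext\n\n")
print(find_next_delimiter(data, 0, blocksize=4))   # (0, 18)
print(find_next_delimiter(data, 20, blocksize=4))  # (20, 4)
print(find_next_delimiter(data, 26, blocksize=4))  # (26, 0): end of file
```

Like the recursive version, it re-reads the record from its start each time the window grows. That keeps the code simple but repeats work for very long records.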

To find all instances of the delimiter in the file, we can call get_next_part inside a loop that iterates chunk by chunk until the end of the file is reached. To do this, we'll use a while loop.

Listing 9.6 Finding all instances of the delimiter

```python
with open('foods.txt', 'rb') as file_handle:
    size = file_handle.seek(0, 2) - 1  #1
    more_data = True  #2
    output = []
    current_position = next_position = 0
    while more_data:
        if current_position >= size:  #3
            more_data = False
        else:
            current_position, next_position = get_next_part(file_handle, current_position, 0)
            output.append((current_position, next_position))
            current_position = current_position + next_position + 2
```

#1 Get the total size of the file in bytes.

#2 Initialize a few variables to control the loop and store the output, starting at byte 0.

#3 If the end of the file has been reached, terminate the loop; otherwise, find the next instance of the delimiter starting at the current position; append the results to the output list, and update the current position to after the delimiter.

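Essentially, this approach accomplishes four things (listing 9.6 covers the first two; the last two happen when we build the Bag of reviews later in this section):

- Find the start position and the number of bytes to the delimiter for each review.

- Save all these positions to a list.

- Distribute the byte positions of the reviews to the workers.

- Have the workers process the data for the reviews at the byte positions they receive.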

After initializing a few variables, we enter the loop starting at byte 0. Every time a delimiter is found, the current position is advanced to just after the delimiter. For instance, if the first delimiter starts at byte 627, the first review is made up of bytes 0 through 626. The tuple (0, 627), meaning a start at byte 0 and a length of 627 bytes, would be appended to the output list, and the current position would be advanced to 629. We add two to the next_position variable because the delimiter is two bytes long (each '\n' is one byte) and occupies bytes 627 and 628. Since we don't really care about keeping the delimiters as part of the final review objects, we skip over them. The search for the next delimiter will therefore pick up at byte 629, which should be the first character of the next review. This continues until the end of the file is reached. By then, we have a list of tuples. The first element in each tuple represents the starting byte, and the second element in each tuple represents the number of bytes to read after the starting byte. The list of tuples looks like this:

[(0, 471), (473, 390), (865, 737), (1604, 414), (2020, 357), ...]
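The tuples encode a simple invariant: the next review starts exactly two bytes (the '\n\n' delimiter) after the end of the previous one. For the first tuple, the review occupies bytes 0 through 470, the delimiter occupies bytes 471 and 472, and the next review starts at byte 473. The sketch below checks that invariant on the five tuples shown above. offsets_are_contiguous is a hypothetical helper written for this illustration.

```python
# The first few (start, length) tuples from the output list
offsets = [(0, 471), (473, 390), (865, 737), (1604, 414), (2020, 357)]

DELIMITER_LENGTH = 2  # '\n\n'

def offsets_are_contiguous(pairs, gap=DELIMITER_LENGTH):
    """Hypothetical check: each record starts right after the previous record plus the delimiter."""
    return all(start + length + gap == next_start
               for (start, length), (next_start, _) in zip(pairs, pairs[1:]))

print(offsets_are_contiguous(offsets))  # True

# A tuple maps directly onto a byte slice: file_bytes[start:start + length]
first_start, first_length = offsets[0]
print(first_start, first_start + first_length)  # 0 471
```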

Context managers

When working with file handles in Python, it's good practice to use the context manager pattern, with open(…) as file_handle:, which can be seen in the first line of listing 9.6. Otherwise, the open function in Python requires explicit cleanup once you're done reading or writing the file, by calling the .close() method on the file handle. By wrapping code in the context manager, Python will close the open file handle automatically once the block of code has finished executing.
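The following sketch shows the context manager next to the roughly equivalent try/finally version. It uses a temporary file created just for the example.

```python
import os
import tempfile

path = os.path.join(tempfile.mkdtemp(), "example.txt")
with open(path, "w", encoding="utf-8") as f:
    f.write("hello\n")

# Context manager: the file is closed when the block ends,
# even if an exception is raised inside it
with open(path, "rb") as file_handle:
    first_bytes = file_handle.read(5)
print(file_handle.closed)  # True

# Roughly equivalent manual cleanup
file_handle = open(path, "rb")
try:
    first_bytes_manual = file_handle.read(5)
finally:
    file_handle.close()
print(file_handle.closed)  # True
```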

Before moving on, check the length of the output list using the len function. The list should contain 568,454 elements.

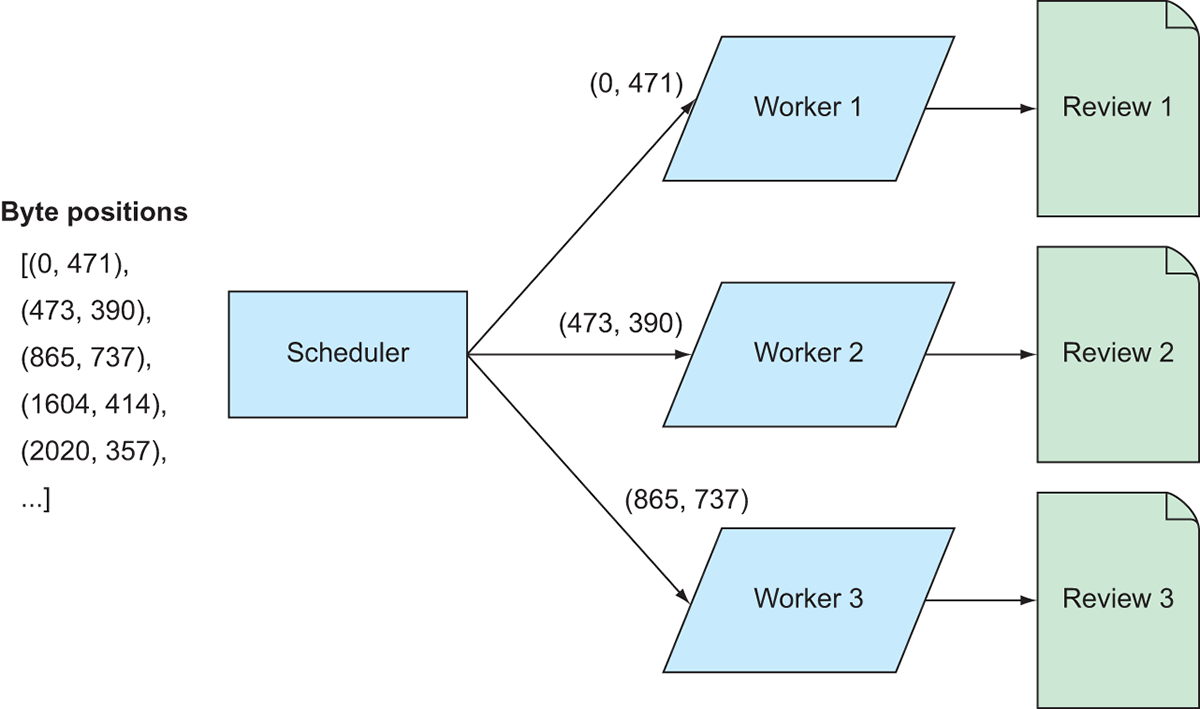

Now that we have a list containing all the byte positions of the reviews, we need to create some instructions to transform the list of addresses into a list of actual reviews. To do that, we'll need to create a function that takes a starting position and a number of bytes as input, reads the file at the specified byte location, and returns a parsed review object. Because there are hundreds of thousands of reviews to be parsed, we can speed up this process using Dask. Figure 9.6 demonstrates how we can divide up the work across multiple workers.

Figure 9.6 Mapping the parsing code to the review data

Effectively, the list of addresses will be divided up among the workers; each worker will open the file and parse the reviews at the byte positions it receives. Since each review is stored as a block of attribute lines, we will create a dictionary object for each review to store its attributes. Each attribute of the review looks something like this: 'review/userId: A3SGXH7AUHU8GW\n', so we can exploit the pattern of each key ending in ': ' to split the data into key-value pairs for the dictionaries. The next listing shows a function that will do that.

Listing 9.7 Parsing each byte stream into a dictionary of key-value pairs

```python
def get_item(filename, start_index, delimiter_position, encoding='cp1252'):
    with open(filename, 'rb') as file_handle:  #1
        file_handle.seek(start_index)  #2
        text = file_handle.read(delimiter_position).decode(encoding)
        elements = text.strip().split('\n')  #3
        # Split each attribute on the first ': ' only, so values that
        # themselves contain ': ' (for example, in the review text) stay intact
        key_value_pairs = [tuple(element.split(': ', 1))
                           if len(element.split(': ', 1)) > 1
                           else ('unknown', element)
                           for element in elements]  #4
        return dict(key_value_pairs)
```

#1 Create a file handle using the passed-in filename.

#2 Advance to the passed-in starting position and buffer the specified number of bytes.

#3 Split the string into a list of strings using the newline character as a delimiter; the list will have one element per attribute.

#4 Parse each raw attribute into a key-value pair using the ': ' pattern as a delimiter; cast the list of key-value pairs to a dictionary.
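One detail worth checking when splitting attributes on ': ' is that a value can itself contain ': ', as a review summary or review text easily might. An unrestricted split breaks such a value apart, while split(': ', 1) or str.partition splits only on the first occurrence. The example below uses a made-up summary line to compare them.

```python
line = "review/summary: Verdict: tasty but pricey"

naive = line.split(": ")       # splits on every ': '
limited = line.split(": ", 1)  # splits on the first ': ' only

print(naive)    # ['review/summary', 'Verdict', 'tasty but pricey']
print(limited)  # ['review/summary', 'Verdict: tasty but pricey']

# partition also splits once and reports whether the separator was found
key, separator, value = line.partition(": ")
print(key, "|", value)  # review/summary | Verdict: tasty but pricey
```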

Now that we have a function that will parse a specified part of the file, we need to actually send those instructions out to the workers so they can apply the parsing code to the data. We'll now bring everything together and create a Bag that contains the parsed reviews.

Listing 9.8 Producing the Bag of reviews

```python
reviews = bag.from_sequence(output).map(lambda x: get_item('foods.txt', x[0], x[1]))
```

The code in listing 9.8 does two things. First, we turn the list of byte addresses into a Bag using the from_sequence function of the Bag API. This creates a Bag that holds the same list of byte addresses as our original list, but now allows Dask to distribute the contents of the Bag to the workers. Next, the map function is called to transform each byte address tuple into its respective review object. Map effectively hands out the Bag of byte addresses and the instructions contained in the get_item function to the workers (remember that when Dask is running in local mode, the workers run on your own machine; for Bags, the default local scheduler uses separate processes rather than threads). A new Bag called reviews is created, and when it is computed, it will output the parsed reviews. In listing 9.8, we pass in the get_item function inside of a lambda expression so we can keep the filename parameter fixed while dynamically passing in the starting byte and length from each item in the Bag. As before, this entire process is lazy. The result of listing 9.8 will show that a Bag has been created with 101 partitions. However, taking elements from the Bag will now result in a very different output!

Listing 9.9 Taking elements from the transformed Bag

```python
reviews.take(2)

# Produces the following output:
'''({'product/productId': 'B001E4KFG0',
'review/userId': 'A3SGXH7AUHU8GW',
'review/profileName': 'delmartian',
'review/helpfulness': '1/1',
'review/score': '5.0',
'review/time': '1303862400',
'review/summary': 'Good Quality Dog Food',
'review/text': 'I have bought several of the Vitality canned dog food products and have found them all to be of good quality. The product looks more like a stew than a processed meat and it smells better. My Labrador is finicky and she appreciates this product better than most.'},
{'product/productId': 'B00813GRG4',
'review/userId': 'A1D87F6ZCVE5NK',
'review/profileName': 'dll pa',
'review/helpfulness': '0/0',
'review/score': '1.0',
'review/time': '1346976000',
'review/summary': 'Not as Advertised',
'review/text': 'Product arrived labeled as Jumbo Salted Peanuts...the peanuts were actually small sized unsalted. Not sure if this was an error or if the vendor intended to represent the product as "Jumbo".'})'''
```

Each element in the transformed Bag is now a dictionary that contains all attributes of the review! This will make analysis a lot easier for us. Additionally, if we count the items in the transformed Bag, we also get a far different result.

Listing 9.10 Counting items in the transformed Bag

```python
from dask.diagnostics import ProgressBar

with ProgressBar():
    count = reviews.count().compute()
count

# Produces the following output:
'''
[########################################] | 100% Completed | 8.5s
568454
'''
```

The number of elements in the Bag has been greatly reduced because we've reassembled the raw text into logical reviews. This count also matches the number of reviews stated by Stanford on the data set's webpage, so we can be reasonably confident that we've correctly parsed the data without running into any more encoding issues! Now that our data is a bit easier to work with, we'll look at some other ways we can manipulate data using Bags.
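As an alternative to the lambda in listing 9.8, Bag.map passes any extra non-Bag arguments through unchanged to every call of the mapped function. The sketch below shows that style on a tiny in-memory stand-in for foods.txt. The DATA bytes, the offsets, and the get_record function are hypothetical, chosen so that the (start, length) tuples follow the same layout as the real ones.

```python
import dask
import dask.bag as bag

dask.config.set(scheduler="synchronous")

# Hypothetical stand-in for foods.txt and its (start, length) tuples
DATA = b"a: 1\nb: 2\n\nc: 3\n\nd: 44\n\n"
offsets = [(0, 9), (11, 4), (17, 5)]

def get_record(item, source, encoding="cp1252"):
    start, length = item
    return source[start:start + length].decode(encoding)

# source=DATA is not a Bag, so map sends it unchanged to every call,
# doing the same job as wrapping get_record in a lambda
records = bag.from_sequence(offsets, npartitions=2).map(get_record, source=DATA)

print(records.compute())  # ['a: 1\nb: 2', 'c: 3', 'd: 44']
```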

9.2 Transforming, filtering, and folding elements

Unlike lists and other generic collections in Python, Bags are not subscriptable, meaning it's not possible to access a specific element of a Bag in a straightforward way. This can make data manipulation slightly more challenging until you become comfortable with thinking about data manipulation in terms of transformations. If you are familiar with functional programming or MapReduce-style programming, this line of thinking comes naturally. However, it can seem a bit counterintuitive at first if you have a background in SQL, spreadsheets, and Pandas. If this is the case, don't worry. With a bit of practice, you too will be able to start thinking of data manipulation in terms of transformations!
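As a small taste of this style, the sketch below answers two index-style questions with transformations on a made-up Bag of dictionaries. pluck pulls one key out of every dictionary, much like mapping a function that looks up that key. take with a final Python index stands in for "give me the second element."

```python
import dask
import dask.bag as bag

dask.config.set(scheduler="synchronous")

records = bag.from_sequence(
    [{"id": "A", "score": "5.0"}, {"id": "B", "score": "1.0"}, {"id": "C", "score": "4.0"}],
    npartitions=2,
)

# "Give me every score as a number" becomes a transformation of every element
scores = records.pluck("score").map(float)
print(scores.compute())  # [5.0, 1.0, 4.0]

# "Give me the second element" becomes "take the first two, keep the last"
second = records.take(2, npartitions=-1)[-1]
print(second)  # {'id': 'B', 'score': '1.0'}
```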

The next scenario we’ll use for motivation is the following:

Using the Amazon Fine Foods Reviews data set, tag each review as being positive or negative by using the review score as a threshold.

9.2.1 Transforming elements with the map method

Let's start easy: first, we'll simply get all the review scores for the entire data set. To do this, we'll use the map function again. Rather than thinking about what we're trying to do as getting the review scores, think about what we're trying to do as transforming our Bag of reviews to a Bag of review scores. We need some kind of function that will take a review (dictionary) object in as an input and spit out the review score. A function that does that would look like this.

Listing 9.11 Extracting a value from a dictionary

```python
def get_score(element):
    score_numeric = float(element['review/score'])
    return score_numeric
```

This is just plain old Python. We could pass any dictionary into this function, and if it contained a key of review/score, this function would cast the value to a float and return the value. If we map over our Bag of dictionaries using this function, it will transform each dictionary into a float containing the relevant review score. This is quite simple.

Listing 9.12 Getting the review scores

```python
review_scores = reviews.map(get_score)
review_scores.take(10)

# Produces the following output:
# (5.0, 1.0, 4.0, 2.0, 5.0, 4.0, 5.0, 5.0, 5.0, 5.0)
```

The review_scores Bag now contains all the raw review scores. The transformations you create can be any valid Python function. For instance, if we wanted to tag the reviews as being positive or negative based on the review score, we could use a function like this.

Listing 9.13 Tagging reviews as positive or negative

```python
def tag_positive_negative_by_score(element):
    tagged = dict(element)  # work on a copy so the input dictionary is left unchanged
    if float(element['review/score']) > 3:
        tagged['review/sentiment'] = 'positive'
    else:
        tagged['review/sentiment'] = 'negative'
    return tagged

reviews.map(tag_positive_negative_by_score).take(2)

'''
Produces the following output:
({'product/productId': 'B001E4KFG0',
'review/userId': 'A3SGXH7AUHU8GW',
'review/profileName': 'delmartian',
'review/helpfulness': '1/1',
'review/score': '5.0',
'review/time': '1303862400',
'review/summary': 'Good Quality Dog Food',
'review/text': 'I have bought several of the Vitality canned dog food products and have found them all to be of good quality. The product looks more like a stew than a processed meat and it smells better. My Labrador is finicky and she appreciates this product better than most.',
'review/sentiment': 'positive'}, #1
{'product/productId': 'B00813GRG4',
'review/userId': 'A1D87F6ZCVE5NK',
'review/profileName': 'dll pa',
'review/helpfulness': '0/0',
'review/score': '1.0',
'review/time': '1346976000',
'review/summary': 'Not as Advertised',
'review/text': 'Product arrived labeled as Jumbo Salted Peanuts...the peanuts were actually small sized unsalted. Not sure if this was an error or if the vendor intended to represent the product as "Jumbo".',
'review/sentiment': 'negative'})''' #2
```

#1 This review is more than 3 (5.0), so it is a positive review.

#2 This review is less than 3 (1.0), so it is a negative review.

In listing 9.13, we mark a review as being positive if its score is greater than three stars; otherwise, we mark it as negative. You can see the new review/sentiment elements are displayed when we take some elements from the transformed Bag. However, be careful: while it may look like we've modified the original data because we're assigning new key-value pairs to each dictionary, the original data actually remains the same. Bags, like DataFrames and Arrays, are immutable objects. Map doesn't change the reviews Bag; it returns a new Bag containing whatever dictionaries the mapped function produces. Because the reviews Bag is rebuilt from the source file every time it's computed, its elements come back without the additional key-value pairs. We can confirm this by looking at the original reviews Bag.

Listing 9.14 Demonstrating the immutability of Bags

```python
reviews.take(1)

'''
Produces the following output:
({'product/productId': 'B001E4KFG0',
'review/userId': 'A3SGXH7AUHU8GW',
'review/profileName': 'delmartian',
'review/helpfulness': '1/1',
'review/score': '5.0',
'review/time': '1303862400',
'review/summary': 'Good Quality Dog Food',
'review/text': 'I have bought several of the Vitality canned dog food products and have found them all to be of good quality. The product looks more like a stew than a processed meat and it smells better. My Labrador is finicky and she appreciates this product better than most.'},)
'''
```

As you can see, the review/sentiment key is nowhere to be found. Just as when working with DataFrames, be aware of immutability to ensure you don't run into any issues with disappearing data.
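One way to make sure a mapped function never disturbs its input is to have it build and return a new dictionary. The sketch below uses a hypothetical tag_sentiment function and a two-review sample. It shows that the mapped Bag gains the new key while the original Bag's elements stay as they were. Whether an in-place edit would leak back into the source depends on how and where the elements are produced, so returning a copy is the simpler habit.

```python
import dask
import dask.bag as bag

dask.config.set(scheduler="synchronous")

reviews_sample = bag.from_sequence([{"review/score": "5.0"}, {"review/score": "2.0"}])

def tag_sentiment(element):
    # Build a new dictionary instead of modifying element in place
    label = "positive" if float(element["review/score"]) > 3 else "negative"
    return {**element, "review/sentiment": label}

tagged = reviews_sample.map(tag_sentiment)

print(tagged.compute())
# [{'review/score': '5.0', 'review/sentiment': 'positive'},
#  {'review/score': '2.0', 'review/sentiment': 'negative'}]
print(reviews_sample.compute())
# [{'review/score': '5.0'}, {'review/score': '2.0'}]
```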

9.2.2 Filtering Bags with the filter method

The second important data manipulation operation with Bags is filtering. Although Bags don't offer a way to easily access a specific element, say the 45th element in the Bag, they do offer an easy way to search for specific data. Filter expressions are Python functions that return True or False. The filter method maps the filter expression over the Bag, and any element that returns True when the filter expression is evaluated is retained. Conversely, any element that returns False when the filter expression is evaluated is discarded. For example, if we want to find all reviews of product B001E4KFG0, we can create a filter expression to return that data.

Listing 9.15 Searching for a specific product

```python
specific_item = reviews.filter(lambda element: element['product/productId'] == 'B001E4KFG0')
specific_item.take(5)

'''
Produces the following output:
/anaconda3/lib/python3.6/site-packages/dask/bag/core.py:2081: UserWarning: Insufficient elements for `take`. 5 elements requested, only 1 elements available. Try passing larger `npartitions` to `take`.
"larger `npartitions` to `take`.".format(n, len(r)))
({'product/productId': 'B001E4KFG0',
'review/userId': 'A3SGXH7AUHU8GW',
'review/profileName': 'delmartian',
'review/helpfulness': '1/1',
'review/score': '5.0',
'review/time': '1303862400',
'review/summary': 'Good Quality Dog Food',
'review/text': 'I have bought several of the Vitality canned dog food products and have found them all to be of good quality. The product looks more like a stew than a processed meat and it smells better. My Labrador is finicky and she appreciates this product better than most.'},)
'''
```

Listing 9.15 returns the data we requested, as well as a warning letting us know that take found fewer elements than we asked for. By default, take only looks at the first partition of the Bag, so the warning tells us that only one review of the product we specified was found in that partition. We can also easily do fuzzy-matching searches. For example, we could find all reviews that mention “dog” in the review text.

Listing 9.16 Looking for all reviews that mention “dog”

keyword = reviews.filter(lambda element: 'dog' in element['review/text'])

keyword.take(5)

'''

Produces the following output:

({'product/productId': 'B001E4KFG0',

'review/userId': 'A3SGXH7AUHU8GW',

'review/profileName': 'delmartian',

'review/helpfulness': '1/1',

'review/score': '5.0',

'review/time': '1303862400',

'review/summary': 'Good Quality Dog Food',

'review/text': 'I have bought several of the Vitality canned dog food products and have found them all to be of good quality. The product looks more like a stew than a processed meat and it smells better. My Labrador is finicky and she appreciates this product better than most.'},

...)

'''
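The warning in listing 9.15 comes from how take works rather than from filter: take looks only in the first partition by default (npartitions=1), so a filtered Bag whose matches sit in later partitions can return fewer items than requested. Passing npartitions=-1, for example specific_item.take(5, npartitions=-1), makes Dask search every partition at the cost of more computation. The plain-Python model below treats a Bag as a list of partitions; filter_model and take_model are hypothetical helpers and the records are toy values, so this is a sketch of the behavior, not Dask’s implementation or the real data.

```python
import warnings

def filter_model(partitions, predicate):
    # filter runs the predicate on every element, partition by partition,
    # keeping only the elements for which it returns True
    return [[e for e in part if predicate(e)] for part in partitions]

def take_model(partitions, k, npartitions=1):
    # take collects up to k elements from the first `npartitions` partitions;
    # -1 means "use all partitions"
    if npartitions == -1:
        npartitions = len(partitions)
    found = [e for part in partitions[:npartitions] for e in part][:k]
    if len(found) < k:
        warnings.warn(f"{k} elements requested, only {len(found)} available")
    return tuple(found)

# A toy "Bag" with two partitions
bag = [
    [{'id': 'A', 'text': 'my dog loves it'}, {'id': 'B', 'text': 'tasty tea'}],
    [{'id': 'C', 'text': 'dog treats'}],
]
dog_reviews = filter_model(bag, lambda e: 'dog' in e['text'])

with warnings.catch_warnings():
    warnings.simplefilter('ignore')  # silence the expected warnings in this demo
    first_only = take_model(dog_reviews, 5)
    everywhere = take_model(dog_reviews, 5, npartitions=-1)

print([e['id'] for e in first_only])  # ['A']
print([e['id'] for e in everywhere])  # ['A', 'C']
```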

And, just as with mapping operations, it’s possible to make filtering expressions more complex as well. To demonstrate, let’s use the following scenario for motivation:

Using the Amazon Fine Foods Reviews data set, write a filter function that removes reviews that were not deemed “helpful” by other Amazon customers.

Amazon gives users the ability to rate reviews for their helpfulness. The review/helpfulness attribute represents the number of times a user said the review was helpful out of the number of times users voted on the review. A helpfulness of 1/3 indicates that three users evaluated the review and only one found it helpful (meaning the other two did not). Unhelpful reviews are likely to be ones where the reviewer gave an unfairly low or unfairly high score without justifying it in the review. It might be a good idea to eliminate unhelpful reviews from the data set because they may not fairly represent the quality or value of a product. Let’s look at how unhelpful reviews influence the data by comparing the mean review score with and without them. First, we’ll create a filter expression that returns True if more than 75% of users who voted found the review to be helpful, thereby removing any reviews below that threshold.

Listing 9.17 A filter expression to filter out unhelpful reviews

def is_helpful(element):
    helpfulness = element['review/helpfulness'].strip().split('/') #1
    number_of_helpful_votes = float(helpfulness[0])
    number_of_total_votes = float(helpfulness[1])
    # Watch for divide by 0 errors
    if number_of_total_votes > 1: #2
        return number_of_helpful_votes / number_of_total_votes > 0.75
    else:
        return False

#1 Parse the helpfulness score into a numerator and denominator by splitting on the / and casting each number to a float.
#2 If the review has fewer than two votes, discard it; otherwise, keep it only if more than 75% of voters found it helpful.

Unlike the simple filter expressions defined inline using lambda expressions in listings 9.15 and 9.16, we’ll define a function for this filter expression. Before we can evaluate the percentage of users that found the review helpful, we first have to calculate that percentage by parsing and transforming the raw helpfulness score. Again, we can do this in plain old Python using locally scoped variables. We add a guard around the calculation to avoid a divide-by-zero error when no users voted on the review. Because the guard checks for more than one vote, it also discards reviews with a single vote (note: practically, this means we treat reviews with fewer than two votes as unhelpful). If a review has more than one vote, we return a Boolean expression that evaluates to True if more than 75% of voters found the review helpful. Now we can apply it to the data to see what happens.

Listing 9.18 Viewing the filtered data

helpful_reviews = reviews.filter(is_helpful)

helpful_reviews.take(2)

'''

Produces the following output:

({'product/productId': 'B000UA0QIQ',

'review/userId': 'A395BORC6FGVXV',

'review/profileName': 'Karl',

'review/helpfulness': '3/3', #1

'review/score': '2.0',

'review/time': '1307923200',

'review/summary': 'Cough Medicine',

'review/text': 'If you are looking for the secret ingredient in Robitussin I believe I have found it. I got this in addition to the Root Beer Extract I ordered (which was good) and made some cherry soda. The flavor is very medicinal.'},

{'product/productId': 'B0009XLVG0',

'review/userId': 'A2725IB4YY9JEB',

'review/profileName': 'A Poeng "SparkyGoHome"',

'review/helpfulness': '4/4',

'review/score': '5.0',

'review/time': '1282867200',

'review/summary': 'My cats LOVE this "diet" food better than their regular food',

'review/text': "One of my boys needed to lose some weight and the other didn't. I put this food on the floor for the chubby guy, and the protein-rich, no by-product food up higher where only my skinny boy can jump. The higher food sits going stale. They both really go for this food. And my chubby boy has been losing about an ounce a week."})

'''

#1 This is a helpful review.
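To see exactly where is_helpful draws the line, the following check runs it (redefined so the snippet stands alone) against a few hand-written helpfulness strings. Two edge cases stand out: a single vote is never enough because of the > 1 guard, and exactly 75% is rejected because the comparison is strict. The first of these is consistent with the 1/1 review shown in listing 9.14 not appearing in listing 9.18.

```python
def is_helpful(element):
    helpfulness = element['review/helpfulness'].strip().split('/')
    number_of_helpful_votes = float(helpfulness[0])
    number_of_total_votes = float(helpfulness[1])
    # Guards against division by zero and discards single-vote reviews
    if number_of_total_votes > 1:
        return number_of_helpful_votes / number_of_total_votes > 0.75
    return False

checks = {
    '0/0': False,  # no votes at all
    '1/1': False,  # only one vote, so the > 1 guard rejects it
    '1/3': False,  # 33% helpful
    '3/4': False,  # exactly 75%, and the comparison is strict
    '4/5': True,   # 80% helpful
    '3/3': True,   # 100% helpful
}
for ratio, expected in checks.items():
    assert is_helpful({'review/helpfulness': ratio}) == expected
print('all checks passed')
```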

9.2.3 Calculating descriptive statistics on Bags

As expected, all of the reviews in the filtered Bag are “helpful.” Now let’s take a look at how that affects the review scores.

Listing 9.19 Comparing mean review scores

helpful_review_scores = helpful_reviews.map(get_score)

with ProgressBar():
    all_mean = review_scores.mean().compute()
    helpful_mean = helpful_review_scores.mean().compute()

print(f"Mean Score of All Reviews: {round(all_mean, 2)}\nMean Score of Helpful Reviews: {round(helpful_mean,2)}")

# Produces the following output:
# Mean Score of All Reviews: 4.18
# Mean Score of Helpful Reviews: 4.37

In listing 9.19, we first extract the scores from the filtered Bag by mapping the get_score function over it. Then, we call the mean method on each of the Bags that contain review scores. After the means are computed, the output is displayed. Comparing the mean scores lets us see whether there’s any relationship between the helpfulness of reviews and their sentiment. Are negative reviews typically seen as helpful? Unhelpful? Comparing the means allows us to answer this question. As you can see, if we filter out the unhelpful reviews, the mean review score is actually a bit higher than the mean score for all reviews. This is most likely explained by the tendency of negative reviews to get downvoted if the reviewers don’t do a good job of justifying the negative score. We can look for further evidence by comparing the mean length of helpful and unhelpful reviews.

Listing 9.20 Comparing mean review lengths based on helpfulness

def get_length(element):
    return len(element['review/text'])

with ProgressBar():
    review_length_helpful = helpful_reviews.map(get_length).mean().compute()
    review_length_unhelpful = reviews.filter(lambda review: not is_helpful(review)).map(get_length).mean().compute()

print(f"Mean Length of Helpful Reviews: {round(review_length_helpful, 2)}\nMean Length of Unhelpful Reviews: {round(review_length_unhelpful,2)}")

# Produces the following output:
# Mean Length of Helpful Reviews: 459.36
# Mean Length of Unhelpful Reviews: 379.32

In listing 9.20, we’ve chained map and filter operations together to produce our result. Since we had already isolated the helpful reviews, we can simply map the get_length function over the Bag of helpful reviews to extract the length of each review. However, we hadn’t isolated the unhelpful reviews before, so we did the following:

- Filtered the Bag of reviews using the is_helpful filter expression
- Used the not operator to invert the behavior of the filter expression (unhelpful reviews are retained, helpful reviews are discarded)
- Used map with the get_length function to count the length of each unhelpful review
- Finally, calculated the mean of all review lengths

It looks like unhelpful reviews are indeed shorter than helpful reviews on average. This suggests that longer reviews are more likely to be voted helpful by the community.
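The same filter, map, and mean chain can be written with Python built-ins, which is a handy way to sanity-check a pipeline on a small sample before running it on the full Bag. This sketch assumes a compact is_helpful that applies the same rule as listing 9.17, and the three records are hypothetical.

```python
from statistics import mean

def is_helpful(element):
    # Same rule as listing 9.17: more than one vote and over 75% helpful
    helpful, total = (float(x) for x in element['review/helpfulness'].strip().split('/'))
    return total > 1 and helpful / total > 0.75

def get_length(element):
    return len(element['review/text'])

# Hypothetical sample records
sample = [
    {'review/helpfulness': '4/5', 'review/text': 'A long, detailed review.'},
    {'review/helpfulness': '0/0', 'review/text': 'Meh.'},
    {'review/helpfulness': '1/4', 'review/text': 'Not great.'},
]

# filter(not is_helpful) -> map(get_length) -> mean, mirroring listing 9.20
unhelpful_lengths = map(get_length, filter(lambda r: not is_helpful(r), sample))
print(mean(unhelpful_lengths))  # 7, the mean of 4 and 10 characters
```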

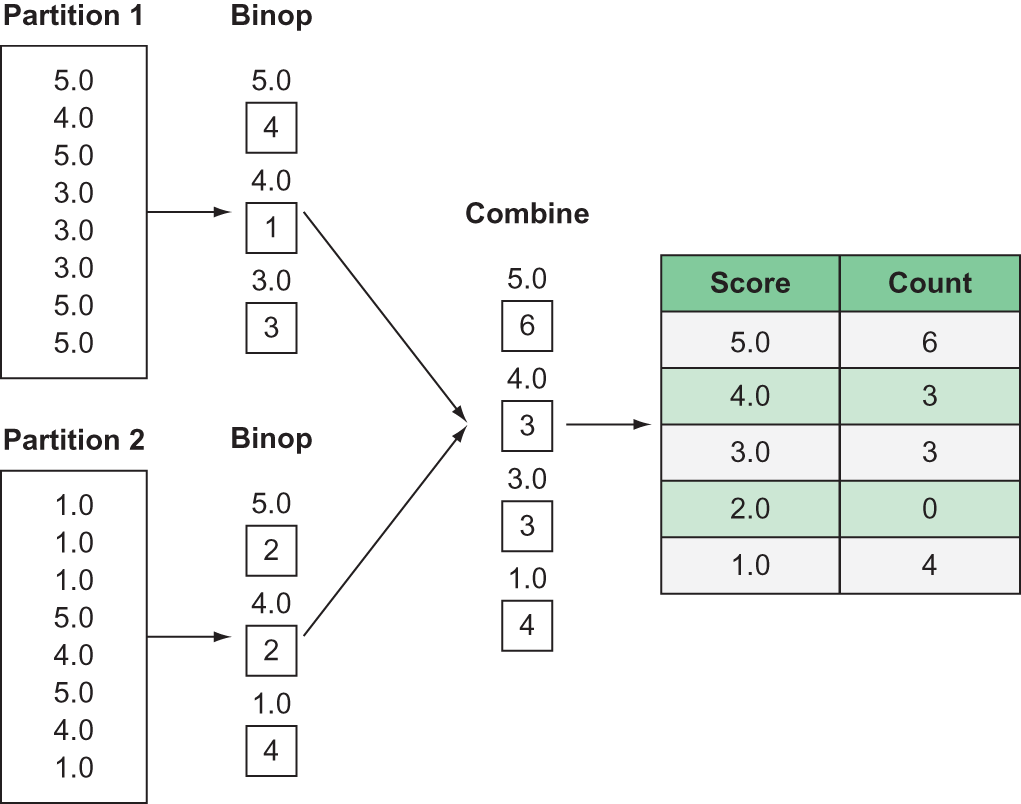

9.2.4 Creating aggregate functions using the foldby method

The last important data manipulation operation with Bags is folding. Folding is a special kind of reduce operation. While reduce operations have not been explicitly called out in this chapter, we’ve already seen a number of them throughout the book, including in the previous code listing. Reduce operations, as you may guess by the name, reduce a collection of items in a Bag to a single value. For example, the mean method in the previous code listing reduces the Bag of raw review scores to a single value: the mean. Reduce operations typically involve some sort of aggregation over the Bag of values, such as summing, counting, and so on. Regardless of what the reduce operation does, it always results in a single value. Folding, on the other hand, allows us to add a grouping to the aggregation. A good example is counting the number of reviews by review score. Rather than count all the items in the Bag using a reduce operation, a fold operation lets us count the number of items in each group. This means a fold operation reduces the number of elements in a Bag to the number of distinct groups that exist within the specified grouping. In the example of counting reviews by review score, this would reduce our original Bag down to five elements, as there are five distinct review scores possible. Figure 9.7 shows an example of folding.
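The difference between a reduce and a grouped fold is easy to see in plain Python. In this sketch, which uses toy scores rather than the real data set, functools.reduce collapses all the scores into a single count, while the grouped version keeps one accumulator per distinct score.

```python
from functools import reduce

scores = [5.0, 1.0, 5.0, 4.0, 5.0, 1.0]  # toy review scores

# Reduce: the whole collection becomes one value
total_reviews = reduce(lambda acc, _: acc + 1, scores, 0)

# Fold by key: one accumulator per group (here, per distinct score)
counts_by_score = {}
for score in scores:
    counts_by_score[score] = counts_by_score.get(score, 0) + 1

print(total_reviews)    # 6
print(counts_by_score)  # {5.0: 3, 1.0: 2, 4.0: 1}
```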

First, we need to define two functions to pass to the foldby method. These are called the binop and combine functions.

Figure 9.7 An example of a fold operation

The binop function defines what should be done with the elements of each group, and it always has two parameters: one for the accumulator and one for the element. The accumulator holds the intermediate result across calls to the binop function. In this example, since our binop function is a counting function, it simply adds one to the accumulator each time it is called. Because the binop function is called for every element in a group, this results in a count of the items in each group. If the value of each element needs to be accessed, for instance if we wanted to sum the review scores, it can be accessed through the element parameter of the binop function. A sum function would simply add the element to the accumulator.
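Within a single group, a binop is applied much like the function passed to functools.reduce, starting from the initial value. The sketch below contrasts the counting binop used in this section with a hypothetical summing binop, add_score, that reads the element itself; the group values are toy review scores.

```python
from functools import reduce

def count(accumulator, element):
    # Ignores the element's value; just counts it
    return accumulator + 1

def add_score(accumulator, element):
    # Hypothetical summing binop: uses the element's value
    return accumulator + element

group = [4.0, 4.0, 4.0]  # toy scores belonging to one group
print(reduce(count, group, 0))      # 3
print(reduce(add_score, group, 0))  # 12.0
```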

The combine function defines what should be done with the results of the binop function across the Bag’s partitions. For example, we might have reviews with a score of three in several partitions. We want to count the total number of three-star reviews across the entire Bag, so the intermediate results from each partition should be summed together. Just like the binop function, the first argument of the combine function is an accumulator; its second argument, however, is not a raw element but an intermediate result that binop produced in some partition. Constructing these two functions can be challenging, but you can effectively think of the pair as a “group by” operation. The binop function specifies what should be done to the grouped data, and the combine function defines what should be done with groups that exist across partitions.
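Before turning to Dask, the two-level structure of a fold can be modeled in plain Python. Binop folds each partition’s elements into per-key partial results, starting from the binop initial value, and combine then merges the partial results for the same key across partitions, starting from the combine initial value. The helper foldby_model is hypothetical and written for illustration; it is not Dask’s implementation, which runs these steps as parallel tasks.

```python
def foldby_model(partitions, key, binop, initial, combine, combine_initial):
    # Step 1: fold each partition independently, grouping elements by key
    partials = []
    for part in partitions:
        acc = {}
        for element in part:
            k = key(element)
            acc[k] = binop(acc.get(k, initial), element)
        partials.append(acc)
    # Step 2: merge per-partition results for the same key with combine
    result = {}
    for acc in partials:
        for k, value in acc.items():
            result[k] = combine(result.get(k, combine_initial), value)
    return list(result.items())

def count(accumulator, element):
    return accumulator + 1

def combine(total1, total2):
    return total1 + total2

# Toy scores split over three partitions; three-star reviews appear in two of them
partitions = [[5.0, 3.0, 5.0], [3.0, 1.0], [5.0]]
print(foldby_model(partitions, lambda s: s, count, 0, combine, 0))
# [(5.0, 3), (3.0, 2), (1.0, 1)]
```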

Now let’s take a look at what this looks like in code.

Listing 9.21 Using foldby to count the reviews by review score

def count(accumulator, element): #1
    return accumulator + 1

def combine(total1, total2): #2
    return total1 + total2

with ProgressBar(): #3
    count_of_reviews_by_score = reviews.foldby(get_score, count, 0, combine, 0).compute()
count_of_reviews_by_score

#1 Define a function to count items by increasing an accumulator variable by 1 for each element.
#2 Define a function to reduce the counts per group across partitions.
#3 Put everything together using the foldby method, using 0 as the initial value for each accumulator.

The five arguments we pass to the foldby method, in order from left to right, are the key function, the binop function, an initial value for the binop accumulator, the combine function, and an initial value for the combine accumulator. The key function defines what the values should be grouped by. Generally, the key function just returns a value that’s used as a grouping key. In listing 9.21, it simply returns the value of the review score using the get_score function defined earlier in the chapter. The output of listing 9.21 looks like this.

Listing 9.22 The output of the foldby operation

# [(5.0, 363122), (1.0, 52268), (4.0, 80655), (2.0, 29769), (3.0, 42640)]

What we’re left with after the code runs is a list of tuples, where the first element is the key and the second element is the final count for that key (the binop results from each partition, merged by the combine function). For example, there were 363,122 reviews that were given a five-star rating. Given the high mean review score, it shouldn’t come as any surprise that most of the reviews have a five-star rating. It’s also interesting that there were more one-star reviews than there were two-star or three-star reviews. About 73% of all reviews in this data set (415,390 of 568,454) were either five stars or one star; it seems most of our reviewers either absolutely loved their purchase or absolutely hated it. To get a better feel for the data, let’s dig a little deeper into the statistics of both the review scores and the helpfulness of reviews.
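The share of five-star and one-star reviews can be checked directly from the counts in listing 9.22:

```python
# Counts copied from the output of listing 9.22
counts = dict([(5.0, 363122), (1.0, 52268), (4.0, 80655), (2.0, 29769), (3.0, 42640)])

total = sum(counts.values())
extreme_share = (counts[5.0] + counts[1.0]) / total

print(total)                    # 568454
print(round(extreme_share, 3))  # 0.731
```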

9.3 Building Arrays and DataFrames from Bags

Because the tabular format lends itself so well to numerical analysis, it’s likely that even if you begin a project by working with an unstructured data set, you’ll need to put some of your transformed data into a more structured format as you clean and massage it. Therefore, it’s good to know how to build other kinds of data structures using data that begins in a Bag. In the Amazon Fine Foods data set we’ve been looking at in this chapter, we have some numeric data, such as the review scores and the helpfulness percentage that was calculated earlier. To better understand what these values tell us about the reviews, it would be helpful to produce descriptive statistics for them. As we touched on in chapter 6, Dask provides a wide range of statistical functions in the stats module of the Dask Array API. We’ll now look at how to convert the Bag data we want to analyze into a structured Dask collection, a DataFrame in this case, so we can compute those statistics. First, we’ll start by creating a function that isolates the review score and calculates the helpfulness percentage for each review.

Listing 9.23 A function to get the review score and helpfulness rating of each review

def get_score_and_helpfulness(element):
    score_numeric = float(element['review/score']) #1
    helpfulness = element['review/helpfulness'].strip().split('/') #2
    number_of_helpful_votes = float(helpfulness[0])
    number_of_total_votes = float(helpfulness[1])
    # Watch for divide by 0 errors
    if number_of_total_votes > 0:
        helpfulness_percent = number_of_helpful_votes / number_of_total_votes
    else:
        helpfulness_percent = 0.
    return (score_numeric, helpfulness_percent) #3

#1 Get the review score and cast it to a float.
#2 Calculate the helpfulness rating.
#3 Return the two values as a tuple.

The code in listing 9.23 should look familiar. It essentially combines the get_score function with the helpfulness calculation from the is_helpful filter function used to remove unhelpful reviews. Since this function returns a tuple of the two values, mapping it over the Bag of reviews results in a Bag of tuples. This effectively mimics the row-column format of tabular data, since every tuple in the Bag has the same length, and the values at each position have the same meaning.
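Because every call returns a two-element tuple, the mapped Bag behaves like a table with two columns. The quick check below runs the function from listing 9.23 (repeated so the snippet stands alone) on two hypothetical records. Note that, unlike is_helpful, this function guards with > 0, so a review with no votes gets a helpfulness of 0.0 rather than being dropped.

```python
def get_score_and_helpfulness(element):
    score_numeric = float(element['review/score'])
    helpfulness = element['review/helpfulness'].strip().split('/')
    number_of_helpful_votes = float(helpfulness[0])
    number_of_total_votes = float(helpfulness[1])
    # Reviews without votes get 0.0 instead of raising ZeroDivisionError
    if number_of_total_votes > 0:
        helpfulness_percent = number_of_helpful_votes / number_of_total_votes
    else:
        helpfulness_percent = 0.
    return (score_numeric, helpfulness_percent)

rows = [get_score_and_helpfulness(r) for r in [
    {'review/score': '5.0', 'review/helpfulness': '1/1'},
    {'review/score': '2.0', 'review/helpfulness': '0/0'},
]]
print(rows)  # [(5.0, 1.0), (2.0, 0.0)]
```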

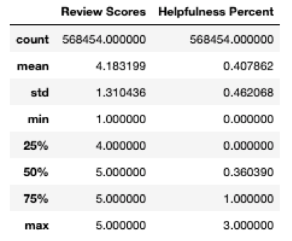

To make it easy to convert a Bag with the proper structure into a DataFrame, Dask Bags have a to_dataframe method. Now we’ll create a DataFrame holding the review score and helpfulness values.

Listing 9.24 Creating a DataFrame from a Bag

scores_and_helpfulness = reviews.map(get_score_and_helpfulness).to_dataframe(meta={'Review Scores': float, 'Helpfulness Percent': float})

Here we pass the to_dataframe method a single argument, meta, which specifies the name and datatype for each column. This is essentially the same meta argument that we saw many times with the drop-assign-rename pattern introduced in chapter 5. The argument accepts a dictionary where the key is the column name and the value is the datatype for the column; because each element of the Bag is a tuple, the order of the keys determines which tuple position each column name is assigned to. With the data in a DataFrame, everything you’ve learned about DataFrames can now be used to analyze and visualize the data! For example, calculating descriptive statistics works the same as before.

Listing 9.25 Calculating descriptive statistics

with ProgressBar():
    scores_and_helpfulness_stats = scores_and_helpfulness.describe().compute()
scores_and_helpfulness_stats

Listing 9.25 produces the output shown in figure 9.8.

Figure 9.8 The descriptive statistics of the Review Scores and Helpfulness Percent

The descriptive statistics of the review scores give us a little more insight, but generally tell us what we already knew: reviews are overwhelmingly positive. The helpfulness percentage, however, is a bit more interesting. The mean helpfulness score is only about 41%, indicating that more often than not, reviewers didn’t find reviews to be helpful. However, this is likely influenced by the high number of reviews that didn’t have any votes, to which get_score_and_helpfulness assigns a helpfulness of 0. This might indicate either that Amazon shoppers are generally apathetic to reviews of food products and therefore don’t go out of their way to say when a review was helpful (which may be the case since tastes are so variable), or that the typical Amazon shopper truly didn’t find these reviews very helpful. It might be interesting to compare these findings with reviews of non-food items to see if that makes a difference in engagement with reviews.
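To see how much unvoted reviews can drag down that mean, consider a toy set of helpfulness values computed with the same convention as listing 9.23, where a review with no votes is recorded as 0.0. The vote counts below are made up for illustration, and excluding unvoted reviews is shown only as an alternative analysis choice, not something the chapter’s code does.

```python
from statistics import mean

# (helpful_votes, total_votes) for hypothetical reviews
votes = [(3, 3), (1, 2), (0, 0), (0, 0), (4, 5)]

def helpfulness_percent(helpful, total):
    # Same convention as listing 9.23: no votes -> 0.0
    return helpful / total if total > 0 else 0.0

all_reviews = [helpfulness_percent(h, t) for h, t in votes]
voted_only = [helpfulness_percent(h, t) for h, t in votes if t > 0]

print(round(mean(all_reviews), 2))  # 0.46
print(round(mean(voted_only), 2))   # 0.77
```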

9.4 Using Bags for parallel text analysis with NLTK

As we’ve looked at how to transform and filter elements in Bags, something may have become apparent to you: if all the transformation functions are just plain old Python, we should be able to use any Python library that works with generic collections, and that’s precisely what makes Bags so powerful and versatile! In this section, we’ll walk through some typical tasks for preparing and analyzing text data using the popular text analysis library NLTK (Natural Language Toolkit). As motivation for this example, we’ll use the following scenario:

Using NLTK and Dask Bags, find the most commonly mentioned phrases in the text of both positive and negative reviews for Amazon products to see what reviewers frequently discuss in their reviews.

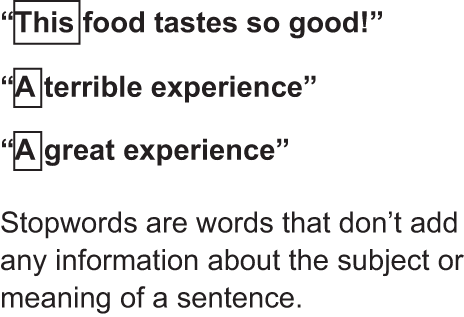

9.4.1 The basics of bigram analysis

To find out more about what the reviewers in this data set are writing about, we will perform a bigram analysis of the review text. Bigrams are pairs of adjacent words in text. Bigrams tend to be more useful than simply counting the frequency of individual words because they typically add more context. For example, we might expect positive reviews to contain the word “good” very frequently, but that doesn’t really help us understand what is good. The bigram “good flavor” or “good packaging” tells us a lot more about what the reviewers find positive about the products. Another thing we need to do to better understand the true subject or sentiment of the reviews is to remove words that don’t help convey that information. Many words in the English language add structure to a sentence but don’t convey information. For example, articles like “the,” “a,” and “an” do not provide any context or information. Because these words are so common (and necessary for proper sentence formation), we’re just as likely to find them in positive reviews as in negative reviews. Since they don’t add any information, we will remove them. These are known as stopwords, and one of the most important data preparation tasks when doing text analysis is detecting and removing stopwords. Figure 9.9 shows examples of a few common stopwords.

Figure 9.9 Example of stopwords

To find the most common phrases, we’ll follow these steps:

- Extract the text data.
- Remove stopwords.
- Create bigrams.
- Count the frequency of the bigrams.
- Find the top 10 bigrams.
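Before scaling up with Dask, the five steps can be sketched on two toy reviews using only the standard library. This is an illustration under simplifying assumptions: tokenizing is done with re.findall and the same \w+ pattern used later in listing 9.26, the bigrams come from zip over adjacent tokens, and the tiny stopword set is hand-picked rather than NLTK’s English list.

```python
import re
from collections import Counter
from itertools import chain

reviews = [
    {'review/text': 'Good flavor and good packaging.'},
    {'review/text': 'The flavor is good, good flavor overall.'},
]
stopwords = {'and', 'the', 'is'}  # hand-picked stand-in for NLTK's list

# 1. Extract the text data (lowercased)
texts = [r['review/text'].lower() for r in reviews]
# 2. Tokenize and remove stopwords
tokens = [[w for w in re.findall(r'\w+', t) if w not in stopwords] for t in texts]
# 3. Create bigrams (adjacent remaining tokens), one set per review
bigrams = [set(zip(toks, toks[1:])) for toks in tokens]
# 4. Count how many reviews each bigram appears in
counts = Counter(chain.from_iterable(bigrams))
# 5. Find the top bigrams (ties may come back in any order)
print(counts.most_common(2))
```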

9.4.2 Extracting tokens and filtering stopwords

Before we jump in, make sure you have NLTK set up properly in your Python environment. See the appendix for instructions on installing and configuring NLTK. With NLTK installed, we need to import the relevant modules into our current workspace; then we’ll create a few functions to help us along with the data prep.

Listing 9.26 Extract and filter functions

from nltk.corpus import stopwords
from nltk.tokenize import RegexpTokenizer
from functools import partial

tokenizer = RegexpTokenizer(r'\w+') #1

def extract_reviews(element): #2
    return element['review/text'].lower()

def filter_stopword(word, stopwords): #3
    return word not in stopwords

def filter_stopwords(tokens, stopwords): #4
    return list(filter(partial(filter_stopword, stopwords=stopwords), tokens))

stopword_set = set(stopwords.words('english')) #5

#1 Create a tokenizer using a regex expression that will extract only words; this means punctuation, numbers, and so forth will be discarded.
#2 This function takes an element from the Bag, gets the review text, and changes all letters to lowercase; this is important because Python is case-sensitive.
#3 This function returns True if the word is not in the list of stopwords.
#4 This function uses the filter function above it to check every word in a list of words (tokens) and discard the word if it is a stopword.
#5 Get the list of English stopwords from NLTK and cast it from a list to a set; using a set is faster than a list in this comparison.
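If functools.partial is new to you, here it is in isolation: it returns a new callable with the stopwords argument already filled in, so filter only has to supply each word. The stopword set is a tiny hand-picked example rather than NLTK’s list.

```python
from functools import partial

def filter_stopword(word, stopwords):
    return word not in stopwords

small_stopwords = {'the', 'a', 'an'}
# Freeze the stopwords argument; the resulting callable takes just `word`
keep_word = partial(filter_stopword, stopwords=small_stopwords)

tokens = ['the', 'dog', 'ate', 'a', 'treat']
print(list(filter(keep_word, tokens)))  # ['dog', 'ate', 'treat']
```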

In listing 9.26, we’re defining a few functions to help grab the review text from the original Bag and filter out the stopwords. One thing to point out is the use of the partial function inside the filter_stopwords function. Using partial allows us to freeze the value of the stopwords argument while keeping the value of the word argument dynamic. Since we want to compare every word to the same list of stopwords, the value of the stopwords argument should remain static. With the data preparation functions defined, we’ll now map over the Bag of reviews to extract and clean the review text.

Listing 9.27 Extracting, tokenizing, and cleaning the review text

review_text = reviews.map(extract_reviews) #1
review_text_tokens = review_text.map(tokenizer.tokenize) #2
review_text_clean = review_text_tokens.map(partial(filter_stopwords, stopwords=stopword_set)) #3
review_text_clean.take(1)

# Produces the following output:
'''
(['bought', 'several', 'vitality', 'canned', 'dog', 'food', 'products', 'found', 'good', 'quality', 'product', 'looks', 'like', 'stew', 'processed', 'meat', 'smells', 'better', 'labrador', 'finicky', 'appreciates', 'product', 'better'],)
'''

#1 Transforms the Bag of review objects to a Bag of review strings
#2 Transforms the Bag of review strings to a Bag of lists of tokens
#3 Removes the stopwords from each list of tokens in the Bag

The code in listing 9.27 should be pretty straightforward. We simply use the map method to apply the extracting, tokenizing, and filtering functions to the Bag of reviews. As you can see, we’re left with a Bag of lists, and each list contains the non-stopword tokens found in the text of each review, in their original order and including repeats (“product” and “better” each appear twice in the output). If we take one element from this new Bag, we get back a list of all words in the first review (except for stopwords, that is). This is important to note: currently our Bag is a nested collection. We’ll come back to that momentarily. However, now that we have the cleaned list of words for each review, we’ll transform our Bag of lists of tokens into a Bag of sets of bigrams.

Listing 9.28 Creating bigrams

import nltk

def make_bigrams(tokens):
    return set(nltk.bigrams(tokens))

review_bigrams = review_text_clean.map(make_bigrams)
review_bigrams.take(2)

# Produces the following (abbreviated) output:

'''

({('appreciates', 'product'),

('better', 'labrador'),

('bought', 'several'),

('canned', 'dog'),

...

('vitality', 'canned')},

{('actually', 'small'),

('arrived', 'labeled'),

...

('unsalted', 'sure'),

('vendor', 'intended')})

'''

In listing 9.28, we simply have another function to map over the previously created Bag. Again, this is pretty exciting because this process is completely parallelized using Dask. This means we could use the exact same code to analyze billions of reviews, given enough compute resources! As you can see, each element is now a set of bigrams. Because make_bigrams returns a set, a bigram that occurs several times in one review is recorded only once for that review, so the counts we compute later measure how many reviews contain each bigram. However, we still have the nested data structure: taking two elements results in two sets of bigrams. We’re going to want to find the most frequent bigrams across the entire Bag, so we need to get rid of the nested structure. This is called flattening a Bag. Flattening removes one level of nesting; for example, a list of two lists containing 5 elements each becomes a single list containing all 10 elements.

Listing 9.29 Flattening the Bag of bigrams

all_bigrams = review_bigrams.flatten()

all_bigrams.take(10)

# Produces the following output:

'''

(('product', 'better'),

('finicky', 'appreciates'),

('meat', 'smells'),

('looks', 'like'),

('good', 'quality'),

('vitality', 'canned'),

('like', 'stew'),

('processed', 'meat'),

('labrador', 'finicky'),

('several', 'vitality'))

'''
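Two details of the bigram and flatten steps are easy to miss: the per-review set drops repeated bigrams within a review, and flattening concatenates the per-review collections the way itertools.chain.from_iterable does for a list of lists. The plain-Python sketch below shows both. make_bigrams_sketch is a hypothetical stand-in that uses zip over adjacent tokens in place of nltk.bigrams (both yield pairs of adjacent items).

```python
from itertools import chain

def make_bigrams_sketch(tokens):
    # Adjacent pairs, deduplicated per review (like set(nltk.bigrams(tokens)))
    return set(zip(tokens, tokens[1:]))

review_tokens = [
    ['good', 'good', 'good'],       # ('good', 'good') occurs twice in this review
    ['really', 'good', 'coffee'],
]
per_review = [make_bigrams_sketch(t) for t in review_tokens]
print(per_review[0])  # {('good', 'good')}

# Flattening removes one level of nesting
flat = list(chain.from_iterable(per_review))
print(len(flat))  # 3
```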

After flattening the Bag in listing 9.29, we’re now left with a Bag that contains all bigrams without any nesting by review. It’s no longer possible to figure out which bigram came from which review, but that’s OK because that’s not important for our analysis. What we want to do is fold this Bag using the bigram as the key and count the number of times each bigram appears in the data set. We can reuse the count and combine functions we defined earlier in the chapter.

Listing 9.30 Counting the bigrams and finding the top 10 most common bigrams

with ProgressBar():
    top10_bigrams = all_bigrams.foldby(lambda x: x, count, 0, combine, 0).topk(10, key=lambda x: x[1]).compute()
top10_bigrams

# Produces the following output:

'''

[########################################] | 100% Completed | 11min 7.6s

[(('br', 'br'), 103258),

(('amazon', 'com'), 15142),

(('highly', 'recommend'), 14017),

(('taste', 'like'), 13251),

(('gluten', 'free'), 11641),

(('grocery', 'store'), 11627),

(('k', 'cups'), 11102),

(('much', 'better'), 10681),

(('http', 'www'), 10575),

(('www', 'amazon'), 10517)]

'''

The foldby call in listing 9.30 looks exactly like the one you saw earlier in the chapter. However, we’ve chained a new method to it, topk, which returns the top k elements of the Bag when it is sorted in descending order. In listing 9.30, we get the top 10 elements, as set by the first parameter of the method. The second parameter, key, defines what the Bag should be sorted by. The fold returns a Bag of tuples where the first element is the key and the second element is the frequency. We want to find the 10 most frequent bigrams, so the Bag should be sorted by the second element of each tuple. Therefore, the key function simply returns the frequency element of each tuple. Because the key function is so simple, we’ve written it as a lambda expression. Taking a look at the most common bigrams, it looks like we have some unhelpful entries. For example, “amazon com” is the second most frequent bigram. This makes sense, since the reviews are from Amazon. It also looks like some HTML has leaked into the reviews, because “br br” is the most common bigram. This refers to the HTML tag <br>, which inserts a line break. These words aren’t helpful or descriptive at all, so we should add them to our list of stopwords and rerun the bigram analysis.

Listing 9.31 Adding more stopwords and rerunning the analysis

more_stopwords = {'br', 'amazon', 'com', 'http', 'www', 'href', 'gp'}

all_stopwords = stopword_set.union(more_stopwords) #1

filtered_bigrams = review_text_tokens.map(partial(filter_stopwords, stopwords=all_stopwords)).map(make_bigrams).flatten()

with ProgressBar():
    top10_bigrams = filtered_bigrams.foldby(lambda x: x, count, 0, combine, 0).topk(10, key=lambda x: x[1]).compute()
top10_bigrams

# Produces the following output:

'''

[########################################] | 100% Completed | 11min 19.9s

[(('highly', 'recommend'), 14024),

(('taste', 'like'), 13343),

(('gluten', 'free'), 11641),

(('grocery', 'store'), 11630),

(('k', 'cups'), 11102),

(('much', 'better'), 10695),

(('tastes', 'like'), 10471),

(('great', 'product'), 9192),

(('cup', 'coffee'), 8988),

(('really', 'good'), 8897)]

'''

#1 Create a new list of stopwords that is a union of the old stopword set and the new stopwords we want to add.
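To see how “br br” ends up among the bigrams, consider a made-up review containing HTML line breaks. The sketch below stands in for the tokenizer from listing 9.26 with re.findall and the same \w+ pattern (an assumption for illustration: a RegexpTokenizer built this way matches the same tokens), and uses zip over adjacent tokens in place of nltk.bigrams. It also shows a side effect worth keeping in mind: because stopwords are removed before bigrams are formed, words that were not adjacent in the original text can end up paired.

```python
import re

def bigrams_after_stopwords(text, stopwords):
    # Tokenize with \w+, drop stopwords, then pair up the remaining neighbors
    tokens = [w for w in re.findall(r'\w+', text.lower()) if w not in stopwords]
    return list(zip(tokens, tokens[1:]))

text = 'Great snack!<br /><br />Highly recommend.'

print(bigrams_after_stopwords(text, set()))
# [('great', 'snack'), ('snack', 'br'), ('br', 'br'), ('br', 'highly'), ('highly', 'recommend')]

print(bigrams_after_stopwords(text, {'br'}))
# [('great', 'snack'), ('snack', 'highly'), ('highly', 'recommend')]
```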

9.4.3 Analyzing the bigrams

Now that we’ve removed the additional stopwords, we can see some clear topics. For example, “k cups” and “cup coffee” were mentioned a large number of times. This is probably because many of the reviews are for coffee pods for Keurig coffee machines. The most common bigram is “highly recommend,” which also makes sense because a lot of the reviews were positive. We could continue iterating over our list of stopwords to see what new patterns emerge (perhaps we could remove words such as “like” and “store” because they don’t add much information), but it would also be interesting to see what the list of bigrams looks like for negative reviews. To close out the chapter, we’ll filter our original set of reviews to those that got only one or two stars, and then see which bigrams are the most common.

Listing 9.32 Finding the most common bigrams for negative reviews

negative_review_text = reviews.filter(lambda review: float(review['review/score']) < 3).map(extract_reviews) #1
negative_review_text_tokens = negative_review_text.map(tokenizer.tokenize) #2
negative_review_text_clean = negative_review_text_tokens.map(partial(filter_stopwords, stopwords=all_stopwords))
negative_review_bigrams = negative_review_text_clean.map(make_bigrams)
negative_bigrams = negative_review_bigrams.flatten()

with ProgressBar():
    top10_negative_bigrams = negative_bigrams.foldby(lambda x: x, count, 0, combine, 0).topk(10, key=lambda x: x[1]).compute()
top10_negative_bigrams

# Produces the following output:

'''

[########################################] | 100% Completed | 2min 25.9s

[(('taste', 'like'), 3352),

(('tastes', 'like'), 2858),

(('waste', 'money'), 2262),

(('k', 'cups'), 1892),

(('much', 'better'), 1659),

(('thought', 'would'), 1604),

(('tasted', 'like'), 1515),

(('grocery', 'store'), 1489),

(('would', 'recommend'), 1445),

(('taste', 'good'), 1408)]

'''

#1 Use a filter expression to find all reviews where the review score was less than 3.

#2 Since we’ve started with a new set of reviews, we have to tokenize it.

The list of bigrams we get from listing 9.32 shares some similarities with the bigrams from all reviews, but it also has some distinct bigrams that show frustration or disappointment with the product (“thought would,” “waste money,” and so forth). Interestingly, “taste good” is a bigram for the negative reviews. This might be because reviewers would say something like “I thought it would taste good” or “It didn’t taste good.” This shows that the data set needs a bit more work (perhaps more stopwords), but now you have all the tools you need to do it! We’ll come back to this data set in the next chapter, when we’ll use Dask’s machine learning pipelines to build a sentiment classifier that will try to predict whether a review is positive or negative based on its text. In the meantime, hopefully you’ve come to appreciate how powerful and flexible Dask Bags are for unstructured data analysis.

Summary

- Unstructured data, such as text, doesn’t lend itself well to being analyzed using DataFrames. Dask Bags are a more flexible solution and are useful for manipulating unstructured data.

- Bags are unordered and do not have any concept of an index (unlike DataFrames). To access elements of a Bag, the take method can be used.

- The map method is used to transform each element of a Bag using a user-defined function.

- The foldby method groups the elements of a Bag by a key and aggregates each group, which makes it useful for all sorts of aggregate-type functions.

- When analyzing text data, tokenizing the text and removing stopwords helps extract the underlying meaning of the text.

- Bigrams are used to extract phrases from text that may have more meaning than their constituent words (for example, “not good” versus “not” and “good” in isolation).